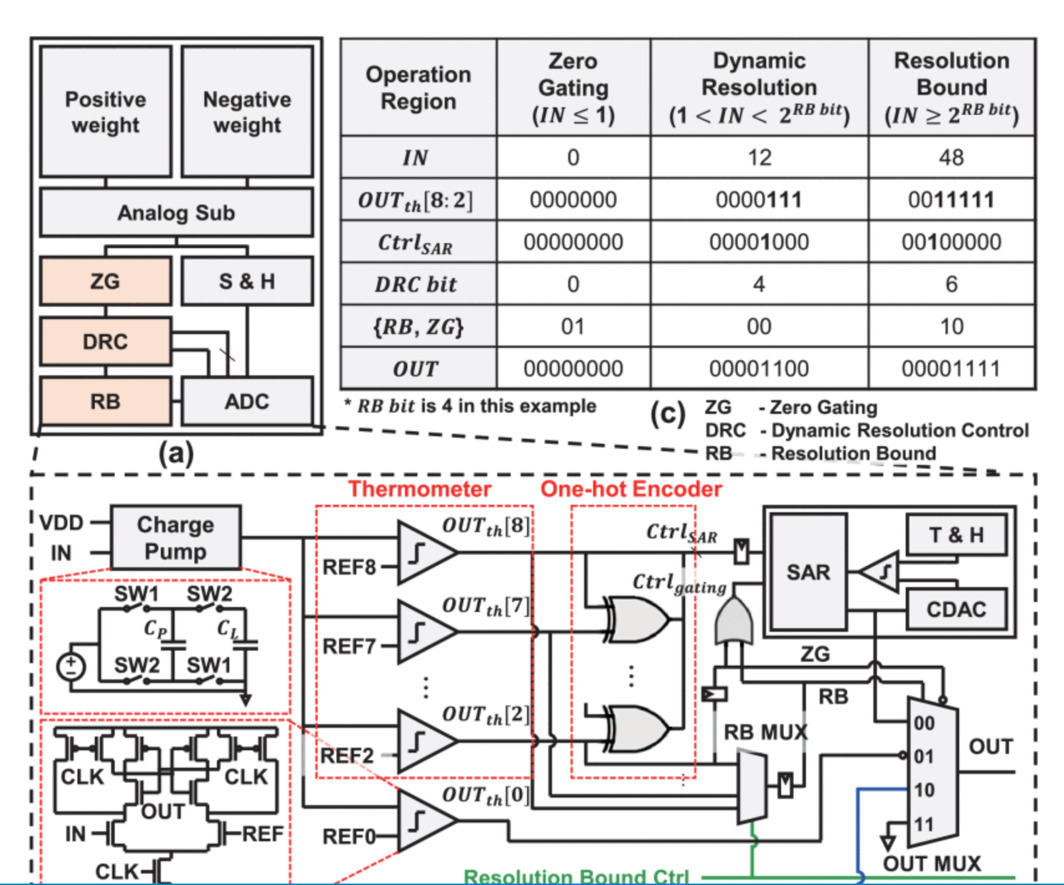

Title: Optimizing ADC Utilization through Value-Aware Bypass in ReRAM-based DNN Accelerator

Author: Hancheon Yun, Hyein Shin, Myeonggu Kang, Lee-Sup Kim

Conference : IEEE/ACM Design Automation Conference (DAC) 2021

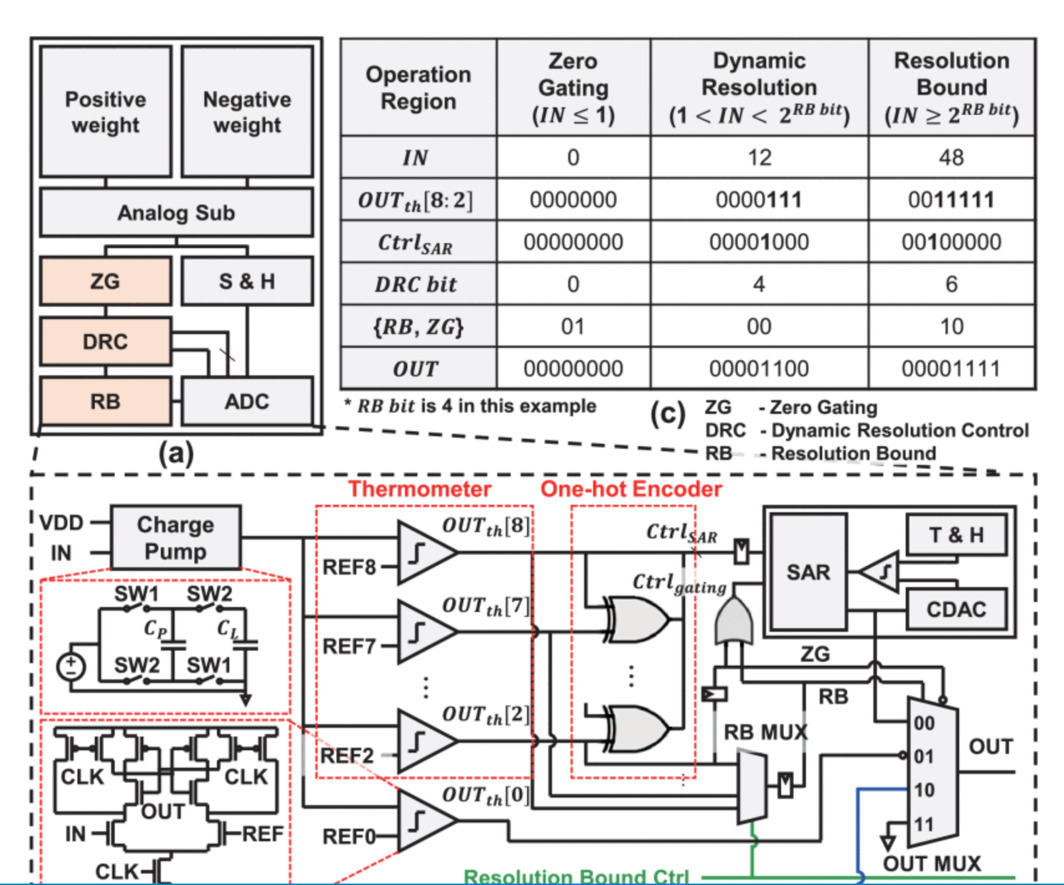

Abstract: ReRAM-based Processing-In-Memory (PIM) has been widely studied as a promising approach for Deep Neural Network (DNN) accelerators because of its energy-efficient analog operations. However, the domain conversion process for the analog operation requires frequent accesses to the power-hungry Analog-to-Digital Converter (ADC), hindering the overall energy efficiency. Although previous research has attempted to address this problem, the ADC cost has not been sufficiently reduced because those approaches are unsuitable for ReRAM. In this paper, we propose mixed-signal-based value-aware bypass techniques to optimize the ADC utilization of the ReRAM-based PIM. By utilizing the property of bit-line (BL) level value distribution, the proposed work bypasses redundant ADC operations depending on the magnitude of the value. Evaluation results show that our techniques successfully reduce ADC accesses and improve overall energy efficiency by 2.48× to 3.07× compared to ISAAC.

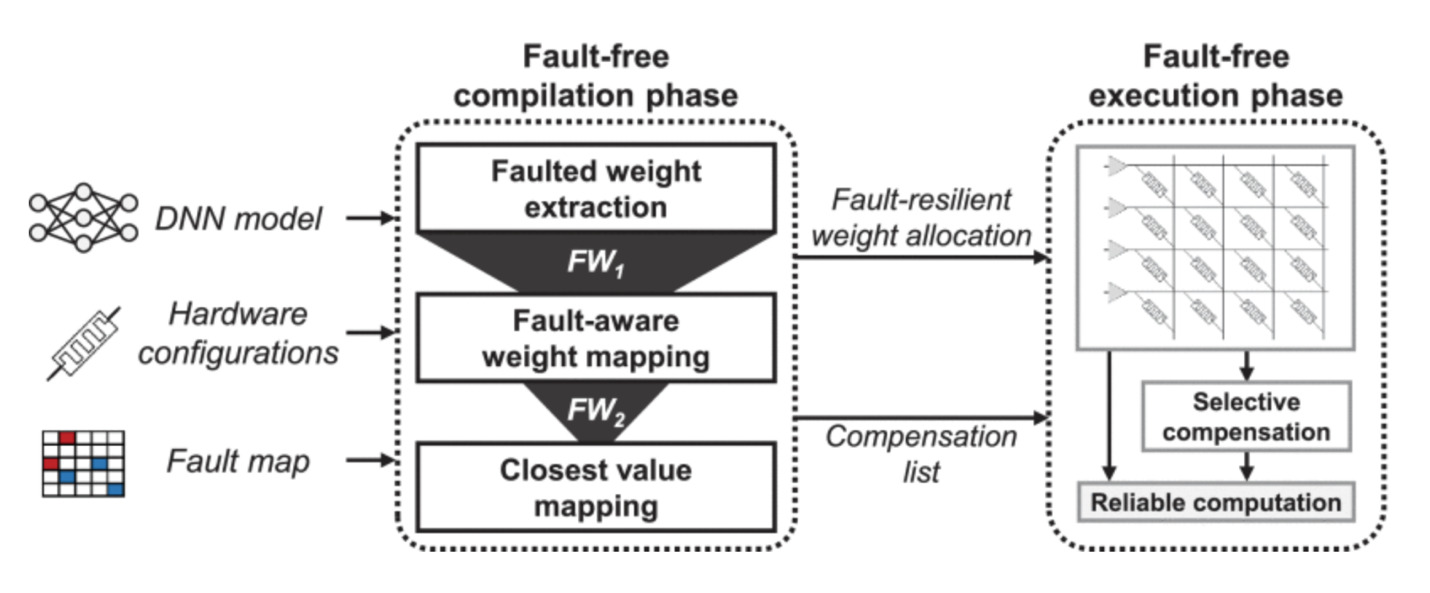

Title : Fault-free: A Fault-resilient Deep Neural Network Accelerator based on Realistic ReRAM Devices

Author: Hyein Shin, Myeonggu Kang, Lee-Sup Kim

Conference: IEEE/ACM Design Automation Conference (DAC) 2021

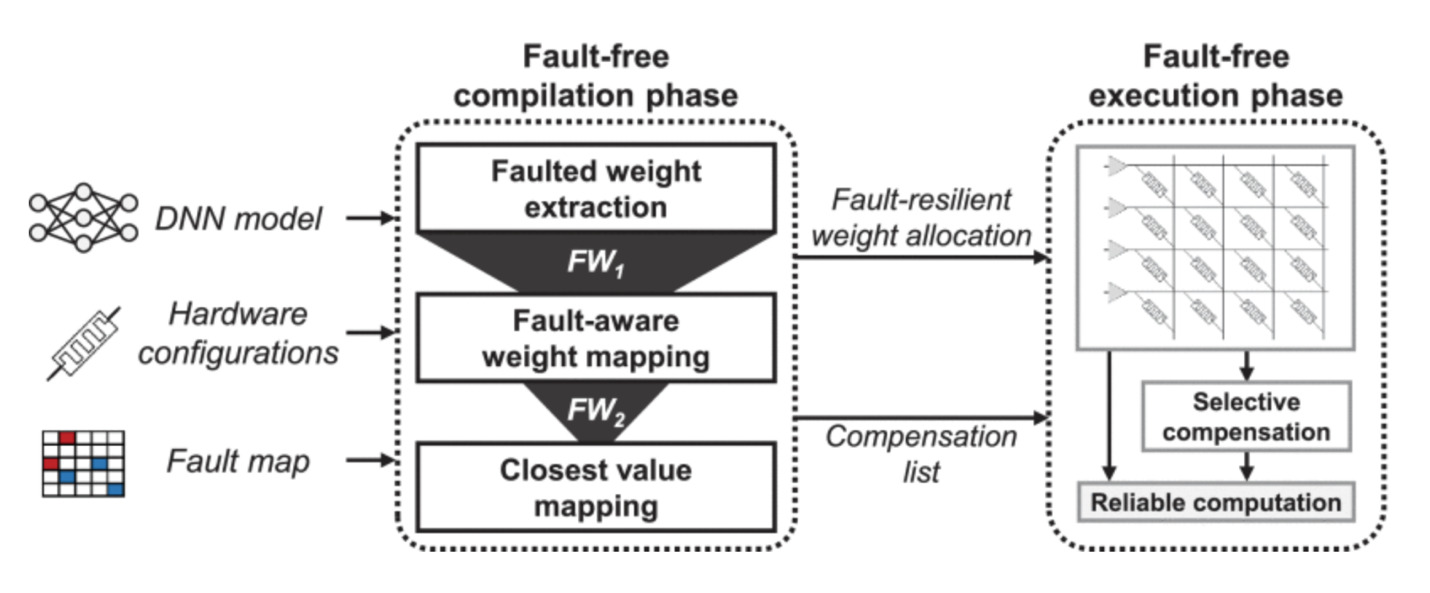

Abstract: Energy-efficient Resistive RAM (ReRAM) based deep neural network (DNN) accelerators suffer from a severe Stuck-At-Fault (SAF) problem that drastically degrades the inference accuracy. The SAF problem gets even worse in realistic ReRAM devices with low cell resolution. To address the issue, we propose a fault-resilient DNN accelerator based on realistic ReRAM devices. We first analyze the SAF problem in a realistic ReRAM device and then propose a 3-stage offline fault-resilient compilation and lightweight online compensation. The proposed work enables the reliable execution of DNNs with only 5% area and 0.8% energy overhead over the ideal ReRAM-based DNN accelerator.

Title : A Convergence Monitoring Method for DNN Training of On-Device Task Adaptation

Author : Seungkyu Choi, Jaekang Shin, Lee-Sup Kim

Conference : IEEE/ACM International Conference On Computer Aided Design 2021

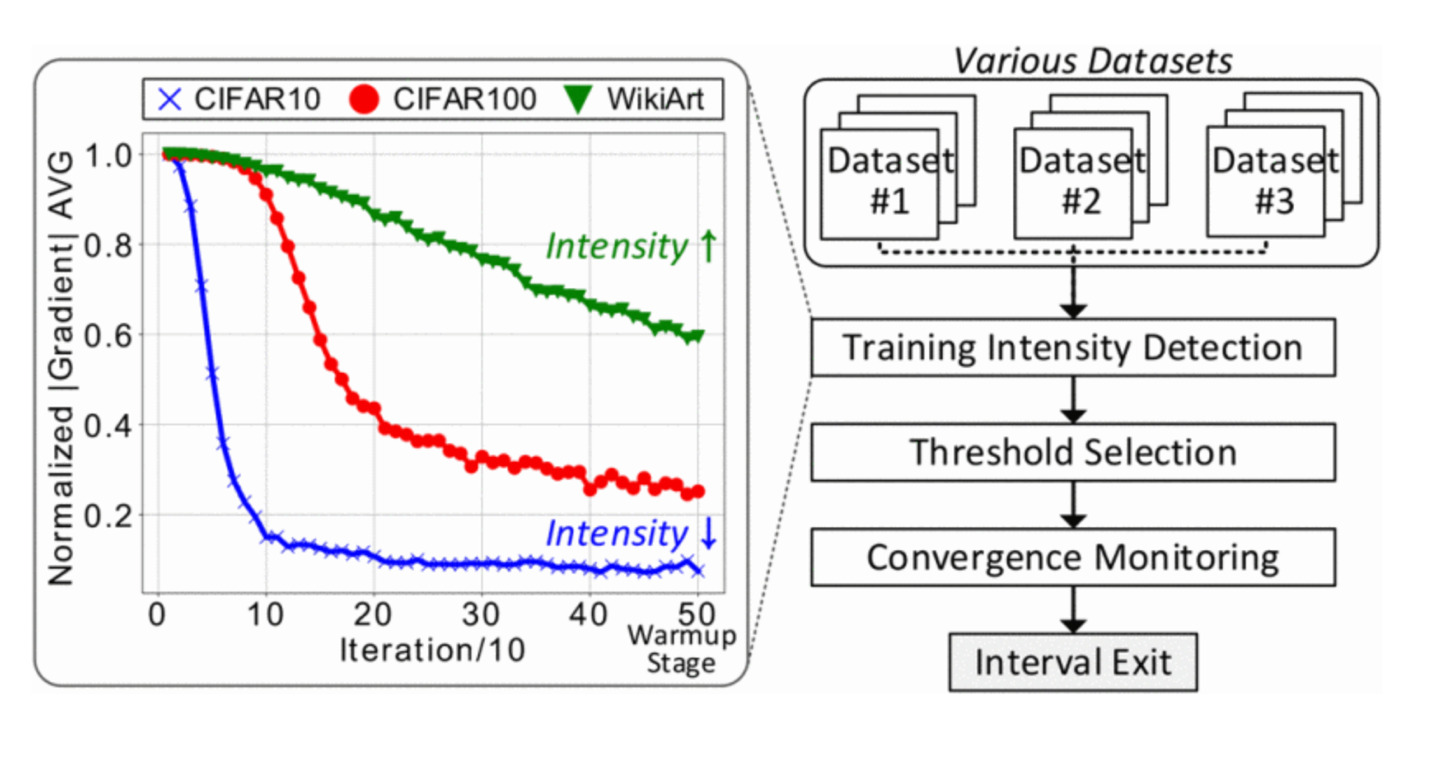

Abstract: DNN training has become a major workload in on-device situations to execute various vision tasks with high performance. Accordingly, training architectures accompanying approximate computing have been steadily studied for efficient acceleration. However, most of these works evaluate their schemes on from-scratch training, where inaccurate computing is not tolerable. Moreover, previous solutions are mostly provided as extended versions of inference works, e.g., sparsity/pruning, quantization, dataflow, etc. Therefore, issues in practical workloads that hinder the total speed of the DNN training process still remain unresolved. In this work, targeting the transfer learning-based task adaptation of the practical on-device training workload, we propose a convergence monitoring method to resolve the redundancy in massive training iterations. By utilizing the network’s output value, we detect the training intensity of incoming tasks and monitor the prediction convergence with the given intensity to provide early exits in the scheduled training iterations. As a result, an accurate approximation over various tasks is performed with minimal overhead. Unlike sparsity-driven approximation, our method enables runtime optimization and is easily applicable to off-the-shelf accelerators, achieving significant speedup. Evaluation results on various datasets show a geomean of 2.2× speedup over the baseline and 1.8× speedup over the latest convergence-related training method.

Title : Deferred Dropout: An Algorithm-Hardware Co-Design DNN Training Method Provisioning Consistent High Activation Sparsity

Author: Kangkyu Park, Yunki Han, Lee-Sup Kim

Conference : IEEE/ACM International Conference On Computer Aided Design 2021

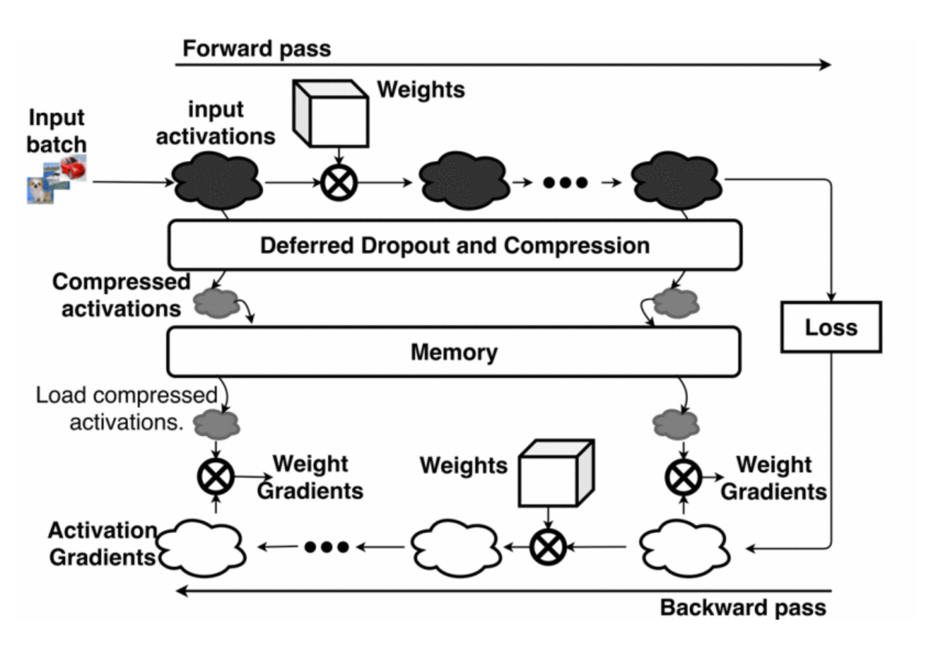

Abstract: This paper proposes a deep neural network training method that provisions consistent high activation sparsity and the ability to adjust the sparsity. To improve training performance, prior work reduces the memory footprint for training by exploiting input activation sparsity, which is observed due to the ReLU function. However, the previous approach relies solely on the inherent sparsity caused by the function, and thus the footprint reduction is not guaranteed. In particular, models for natural language processing tasks like BERT do not use the function, so the models have almost zero activation sparsity and the previous approach loses its efficiency. In this paper, a new training method, Deferred Dropout, and its hardware architecture are proposed. With the proposed method, input activations are dropped out after the conventional forward-pass computation. In contrast to conventional dropout, where activations are zeroed before forward-pass computation, the dropping is deferred until the completion of the computation. Then, the sparsified activations are compressed and stashed in memory. This approach is based on our observation that networks preserve training quality even if only a few high-magnitude activations are used in the backward pass. The hardware architecture enables designers to exploit the tradeoff between training quality and activation sparsity. Evaluation results demonstrate that the proposed method achieves 1.21× to 3.60× memory footprint reduction and 1.06× to 1.43× speedup on the TPUv3 architecture, compared to the prior work.
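The stash step described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the top-k selection rule, the `keep_ratio` parameter, and the (index, value) storage format are assumptions made for illustration, and the forward pass is assumed to have already consumed the full activations.

```python
def deferred_dropout(activations, keep_ratio):
    """Select the largest-magnitude activations to stash for the backward pass.

    Hypothetical helper: the forward pass is assumed to have already used the
    full `activations`; only the stashed copy is sparsified.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    k = max(1, round(len(activations) * keep_ratio))
    # Rank positions by magnitude, largest first.
    ranked = sorted(range(len(activations)), key=lambda i: -abs(activations[i]))
    kept = sorted(ranked[:k])
    # Compressed form (assumed): store only (index, value) pairs.
    return [(i, activations[i]) for i in kept]


def decompress(stash, length):
    """Rebuild a dense vector for the backward pass; dropped entries are zero."""
    dense = [0.0] * length
    for i, v in stash:
        dense[i] = v
    return dense


acts = [0.1, -2.0, 0.05, 1.5, -0.3, 0.0, 0.7, -0.02]
stash = deferred_dropout(acts, keep_ratio=0.25)
restored = decompress(stash, len(acts))
```

In this sketch, `keep_ratio` stands in for the adjustable sparsity the abstract mentions: a smaller value stashes fewer activations and trades training quality for memory footprint.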

Title : A Framework for Area-efficient Multi-task BERT Execution on ReRAM-based Accelerators

Author : Myeonggu Kang, Hyein Shin, Jaekang Shin, Lee-Sup Kim

Conference : IEEE/ACM International Conference On Computer Aided Design 2021

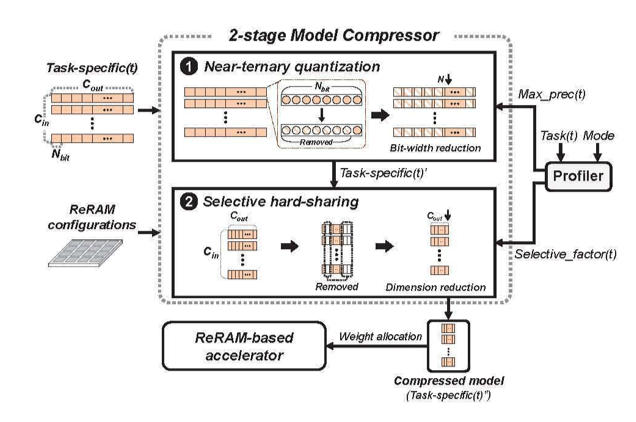

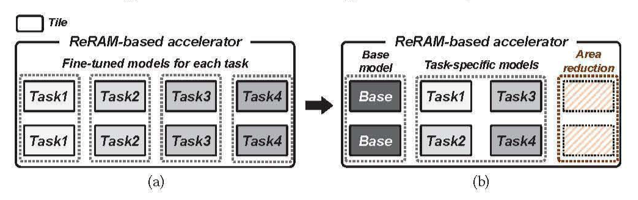

Abstract: With its superior algorithmic performance, BERT has become the de-facto standard model for various NLP tasks. Accordingly, multiple BERT models have been adopted on a single system, which is also called multi-task BERT. Although the ReRAM-based accelerator shows sufficient potential to execute a single BERT model by adopting in-memory computation, processing multi-task BERT on the ReRAM-based accelerator greatly increases the overall area due to the multiple fine-tuned models. In this paper, we propose a framework for area-efficient multi-task BERT execution on the ReRAM-based accelerator. First, we decompose the fine-tuned model of each task by utilizing the base model. After that, we propose a two-stage weight compressor, which shrinks the decomposed models by analyzing the properties of the ReRAM-based accelerator. We also present a profiler to generate hyper-parameters for the proposed compressor. By sharing the base model and compressing the decomposed models, the proposed framework successfully reduces the total area of the ReRAM-based accelerator without an additional training procedure. It reduces the area to 0.26× that of the baseline while maintaining the algorithmic performance.
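The idea of sharing the base model and storing only a per-task difference can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's two-stage compressor: the element-wise difference, the magnitude threshold, and the function names `decompose`, `compress`, and `reconstruct` are all hypothetical.

```python
def decompose(base, finetuned):
    """Per-task delta relative to the shared base weights (assumed element-wise)."""
    return [f - b for b, f in zip(base, finetuned)]


def compress(delta, threshold):
    """Stand-in compressor (assumption): keep only deltas at or above a threshold."""
    return {i: d for i, d in enumerate(delta) if abs(d) >= threshold}


def reconstruct(base, sparse_delta):
    """Approximate task weights from the shared base plus the sparse delta."""
    return [b + sparse_delta.get(i, 0.0) for i, b in enumerate(base)]


base = [0.5, -1.0, 0.25, 2.0]
task_a = [0.5, -0.5, 0.375, 2.0]      # hypothetical fine-tuned weights

delta_a = decompose(base, task_a)     # [0.0, 0.5, 0.125, 0.0]
sparse_a = compress(delta_a, threshold=0.25)
approx_a = reconstruct(base, sparse_a)
```

Only `base` is stored once; each additional task contributes just its sparse delta, which is where the area saving would come from in this simplified view.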

Title : Energy-Efficient CNN Personalized Training by Adaptive Data Reformation

Author: Youngbeom Jung, Hyeonuk Kim, Seungkyu Choi, Jaekang Shin, Lee-Sup Kim

Journal : IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems

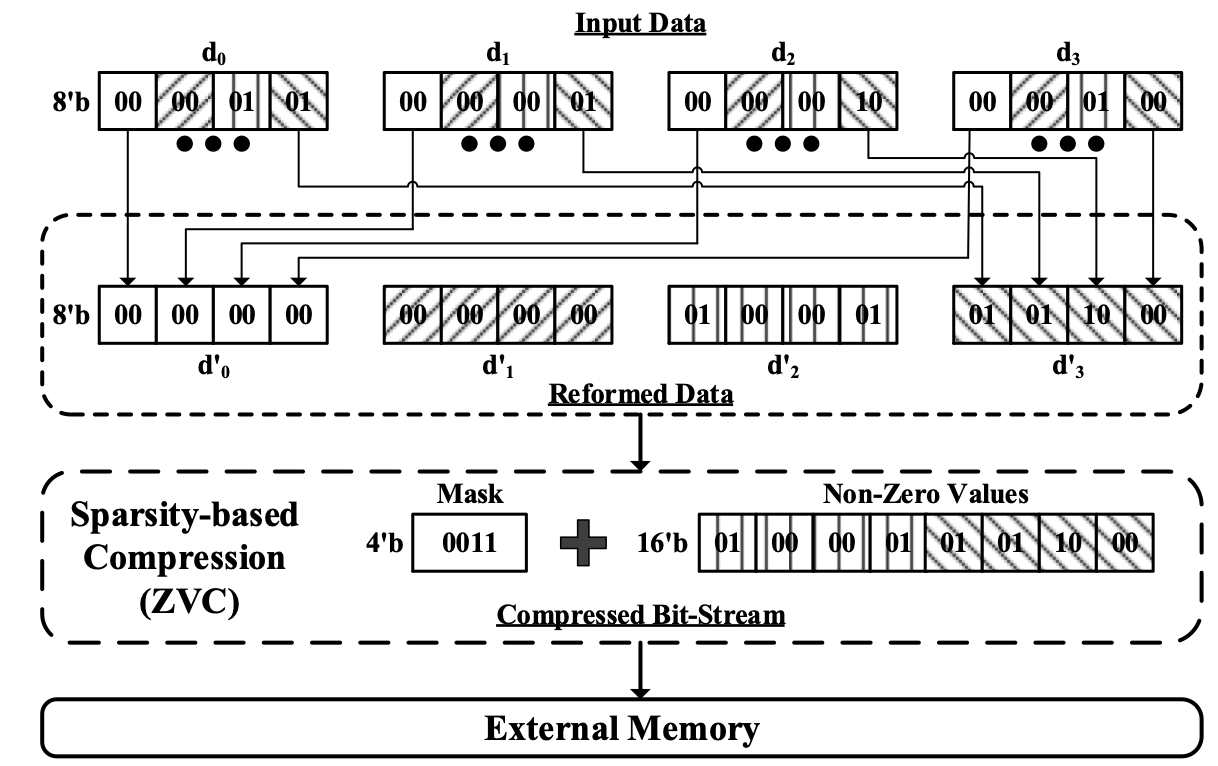

Abstract: To adopt deep neural networks in resource-constrained edge devices, various energy- and memory-efficient embedded accelerators have been proposed. Although most off-the-shelf networks are well-trained with vast amounts of data, unexplored users’ data or accelerator constraints can lead to unexpected accuracy loss. Therefore, a network adaptation suitable for each user and device is essential to make a high-confidence prediction in a given environment. We propose simple but efficient data reformation methods that can effectively reduce the communication cost with off-chip memory during the adaptation. Our proposal utilizes the data’s zero-centered distribution and spatial correlation to concentrate the sporadically spread bit-level zeros into units of values. Consequently, we reduce communication volume by up to 55.6% per task with an area overhead of 0.79% during the personalization training.

Title : A Framework for Accelerating Transformer-based Language Model on ReRAM-based Architecture

Author: Myeonggu Kang, Hyein Shin, Lee-Sup Kim

Journal : IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems

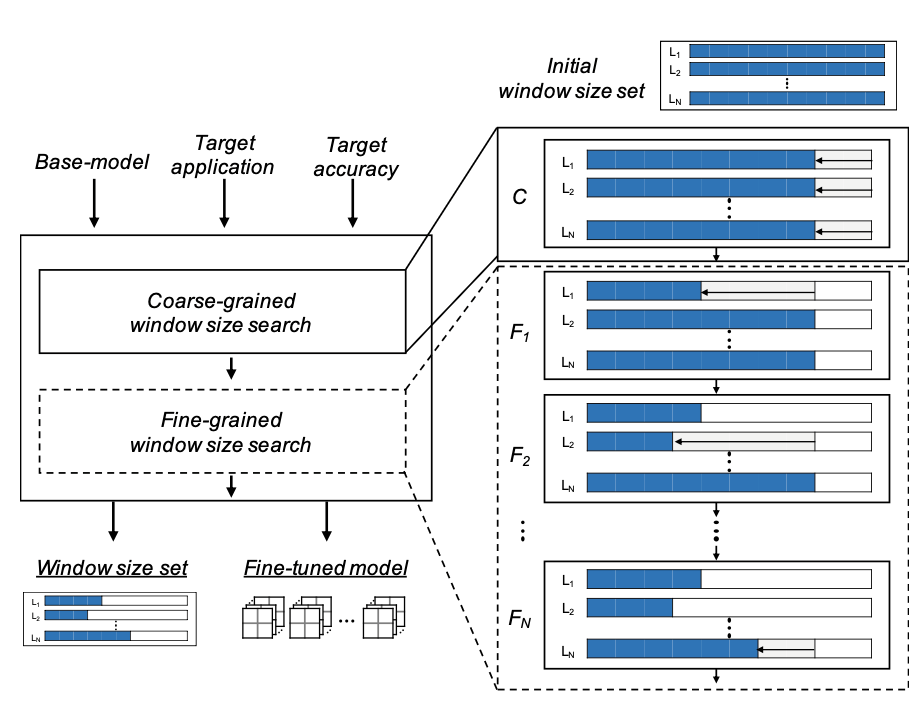

Abstract: Transformer-based language models have become the de-facto standard model for various NLP applications given their superior algorithmic performance. Processing a transformer-based language model on a conventional accelerator induces the memory wall problem, and the ReRAM-based accelerator is a promising solution to this problem. However, due to the characteristics of the self-attention mechanism and the ReRAM-based accelerator, a pipeline hazard arises when processing the transformer-based language model on the ReRAM-based accelerator. This hazard issue greatly increases the overall execution time. In this paper, we propose a framework to resolve the hazard issue. First, we propose the concept of window self-attention to reduce the attention computation scope by analyzing the properties of the self-attention mechanism. After that, we present a window-size search algorithm, which finds an optimal set of window sizes according to the target application and algorithmic performance. We also suggest a hardware design that exploits the advantages of the proposed algorithm optimization on the general ReRAM-based accelerator. The proposed work successfully alleviates the hazard issue while maintaining the algorithmic performance, leading to a 5.8× speedup over the provisioned baseline. It also delivers up to 39.2× speedup and 643.2× higher energy efficiency over a GPU.
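To make the notion of a reduced attention scope concrete, here is a minimal Python sketch of windowed self-attention. The symmetric window (the same number of positions on each side of the query) and the function name `window_attention` are assumptions; the abstract does not specify the paper's exact window definition or how the window sizes are searched.

```python
import math


def window_attention(q, k, v, window):
    """Scaled dot-product attention restricted to a local window.

    q, k, v: lists of equal-length float vectors, one per token.
    window: number of positions visible on each side of the query (assumed symmetric).
    """
    n = len(q)
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        # Scores are computed only for keys inside the window.
        scores = [scale * sum(a * b for a, b in zip(q[i], k[j])) for j in range(lo, hi)]
        m = max(scores)                       # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([
            sum(e / total * v[j][d] for e, j in zip(exps, range(lo, hi)))
            for d in range(len(v[0]))
        ])
    return out


q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

local = window_attention(q, k, v, window=1)
full = window_attention(q, k, v, window=len(q))   # window covers every token
```

With `window=0` each token attends only to itself and the output equals `v`; once the window covers the whole sequence the result matches ordinary full attention, so the window size controls how much attention computation is skipped.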

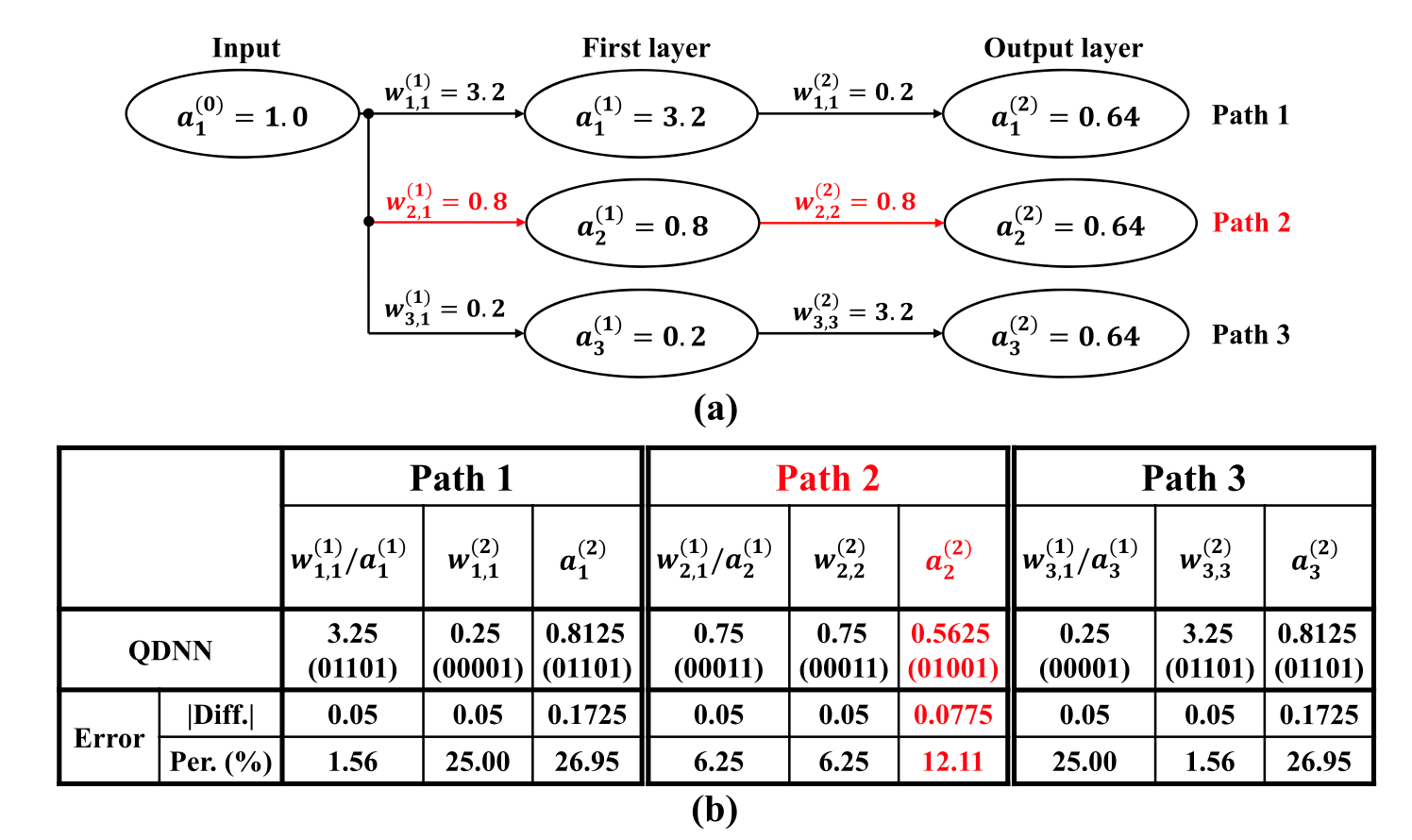

Title : Quantization-Error-Robust Deep Neural Network for Embedded Accelerators

Author: Youngbeom Jung, Hyeonuk Kim, Yeongjae Choi, Lee-Sup Kim

Journal : IEEE Transactions on Circuits and Systems II: Express Briefs

Abstract: Quantization with low precision has become an essential technique for adopting deep neural networks in energy- and memory-constrained devices. However, there is a limit to reducing precision because of the inevitable loss of accuracy caused by quantization error. To overcome this obstacle, we propose methods for reforming and quantizing a network that achieve high accuracy even at low precision without any runtime overhead in embedded accelerators. Our proposition consists of two analytical approaches: 1) network optimization to find the most error-resilient equivalent network in the precision-constrained environment and 2) quantization exploiting adaptive rounding offset control. The experimental results show accuracies of up to 98.31% and 99.96% of floating-point results in 6-bit and 8-bit quantization networks, respectively. In addition, our methods allow a lower-precision accelerator design, reducing the energy consumption by 8.5%.

[Award ceremony picture, Li Zhiyong, left side]

KAIST EE Ph.D. student Zhiyong Li (Advised by Prof. Hoi-Jun Yoo) won the Outstanding Student Design Award at the 2022 IEEE Custom Integrated Circuits Conference (CICC). The conference was held in California, U.S. from April 24th to 27th. CICC is an international conference held annually by IEEE. Ph.D. student Zhiyong Li has published a paper titled “An 0.92mJ/frame High-quality FHD Super-resolution Mobile Accelerator SoC with Hybrid-precision and Energy-efficient Cache”.

Details are as follows. Congratulations once again to Ph.D. student Zhiyong Li and Professor Hoi-Jun Yoo!

Conference: 2022 IEEE Custom Integrated Circuits Conference (CICC)

Date: April 24-27, 2022

Award: Intel & Analog Devices Outstanding Student Paper Award

Authors: Zhiyong Li, Sangjin Kim, Dongseok Im, Donghyeon Han, and Hoi-Jun Yoo (Advisory Professor)

Paper Title: An 0.92mJ/frame High-quality FHD Super-resolution Mobile Accelerator SoC with Hybrid-precision and Energy-efficient Cache

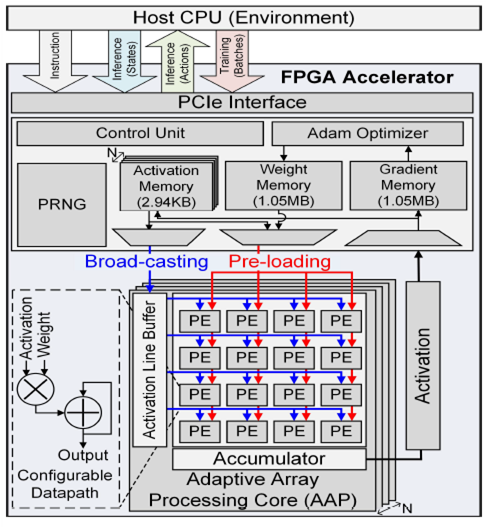

Deep reinforcement learning (DRL) is a powerful technology for dealing with decision-making problems in various application domains such as robotics and gaming, by allowing an agent to learn its action policy in an environment to maximize a cumulative reward. Unlike supervised models, which actively use data quantization, DRL still uses single-precision floating-point for training accuracy, while it suffers from computationally intensive deep neural network (DNN) computations.

In this paper, we present a deep reinforcement learning acceleration platform named FIXAR, which employs fixed-point data types and arithmetic units for the first time using a SW/HW co-design approach. We propose a quantization-aware training algorithm in fixed-point, which makes it possible to reduce the data precision by half after a certain amount of training time without losing accuracy. We also design an FPGA accelerator that employs adaptive dataflow and parallelism to handle both inference and training operations. Its processing element has a configurable datapath to efficiently support the proposed quantization-aware training. We validate our FIXAR platform, where the host CPU emulates the DRL environment and the FPGA accelerates the agent’s DNN operations, by running multiple benchmarks in continuous action spaces based on a recent DRL algorithm called DDPG. Finally, the FIXAR platform achieves 25293.3 inferences per second (IPS) training throughput, which is 2.7 times higher than the CPU-GPU platform. In addition, its FPGA accelerator shows 53826.8 IPS and 2638.0 IPS/W energy efficiency, which are 5.5 times higher and 15.4 times more energy efficient than those of GPU, respectively. FIXAR also shows the best IPS throughput and energy efficiency among other state-of-the-art acceleration platforms using FPGA, even though it targets one of the most complex DNN models.
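The precision-halving idea can be illustrated with a small Python sketch of fixed-point quantization driven by a training-step schedule. The bit widths (32 bits halved to 16), the `switch_step` parameter, and the function names are assumptions for illustration only; the text above does not state the actual formats used by FIXAR.

```python
def to_fixed(x, total_bits, frac_bits):
    """Quantize x to a signed fixed-point value and return it as a float.

    Values outside the representable range saturate to the nearest limit.
    """
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))
    hi = (1 << (total_bits - 1)) - 1
    q = max(lo, min(hi, round(x * scale)))
    return q / scale


def bits_at(step, switch_step, full_bits=32):
    """Hypothetical schedule: full precision first, half precision afterwards."""
    return full_bits if step < switch_step else full_bits // 2


coarse = to_fixed(0.3, total_bits=16, frac_bits=8)      # 77 / 256
saturated = to_fixed(1000.0, total_bits=8, frac_bits=4)  # clamps to 127 / 16
early = bits_at(step=0, switch_step=100)
late = bits_at(step=100, switch_step=100)
```

A training loop would call `bits_at` each step and quantize weights and activations with the returned width, so that later iterations run at half the original precision.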