Title : T-PIM: A 2.21-to-161.08TOPS/W Processing-In-Memory Accelerator for End-to-End On-Device Training

Authors : Jaehoon Heo, Junsoo Kim, Wontak Han, Sukbin Lim, Joo-Young Kim

Publications : IEEE Custom Integrated Circuits Conference (CICC) 2022

As the number of edge devices grows to tens of billions, the focus of intelligent computing has shifted from cloud datacenters to edge devices. On-device training, which enables the personalization of a machine learning (ML) model for each user, is crucial to the success of edge intelligence. However, battery-powered edge devices cannot afford the heavy computation and memory accesses that training involves. Processing-in-Memory (PIM) is a promising technology for overcoming the memory bandwidth and energy problems by integrating processing logic into the memory. Many PIM chips [1-5] have accelerated ML inference using analog or digital logic with sparsity handling. Two-way transpose PIM [6] supports backpropagation, but it lacks the gradient calculation and weight update required for end-to-end ML training.

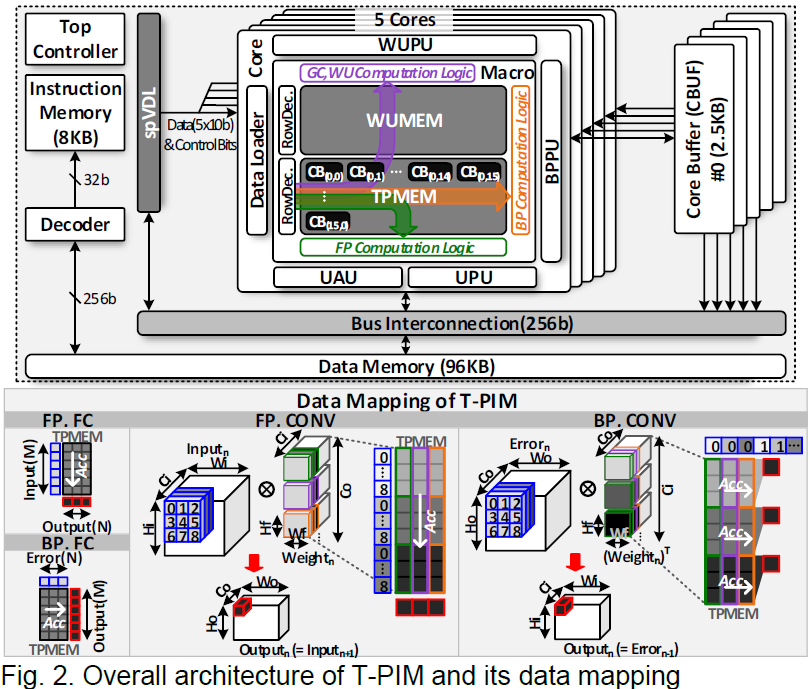

This paper presents T-PIM, the first PIM accelerator that performs end-to-end on-device training with sparsity handling and supports low-latency ML inference. T-PIM makes four key contributions: 1) T-PIM runs all four computational stages of ML training on a chip (Fig. 1). 2) T-PIM allows various data mapping strategies for the two major computational layers, i.e., fully-connected (FC) and convolutional (CONV), as well as the two computational directions, i.e., forward and backward. 3) T-PIM supports fully variable bit-width for input data and power-of-two bit-width for weight data using serial and configurable arithmetic units. 4) T-PIM accelerates ML training and reduces its energy consumption by exploiting fine-grained sparsity in all data types (activation, error, and weight).
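To make the four training stages concrete, the following is a minimal software sketch of one training step for a single FC layer, assuming the stages are feed-forward, backpropagation, gradient calculation, and weight update. The function names (`matvec`, `transpose`, `train_step`), the squared-error loss, and the plain SGD update are illustrative assumptions and do not describe the T-PIM hardware. The zero check in `matvec` only mimics the idea of skipping work for zero-valued operands; it is not the chip's sparsity mechanism.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector, skipping zero operands."""
    out = []
    for row in matrix:
        acc = 0.0
        for w, x in zip(row, vector):
            if w != 0 and x != 0:  # zero-skipping: no multiply for zero operands
                acc += w * x
        out.append(acc)
    return out


def transpose(matrix):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*matrix)]


def train_step(weights, x, target, lr):
    """One training step for y = W x with loss 0.5 * ||y - target||^2 (assumed)."""
    # Stage 1: feed-forward
    y = matvec(weights, x)
    err_out = [yi - ti for yi, ti in zip(y, target)]

    # Stage 2: backpropagation, which reads the weights in transposed order
    err_in = matvec(transpose(weights), err_out)

    # Stage 3: gradient calculation, the outer product of output error and input
    grad = [[e * xi for xi in x] for e in err_out]

    # Stage 4: weight update (plain SGD, assumed)
    new_weights = [
        [w - lr * g for w, g in zip(w_row, g_row)]
        for w_row, g_row in zip(weights, grad)
    ]
    return new_weights, y, err_in, grad


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 2.0]]
    new_W, y, err_in, grad = train_step(W, x=[1.0, 0.0], target=[0.0, 0.0], lr=0.5)
    print(new_W)
```

In this sketch, stage 2 is the only stage that needs the transposed weights, and stages 3 and 4 are the steps the text above identifies as missing from earlier backpropagation-only PIM designs.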