Changho Hwang and Taehyun Kim, Ph.D. candidates at the School of EE (advised by Prof. KyoungSoo Park, in collaboration with Prof. Jinwoo Shin and with Sunghyun Kim at MIT CSAIL), have developed CoDDL, a scalable GPU resource management system that accelerates deep learning model training. The system was developed in collaboration with the Electronics and Telecommunications Research Institute (ETRI).

The demand for GPU resources for training AI models has increased dramatically over time. Accordingly, many enterprises and cloud computing providers build their own GPU clusters and share the resources among AI model developers for training computations. Because a GPU cluster is costly to build and consumes a vast amount of electric power, it is critically important to manage GPU resources efficiently across the entire cluster.

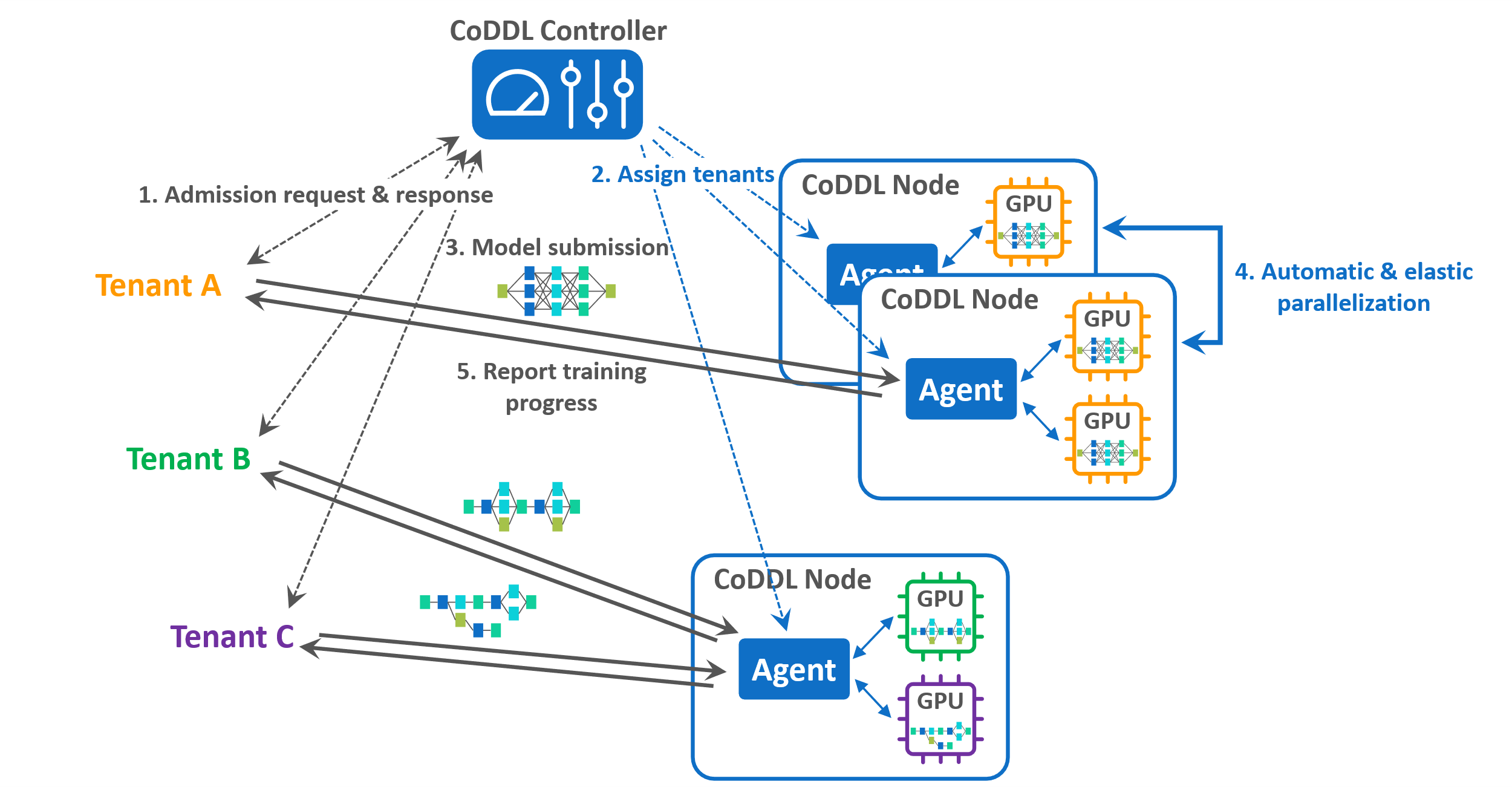

The CoDDL system automatically manages the training of multiple AI models so that they run quickly and efficiently in a GPU cluster. When a developer submits a model for training, the system accelerates the training by parallelizing its execution across multiple GPUs. In particular, CoDDL provides a high-performance job scheduler that optimizes cluster-wide performance by elastically re-adjusting the GPU shares of multiple training jobs, even while some of those jobs are already running. CoDDL is designed to minimize the system overhead of re-adjusting GPU shares, which allows the job scheduler to make precise and efficient resource allocation decisions that substantially increase overall cluster performance.
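
To make the idea of elastic share re-adjustment concrete, the following is a minimal sketch and not the CoDDL or AFS-P algorithm. It assumes a simple greedy heuristic that hands out GPUs one at a time to the job with the largest marginal throughput gain. The function `allocate_gpus`, the job names `job_a` and `job_b`, and the throughput tables are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List


def allocate_gpus(total_gpus: int, throughput: Dict[str, List[float]]) -> Dict[str, int]:
    """Greedy illustration (an assumption, not AFS-P): assign each GPU to the
    job whose throughput improves the most from one additional GPU.

    throughput[job][g] is the hypothetical throughput of `job` on g GPUs.
    """
    shares = {job: 0 for job in throughput}

    def gain(job: str) -> float:
        table = throughput[job]
        g = shares[job]
        # No measured benefit beyond the end of the table.
        return table[g + 1] - table[g] if g + 1 < len(table) else 0.0

    for _ in range(total_gpus):
        best = max(shares, key=gain)
        shares[best] += 1
    return shares


# Hypothetical throughput tables (samples/sec), indexed by GPU count 0..8.
# job_a has diminishing returns; job_b scales linearly.
JOB_A = [0, 10, 18, 24, 28, 30, 30, 30, 30]
JOB_B = [0, 5, 10, 15, 20, 25, 30, 35, 40]

# job_a runs alone and receives the whole 8-GPU cluster.
before = allocate_gpus(8, {"job_a": JOB_A})

# job_b arrives while job_a is running; shares are re-computed for both jobs.
after = allocate_gpus(8, {"job_a": JOB_A, "job_b": JOB_B})

# Change in share per job: negative means the running job gives up GPUs.
delta = {job: after[job] - before.get(job, 0) for job in after}
```

In this toy setup the running job shrinks when the new job arrives, because its later GPUs contribute less than the newcomer's first ones. A scheduler can only make such changes frequently if re-adjusting a running job is cheap, which is the overhead CoDDL is designed to minimize.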

The AFS-P job scheduler, presented along with the CoDDL system, reduces the average job completion time by up to 3.11x on a public DNN training workload trace released by Microsoft. The results were presented at USENIX NSDI 2021, one of the top conferences in networked computing systems.

Figure: Overview of the CoDDL system

More details on the research can be found at the links below.

Paper: https://www.usenix.org/system/files/nsdi21-hwang.pdf

Presentation video: https://www.usenix.org/conference/nsdi21/presentation/hwang