Title: Incorporating Multi-Context Into the Traversability Map for Urban Autonomous Driving Using Deep Inverse Reinforcement Learning

Authors: Chanyoung Jung and David Hyunchul Shim

Journal: IEEE Robotics and Automation Letters (presented at IEEE ICRA 2021)

Abstract: Autonomous driving in urban environments with surrounding agents remains challenging. One of the key challenges is to accurately predict the traversability map, which probabilistically represents future trajectories while considering multiple contexts: inertial, environmental, and social. Various approaches have been proposed to address this; however, they mainly focus on a single context. In addition, most studies rely on expensive prior information about the driving environment (such as HD maps), which does not scale well.

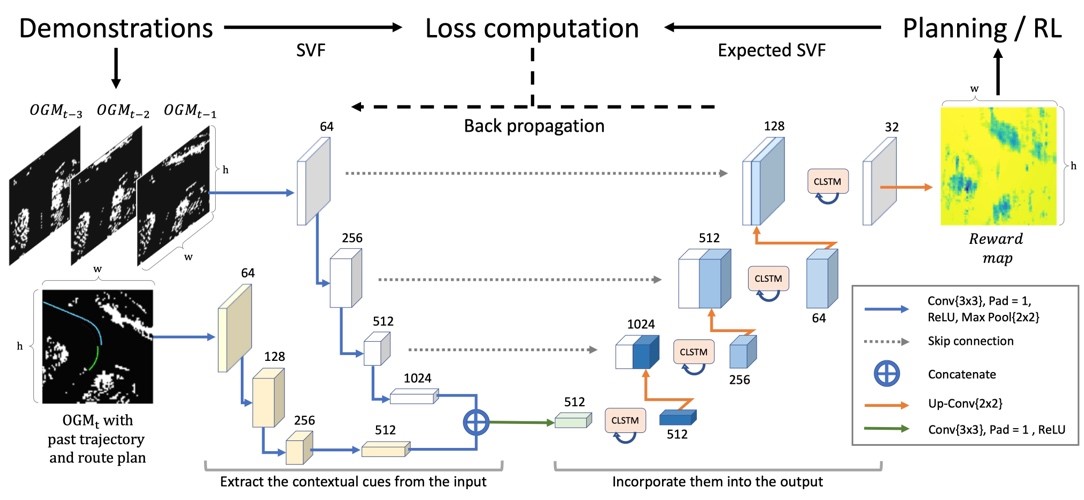

In this study, we extend a deep inverse reinforcement learning (DIRL)-based approach to predict the traversability map while incorporating multiple contexts for autonomous driving in dynamic environments. Instead of relying on expensive prior information about the driving scene, we propose a novel deep neural network that extracts contextual cues from sensing data and effectively incorporates them into the output, i.e., the reward map. From this reward map, our method predicts an ego-centric traversability map that represents the probability distribution of plausible and socially acceptable future trajectories.
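As a rough illustration of how a reward map can be turned into a traversability map, the sketch below follows the standard maximum-entropy IRL recipe: a finite-horizon soft value iteration (backward pass), then propagation of the state distribution under the resulting stochastic policy (forward pass), whose accumulated state visitation frequencies are normalized into a probability map. This is a minimal pure-Python sketch, not the authors' implementation; the grid layout, the stay-plus-4-connected action set, the horizon, and the names `traversability_map`, `successors`, and `logsumexp` are all hypothetical.

```python
import math

# Hypothetical action set (assumption, not from the paper): stay in place
# or move to one of the 4-connected neighboring cells.
ACTIONS = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) for a non-empty list."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def successors(r, c, height, width):
    """Return the in-bounds cells reachable from (r, c) in one step."""
    cells = []
    for dr, dc in ACTIONS:
        nr, nc = r + dr, c + dc
        if 0 <= nr < height and 0 <= nc < width:
            cells.append((nr, nc))
    return cells


def traversability_map(reward, start, horizon):
    """Normalized expected state visitation frequency (SVF) of a MaxEnt policy.

    reward:  2-D list (height x width) of per-cell rewards, e.g. a reward map.
    start:   (row, col) cell of the ego vehicle at time step 0.
    horizon: number of time steps T over which visits are counted.
    """
    height, width = len(reward), len(reward[0])
    cells = [(r, c) for r in range(height) for c in range(width)]

    # Backward pass: finite-horizon soft value iteration with V_T(s) = 0.
    # policies[t][cell] lists (next_cell, probability) pairs at time step t.
    value_next = {cell: 0.0 for cell in cells}
    policies = [None] * horizon
    for t in reversed(range(horizon)):
        value, policy = {}, {}
        for (r, c) in cells:
            nexts = successors(r, c, height, width)
            q = [reward[r][c] + value_next[n] for n in nexts]  # Q_t(s, a)
            v = logsumexp(q)                                   # V_t(s)
            value[(r, c)] = v
            policy[(r, c)] = [(n, math.exp(qi - v)) for n, qi in zip(nexts, q)]
        policies[t] = policy
        value_next = value

    # Forward pass: propagate the state distribution D_t and accumulate the SVF.
    dist = {cell: 0.0 for cell in cells}
    dist[start] = 1.0
    svf = {cell: 0.0 for cell in cells}
    for t in range(horizon):
        new_dist = {cell: 0.0 for cell in cells}
        for cell, p in dist.items():
            if p == 0.0:
                continue
            svf[cell] += p
            for n, prob in policies[t][cell]:
                new_dist[n] += p * prob
        dist = new_dist

    # Each D_t sums to 1, so dividing by T yields a probability map over cells.
    return [[svf[(r, c)] / horizon for c in range(width)] for r in range(height)]
```

For example, `traversability_map([[-5.0, 0.0, 0.0]], start=(0, 1), horizon=3)` places more probability on the right cell than on the low-reward left cell. In practice the reward map would be produced by the network rather than written by hand, and the per-cell loops would normally be vectorized over the grid.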

The proposed method is evaluated qualitatively and quantitatively against various baselines in real-world traffic scenarios. The experimental results show that our method achieves higher prediction accuracy than the baseline methods and predicts future trajectories similar to those followed by human drivers.

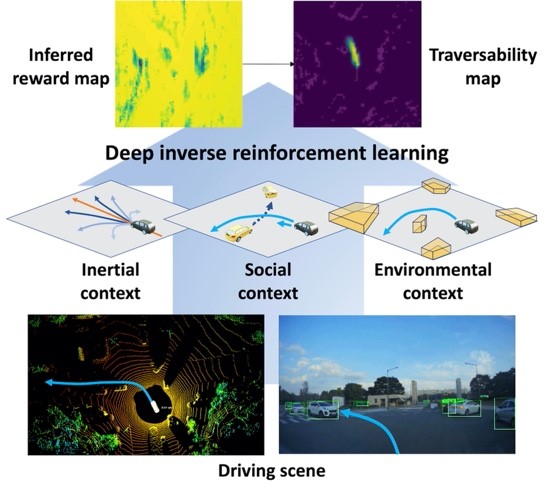

Caption 1. Scheme for predicting the traversability map, incorporating inertial, environmental, and social contexts, using the deep inverse reinforcement learning (DIRL) framework.

Caption 2. Illustration of the proposed network architecture, which serves as the reward function approximator, and of the training procedure. The encoder module, with two branches, extracts contextual cues from the input data. A convolutional long short-term memory (ConvLSTM)-based decoder module incorporates these cues into the output reward map. Given the inferred reward map, the difference between the demonstration and expected state visitation frequencies (SVFs) is used as the training signal.
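The training signal described above matches the standard deep maximum-entropy IRL gradient. Written in hypothetical notation (a reward map $r_\theta = g(\mathbf{x}; \theta)$ produced by a network $g$ with parameters $\theta$ from input $\mathbf{x}$, demonstrations $\mathcal{D}$, demonstration SVF $\mu_{\mathcal{D}}$, and expected SVF $\mathbb{E}[\mu]$ under the current reward map), the gradient of the demonstration log-likelihood is the SVF difference, backpropagated through the network by the chain rule:

```latex
\begin{aligned}
\mathcal{L}_{\mathcal{D}}(\theta) &= \log P(\mathcal{D} \mid r_\theta),
  \qquad r_\theta = g(\mathbf{x}; \theta), \\
\frac{\partial \mathcal{L}_{\mathcal{D}}}{\partial \theta}
  &= \frac{\partial \mathcal{L}_{\mathcal{D}}}{\partial r_\theta}\,
     \frac{\partial r_\theta}{\partial \theta}
   = \left( \mu_{\mathcal{D}} - \mathbb{E}[\mu] \right)
     \frac{\partial r_\theta}{\partial \theta}.
\end{aligned}
```

Under gradient ascent, cells that the demonstration visits more often than the current model expects have their reward increased, and cells visited less often have it decreased.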

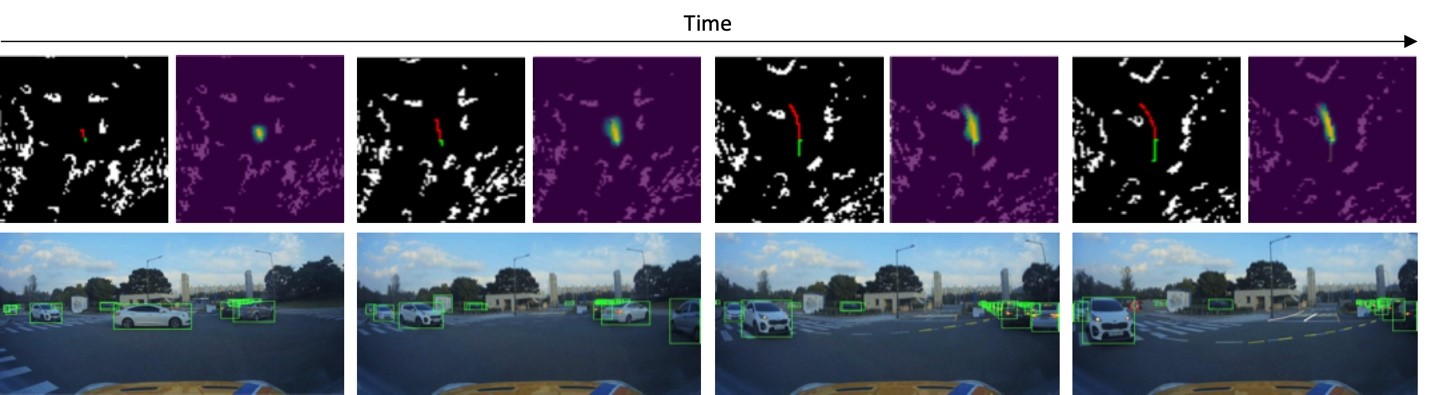

Caption 3. Visualization of traversability map predictions over time. The first row shows the occupancy grid map (OGM) followed by the traversability map overlaid with the demonstration (in red). The second row shows the front-view image with neighboring vehicles marked by green bounding boxes.