Sangwon Lee, Gyuyoung Park, and Myoungsoo Jung

12th USENIX Workshop on Hot Topics in Storage and File Systems (HotStorage), 2020, Poster

https://www.usenix.org/conference/hotstorage20/presentation/lee

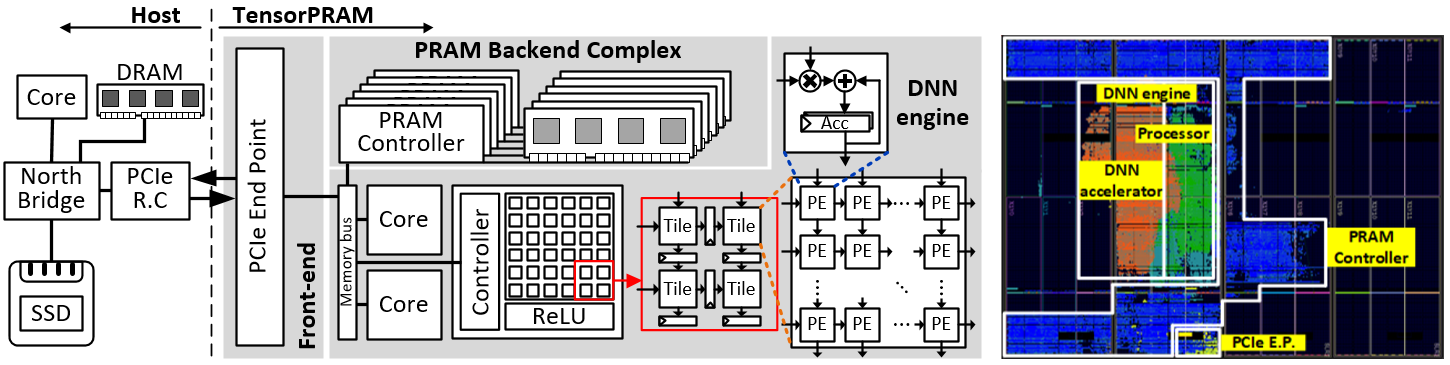

We present TensorPRAM, a scalable heterogeneous deep learning accelerator that implements a domain-specific architecture on an FPGA and can serve as a building block of a computational array for deep neural networks (DNNs). The current design of TensorPRAM includes systolic-array hardware that accelerates the general matrix multiplication (GEMM) and convolution operations of DNNs. To reduce the data movement overhead between the host and the accelerator, we further replace TensorPRAM’s on-board memory with a dense but byte-addressable storage-class memory (PRAM). We prototype TensorPRAM by placing all the logic of a general-purpose processor, a front-end host interface module, a systolic array, and PRAM controllers in a single FPGA chip, so that one or more TensorPRAMs can be attached to the host over a PCIe fabric as a scalable option. Our real-system evaluations show that TensorPRAM reduces the execution time of various DNN workloads by 99% and 48% on average, compared to a processor-only accelerator and a systolic-array-only accelerator, respectively.
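
To make the systolic-array idea concrete, the following is a minimal software sketch of how such an array can compute a GEMM. It is an illustration only, not the TensorPRAM hardware design: the output-stationary dataflow, the input skewing scheme, and the function name `systolic_gemm` are all assumptions chosen for this example, since the abstract does not specify the dataflow the prototype uses.

```python
# Illustrative cycle-level model of an output-stationary systolic array.
# Assumption: processing element PE(i, j) holds the accumulator for C[i][j].
# Rows of A enter from the left and columns of B enter from the top, each
# delayed (skewed) by its row or column index, so that at cycle t PE(i, j)
# sees the operand pair A[i][k] and B[k][j] with k = t - i - j.


def systolic_gemm(a, b):
    """Multiply an M x K matrix by a K x N matrix (lists of lists).

    Returns (c, cycles), where c is the M x N product and cycles is the
    number of cycles the modeled array needs to finish all accumulations.
    """
    m, k_dim = len(a), len(a[0])
    if len(b) != k_dim:
        raise ValueError("inner dimensions of a and b must match")
    n = len(b[0])

    c = [[0] * n for _ in range(m)]

    # The last operand pair reaches PE(m-1, n-1) at cycle
    # (m - 1) + (n - 1) + (k_dim - 1), so m + n + k_dim - 2 cycles suffice.
    cycles = m + n + k_dim - 2

    for t in range(cycles):
        # In hardware every PE works in parallel; here we loop over them.
        for i in range(m):
            for j in range(n):
                k = t - i - j  # index of the operand pair arriving now
                if 0 <= k < k_dim:
                    c[i][j] += a[i][k] * b[k][j]
    return c, cycles


if __name__ == "__main__":
    product, cycles = systolic_gemm([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product, cycles)
```

In this model each PE performs at most one multiply-accumulate per cycle and only exchanges operands with its neighbors, which is the property that lets a systolic array reuse each fetched operand across a whole row or column of PEs. Convolution layers are commonly mapped onto the same structure by first lowering them to a matrix multiplication; whether TensorPRAM does so is not stated in the abstract.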