EE Professor Rhu, Minsoo’s Research Team Builds First-ever Privacy-aware Artificial Intelligence Semiconductor, Speeding Up Differentially Private Learning 3.6 Times over Google’s TPUv3

[Professor Rhu, Minsoo]

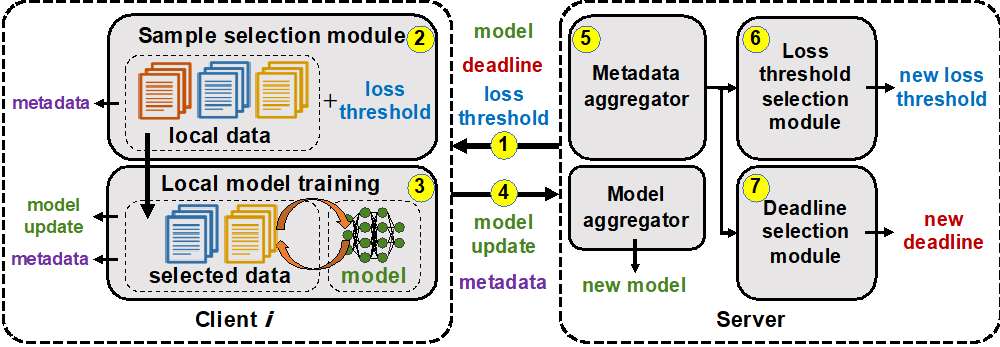

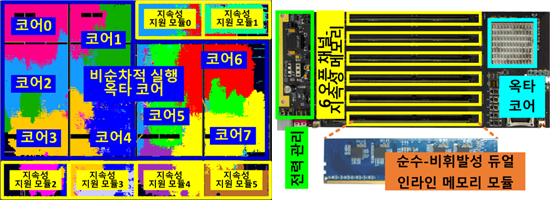

EE Professor Rhu and his research team have taken artificial intelligence semiconductors a big leap forward for differentially private machine learning. The team analyzed the performance bottleneck in differentially private machine learning and devised a semiconductor chip that greatly improves the performance of such applications.

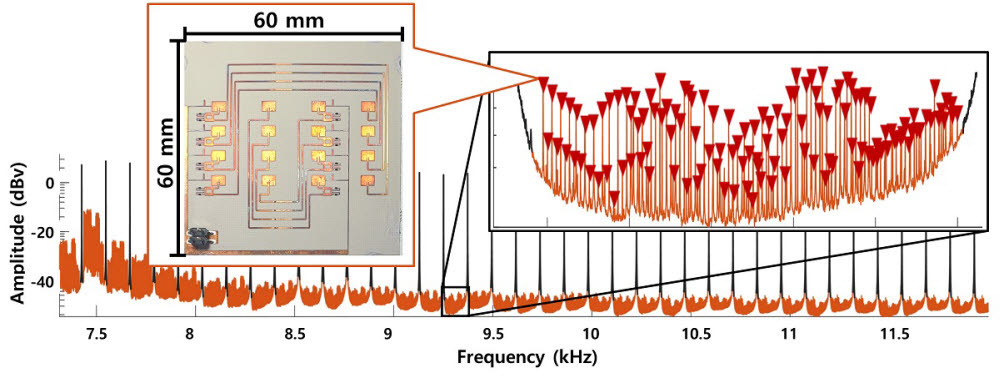

Professor Rhu’s artificial intelligence chip consists of, among other components, an outer-product-based arithmetic unit and an adder-tree-based post-processing arithmetic unit. It runs the differentially private learning process 3.6 times faster than Google’s TPUv3, today’s most widely used AI processor.

The new chip also delivers performance comparable to that of NVIDIA’s A100 GPU while using only one-tenth of the resources.
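For context, the workload being accelerated can be sketched in a few lines. The release does not spell out the training algorithm, so the following assumes the widely used DP-SGD recipe (clip each example's gradient, sum, add Gaussian noise) applied to a single linear layer. The function names and the pure-Python layout are illustrative only and are not taken from the DiVa design. In this recipe each per-example gradient is an outer product of two vectors, and its norm is a sum-of-squares reduction, which is the kind of arithmetic the chip's two units would plausibly target.

```python
import math
import random


def outer(u, v):
    """Outer product of two vectors, returned as a list of rows."""
    return [[ui * vj for vj in v] for ui in u]


def l2_norm(matrix):
    """L2 (Frobenius) norm: a sum-of-squares reduction over all entries."""
    return math.sqrt(sum(x * x for row in matrix for x in row))


def dp_sgd_gradient(errors, inputs, clip_norm, noise_multiplier, rng):
    """Privatized average gradient for one linear layer y = W x.

    Assumption: standard DP-SGD, not the chip's actual dataflow.
    errors[i] is the output-gradient vector for example i and
    inputs[i] is that example's input vector (both hypothetical names).
    """
    rows, cols = len(errors[0]), len(inputs[0])
    total = [[0.0] * cols for _ in range(rows)]
    for err, x in zip(errors, inputs):
        grad = outer(err, x)  # per-example weight gradient
        # Scale the gradient down so its norm is at most clip_norm.
        scale = min(1.0, clip_norm / max(l2_norm(grad), 1e-12))
        for r in range(rows):
            for c in range(cols):
                total[r][c] += scale * grad[r][c]
    # Gaussian noise calibrated to the clipping bound, then average.
    sigma = noise_multiplier * clip_norm
    n = len(inputs)
    return [
        [(total[r][c] + rng.gauss(0.0, sigma)) / n for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    print(dp_sgd_gradient([[3.0, 0.0]], [[0.0, 4.0]], 6.0, 1.0, rng))
```

Setting `noise_multiplier=0` returns just the clipped average, which makes the clipping step easy to check by hand. The per-example loop is the part that ordinary batched training avoids, since it normally needs only the summed gradient.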

[From left: co-lead authors Park, Beomsik and Hwang, Ranggi; co-authors Yoon, Dongho and Choi, Yoonhyuk]

This work, with EE researchers Park, Beomsik and Hwang, Ranggi as co-first authors, will be presented as “DiVa: An Accelerator for Differentially Private Machine Learning” at the 55th IEEE/ACM International Symposium on Microarchitecture (MICRO 2022), a premier venue for computer architecture research, to be held October 1 through 5 in Chicago, USA.

Professor Rhu’s achievements have been covered by multiple press outlets.

Links:

AI Times: http://www.aitimes.com/news/articleView.html?idxno=146435

Yonhap: https://www.yna.co.kr/view/AKR20201116072400063?input=1195m

Financial News: https://www.fnnews.com/news/202208212349474072

Donga Science: https://www.dongascience.com/news.php?idx=55893

Industry News: http://www.industrynews.co.kr/news/articleView.html?idxno=46829