Juseung Yun, Byungjoo Kim and Junmo Kim

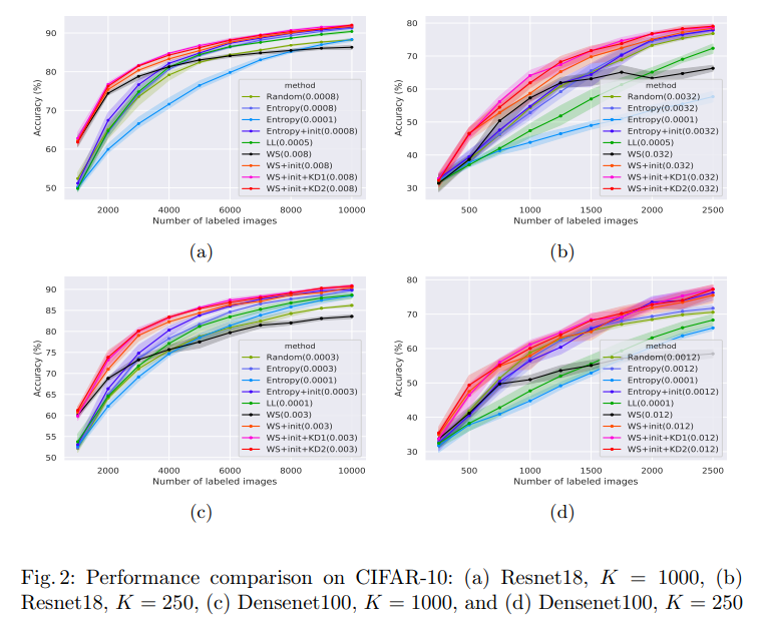

Although convolutional neural networks perform extremely well on numerous computer vision tasks, they require a large amount of labeled data to ensure a good outcome. Data labeling is labor-intensive, and in some cases the labeling budget is limited. Active learning is a technique that can reduce the amount of labeling required. With this technique, the neural network itself selects the unlabeled data most helpful for learning and then requests the labels from a human annotator. Most existing active learning methods have focused on acquisition functions for effectively selecting informative samples. In this paper, we instead focus on the data-incremental nature of active learning and propose a method for properly tuning the weight decay as the amount of data increases. We also demonstrate that performance can be improved by knowledge distillation using a low-performance teacher model trained in the previous acquisition step. In addition, we present a novel perspective on weight decay: it provides a regularization effect by limiting the number of effective parameters and channels in the convolutional filters. We validate our methods on the MNIST, CIFAR-10, and CIFAR-100 datasets using convolutional neural networks of various sizes.