KAIST PhD candidates Soo Ye Kim from the School of Electrical Engineering (advisor: Prof. Munchurl Kim), Sanghyun Woo from the School of Electrical Engineering (advisor: Prof. In So Kweon), and Hae Beom Lee from the Kim Jaechul Graduate School of AI (advisor: Prof. Sung Ju Hwang) were selected as recipients of the 2021 Google PhD Fellowship.

< The 2021 Google PhD fellows Soo Ye Kim, Sanghyun Woo, and Hae Beom Lee (from left) >

The Google PhD Fellowship is a scholarship program that recognizes outstanding graduate students for their exceptional and innovative research in computer science and related fields. This year, 75 students from around the world received the fellowship. Selected fellows receive a $10,000 scholarship and the opportunity to discuss their research with experts at Google and receive feedback from them.

Soo Ye Kim and Sanghyun Woo were named fellows in the field of “Machine Perception, Speech Technology and Computer Vision”. Soo Ye Kim was selected for her outstanding achievements in deep-learning-based super-resolution, and Sanghyun Woo was selected for his outstanding achievements in computer vision. Hae Beom Lee was named a fellow in the field of “Machine Learning” for his outstanding achievements in meta-learning.

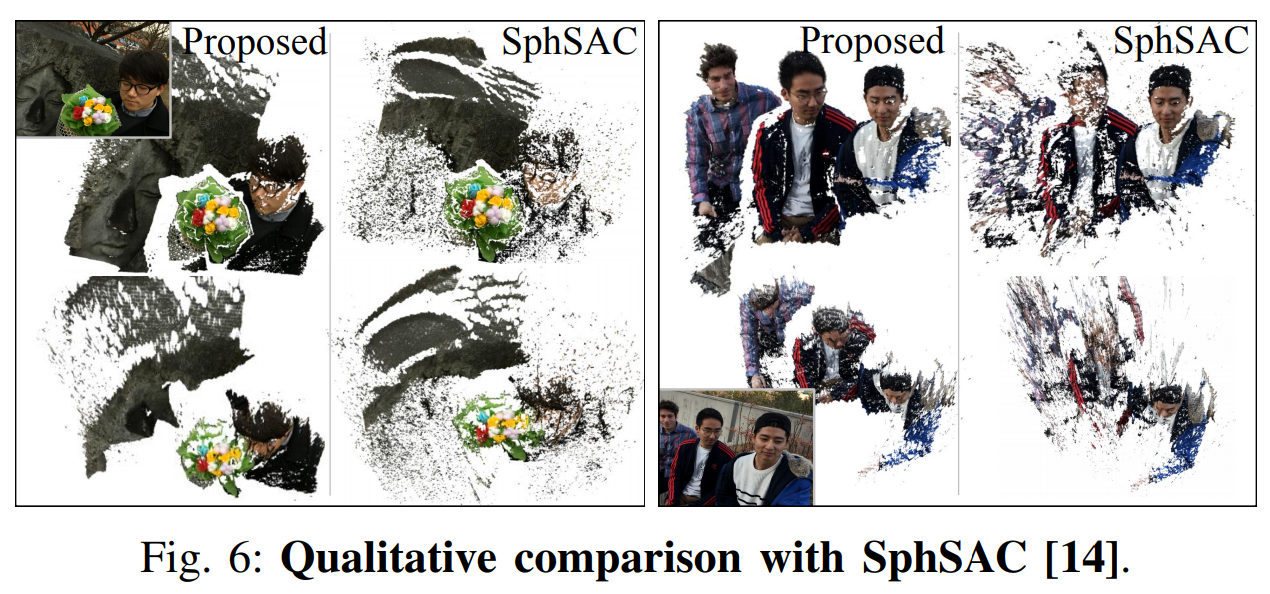

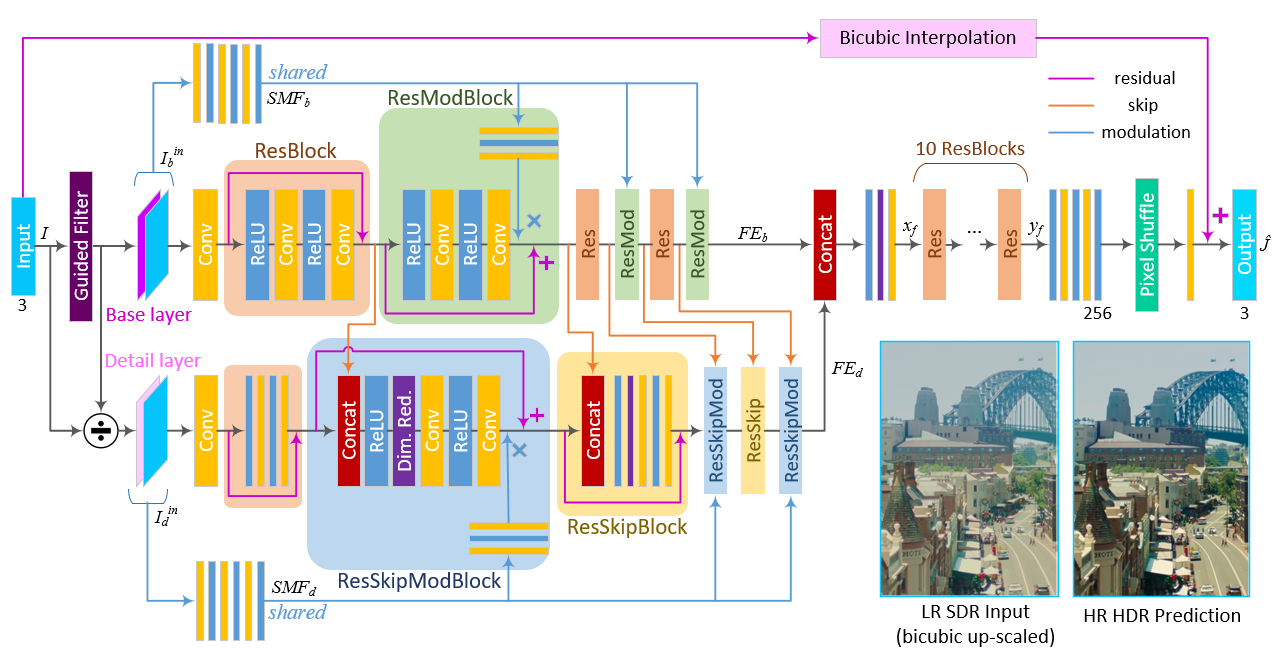

Soo Ye Kim’s research achievements include novel methods for super-resolution and HDR video restoration, as well as deep learning methods for joint frame interpolation and super-resolution. Many of her works have been presented at leading conferences in computer vision and AI such as CVPR, ICCV, and AAAI. In addition, she has been collaborating as a research intern with the Vision Group Team at Adobe Research to study depth map refinement techniques.

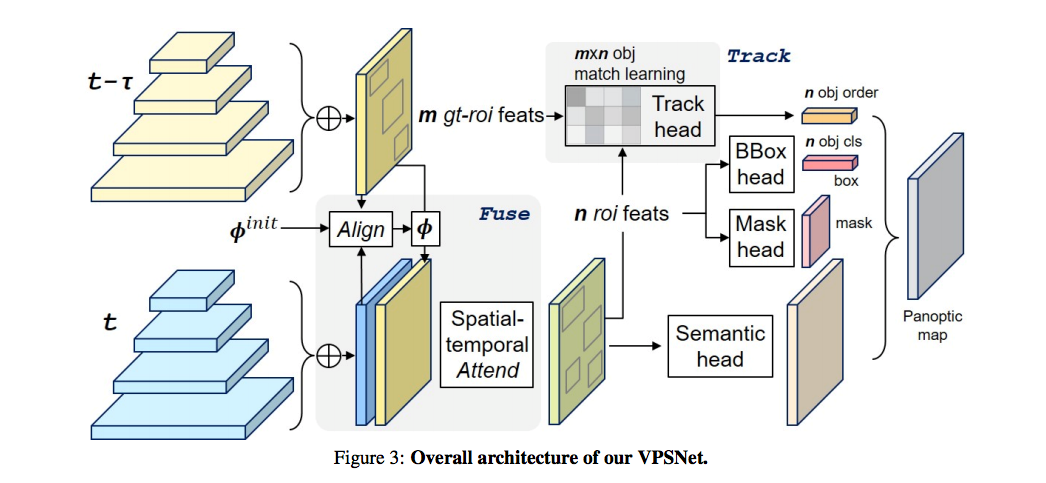

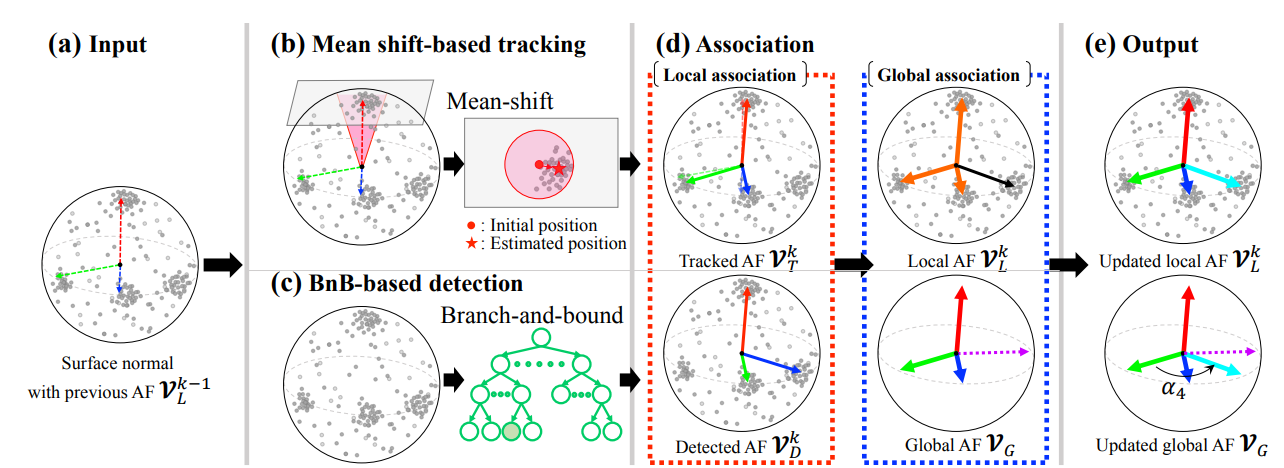

Sanghyun Woo’s research achievements include effective deep learning model designs based on the attention mechanism, and learning methods based on self-learning and simulators. His work has also been presented at leading conferences such as CVPR, ECCV, and NeurIPS. In particular, his work on the Convolutional Block Attention Module (CBAM), presented at ECCV in 2018, has surpassed 2,700 citations on Google Scholar after being referenced in many computer vision applications. He was also a recipient of the Microsoft Research PhD Fellowship in 2020.

Prof. Joonhyuk Kang, the head of KAIST EE, congratulated and encouraged both students: Sanghyun Woo for earning the fellowship while carrying out his research as research personnel for military service, and Soo Ye Kim for her great achievements through active industrial cooperation.

Due to the COVID-19 pandemic, the award ceremony was held virtually at the Google PhD Fellow Summit from August 31st to September 1st. The list of fellowship recipients is available on Google's website.

(Link: https://research.google/outreach/phd-fellowship/recipients/)

[Research achievements of Soo Ye Kim: Deep-learning-based joint super-resolution and inverse tone-mapping framework for HDR videos]

[Research achievements of Sanghyun Woo: Attention-mechanism-based deep learning models]