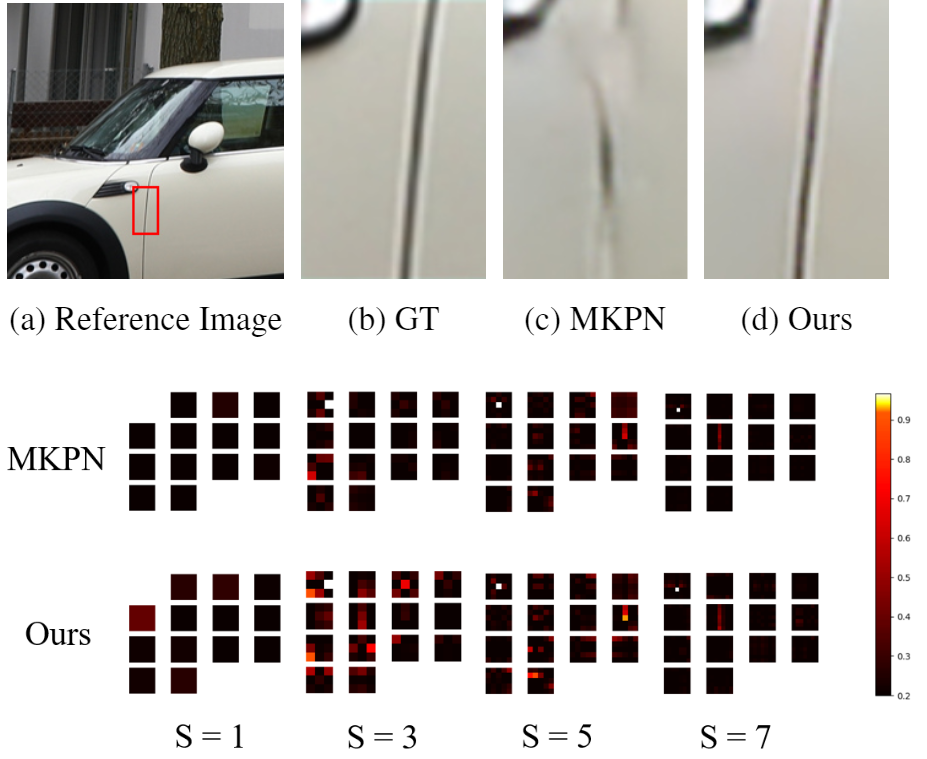

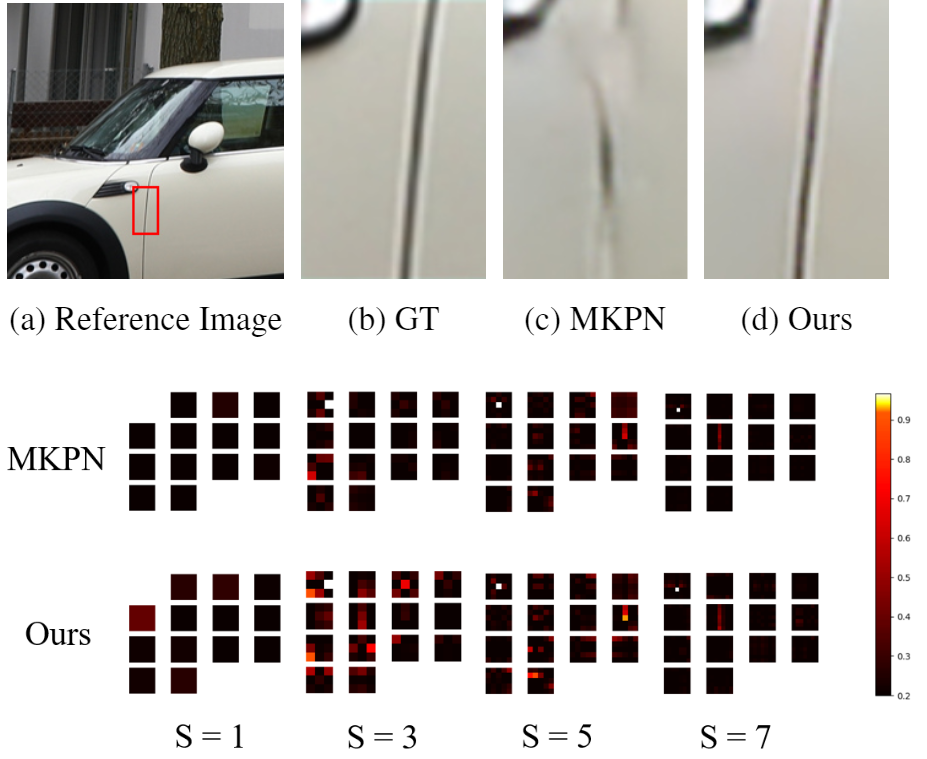

Burst image super-resolution is an ill-posed problem that aims to restore a high-resolution (HR) image from a sequence of low-resolution (LR) burst images. To restore a photo-realistic HR image using their abundant information, it is essential to align each burst of frames containing random hand-held motion. Some kernel prediction networks (KPNs) that are operated without external motion compensation such as optical flow estimation have been applied to burst image processing as implicit image alignment modules. However, the existing methods do not consider the interdependencies among the kernels of different sizes that have a significant effect on each pixel. In this paper, we propose a novel weighted multi-kernel prediction network (WMKPN) that can learn the discriminative features on each pixel for burst image super-resolution. Our experimental results demonstrate that WMKPN improves the visual quality of super-resolved images. To the best of our knowledge, it outperforms the state-of-the-art within kernel prediction methods and multiple frame super-resolution (MFSR) on both the Zurich RAW to RGB and BurstSR datasets.
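To make the idea of per-pixel kernels of different sizes more concrete, the following is a minimal sketch, not the authors' network. It assumes the kernels and per-pixel weights are already given (in a KPN they would be predicted by a network), works on a single frame rather than a burst, uses zero padding, and combines the filtered results by a per-pixel weighted sum. The function names `apply_per_pixel_kernels` and `weighted_multi_kernel` and the weighted-sum combination are illustrative assumptions.

```python
from typing import List

Image = List[List[float]]
# kernel_map[row][col] is the k x k kernel applied at that pixel.
KernelMap = List[List[List[List[float]]]]


def apply_per_pixel_kernels(image: Image, kernels: KernelMap) -> Image:
    """Filter an image with a different k x k kernel at every pixel (zero padding)."""
    height, width = len(image), len(image[0])
    radius = len(kernels[0][0]) // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    # Neighbors outside the image contribute zero.
                    if 0 <= yy < height and 0 <= xx < width:
                        acc += kernels[y][x][dy + radius][dx + radius] * image[yy][xx]
            out[y][x] = acc
    return out


def weighted_multi_kernel(
    image: Image, kernel_maps: List[KernelMap], weight_maps: List[Image]
) -> Image:
    """Assumed combination rule: per-pixel weighted sum over kernel sizes."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for kernels, weights in zip(kernel_maps, weight_maps):
        filtered = apply_per_pixel_kernels(image, kernels)
        for y in range(height):
            for x in range(width):
                out[y][x] += weights[y][x] * filtered[y][x]
    return out


# Toy inputs: a 2 x 2 image, 1 x 1 identity kernels and 3 x 3 all-ones kernels.
image = [[1.0, 2.0], [3.0, 4.0]]
identity_1x1 = [[[[1.0]] for _ in range(2)] for _ in range(2)]
box_3x3 = [[[[1.0] * 3 for _ in range(3)] for _ in range(2)] for _ in range(2)]
half = [[0.5, 0.5], [0.5, 0.5]]

result = weighted_multi_kernel(image, [identity_1x1, box_3x3], [half, half])
print(result)  # [[5.5, 6.0], [6.5, 7.0]]
```

In this toy case the 3 x 3 kernel sums all four pixels (10.0) at every location, so each output is the average of the original pixel and 10.0.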

Conference/Journal, Year: AAAI, 2020

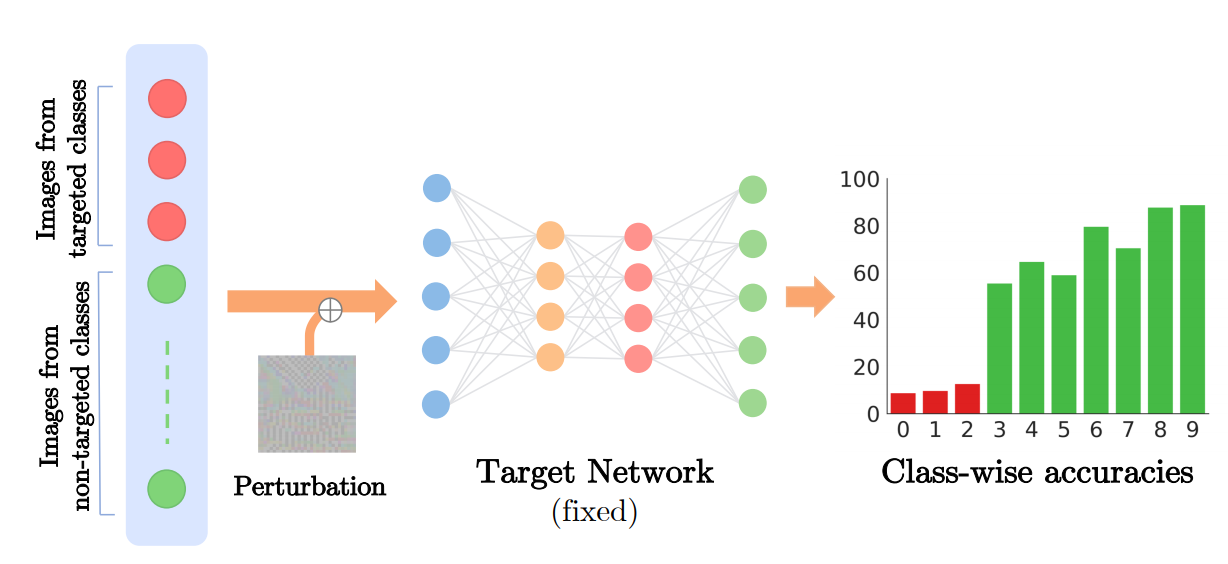

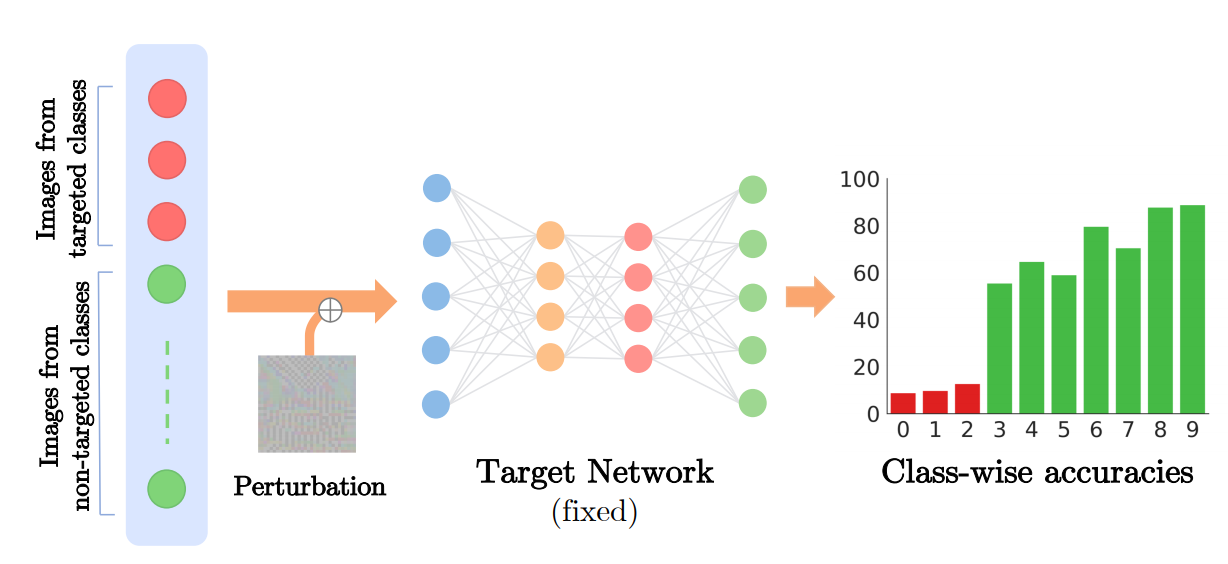

A single universal adversarial perturbation (UAP) can be added to all natural images to change most of their predicted class labels. It is of high practical relevance for an attacker to have flexible control over the targeted classes to be attacked; however, the existing UAP method attacks samples from all classes. In this work, we propose a new universal attack method to generate a single perturbation that fools a target network to misclassify only a chosen group of classes, while having limited influence on the remaining classes. Since the proposed attack generates a universal adversarial perturbation that is discriminative to targeted and non-targeted classes, we term it class discriminative universal adversarial perturbation (CD-UAP). We propose one simple yet effective algorithm framework, under which we design and compare various loss function configurations tailored for the class discriminative universal attack. The proposed approach has been evaluated with extensive experiments on various benchmark datasets. Additionally, our proposed approach achieves state-of-the-art performance for the original task of UAP attacking all classes, which demonstrates the effectiveness of our approach.
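As a rough illustration of what "class discriminative" means for a universal perturbation, the sketch below measures how often one fixed perturbation changes the prediction for targeted versus non-targeted classes. It is not the paper's algorithm or loss: the argmax "classifier", the toy three-value "images", the bound `eps`, and the names `add_perturbation` and `fooling_ratios` are all assumptions made for illustration, and class membership is taken from the clean prediction rather than a ground-truth label.

```python
from typing import Callable, List, Sequence, Set, Tuple


def add_perturbation(image: Sequence[float], delta: Sequence[float], eps: float) -> List[float]:
    """Add the same perturbation to any image, clipping each entry to [-eps, eps]."""
    return [x + max(-eps, min(eps, d)) for x, d in zip(image, delta)]


def fooling_ratios(
    classify: Callable[[Sequence[float]], int],
    images: Sequence[Sequence[float]],
    delta: Sequence[float],
    eps: float,
    targeted_classes: Set[int],
) -> Tuple[float, float]:
    """Return (fooling ratio on targeted classes, fooling ratio on the rest)."""
    fooled_t = total_t = fooled_n = total_n = 0
    for image in images:
        clean = classify(image)
        adversarial = classify(add_perturbation(image, delta, eps))
        if clean in targeted_classes:
            total_t += 1
            fooled_t += int(adversarial != clean)
        else:
            total_n += 1
            fooled_n += int(adversarial != clean)
    ratio_t = fooled_t / total_t if total_t else 0.0
    ratio_n = fooled_n / total_n if total_n else 0.0
    return ratio_t, ratio_n


def toy_classifier(image: Sequence[float]) -> int:
    """Hypothetical stand-in for a network: the class is the index of the largest value."""
    return max(range(len(image)), key=lambda i: image[i])


images = [
    [1.0, 0.8, 0.0],  # class 0
    [0.9, 0.7, 0.1],  # class 0
    [0.0, 0.2, 1.0],  # class 2
    [0.1, 0.0, 0.9],  # class 2
]
delta = [-0.3, 0.3, 0.0]  # clipped to [-0.2, 0.2, 0.0] by eps below

targeted, non_targeted = fooling_ratios(toy_classifier, images, delta, eps=0.2, targeted_classes={0})
print(targeted, non_targeted)  # 1.0 0.0
```

Here the single perturbation flips every class-0 sample to class 1 while leaving the class-2 samples unchanged, which is the behavior a class discriminative attack aims for.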

Conference/Journal, Year: WACV, 2020

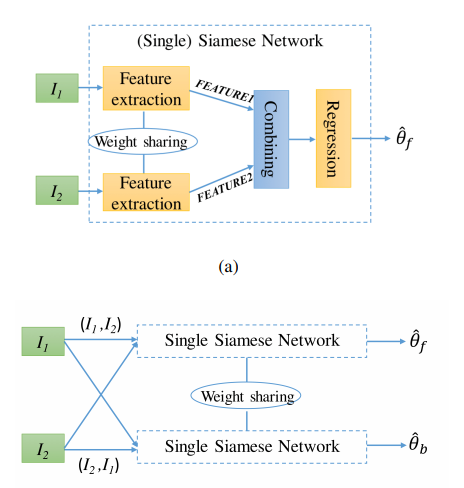

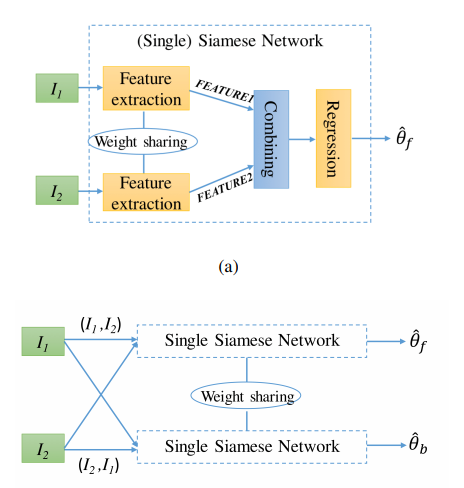

Rotating and zooming cameras, also called PTZ (Pan-Tilt-Zoom) cameras, are widely used in modern surveillance systems. While their zooming ability allows acquiring detailed images of the scene, it also makes their calibration more challenging since any zooming action results in a modification of their intrinsic parameters. Therefore, such camera calibration has to be computed online; this process is called self-calibration. In this paper, given an image pair captured by a PTZ camera, we propose a deep learning based approach to automatically estimate the focal length and distortion parameters of both images as well as the rotation angles between them. The proposed approach relies on a dual-Siamese structure, imposing bidirectional constraints. The proposed network is trained on a large-scale dataset automatically generated from a set of panoramas. Empirically, we demonstrate that our proposed approach achieves competitive performance with respect to both deep learning based and traditional state-of-the-art methods. Our code and model will be publicly available at https://github.com/ChaoningZhang/DeepPTZ.
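The statement that zooming modifies the intrinsic parameters can be illustrated with the standard pinhole projection. The sketch below is only that textbook model, not the proposed network: it ignores lens distortion and rotation, and the function name `project` and the numeric values are assumptions chosen for illustration.

```python
from typing import Tuple


def project(
    point: Tuple[float, float, float], focal_length: float, cx: float, cy: float
) -> Tuple[float, float]:
    """Project a 3D point in camera coordinates to pixel coordinates (pinhole model)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z + cx, focal_length * y / z + cy)


point = (1.0, 2.0, 4.0)
wide = project(point, focal_length=100.0, cx=50.0, cy=50.0)
zoomed = project(point, focal_length=200.0, cx=50.0, cy=50.0)
print(wide)    # (75.0, 100.0)
print(zoomed)  # (100.0, 150.0)
```

Doubling the focal length doubles the offset of the projected point from the principal point, so a calibration estimated at one zoom level no longer describes the camera after zooming.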

Conference/Journal, Year: IEEE Robotics and Automation Letters (RA-L) / 2021

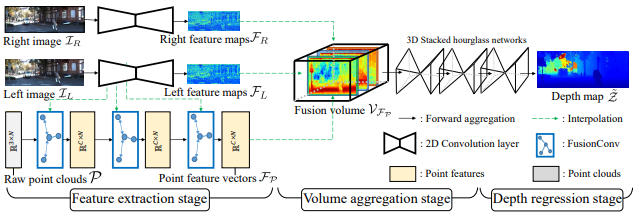

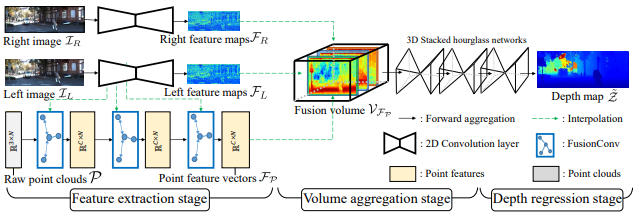

Stereo-LiDAR fusion is a promising task in that we can utilize two different types of 3D perceptions for practical usage – dense 3D information (stereo cameras) and highly accurate sparse point clouds (LiDAR). However, due to their different modalities and structures, the method of aligning sensor data is the key for successful sensor fusion. To this end, we propose a geometry-aware stereo-LiDAR fusion network for long-range depth estimation, called volumetric propagation network. The key idea of our network is to exploit sparse and accurate point clouds as a cue for guiding correspondences of stereo images in a unified 3D volume space. Unlike existing fusion strategies, we directly embed point clouds into the volume, which enables us to propagate valid information into nearby voxels in the volume, and to reduce the uncertainty of correspondences. Thus, it allows us to fuse two different input modalities seamlessly and regress a long-range depth map. Our fusion is further enhanced by a newly proposed feature extraction layer for point clouds guided by images: FusionConv. FusionConv extracts point cloud features that consider both semantic (2D image domain) and geometric (3D domain) relations and aid fusion at the volume. Our network achieves state-of-the-art performance on the KITTI and the VirtualKITTI datasets among recent stereo-LiDAR fusion methods.

Professor Munchurl Kim is Awarded Research Grand Award for 2021 KAIST Research Day

On May 25th, our department’s Professor Munchurl Kim received the Grand Award at the 2021 KAIST Research Day. Professor Changho Suh and Professor Hoi-Jun Yu were also selected for the top 10 R&D research achievements, and various other achievements were introduced in the press.

Professor Kim’s research was highly recognized for its AI-based methods of enhancing low-resolution video to high-resolution quality, and it has greatly contributed to video and resolution restoration in the field of AI.

We congratulate our department’s students and professors for their research and awards.

Website link: https://www.etnews.com/20210525000246

http://www.kyosu.net/news/articleView.html?idxno=67974

http://www.ccnnews.co.kr/news/articleView.html?idxno=220028

Our department’s Professor Dong Eui Chang was awarded the Academy Innovation Prize from the 2020 KAIA.

The KAIA (Chair: Professor Yu) held its 2020 award ceremony at the Seongnam-KAIST ICT center.

Professor Chang received the award for his contribution to autonomous drone flight technologies and the mathematical interpretation of deep learning. Additionally, Ph.D. candidate Xiaowei Xing from Professor Chang’s lab received the fall conference poster award for programming toolbox research.

The ceremony was organized to announce this year’s achievements in domestic AI research and to encourage all related researchers.

Once again, we congratulate Professor Chang on his award.

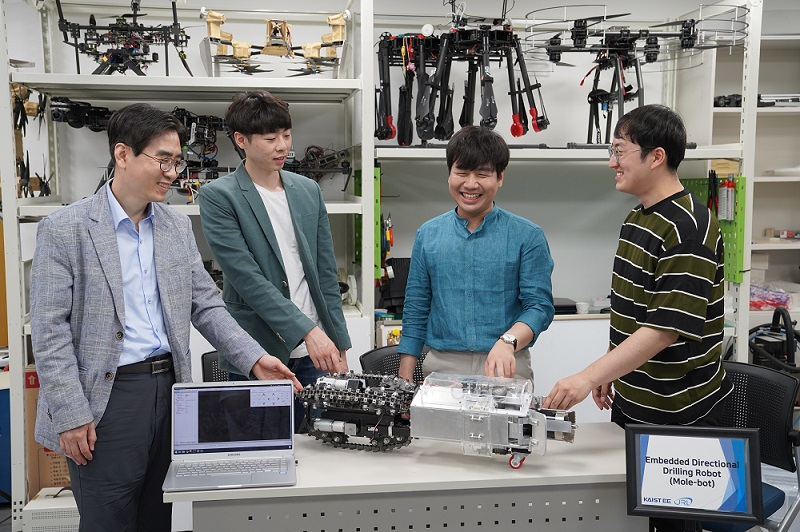

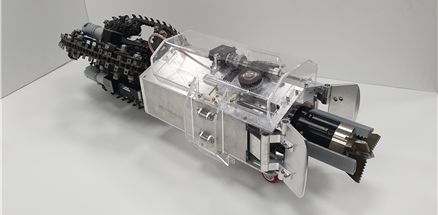

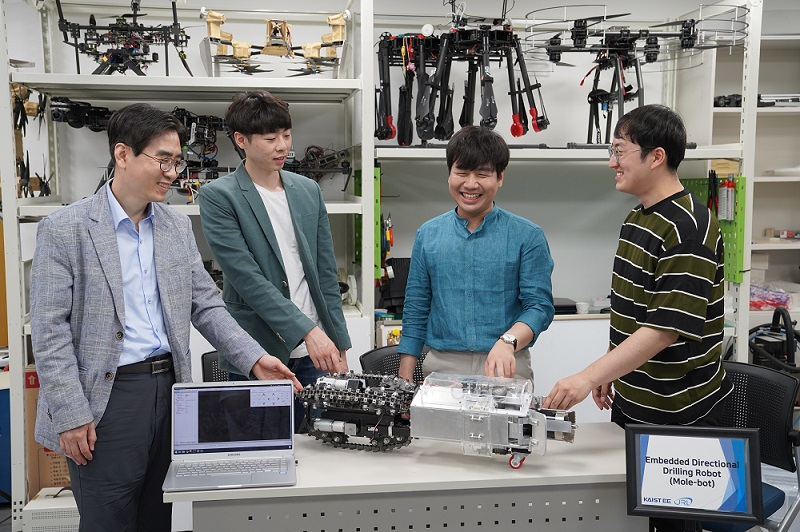

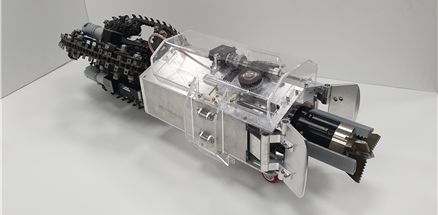

Professor Hyun-Myung’s research team succeeded in developing a biomimetic mole robot (Mole-bot) to explore underground spaces. The research results have been reported in the leading domestic media, including YTN.

Professor Hyun-Myung’s lab has been accelerating research on various kinds of robot development, including the development of robots to repel jellyfish.

Mole-bot is a biomimetic robot that imitates the mole’s biological structure and excavation habits, and it can be used effectively for unmanned exploration of underground spaces, extreme regions, and space.

Mole-bot was developed to explore areas where resources are buried, such as coal-fired methane gas, which can serve as a new energy source replacing existing sources such as petroleum and coal, or rare-earth elements used in electronic devices. Furthermore, it can be used for soil sampling on other planets.

The research team expects Mole-bot to be used in various fields and to be able to enter the world market thanks to its excellent economic efficiency.

The research has been conducted for three years since 2017, and the research team has published many papers in international journals and won awards at international academic conferences.

This study was supported by the Industrial Technology Innovation Project of the Ministry of Trade, Industry, and Energy.

Congratulations on Professor Hyun-Myung’s excellent research achievements, and we look forward to future successes.

[Link]

https://news.kaist.ac.kr/news/html/news/?mode=V&mng_no=8190

https://www.ytn.co.kr/_ln/0115_202006050248129678

https://youtu.be/pEnKy5UYEYQ

Research by Professor Jong-Hwan Kim’s lab on I-Keyboard was reported in overseas media.

The study was introduced on “The Next Web” website under the heading “AI researchers developed a fully imaginary keyboard for touchscreens and VR”. The research involves the development of machine learning technology that allows users to type on a touchscreen without a keyboard. The research paper will be published in IEEE Trans. on Cybernetics (Impact Factor: 10.387).

For future work, Professor Jong-Hwan Kim’s lab is focusing on developing an imaginary keyboard that can recognize the user’s input even when typed in the air, starting from any random point. They are also planning to apply this technology to smartphones. With further development and better touch interfaces, the team believes that I-Keyboard could be improved to become a fully virtual replacement for physical keyboards.

Congratulations on the excellent achievement by Professor Jong-Hwan Kim’s lab! We wish you the utmost success in your future research. Please refer to the link below for more details.

[Link]

https://thenextweb.com/artificial-intelligence/2019/08/01/ai-researchers-developed-a-fully-imaginary-keyboard-for-touchscreens-and-vr/

Ph.D. student Sung-Hoon Im (Advised by In-So Kweon) won the 2018 MSRA (Microsoft Research Asia) Fellowship Award.

This year, 102 distinguished Ph.D. students from 40 leading research universities or institutions were nominated for fellowships.

Only 11 students were awarded the fellowships.

A cash award and a Microsoft Research Asia internship opportunity (3 months) were provided to each fellowship winner.

Sung-Hoon Im was invited to the 2018 MSRA Fellowship Award Ceremony held in Beijing, China, on Nov. 6th.

Congratulations to Sung-Hoon Im and Prof. In-So Kweon.

Ph.D. student Da-Hoon Kim, M.S. student Sang-Hyun Woo, and Dr. Joon-young Lee (Advised by In-So Kweon) were awarded the 1st place prize in the “ChaLearn Looking at People Challenge”, co-organized by Google, Amazon, and DisneyLab, at the European Conference on Computer Vision (ECCV) held on September 9th.

The ‘Video Decaptioning’ challenge is a “video inpainting” task that recovers raw video by recognizing and eliminating arbitrarily mixed text in the video.

In particular, the work was recognized as a meaningful academic achievement for proposing a new concept for video processing, “DVDNet: Deep Blind Video Decaptioning with 3D-2D Gated Convolutions”, and the team succeeded in restoring images with overwhelming performance.

Congratulations.