Ph.D. candidate Jaehong Kim of Professor Dongsu Han’s research team has developed a high-quality live video streaming system based on online learning.

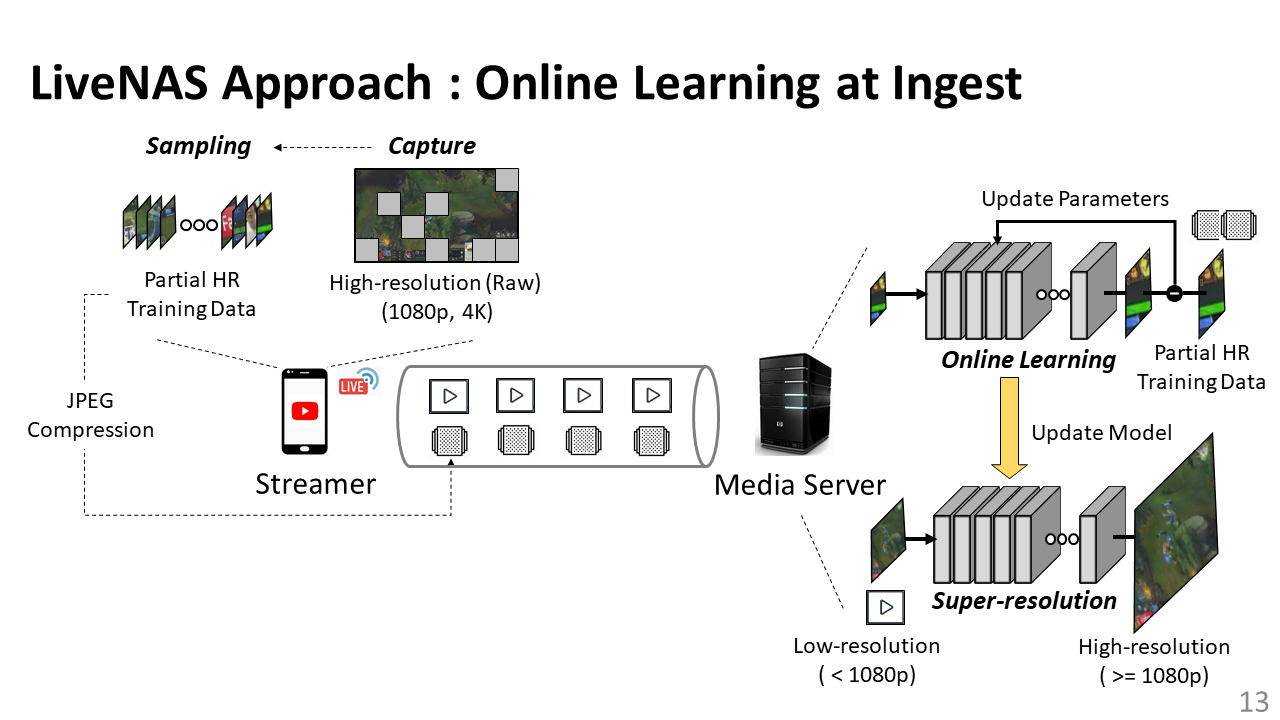

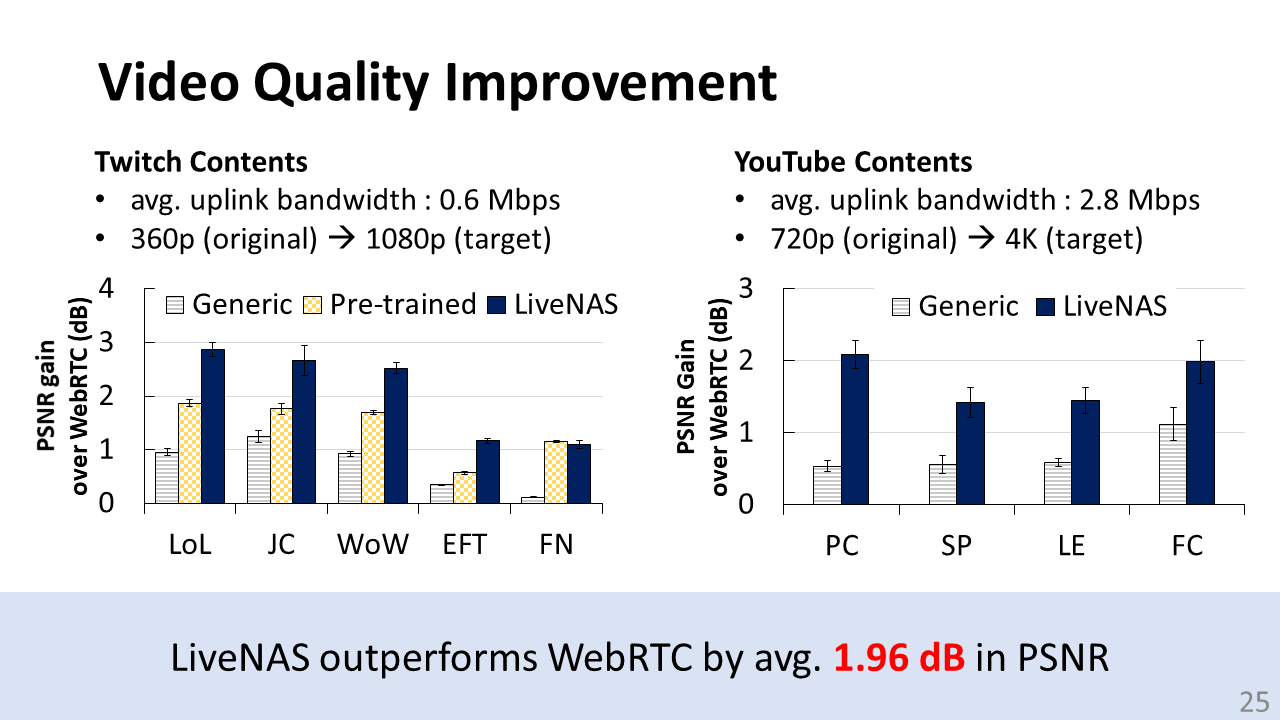

The research team improved the quality of live video collected in real time from streamers by applying deep-learning-based super-resolution on the computing resources of the media server. They also overcame a limitation of previous work, namely the performance ceiling that appears when a previously trained neural network model is applied to a new live video, by incorporating online learning. In this system, the streamer sends patches, each a part of a high-definition live video frame, to the media server alongside the live video, using transmission bandwidth set aside separately from the video itself. The server then uses the collected patches to optimize the neural network model for the live video as it arrives in real time.
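
The patch-based online learning loop described above can be illustrated with a small Python sketch. It is only a toy under stated assumptions, not the team's implementation: a three-parameter linear model stands in for the neural network, frames are one-dimensional signals, and every name in it (`TinyUpscaler`, `make_patch`, `simulate_stream`) is hypothetical. The point it shows is that a model updated with high-quality patches from the current stream reconstructs that stream better than the same model before the updates.

```python
import random


class TinyUpscaler:
    """Toy 2x upscaler for 1-D signals (a stand-in for the real neural network).

    It predicts the missing sample between two low-resolution neighbours as
    w_left * left + w_right * right + bias.
    """

    def __init__(self):
        self.w_left = 0.0
        self.w_right = 0.0
        self.bias = 0.0

    def predict(self, left, right):
        return self.w_left * left + self.w_right * right + self.bias

    @staticmethod
    def _triples(patch):
        # Even-indexed samples play the role of the low-resolution stream;
        # odd-indexed samples are the detail the model has to restore.
        for i in range(1, len(patch) - 1, 2):
            yield patch[i - 1], patch[i], patch[i + 1]

    def train_on_patch(self, patch, lr=0.1):
        """One pass of stochastic gradient descent over a high-quality patch."""
        for left, target, right in self._triples(patch):
            err = self.predict(left, right) - target
            self.w_left -= lr * err * left
            self.w_right -= lr * err * right
            self.bias -= lr * err

    def mean_squared_error(self, patch):
        errs = [(self.predict(l, r) - t) ** 2 for l, t, r in self._triples(patch)]
        return sum(errs) / len(errs)


def make_patch(rng, length=9):
    """Hypothetical high-quality patch: a smooth ramp with values in [0, 1]."""
    start = rng.uniform(0.0, 0.6)
    slope = rng.uniform(0.0, 0.05)
    return [start + slope * i for i in range(length)]


def simulate_stream(num_patches=200, seed=0):
    """Return the reconstruction error before and after online learning."""
    rng = random.Random(seed)
    held_out = [make_patch(rng) for _ in range(20)]  # content not used for training
    model = TinyUpscaler()

    def average_error():
        return sum(model.mean_squared_error(p) for p in held_out) / len(held_out)

    before = average_error()
    for _ in range(num_patches):
        # The server receives a patch from the streamer and updates the model.
        model.train_on_patch(make_patch(rng))
    return before, average_error()


if __name__ == "__main__":
    before, after = simulate_stream()
    print(f"error before online learning: {before:.6f}")
    print(f"error after online learning:  {after:.6f}")
```

In this sketch the patches are synthetic and arrive one at a time; how patches are selected and how bandwidth is divided between patches and video in the actual system is not covered here.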

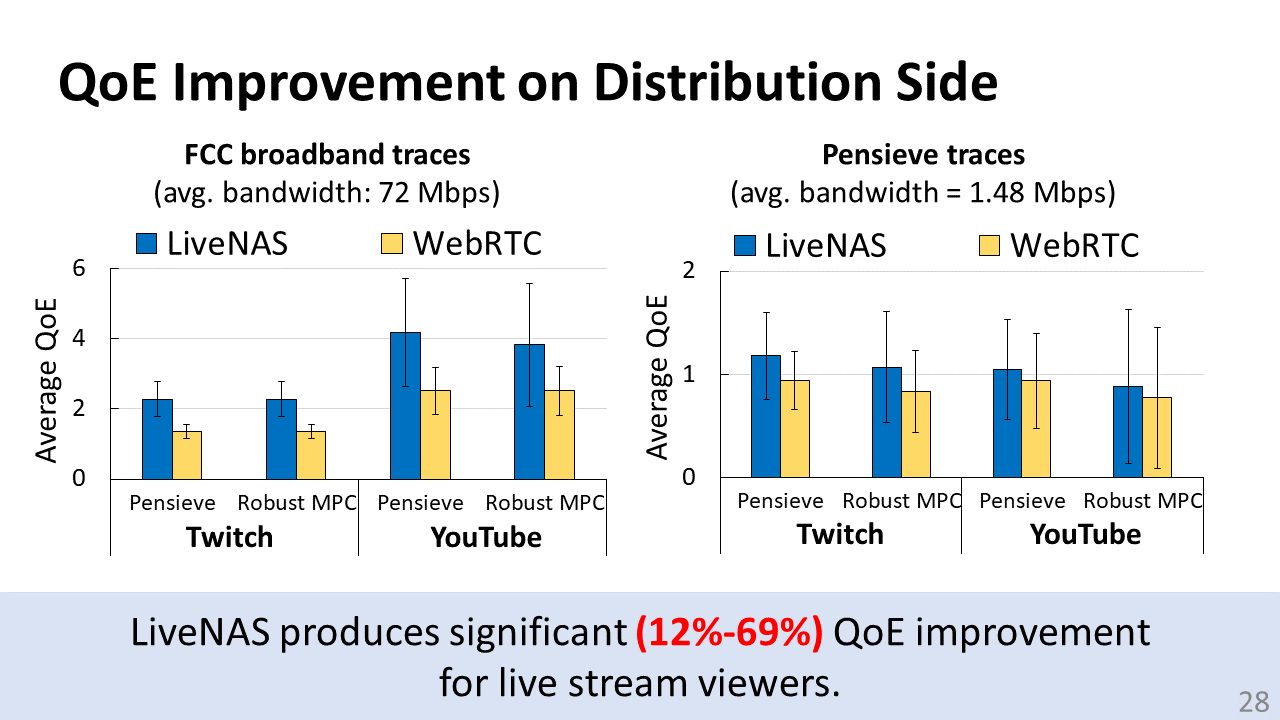

The research team reported that this technology makes live streaming services less dependent on the streamer's streaming environment and on the performance constraints of the terminal. It can also provide live video at various resolutions to viewers on the distribution side. The technology improved the user quality of experience (QoE) of live video streaming systems by 12-69% compared to previous work.

The results of this research were accepted to and presented at ACM SIGCOMM, the top academic conference in the field of computer networking.

Detailed research information can be found at the links below.

Congratulations to Professor Dongsu Han’s research team on this remarkable achievement!

[Link]

Project website: http://ina.kaist.ac.kr/~livenas/

Paper Title: Neural-Enhanced Live Streaming: Improving Live Video Ingest via Online Learning

Paper link: https://dl.acm.org/doi/abs/10.1145/3387514.3405856

Conference presentation video: https://youtu.be/1giVlO6Rumg