As generative AI technology advances, so do concerns about its potential misuse in manipulating online public opinion. Although detection tools for AI-generated text have been developed previously, most are based on long, standardized English texts and therefore perform poorly on short (average 51 characters), colloquial Korean news comments. The research team from KAIST has made headlines by developing the first technology to detect AI-generated comments in Korean.

A research team led by Professor Yongdae Kim from KAIST’s School of Electrical Engineering, in collaboration with the National Security Research Institute, has developed XDAC, the world’s first system for detecting AI-generated comments in Korean.

Recent generative AI can adjust sentiment and tone to match the context of a news article and can automatically produce hundreds of thousands of comments within hours, enabling large-scale manipulation of public discourse. Based on the pricing of OpenAI’s GPT-4o API, generating a single comment costs approximately 1 KRW. At this rate, producing the average 200,000 daily comments on major news platforms would cost only about 200,000 KRW (approx. USD 150) per day. With publicly available LLMs running on one’s own GPU infrastructure, massive volumes of comments can be generated at virtually no cost.
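The cost estimate above is simple arithmetic, sketched here for illustration. The per-comment cost and daily comment volume are the figures quoted in the article; the exchange rate is an assumption chosen only to show the conversion, and the function and variable names are hypothetical.

```python
# Figures from the article; names are illustrative only.
COST_PER_COMMENT_KRW = 1      # approximate GPT-4o API cost per generated comment
DAILY_COMMENTS = 200_000      # average daily comments on major news platforms

# Assumption: a round exchange rate used only for illustration, not from the article.
KRW_PER_USD = 1_350


def daily_cost_krw(comments: int, cost_per_comment: float) -> float:
    """Total daily cost in KRW of generating `comments` comments."""
    return comments * cost_per_comment


cost_krw = daily_cost_krw(DAILY_COMMENTS, COST_PER_COMMENT_KRW)
cost_usd = cost_krw / KRW_PER_USD
print(f"{cost_krw:,.0f} KRW per day, roughly {cost_usd:,.0f} USD")
```

Under the assumed exchange rate the result lands close to the article's figure of about USD 150 per day.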

The team conducted a human evaluation to see whether people could distinguish AI-generated comments from human-written ones. Of 210 comments tested, participants mistook 67% of AI-generated comments for human-written, while only 73% of genuine human comments were correctly identified. In other words, even humans find it difficult to tell AI comments apart from human ones. Moreover, AI-generated comments scored higher than human comments in relevance to article context (95% vs. 87%) and fluency (71% vs. 45%), and they exhibited a lower perceived bias rate (33% vs. 50%).

Until now, AI-generated text detectors have relied on long, formal English prose and have failed to perform well on brief, informal Korean comments. Such short comments lack sufficient statistical features and abound in nonstandard colloquial elements, such as emojis, slang, and repeated characters, to which existing models do not generalize well. Additionally, realistic datasets of Korean AI-generated comments have been scarce, and simple prompt-based generation methods have produced comments of limited diversity and authenticity.

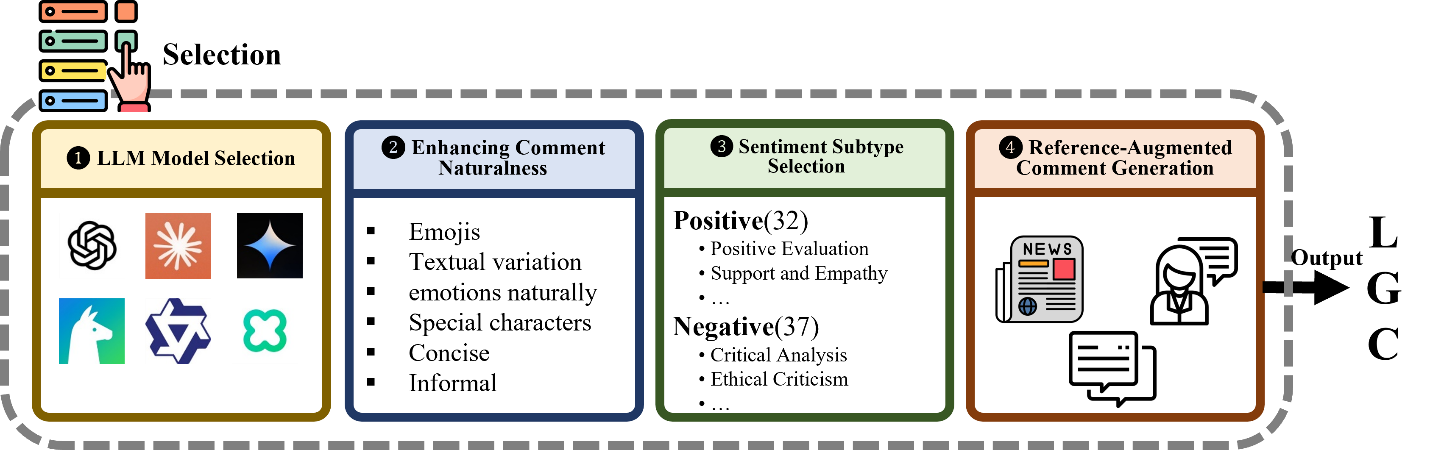

To overcome these challenges, the team developed an AI comment generation framework that employs four core strategies: 1) leveraging 14 different LLMs, 2) enhancing naturalness, 3) fine-grained emotion control, and 4) reference-based augmented generation, to build a dataset mirroring real user styles. A subset of this dataset has been released as a benchmark. By applying explainable AI (XAI) techniques to fine-grained linguistic analysis, they uncovered linguistic and stylistic features unique to AI-generated comments.

For example, AI-generated comments tended to use formal expressions like “것 같다” (“it seems”) and “에 대해” (“about”), along with a high frequency of conjunctions, whereas human commentators favored repeated characters (ㅋㅋㅋㅋ), emotional interjections, line breaks, and special symbols.

In the use of special characters, AI models predominantly employed globally standardized emojis, while real humans incorporated culturally specific characters including Korean consonants (ㅋ, ㅠ, ㅜ) and symbols (ㆍ, ♡, ★, •).

Notably, 26% of human comments included formatting characters (line breaks, multiple spaces), compared to just 1% of AI-generated ones. Similarly, repeated characters (e.g., ㅋㅋㅋㅋ, ㅎㅎㅎㅎ) appeared in 52% of human comments but only 12% of AI comments.
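As a rough illustration of how such usage rates could be measured over a set of comments, the sketch below counts comments that contain formatting characters or repeated characters. The regular expressions, the three-character threshold for "repeated", and the sample comments are all assumptions made for this example; they are not taken from the XDAC paper.

```python
import re

# Assumed patterns for illustration; not the definitions used by XDAC.
REPEAT_RE = re.compile(r"(\S)\1{2,}")   # same non-space character 3+ times in a row
FORMAT_RE = re.compile(r"\n| {2,}")     # a line break, or a run of 2+ spaces


def feature_rates(comments: list[str]) -> dict[str, float]:
    """Fraction of comments containing each surface feature."""
    n = len(comments)
    if n == 0:
        return {"repeated": 0.0, "formatting": 0.0}
    repeated = sum(bool(REPEAT_RE.search(c)) for c in comments)
    formatting = sum(bool(FORMAT_RE.search(c)) for c in comments)
    return {"repeated": repeated / n, "formatting": formatting / n}


# Made-up sample comments, for demonstration only.
sample = [
    "ㅋㅋㅋㅋ 진짜 웃기다",
    "이 기사에 대해 동의하는 것 같다.",
    "와!\n대박",
    "좋은  기사네요",
]
rates = feature_rates(sample)
print(rates)
```

Running the same function separately over human-written and AI-generated comments would give a side-by-side comparison of the kind reported above.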

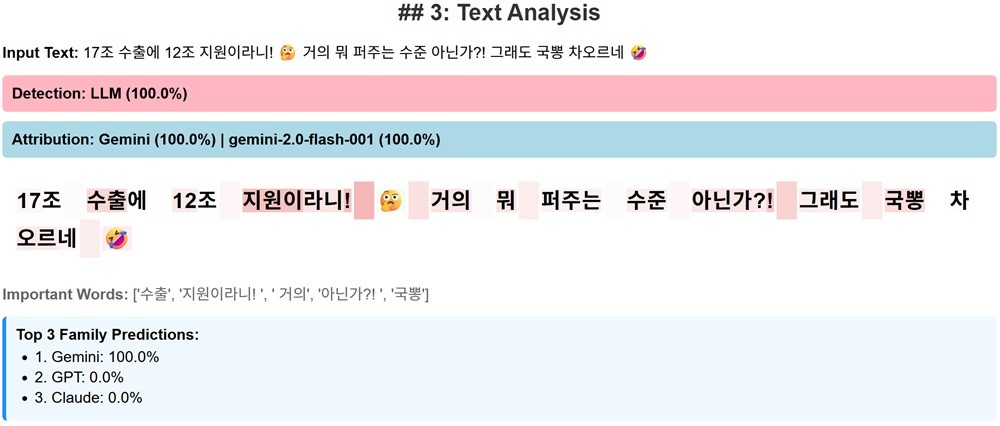

XDAC captures these distinctions to boost detection accuracy. It converts formatting characters (line breaks, multiple spaces) and repeated-character patterns into machine-readable features. It also learns each LLM’s unique linguistic fingerprint, enabling it to identify which model generated a given comment.
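One plausible way to make formatting and repetition visible to a model is to rewrite them as explicit marker tokens before tokenization. The sketch below is a minimal illustration under that assumption: the marker strings `<NL>`, `<SP>`, and `<REP>` and the function `encode_comment` are hypothetical, and the article does not describe the encoding XDAC actually uses.

```python
import re

# Hypothetical marker tokens; XDAC's actual encoding is not specified in the article.
NEWLINE_TOKEN = "<NL>"
SPACES_TOKEN = "<SP>"
REPEAT_TOKEN = "<REP>"


def encode_comment(text: str) -> str:
    """Rewrite formatting and repeated characters as explicit marker tokens."""
    # Collapse runs of 3+ identical non-space characters to one character plus a marker.
    text = re.sub(r"(\S)\1{2,}", lambda m: m.group(1) + REPEAT_TOKEN, text)
    # Mark runs of 2+ spaces, which would otherwise be lost in whitespace cleanup.
    text = re.sub(r" {2,}", f" {SPACES_TOKEN} ", text)
    # Mark line breaks.
    text = text.replace("\n", f" {NEWLINE_TOKEN} ")
    # Collapse whatever whitespace remains to single spaces.
    return " ".join(text.split())


encoded = encode_comment("ㅋㅋㅋㅋ 대박\n진짜  웃김")
print(encoded)  # ㅋ<REP> 대박 <NL> 진짜 <SP> 웃김
```

Keeping these signals as tokens means a downstream classifier can still see them after ordinary text cleanup, which would otherwise strip line breaks and extra spaces.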

With these optimizations, XDAC achieves a 98.5% F1 score in detecting AI-generated comments, a 68% improvement over previous methods, and records an 84.3% F1 score in identifying the specific LLM used for generation.
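For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision and recall. The sketch below shows the standard formula; the counts in the example are invented purely to illustrate the calculation and are not results from the paper.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall, from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Invented counts for illustration only (not taken from the XDAC evaluation):
# 985 AI comments correctly flagged, 15 human comments wrongly flagged,
# and 15 AI comments missed.
example_f1 = f1_score(tp=985, fp=15, fn=15)
print(f"F1 = {example_f1:.3f}")
```

Because F1 penalizes both false alarms and misses, a high score indicates that the detector rarely flags genuine human comments while still catching most AI-generated ones.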

Professor Yongdae Kim emphasized, “This study is the world’s first to detect short comments written by generative AI with high accuracy and to attribute them to their source model. It lays a crucial technical foundation for countering AI-based public opinion manipulation.”

The team also notes that XDAC’s detection capability may have a deterrent effect, much like sobriety checkpoints, drug testing, or CCTV installation: its mere existence can reduce the incentive to misuse AI.

Platform operators can deploy XDAC to monitor and respond to suspicious accounts or coordinated manipulation attempts, with strong potential for expansion into real-time surveillance systems or automated countermeasures.

The core contribution of this work is the XAI-driven detection framework. It has been accepted to the main conference of ACL 2025, the premier venue in natural language processing, taking place on July 27th.

※Paper Title:

XDAC: XAI-Driven Detection and Attribution of LLM-Generated News Comments in Korean

※Full Paper:

https://github.com/airobotlab/XDAC/blob/main/paper/250611_XDAC_ACL2025_camera_ready.pdf

This research was conducted under the supervision of Professor Yongdae Kim at KAIST, with Senior Researcher Wooyoung Go (NSR and PhD candidate at KAIST) as the first author, and Professors Hyoungshick Kim (Sungkyunkwan University) and Alice Oh (KAIST) as co-authors.