As artificial intelligence has become better at understanding both language and visual information, interest is growing in Physical AI: AI systems that can comprehend high-level human instructions and perform physical tasks such as object manipulation or navigation in the real world. Physical AI integrates large language models (LLMs), vision-language models (VLMs), reinforcement learning (RL), and robot control technologies, and is expected to become a cornerstone of next-generation intelligent robotics.

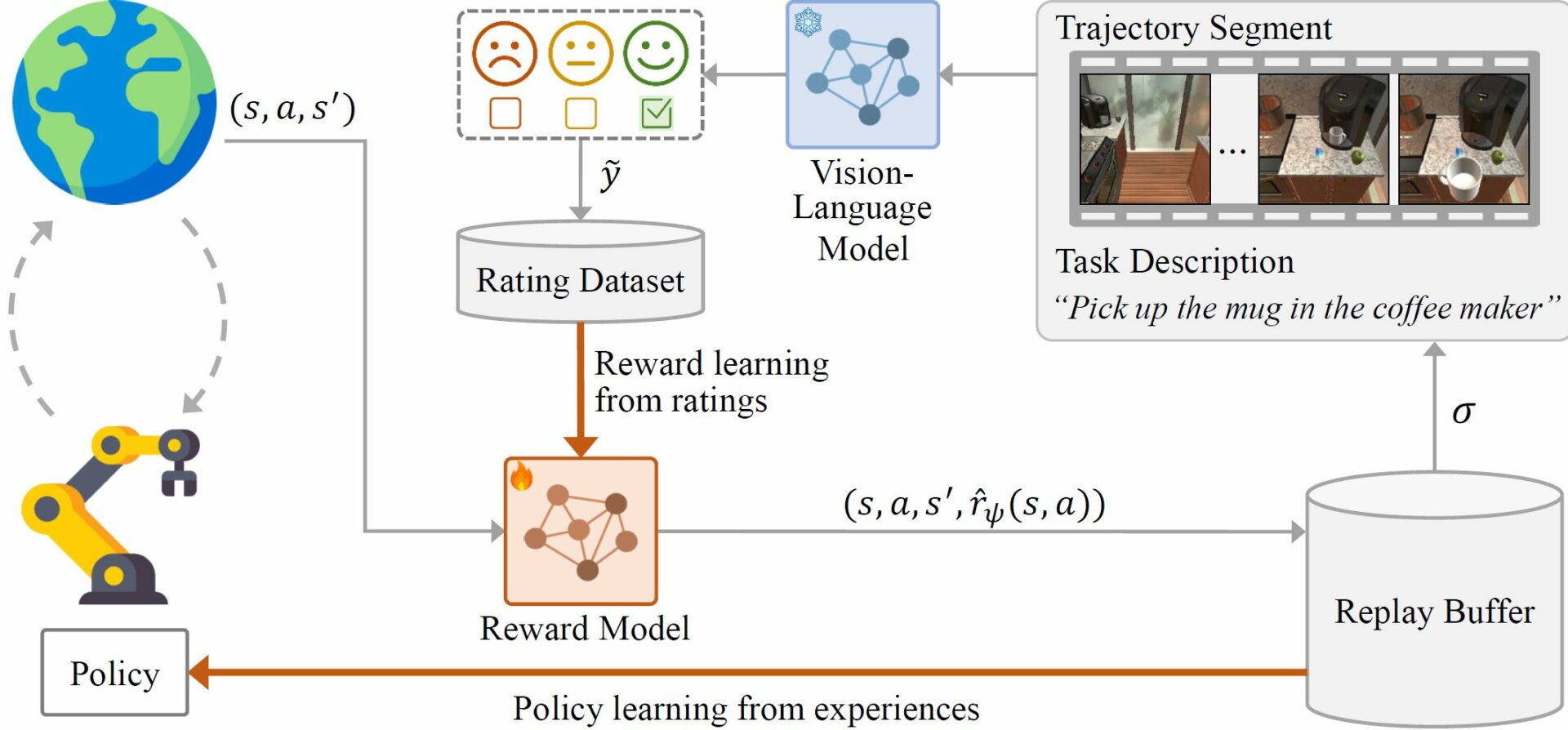

To advance research in Physical AI, an EE research team led by Professor Chang D. Yoo (U-AIM: Artificial Intelligence & Machine Learning Lab) has developed two novel reinforcement learning frameworks that leverage large vision-language models. The first, presented at ICML 2025, is titled ERL-VLM (Enhancing Rating-based Learning to Effectively Leverage Feedback from Vision-Language Models). In this framework, a VLM provides absolute rating-based feedback on robot behavior, and that feedback is used to train a reward function. The learned reward function is then used to train a robot control AI model. This method removes the need to manually craft complex reward functions and enables the efficient collection of large-scale feedback, significantly reducing the time and cost required for training.

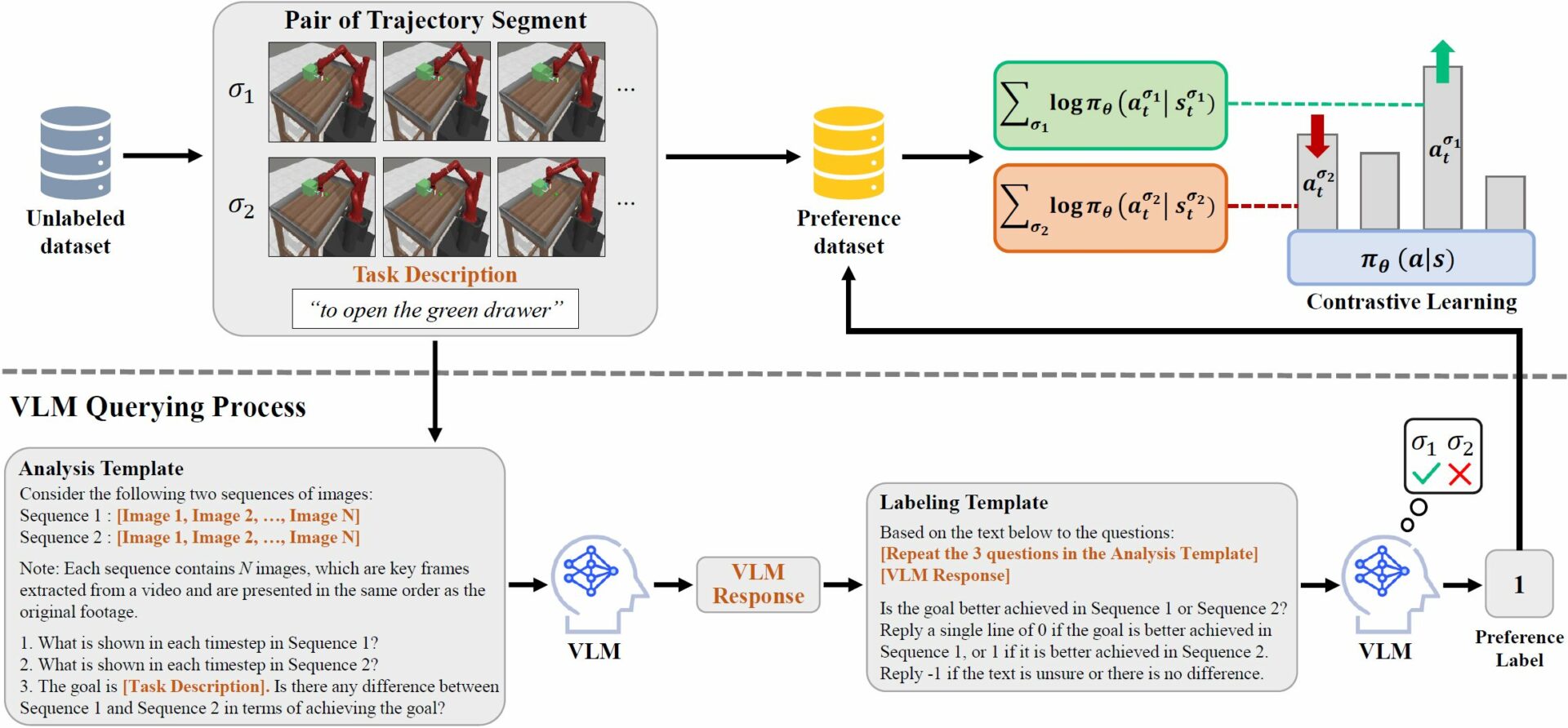
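
The rating-to-reward step described above can be illustrated with a minimal sketch. It is not the ERL-VLM implementation: the linear reward model, the squared-error objective, the two-element state features, and the `mock_vlm_rating` function (a stand-in for querying a real VLM) are all assumptions made purely for illustration.

```python
import random

def predict_reward(weights, features):
    """Linear reward model: r(s) = w . s (an illustrative assumption)."""
    return sum(w * x for w, x in zip(weights, features))

def mock_vlm_rating(features, num_ratings=3):
    """Hypothetical stand-in for a VLM query.

    Rates a state from 0 (bad) to num_ratings - 1 (good) based on the first
    feature, which we pretend measures task progress in [0, 1).
    """
    progress = features[0]
    return min(num_ratings - 1, int(progress * num_ratings))

def train_reward_model(samples, num_ratings, lr=0.1, epochs=200):
    """Fit the reward model to normalized ratings with plain SGD.

    samples: list of (features, rating) pairs, rating in 0..num_ratings - 1.
    The squared-error loss is a simplification chosen for this sketch.
    """
    dim = len(samples[0][0])
    weights = [0.0] * dim
    for _ in range(epochs):
        for features, rating in samples:
            target = rating / (num_ratings - 1)  # map rating to [0, 1]
            error = predict_reward(weights, features) - target
            for i in range(dim):
                weights[i] -= lr * error * features[i]
    return weights

random.seed(0)
# Each state is [progress, bias term]; both are made-up features.
states = [[random.random(), 1.0] for _ in range(50)]
samples = [(s, mock_vlm_rating(s)) for s in states]
weights = train_reward_model(samples, num_ratings=3)

# The learned reward should rank a high-progress state above a low-progress one.
high = predict_reward(weights, [0.9, 1.0])
low = predict_reward(weights, [0.1, 1.0])
print(high > low)
```

In a full pipeline, a learned reward of this kind would replace a hand-written reward function inside a standard RL algorithm that trains the control policy.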

The second, presented at IROS 2025, is titled PLARE (Preference-based Learning from Vision-Language Model without Reward Estimation). Unlike previous approaches, PLARE skips reward modeling entirely and instead uses pairwise preference feedback from a VLM to directly train the robot control AI model. This makes the training process simpler and more computationally efficient, without compromising performance.

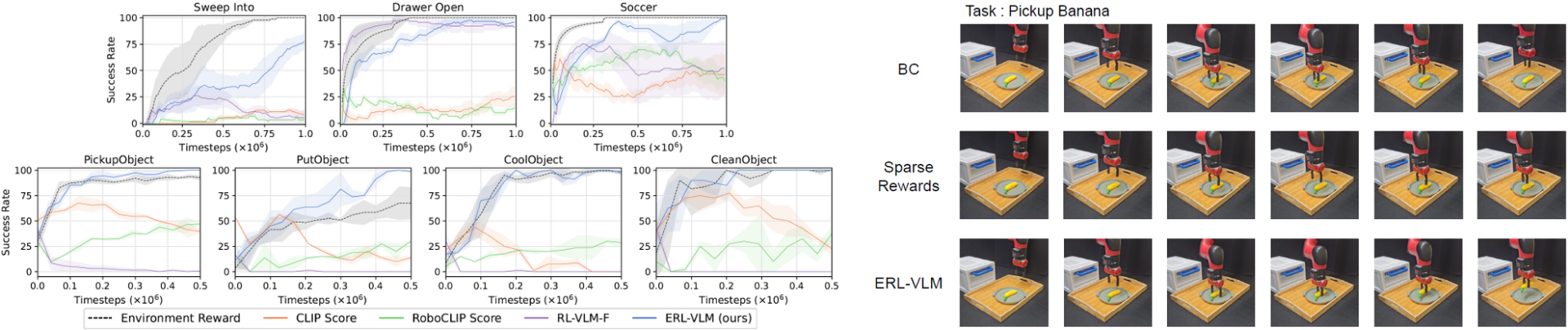
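
To make the idea of training a policy directly from pairwise preferences more concrete, here is a minimal sketch. It is not the PLARE objective: the tabular softmax policy, the logistic (Bradley-Terry style) loss on the difference of segment log-probabilities, and the hard-coded preference (standing in for a VLM's answer) are all assumptions chosen for illustration. The point is only that no reward model appears anywhere in the update.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def segment_log_prob(logits, segment):
    """Sum of log pi(a | s) over the (state, action) pairs of a segment."""
    return sum(math.log(softmax(logits[s])[a]) for s, a in segment)

def preference_update(logits, preferred, rejected, lr=0.5, alpha=1.0):
    """One gradient step on -log sigmoid(margin), updating logits in place.

    margin = alpha * (log-prob of preferred segment - log-prob of rejected).
    Returns the margin measured before the update.
    """
    margin = alpha * (segment_log_prob(logits, preferred)
                      - segment_log_prob(logits, rejected))
    # Derivative of log sigmoid(margin) w.r.t. margin is 1 - sigmoid(margin).
    scale = lr * alpha / (1.0 + math.exp(margin))
    # Freeze action probabilities so every term uses pre-update values.
    probs = [softmax(row) for row in logits]
    for segment, sign in ((preferred, 1.0), (rejected, -1.0)):
        for s, a in segment:
            for b in range(len(logits[s])):
                indicator = 1.0 if b == a else 0.0
                logits[s][b] += sign * scale * (indicator - probs[s][b])
    return margin

# Two states, two actions, uniform initial policy.
logits = [[0.0, 0.0], [0.0, 0.0]]
# Hypothetical VLM feedback: the first segment is preferred over the second.
preferred = [(0, 1), (1, 0)]
rejected = [(0, 0), (1, 1)]

for _ in range(100):
    preference_update(logits, preferred, rejected)

# The policy now favors the actions taken in the preferred segment.
print(softmax(logits[0])[1] > 0.5, softmax(logits[1])[0] > 0.5)
```

Compared with the rating-based route, this style of update removes the intermediate reward-fitting stage, which is the source of the simplicity and efficiency the article attributes to PLARE.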

Both frameworks outperformed existing methods not only in simulation environments but also in real-world experiments with physical robots, achieving higher success rates and more stable behavior, which supports their practical applicability.

By leveraging large vision-language models, this research provides a more efficient and practical way to enable robots to understand and act upon human language instructions, bringing us a step closer to the realization of Physical AI. Moving forward, Professor Chang D. Yoo's team plans to continue advancing research in robot control, vision-language-based interaction, and scalable feedback learning to further develop key technologies in Physical AI.