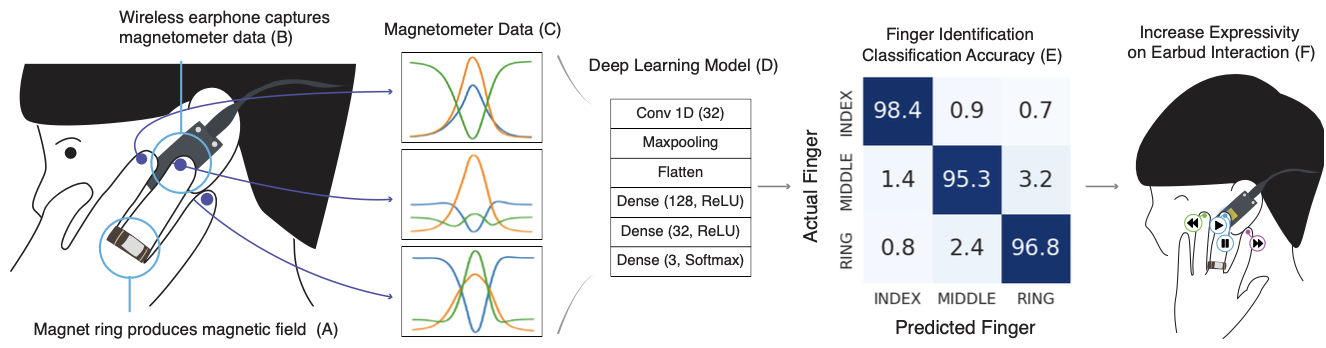

BudsID uses magnetic sensing to distinguish between fingers based on magnetic field changes that occur when a user wearing a magnetic ring touches an earbud. A lightweight deep learning model identifies which finger is used, allowing different functions to be assigned to each finger, thus expanding the input expressiveness of wireless earbuds.

This magnetic sensing system for wireless earbuds allows users to go beyond traditional interactions like play, pause, or call handling. By mapping different functions or input commands to individual fingers, the interaction capabilities can extend to augmented reality device control and beyond.
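As a rough illustration of per-finger mapping, the sketch below routes a predicted finger label to a command. The finger labels, command names, and the `dispatch` helper are hypothetical and are not taken from the BudsID code; the classifier that would produce the label is assumed to exist elsewhere.

```python
from typing import Dict

# Hypothetical mapping from a predicted finger label to an earbud command.
# The real BudsID class set and command names may differ.
FINGER_ACTIONS: Dict[str, str] = {
    "thumb": "play_pause",
    "index": "next_track",
    "middle": "previous_track",
}


def dispatch(finger: str, actions: Dict[str, str] = FINGER_ACTIONS) -> str:
    """Return the command assigned to the given finger.

    Labels that have no assigned command fall back to "ignore",
    so an unexpected prediction never triggers an action.
    """
    return actions.get(finger, "ignore")


if __name__ == "__main__":
    for label in ("index", "thumb", "pinky"):
        print(label, "->", dispatch(label))
```

Keeping the mapping in a plain dictionary means an application could reassign commands per context, for example one table for music playback and another for augmented reality control, without touching the recognition step.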

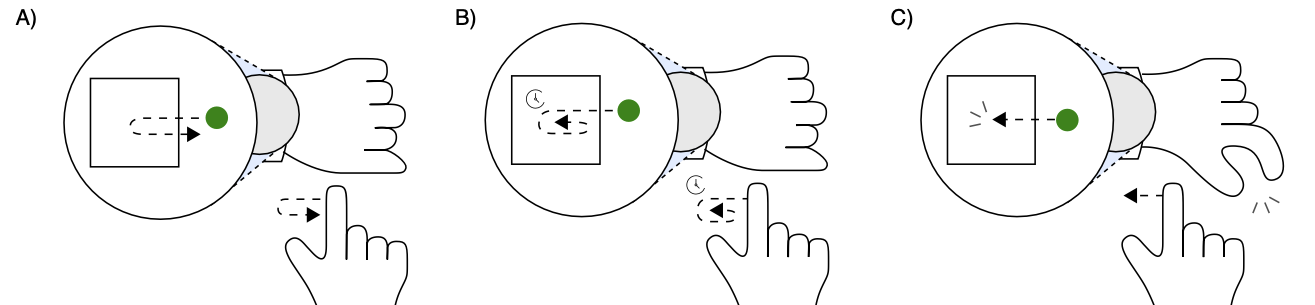

SonarSelect leverages active sonar sensing using only the built-in microphone, speaker, and motion sensors of a commercial smartwatch. It recognizes mid-air gestures around the device, enabling precise pointer manipulation and target selection.

This gesture interaction technology, based on finger movements detected via active sonar, addresses two usability problems of smartwatches: small screens and fingers occluding the display during touch. It enables fine-grained 3D spatial interaction around the device.
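The general principle behind active sonar ranging can be sketched in a few lines: the speaker emits a signal, the microphone records its echo, and the round-trip delay gives the distance to the reflecting finger. This is only the textbook time-of-flight relation, not SonarSelect's actual processing pipeline, which is not described here. The function names, the 48 kHz sample rate, and the 343 m/s speed of sound (air at roughly 20 °C) are assumptions for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees Celsius


def echo_distance_m(delay_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert a round-trip echo delay in seconds to a one-way distance in meters.

    The sound travels to the reflector and back, so the path is halved.
    """
    if delay_s < 0:
        raise ValueError("delay_s must be non-negative")
    return speed_m_s * delay_s / 2.0


def lag_to_distance_m(lag_samples: int, sample_rate_hz: int = 48_000) -> float:
    """Convert an echo lag measured in audio samples to a distance in meters.

    The default sample rate is a hypothetical value, not a device specification.
    """
    return echo_distance_m(lag_samples / sample_rate_hz)


if __name__ == "__main__":
    # An echo arriving 48 samples after emission at 48 kHz is a 1 ms round trip.
    print(f"{lag_to_distance_m(48):.4f} m")
```

At these assumed values, one sample of lag corresponds to a few millimeters of distance, which suggests why ordinary audio hardware can in principle resolve finger movements near the device.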

Jiwan Kim, first author of both papers, said, “We hope our research into around-device sensing for wearable interaction technologies will help shape the future of how people interact with wearable computing devices.”

Professor Ian Oakley’s research team has released both systems as open source, so researchers and industry professionals can freely use the technology.

[BudsID]

- Title: BudsID: Mobile-Ready and Expressive Finger Identification Input for Earbuds

- Authors: Jiwan Kim, Mingyu Han, and Ian Oakley

- DOI: https://dl.acm.org/doi/10.1145/3706598.3714133

- Open Source: https://github.com/witlab-kaist/BudsID

[SonarSelect]

- Title: Cross, Dwell, or Pinch: Designing and Evaluating Around-Device Selection Methods for Unmodified Smartwatches

- Authors: Jiwan Kim, Jiwan Son, and Ian Oakley

- DOI: https://dl.acm.org/doi/10.1145/3706598.3714308

- Open Source: https://github.com/witlab-kaist/SonarSelect

This research was supported by the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT (grants 2023R1A2C1004046, RS-2024-00407732) and the Institute for Information & Communications Technology Planning & Evaluation (IITP) under the University ICT Research Center (ITRC) support program (IITP-2024-RS-2024-00436398).