Imagine if music creators had a collaborator who could help brainstorm initial ideas, assist when they hit a creative block, and genuinely support exploring various musical directions. A research team at KAIST’s School of Electrical Engineering has developed an AI tool designed to be such a co-creative partner in music composition.

Professor Sung-Ju Lee’s research team at the School of Electrical Engineering has developed an AI-based music creation support system named Amuse. The research received the Best Paper Award, an honor given to only the top 1% of papers, at CHI (ACM Conference on Human Factors in Computing Systems), the world’s leading conference in human-computer interaction, held in Yokohama, Japan, from April 26 to May 1.

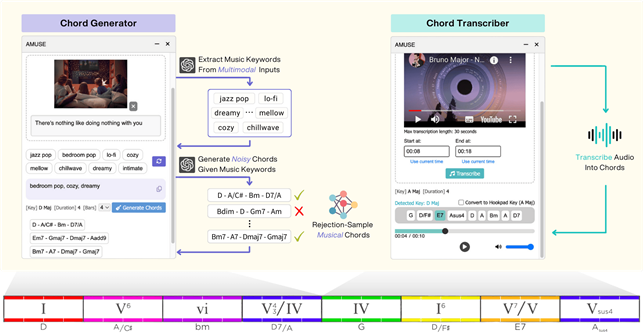

Amuse is an AI-based system that supports songwriting by transforming diverse forms of inspiration, such as text, images, or audio, into harmonic structures (chord progressions). For example, when a user inputs a phrase like “memories of a warm summer beach,” an image, or a sound clip, Amuse generates and suggests a chord progression that matches the mood and atmosphere of the inspiration.

Unlike conventional generative AI, Amuse respects the user’s creative process and offers a flexible, interactive approach that allows users to modify and integrate the AI’s suggestions, encouraging natural and creative exploration.

The core technology of the Amuse system is a hybrid generation method. It first uses a large language model to generate chords based on the user’s textual input. Then, a second AI model trained on actual music data filters out unnatural or awkward results through a process called rejection sampling. Combining the two steps yields musically coherent outcomes.
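The propose-then-filter idea can be illustrated with a minimal Python sketch. It is not the Amuse implementation: the uniform random proposer stands in for the large language model, the hand-picked transition table stands in for the model trained on music data, and every name here (`propose_progression`, `naturalness`, `rejection_sample`, `VOCAB`, `COMMON_MOVES`) is hypothetical. The acceptance rule, keeping a candidate with probability equal to its score, is likewise an assumption, since the article does not state the rule Amuse uses.

```python
import random
from typing import Callable, List

Progression = List[str]

# Hypothetical chord vocabulary (C major), chosen only for illustration.
VOCAB = ["C", "Dm", "Em", "F", "G", "Am"]

# Hand-picked "common" chord moves: a toy stand-in for a model
# trained on real music data.
COMMON_MOVES = {
    ("C", "F"), ("C", "G"), ("C", "Am"), ("F", "G"), ("F", "C"),
    ("G", "C"), ("G", "Am"), ("Am", "F"), ("Am", "Dm"), ("Dm", "G"),
    ("Em", "Am"), ("Em", "F"),
}


def propose_progression(rng: random.Random, length: int = 4) -> Progression:
    """Stand-in for the LLM: draw chords uniformly at random."""
    return [rng.choice(VOCAB) for _ in range(length)]


def naturalness(progression: Progression) -> float:
    """Score in [0, 1]: fraction of adjacent chord pairs found in COMMON_MOVES."""
    pairs = list(zip(progression, progression[1:]))
    if not pairs:
        return 0.0
    return sum(pair in COMMON_MOVES for pair in pairs) / len(pairs)


def rejection_sample(
    propose: Callable[[random.Random], Progression],
    score: Callable[[Progression], float],
    n_keep: int,
    max_tries: int,
    rng: random.Random,
) -> List[Progression]:
    """Keep each proposed candidate with probability equal to its score."""
    kept: List[Progression] = []
    for _ in range(max_tries):
        if len(kept) >= n_keep:
            break
        candidate = propose(rng)
        # Accept/reject step: a score of 0 is never accepted,
        # a score of 1 is always accepted.
        if rng.random() < score(candidate):
            kept.append(candidate)
    return kept


rng = random.Random(0)
for chords in rejection_sample(propose_progression, naturalness,
                               n_keep=3, max_tries=1000, rng=rng):
    print(" - ".join(chords), round(naturalness(chords), 2))
```

Under this rule, accepted progressions follow the proposer's distribution reweighted by the score, so awkward candidates are rarely kept while the variety of the proposer is preserved.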

The research team conducted user studies with actual musicians, and the findings suggest that Amuse has strong potential not just as a music-generating tool but as a co-creative partner that enables collaboration between humans and AI.

The study, authored by Ph.D. candidate Yewon Kim (KAIST), Professor Sung-Ju Lee (KAIST), and Professor Chris Donahue (Carnegie Mellon University), demonstrates the creative potential of AI systems for both academia and industry.

- Paper Title: Amuse: Human-AI Collaborative Songwriting with Multimodal Inspirations

- DOI: https://doi.org/10.1145/3706598.3713818

- Project Website: https://nmsl.kaist.ac.kr/projects/amuse/

Professor Sung-Ju Lee stated, “Recent generative AI technologies have raised concerns due to the risk of replicating copyrighted content or producing results that ignore the creator’s intent. Our team was aware of these issues and focused on what creators actually need, prioritizing user-centric design in developing this AI system.”

He added, “Amuse is an attempt to explore collaborative possibilities with AI while maintaining the creator’s agency. We expect it to be a starting point that guides future development of AI-powered music tools toward a more creator-friendly direction.”

This research was supported by the National Research Foundation of Korea (NRF), funded by the Ministry of Science and ICT (Project No. RS-2024-00337007).