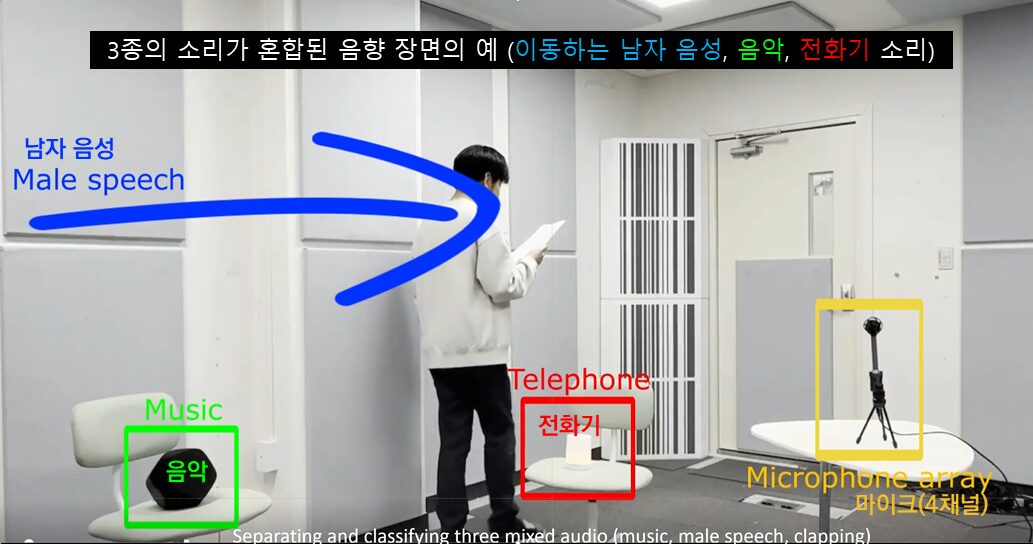

Acoustic source separation and classification is a key next-generation AI technology for early detection of anomalies in drone operations, piping faults, or border surveillance, and for enabling spatial audio editing in AR/VR content production.

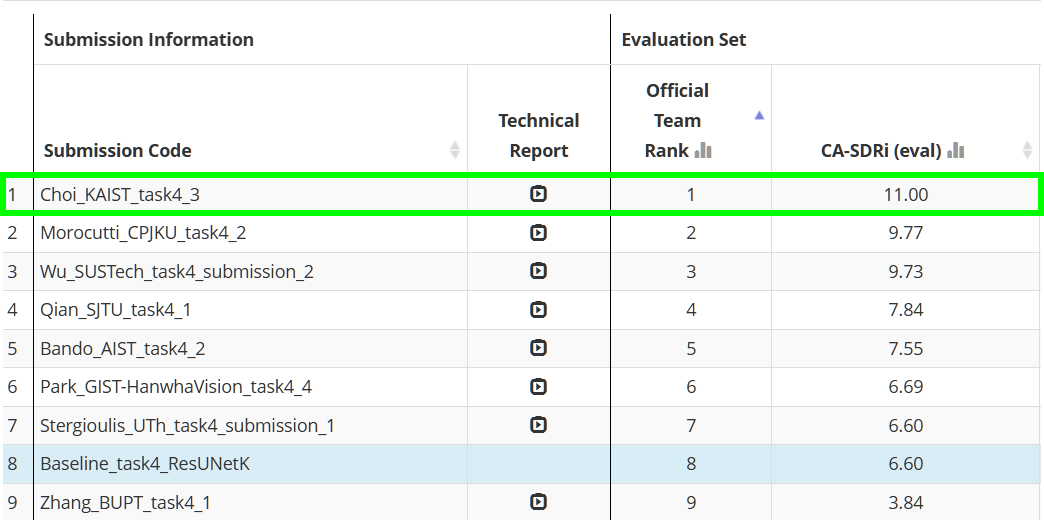

Professor Jung-Woo Choi’s research team from the School of Electrical Engineering won first place in the “Spatial Semantic Segmentation of Sound Scenes” task of the “IEEE DCASE Challenge 2025.”

This year’s challenge featured 86 teams competing across six tasks. In its first participation, the KAIST team ranked first in Task 4, Spatial Semantic Segmentation of Sound Scenes, a highly demanding task that requires analyzing spatial information in multi-channel audio signals with overlapping sound sources. The goal was to separate individual sounds and classify them into 18 predefined categories. The team, composed of Dr. Dongheon Lee, integrated MS-PhD student Younghoo Kwon, and MS student Dohwan Kim, will present its results at the DCASE Workshop in Barcelona this October.

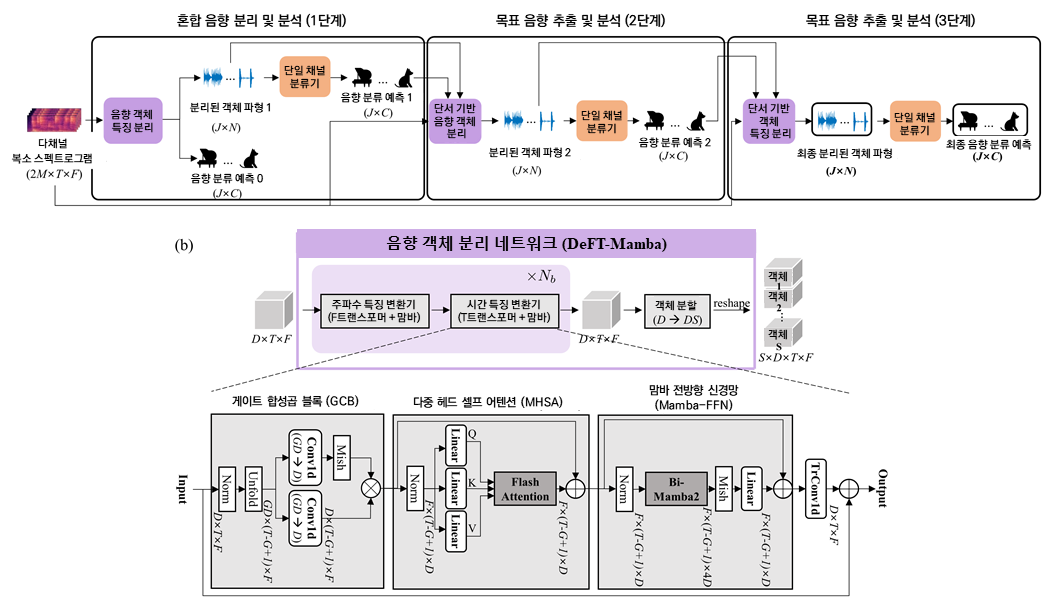

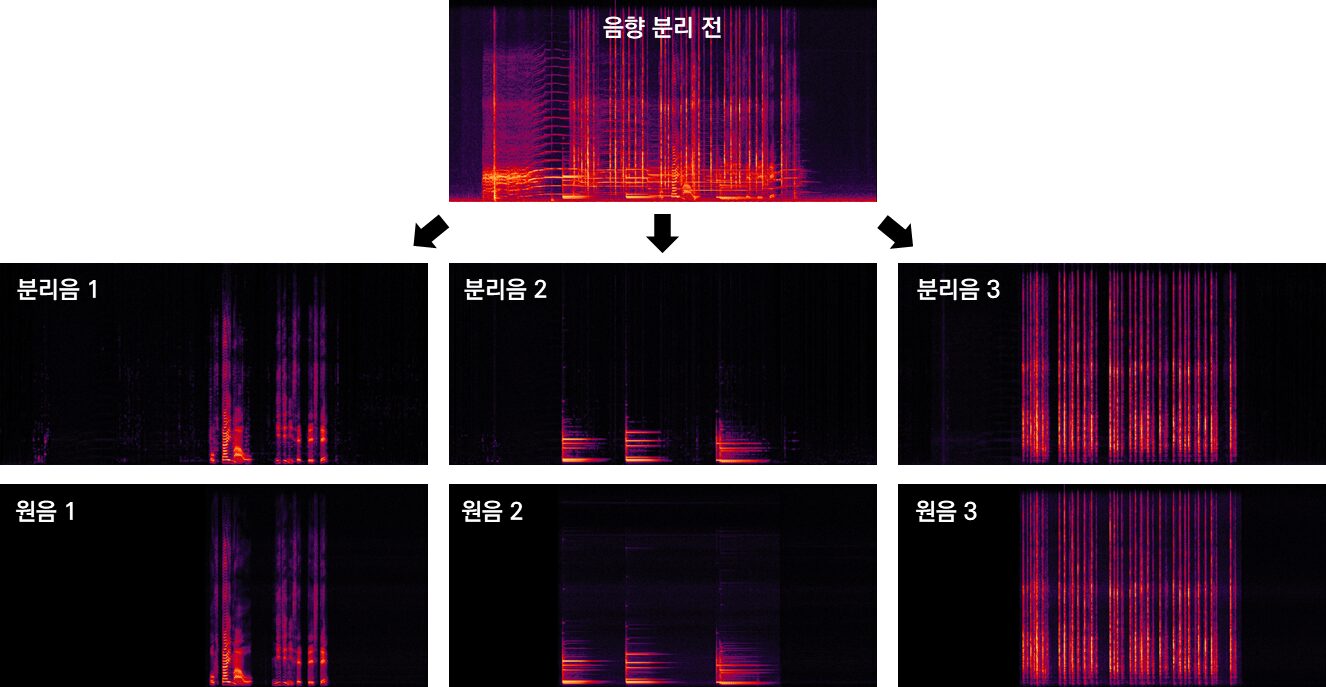

Earlier this year, Dr. Dongheon Lee developed a state-of-the-art sound source separation AI that combines Transformer and Mamba architectures. For the challenge, the team, led by Younghoo Kwon, established a chain-of-inference architecture: a first stage separates the waveforms and identifies the source types, and the next stage refines the estimates by using those estimated waveforms and classes as clues for target signal extraction.

This chain-of-inference approach is inspired by the human auditory scene analysis mechanism, which isolates individual sounds by focusing on incomplete clues such as sound type, rhythm, or direction.
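The two-stage flow described above can be sketched in a few lines of Python. This is only an illustration of the data flow, not the team's model: `chain_of_inference`, `toy_first_stage`, `toy_refine_stage`, and the class labels are hypothetical names, and the toy stages are simple arithmetic placeholders standing in for neural networks.

```python
from typing import Callable, Dict, List

Waveform = List[float]
Estimates = Dict[str, Waveform]  # class label -> estimated waveform


def chain_of_inference(
    mixture: Waveform,
    first_stage: Callable[[Waveform], Estimates],
    refine_stage: Callable[[Waveform, Estimates], Estimates],
    num_refinements: int = 1,
) -> Estimates:
    """Run a first separation/classification pass, then refine it.

    Each refinement receives the mixture plus the previous estimates
    (waveforms and class labels) as clues.
    """
    estimates = first_stage(mixture)
    for _ in range(num_refinements):
        estimates = refine_stage(mixture, estimates)
    return estimates


def toy_first_stage(mixture: Waveform) -> Estimates:
    # Placeholder (assumption): a real system would use a trained
    # separation and classification model here.
    return {
        "speech": [0.5 * x for x in mixture],
        "dog_bark": [0.25 * x for x in mixture],
    }


def toy_refine_stage(mixture: Waveform, clues: Estimates) -> Estimates:
    # Placeholder (assumption): share the unexplained residual equally
    # among the sources named in the clues, so the refined estimates
    # sum back to the mixture.
    n = len(clues)
    residual = [
        x - sum(wave[i] for wave in clues.values())
        for i, x in enumerate(mixture)
    ]
    return {
        label: [s + r / n for s, r in zip(wave, residual)]
        for label, wave in clues.items()
    }
```

Calling `chain_of_inference([1.0, -2.0, 4.0], toy_first_stage, toy_refine_stage)` returns one refined waveform per label. The point of the structure is that the second stage sees both the mixture and the first stage's guesses, rather than starting from the mixture alone.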

On the evaluation metric, CA-SDRi (Class-aware Signal-to-Distortion Ratio improvement)*, the team was the only participant to achieve a double-digit improvement, reaching 11 dB and demonstrating its technical excellence.

*CA-SDRi measures how much clearer and less distorted the separated target sound is compared with the original mixture.
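To make the idea behind the metric concrete, here is a minimal Python sketch of a signal-to-distortion ratio improvement and a simplified class-aware average. The function names are hypothetical, and the class-aware rule used here (average over the union of true and predicted labels, with a fixed penalty for a missed or spurious label) is an assumption for illustration; the official challenge definition should be taken from the DCASE task description.

```python
import math


def sdr_db(reference, estimate):
    """Signal-to-distortion ratio in dB: 10*log10(|s|^2 / |s - s_hat|^2)."""
    eps = 1e-12  # guards against division by zero and log(0)
    signal_energy = sum(s * s for s in reference)
    error_energy = sum((s - e) ** 2 for s, e in zip(reference, estimate))
    return 10.0 * math.log10((signal_energy + eps) / (error_energy + eps))


def sdr_improvement_db(reference, estimate, mixture):
    """How many dB closer the estimate is to the reference than the raw mixture."""
    return sdr_db(reference, estimate) - sdr_db(reference, mixture)


def class_aware_sdri(references, estimates, mixture, penalty=0.0):
    """Simplified class-aware average (assumed rule, see the text above).

    references, estimates: dicts mapping class label -> waveform.
    Correctly predicted labels contribute their SDR improvement;
    missed or spurious labels contribute `penalty`.
    """
    labels = set(references) | set(estimates)
    if not labels:
        return 0.0
    total = 0.0
    for label in labels:
        if label in references and label in estimates:
            total += sdr_improvement_db(
                references[label], estimates[label], mixture
            )
        else:
            total += penalty
    return total / len(labels)
```

Under this simplified rule, a system that separates a sound cleanly but also reports a class that is not present is pulled toward the penalty value, so separation quality and classification accuracy are scored together.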

Professor Choi remarked, “I am proud that the world-leading acoustic separation AI models our team has developed over the past three years have now received formal recognition. Despite the greatly increased difficulty and a development window limited by other conference schedules and final exams, each member carried out focused research that led to first place.”

The “IEEE DCASE Challenge 2025” was held online, with submissions accepted from April 1 to June 15 and results announced on June 30. Since its inception in 2013 under the IEEE Signal Processing Society, the challenge has served as a global stage for AI models in the acoustic field.

This research was supported by the National Research Foundation of Korea’s Mid-Career Researcher Program and STEAM Research Project, funded by the Ministry of Education, and the Future Defense Research Center, funded by the Defense Acquisition Program Administration and the Agency for Defense Development.