Large AI models such as ChatGPT and DeepSeek are gaining attention as they’re being applied across diverse fields. These large language models (LLMs) require training on massive distributed systems composed of tens of thousands of data center GPUs. For example, the cost of training GPT-4 is estimated at approximately 140 billion won. A team of Korean researchers has developed a technology that optimizes parallelization configurations to increase GPU efficiency and significantly reduce training costs.

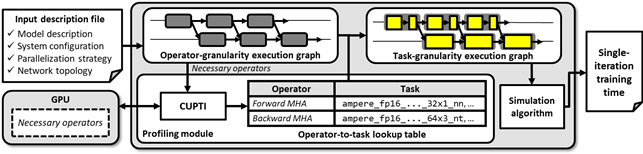

An EE research team led by Professor Minsoo Rhu, in collaboration with the Samsung Advanced Institute of Technology (SAIT), has developed a simulation framework called vTrain, which accurately predicts and optimizes the training time of LLMs in large-scale distributed environments.

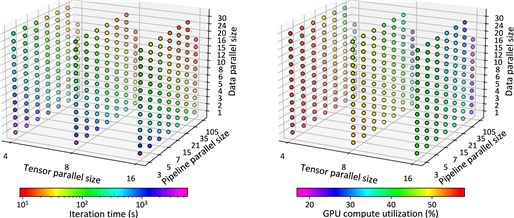

To efficiently train LLMs, it’s crucial to identify the optimal distributed training strategy. However, the vast number of potential strategies makes real-world testing prohibitively expensive and time-consuming. As a result, companies currently rely on a limited number of empirically validated strategies, causing inefficient GPU utilization and unnecessary increases in training costs. The absence of suitable large-scale simulation technology has significantly hindered companies from effectively addressing this issue.
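To give a rough sense of how quickly the number of candidate strategies grows, the sketch below enumerates ways to split a fixed GPU count across tensor, pipeline, and data parallelism. This three-way decomposition is a common convention for LLM training and is an assumption here, not something the article specifies. The function name `enumerate_configs` and the `gpus_per_node` limit are hypothetical and are not part of vTrain.

```python
def enumerate_configs(num_gpus, gpus_per_node=8):
    """List (tensor, pipeline, data) parallel degrees whose product is num_gpus.

    Assumption for illustration: tensor parallelism stays within one node,
    so its degree is capped at gpus_per_node.
    """
    configs = []
    for tp in range(1, gpus_per_node + 1):
        if num_gpus % tp != 0:
            continue  # tensor-parallel degree must divide the GPU count
        remaining = num_gpus // tp
        for pp in range(1, remaining + 1):
            if remaining % pp != 0:
                continue  # pipeline-parallel degree must divide what is left
            dp = remaining // pp  # data parallelism takes the remainder
            configs.append((tp, pp, dp))
    return configs


# Hypothetical cluster size, chosen only for illustration.
configs = enumerate_configs(num_gpus=64)
print(len(configs), "candidate configurations for 64 GPUs")
```

Even this small 64-GPU example yields 22 candidates before any other tunable, such as batch size, is considered, and measuring each one on real hardware would require a separate training run.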

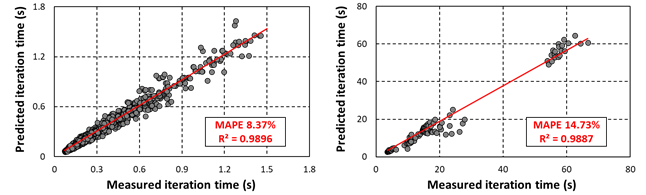

To overcome this limitation, Professor Rhu’s team developed vTrain, which can accurately predict training time and quickly evaluate various parallelization strategies. In experiments on multi-GPU systems, the team compared vTrain’s predictions against measured training times and found a mean absolute percentage error (MAPE) of 8.37% on single-node systems and 14.73% on multi-node systems.
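For readers unfamiliar with the metric, MAPE averages the relative gap between each prediction and its measurement. The following is a minimal sketch of the standard definition. The sample values are made up for illustration and are not taken from the vTrain dataset.

```python
def mape(predicted, measured):
    """Mean absolute percentage error, in percent."""
    if len(predicted) != len(measured) or not measured:
        raise ValueError("inputs must be non-empty and of equal length")
    # Average of |prediction - measurement| / |measurement|, scaled to percent.
    total = sum(abs(p - m) / abs(m) for p, m in zip(predicted, measured))
    return 100.0 * total / len(measured)


# Hypothetical per-iteration training times in seconds (illustrative only).
measured = [10.0, 20.0, 40.0]
predicted = [11.0, 18.0, 42.0]
error = mape(predicted, measured)
print(f"MAPE: {error:.2f}%")
```

A lower value means the simulator's predictions track the measured training times more closely.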

In collaboration with SAIT, the team has also released the vTrain framework as open-source software, together with more than 1,500 real-world training time measurements (https://github.com/VIA-Research/vTrain), for free use by AI researchers and companies.

Professor Rhu commented, “vTrain utilizes a profiling-based simulation approach to explore training strategies that enhance GPU utilization and reduce training costs compared to conventional empirical methods. With the open-source release, companies can now efficiently cut the costs associated with training ultra-large AI models.”

This research, with Ph.D. candidate Jehyeon Bang as first author, was presented in November 2024 at the IEEE/ACM International Symposium on Microarchitecture (MICRO), one of the premier conferences in computer architecture. (Paper title: “vTrain: A Simulation Framework for Evaluating Cost-Effective and Compute-Optimal Large Language Model Training”, https://doi.org/10.1109/MICRO61859.2024.00021)

This work was supported by the Ministry of Science and ICT, the National Research Foundation of Korea, the Information and Communication Technology Promotion Agency, and Samsung Electronics, as part of the SW Star Lab project for the development of core technologies in the SW computing industry.