Ph.D. candidate Hee Suk Yoon (Prof. Chang D. Yoo) wins Excellent Paper Award

<(From left) Professor Chang D. Yoo and integrated Ph.D. candidate Hee Suk Yoon>

The Korean Society for Artificial Intelligence holds conferences quarterly, and this year’s summer conference is scheduled to take place from August 15 to 17 at BEXCO in Busan.

Hee Suk Yoon, a Ph.D. candidate, has been selected to receive the Excellent Paper Award at this conference for his paper titled “BI-MDRG: Bridging Image History in Multimodal Dialogue Response Generation.”

The paper will also be presented at the European Conference on Computer Vision (ECCV) 2024, one of the top international conferences in computer vision, to be held in Milan, Italy, this September.

The details are as follows:

* Conference Name: 2024 Summer Conference of the Korean Artificial Intelligence Association

* Period: August 15 to 17, 2024

* Award Name: Excellent Paper Award

* Authors: Hee Suk Yoon, Eunseop Yoon, Chang D. Yoo (Supervising Professor)

* Paper Title: BI-MDRG: Bridging Image History in Multimodal Dialogue Response Generation

This research is regarded as a breakthrough because it overcomes a key limitation of existing large multimodal dialogue models such as ChatGPT: keeping generated images consistent throughout a multimodal dialogue.

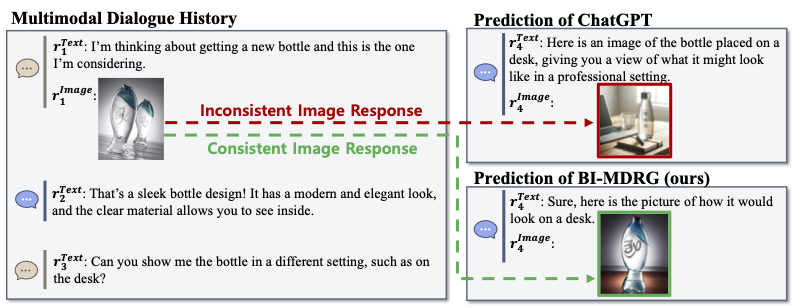

Figure 1: Image responses of ChatGPT and BI-MDRG (ours)

Traditional multimodal dialogue models first generate a textual description of the image to be returned and then pass that description to a text-to-image model.

Because the image must pass through text, visual details from earlier turns of the dialogue are often lost, which leads to inconsistent image responses.

In contrast, BI-MDRG, developed by Professor Yoo’s team, minimizes this loss of image information by referencing previous images directly, enabling consistent image responses.

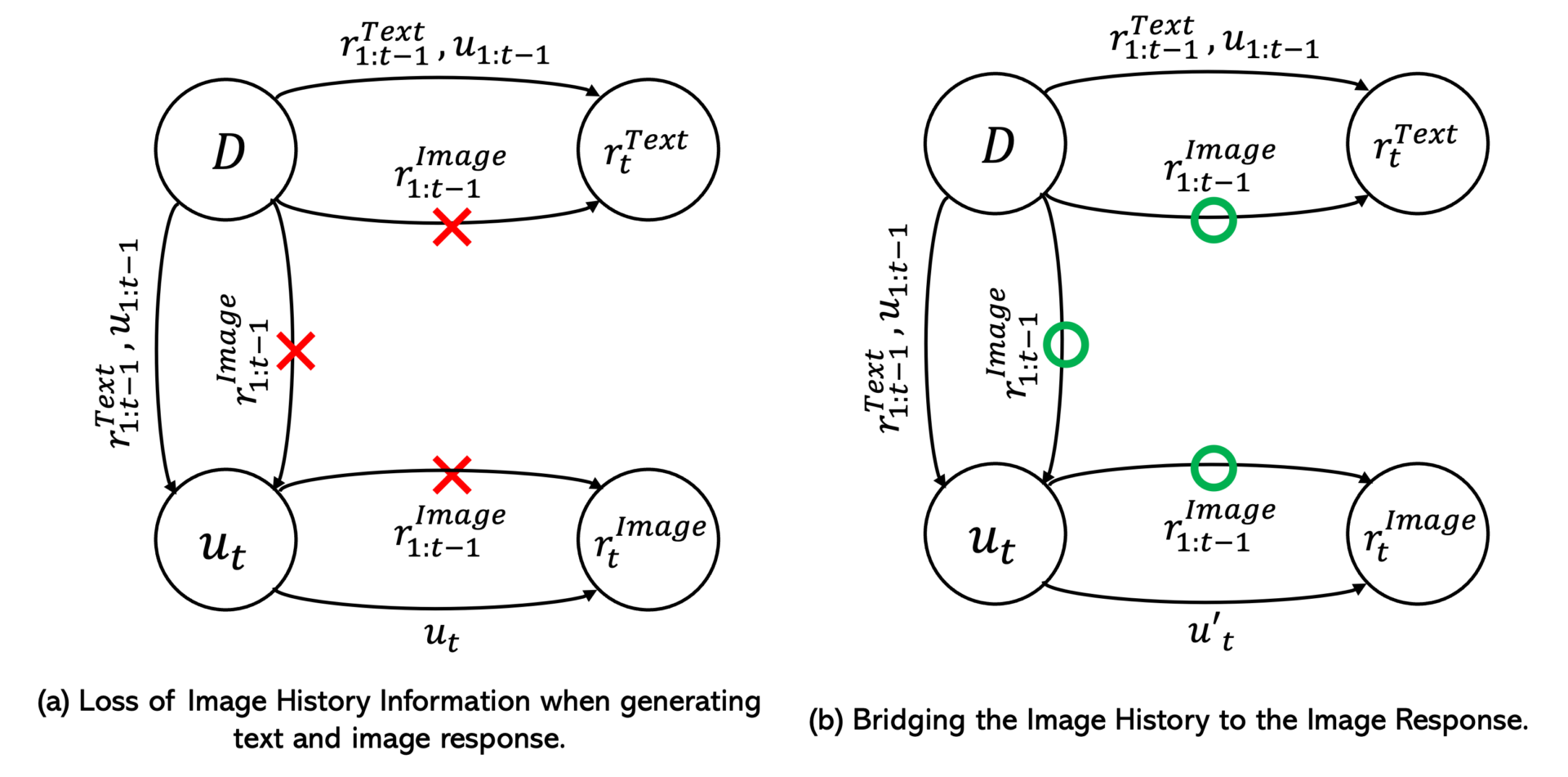

Figure 2: Frameworks of previous multimodal dialogue systems and our proposed BI-MDRG

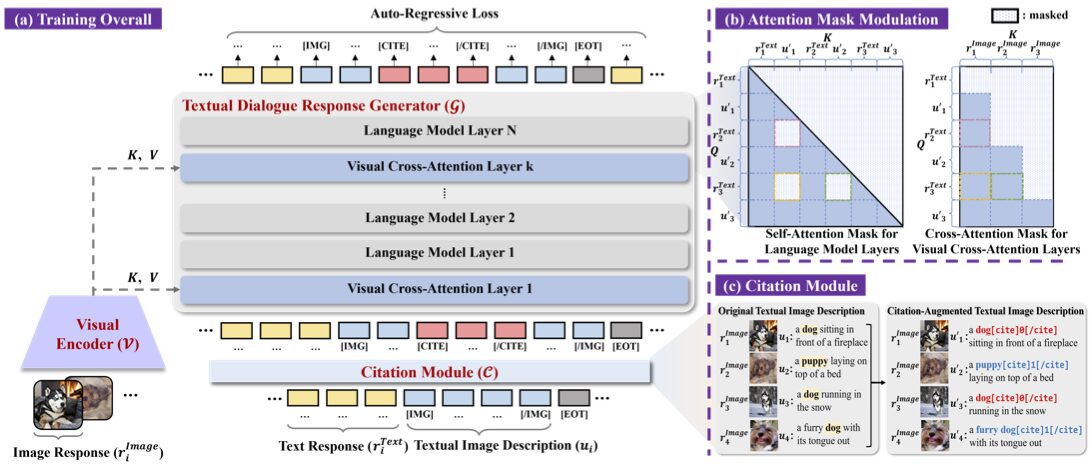

BI-MDRG addresses the loss of image information in existing multimodal dialogue models with two components: Attention Mask Modulation and a Citation Module.

Attention Mask Modulation lets the model attend directly to the image itself rather than to its textual description. The Citation Module attaches citation tags to the same objects wherever they appear in the conversation, so that image responses can directly reference the objects that must stay consistent.
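To make the idea of attention mask modulation more concrete, here is a minimal sketch in Python. It assumes a decoder that attends over a flattened dialogue in which every token is labeled as ordinary text, as an image’s visual tokens, or as the caption generated for that image. The segment labels and the `build_mask` function are hypothetical and this is not the authors’ implementation; it only illustrates the general idea of modifying a causal attention mask so that later text tokens cannot see an image’s caption and must rely on the image tokens instead.

```python
from typing import List

# Hypothetical segment labels for each token position in a flattened dialogue:
#   "text"    - ordinary dialogue text
#   "image"   - visual tokens of an image shared in the dialogue
#   "caption" - the textual description generated for that image
def build_mask(segments: List[str], block_captions: bool = True) -> List[List[bool]]:
    """Return mask[i][j] == True if position i may attend to position j."""
    n = len(segments)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):  # causal: only the current and earlier positions
            allowed = True
            # Assumed modulation: text tokens are blocked from caption tokens,
            # so they must draw on the image tokens themselves.
            if block_captions and segments[i] == "text" and segments[j] == "caption":
                allowed = False
            mask[i][j] = allowed
    return mask


# Example: a user turn, an image with a two-token caption, then a two-token reply.
segments = ["text", "image", "caption", "caption", "text", "text"]
for row in build_mask(segments):
    print("".join("1" if allowed else "." for allowed in row))
```

With `block_captions=False` the function returns a plain causal mask, which makes the effect of the modulation easy to compare. In a real transformer the same pattern would typically be expressed as a boolean or additive tensor mask passed to the attention layers.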

The research team evaluated BI-MDRG on several multimodal dialogue benchmarks, where it achieved strong dialogue performance and image consistency.

Figure 3: Overall framework of BI-MDRG

BI-MDRG has practical uses across a range of multimodal application fields.

For instance, in customer service, it can enhance user satisfaction by providing accurate images based on conversation content.

In education, it can improve understanding by consistently providing relevant images and texts in response to learners’ questions. Additionally, in the entertainment field, it can enable natural and immersive interactions in interactive games.