Professor Changick Kim’s Research Team Develops ‘VideoMamba,’ a High-Efficiency Model Opening a New Paradigm in Video Recognition

<(From left) Professor Changick Kim, integrated Ph.D. candidate Jinyoung Park, Ph.D. candidate Hee-Seon Kim, Ph.D. candidate Kangwook Ko, and Ph.D. candidate Minbeom Kim>

On the 9th, Professor Changick Kim’s research team announced the development of a high-efficiency video recognition model named ‘VideoMamba.’ Existing video models are built on transformers, the same architecture that underpins large language models such as ChatGPT. Compared with these models, VideoMamba is substantially more efficient while delivering competitive recognition performance. The work is expected to open a new paradigm in the field of video utilization.

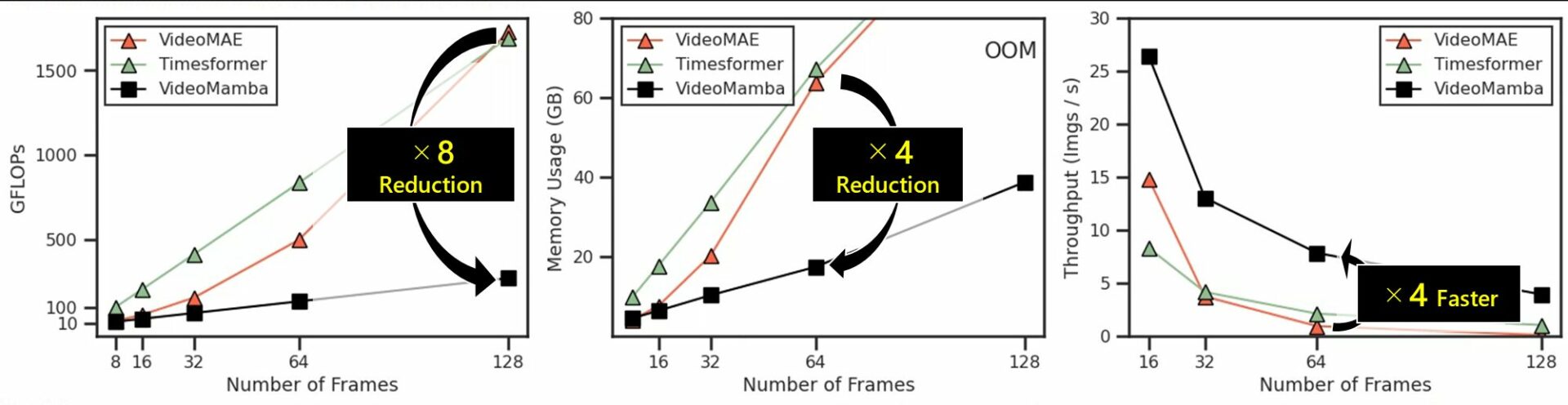

Figure 1: Comparison of VideoMamba’s memory usage and inference speed with transformer-based video recognition models.

VideoMamba is designed to address the high computational complexity associated with traditional transformer-based models.

These models typically rely on the self-attention mechanism, whose computational cost grows quadratically with the number of input tokens. VideoMamba instead uses a Selective State Space Model (SSM) mechanism, whose cost grows only linearly with sequence length. This allows VideoMamba to capture the spatio-temporal information in videos effectively and to handle long-range dependencies within video data efficiently.
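To make the quadratic-versus-linear contrast concrete, the sketch below compares plain single-head self-attention, which materializes an L × L score matrix, with a simplified selective state space scan, which updates a fixed-size hidden state once per token. It is an illustrative, single-channel sketch in the general style of Mamba-type selective SSMs, assuming NumPy; the parameter names `W_delta`, `W_B`, and `W_C` are hypothetical, and this is not the research team's VideoMamba implementation, which uses multi-channel projections and an optimized parallel scan.

```python
import numpy as np

def self_attention(x):
    """Plain single-head self-attention over x of shape (L, D).
    Builds an (L, L) score matrix, so time and memory grow quadratically in L."""
    scores = x @ x.T / np.sqrt(x.shape[1])        # (L, L) pairwise scores
    scores -= scores.max(axis=1, keepdims=True)    # numerical stability for softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ x, scores.size

def selective_ssm(x, A, W_delta, W_B, W_C):
    """Simplified selective scan for one input channel (hypothetical parameters).

    x: (L,) input sequence; A: (N,) negative diagonal state matrix.
    The step size delta and the matrices B and C are computed from each token
    (the 'selective' part). The hidden state h has a fixed size N and is updated
    once per token, so the cost grows linearly with L.
    """
    L, N = x.shape[0], A.shape[0]
    h = np.zeros(N)
    y = np.empty(L)
    for t in range(L):
        delta = np.log1p(np.exp(W_delta * x[t]))   # softplus keeps the step size positive
        B_t = W_B * x[t]                            # input-dependent B, shape (N,)
        C_t = W_C * x[t]                            # input-dependent C, shape (N,)
        # Discretized update: zero-order hold for A, simplified Euler step for B.
        h = np.exp(delta * A) * h + delta * B_t * x[t]
        y[t] = C_t @ h                              # read out one value per token
    return y

rng = np.random.default_rng(0)
L, N = 64, 8
tokens = rng.standard_normal((L, 16))
attn_out, n_scores = self_attention(tokens)
print(n_scores)   # 4096 pairwise scores for 64 tokens

x = tokens[:, 0]
A = -np.exp(rng.standard_normal(N))                 # negative entries keep the state stable
y = selective_ssm(x, A, 0.5, rng.standard_normal(N), rng.standard_normal(N))
print(y.shape)    # (64,)
```

Doubling the sequence length quadruples the attention score matrix, while the scan simply runs twice as many fixed-cost steps.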

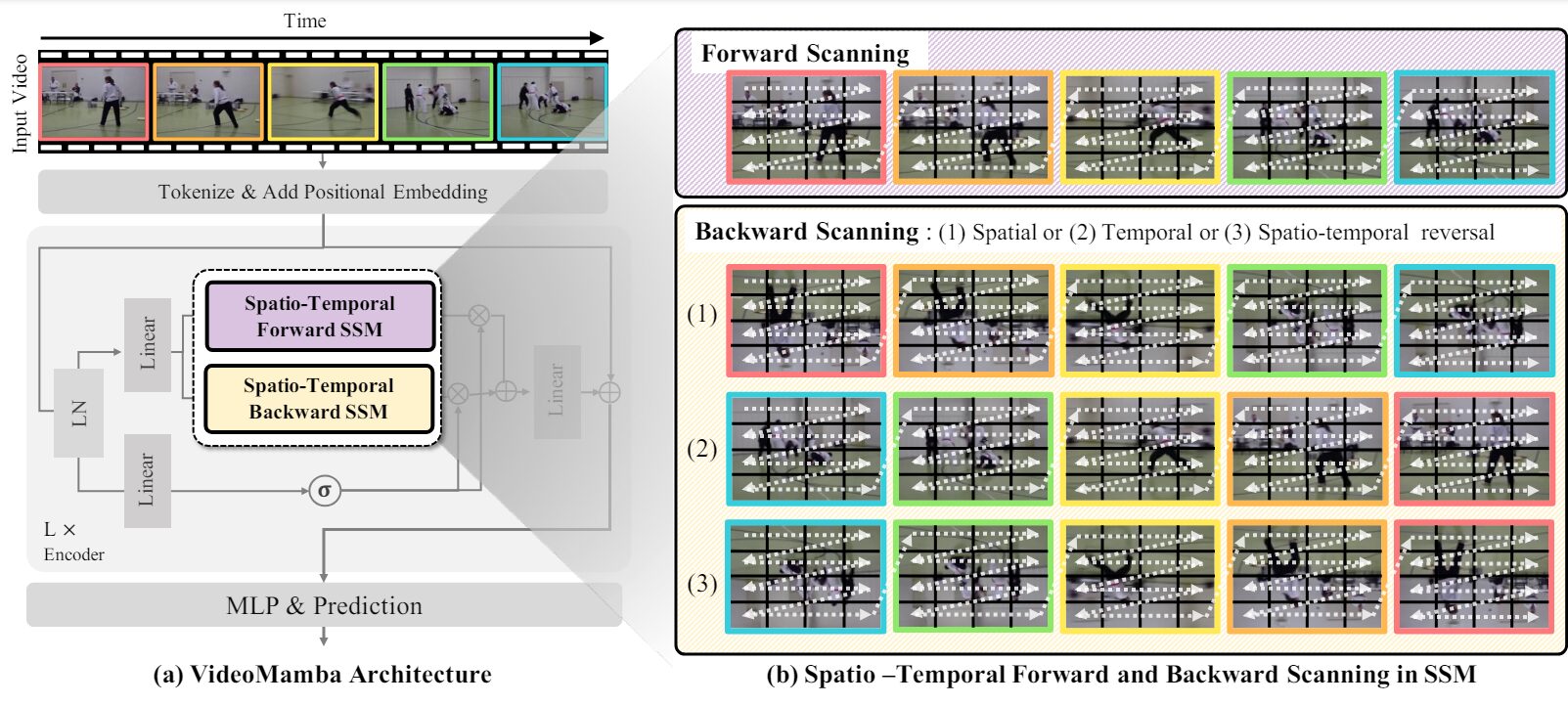

Figure 2: Detailed structure of the spatio-temporal forward and backward Selective State Space Model in VideoMamba.

To maximize the efficiency of the video recognition model, Professor Kim’s team incorporated spatio-temporal forward and backward SSMs into VideoMamba. This design lets the model effectively integrate non-sequential spatial information with sequential temporal information, improving video recognition performance.
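Why scan in both directions? A single forward scan only lets each token see tokens that come before it in the sequence. The sketch below illustrates the general bidirectional idea on a toy video. It assumes NumPy, a time-major flattening of patch tokens (frame by frame, row-major within each frame), a simple fixed-decay linear recurrence standing in for a learned SSM, and summation to merge the two directions. All of these are illustrative assumptions; the exact scan ordering and merging used in VideoMamba are those described in Figure 2 and the paper.

```python
import numpy as np

def linear_scan(seq, decay=0.9):
    """Stand-in for one SSM direction: h_t = decay * h_{t-1} + x_t.
    seq: (L, D). Each output depends only on the current and earlier tokens."""
    h = np.zeros(seq.shape[1])
    out = np.empty_like(seq)
    for t in range(seq.shape[0]):
        h = decay * h + seq[t]
        out[t] = h
    return out

def spatiotemporal_bidirectional(video_tokens, decay=0.9):
    """video_tokens: (T, H, W, D) patch embeddings (assumed layout).
    Flatten time-major, scan forward and backward, and sum the two directions."""
    T, H, W, D = video_tokens.shape
    seq = video_tokens.reshape(T * H * W, D)
    fwd = linear_scan(seq, decay)
    bwd = linear_scan(seq[::-1], decay)[::-1]   # scan the reversed sequence, then restore order
    return (fwd + bwd).reshape(T, H, W, D)

video = np.zeros((4, 2, 2, 1))   # 4 frames of 2x2 patches, 1 feature each
video[2, 0, 0, 0] = 1.0          # a single "event" in frame 2
fwd_only = linear_scan(video.reshape(-1, 1)).reshape(video.shape)
both = spatiotemporal_bidirectional(video)
print(np.count_nonzero(fwd_only[:2]))  # 0: a forward scan never carries the event to earlier frames
print(np.count_nonzero(both[:2]))      # 8: the backward scan carries it to every earlier patch
```

With the forward scan alone, patches in frames 0 and 1 receive no information about the event in frame 2; adding the backward scan gives every token context from the whole clip.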

The research team validated VideoMamba’s performance on various video recognition benchmarks. VideoMamba achieved high accuracy while requiring few GFLOPs (billions of floating-point operations) and little memory, and it demonstrated very fast inference.

VideoMamba offers an efficient and practical solution for various applications that require video analysis. In autonomous driving, for example, it could analyze driving footage to accurately assess road conditions and recognize pedestrians and obstacles in real time, helping to prevent accidents.

In the medical field, it could analyze surgical videos to monitor a patient’s condition in real time and enable a swift response to emergencies. In sports, it could analyze players’ movements and tactics during games to refine strategies, and detect fatigue or early signs of injury during training so that injuries can be prevented. VideoMamba’s fast processing, low memory usage, and strong performance give it significant advantages across these diverse video applications.

The research team includes co-first authors Jinyoung Park (integrated Ph.D. candidate), Hee-Seon Kim (Ph.D. candidate), and Kangwook Ko (Ph.D. candidate), co-author Minbeom Kim (Ph.D. candidate), and corresponding author Professor Changick Kim of the Department of Electrical and Electronic Engineering at KAIST.

The research findings will be presented at the European Conference on Computer Vision (ECCV) 2024, one of the top international conferences in the field of computer vision, to be held in Milan, Italy, in September this year (paper title: VideoMamba: Spatio-Temporal Selective State Space Model).

This work was supported by an Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2020-0-00153, Penetration Security Testing of ML Model Vulnerabilities and Defense).