Journal : IEEE Trans. on Information Theory (2022)

Abstract : We consider the weak detection problem in a rank-one spiked Wigner data matrix where the signal-to-noise ratio is small so that reliable detection is impossible. We prove a central limit theorem for the linear spectral statistics of general rank-one spiked Wigner matrices, and based on the central limit theorem, we propose a hypothesis test on the presence of the signal by utilizing the linear spectral statistics of the data matrix. The test is data-driven and does not require prior knowledge about the distribution of the signal or the noise. When the noise is Gaussian, the proposed test is optimal in the sense that its error matches that of the likelihood ratio test, which minimizes the sum of the Type-I and Type-II errors. If the density of the noise is known and non-Gaussian, the error of the test can be lowered by applying an entrywise transformation to the data matrix.
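As a rough illustration of what a linear spectral statistic (LSS) is, the sketch below evaluates the sum of f over the eigenvalues for the test function f(x) = x^2. For this f the sum equals the trace of the squared matrix, so no eigendecomposition is needed. The model (sqrt(snr) * x x^T plus Gaussian Wigner noise with a unit-norm x), the helper names, and the choice of f are assumptions made for illustration only; this is not the test function or the data-driven test proposed in the paper.

```python
import math
import random

def spiked_wigner(n, snr, spike, rng, noise=True):
    """Symmetric n x n matrix sqrt(snr) * x x^T + H (hypothetical helper).

    `spike` is a unit-norm list x. H has N(0, 1/n) off-diagonal and
    N(0, 2/n) diagonal entries when `noise` is True, otherwise H = 0.
    """
    m = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            h = 0.0
            if noise:
                var = (2.0 if i == j else 1.0) / n
                h = rng.gauss(0.0, math.sqrt(var))
            m[i][j] = m[j][i] = math.sqrt(snr) * spike[i] * spike[j] + h
    return m

def lss_square(m):
    """LSS with f(x) = x^2: sum of squared eigenvalues = sum of squared entries."""
    return sum(v * v for row in m for v in row)

rng = random.Random(0)
n, snr = 200, 0.5
x = [1.0 / math.sqrt(n)] * n  # unit-norm spike (assumed, for illustration)

# Under this noise model the expected value of trace(H^2) is n + 1, so subtract it.
null_stat = lss_square(spiked_wigner(n, 0.0, x, rng)) - (n + 1)
spiked_stat = lss_square(spiked_wigner(n, snr, x, rng)) - (n + 1)
noiseless = lss_square(spiked_wigner(n, snr, x, rng, noise=False))
print(null_stat, spiked_stat, noiseless)
```

Under these assumptions the centered statistic has mean 0 without the spike and mean `snr` with it, while its fluctuations remain of order one, which is consistent with the weak-detection regime described in the abstract.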

Conference : IEEE International Symposium on Information Theory (2022)

Abstract : Crowdsourcing has emerged as an effective platform for labeling data at low cost by using non-expert workers. However, inferring correct labels from multiple noisy answers has been a challenging problem, since the quality of answers varies widely across tasks and workers. We propose a highly general crowdsourcing model in which the reliability of each worker can vary depending on the type of a given task, where the number of types d can scale with the number of tasks. In this model, we characterize the optimal sample complexity to correctly infer the unknown labels within any given accuracy, and propose an algorithm achieving the order-wise optimal result.

Authors: Suyoung Lee, Sae-Young Chung

Conference: Advances in Neural Information Processing Systems 2022 (NeurIPS 2022)

Abstract:

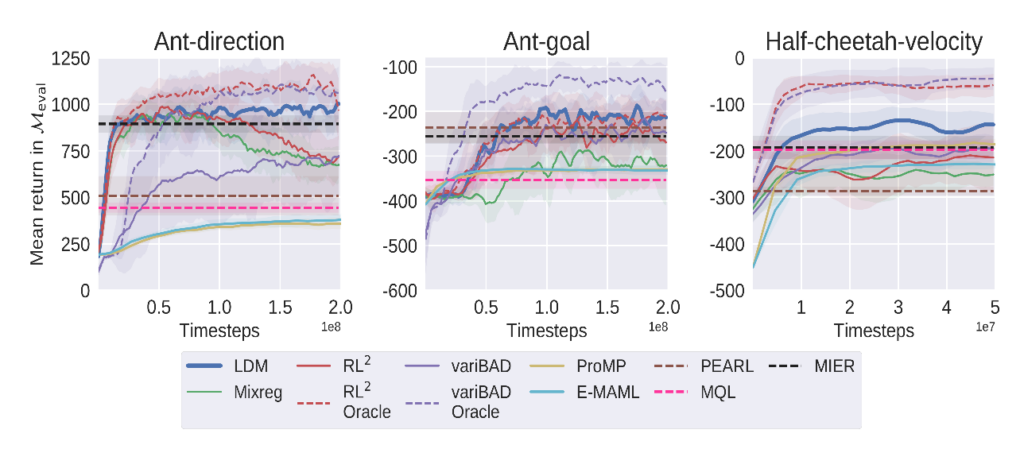

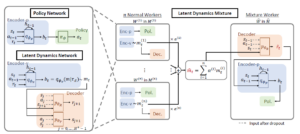

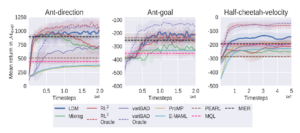

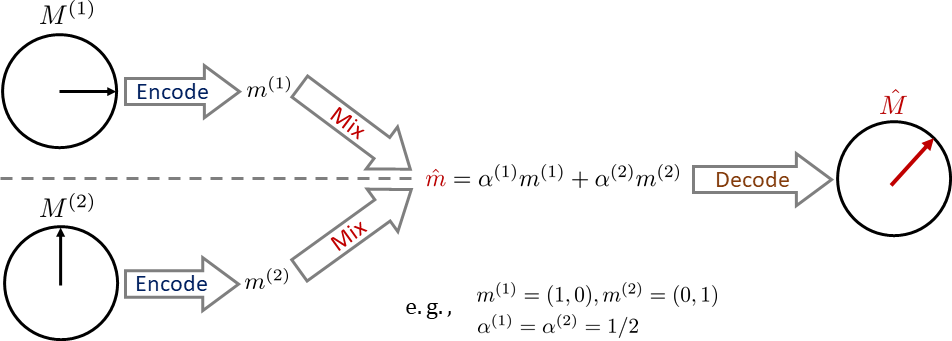

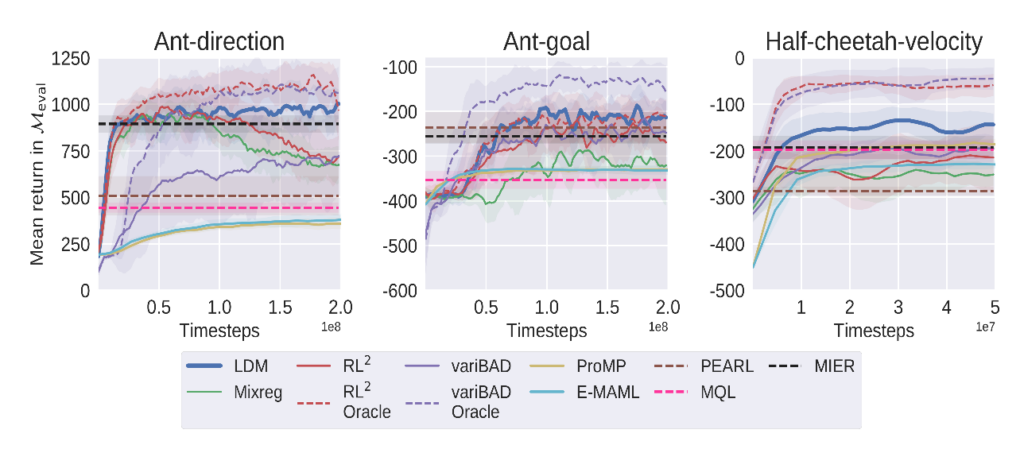

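The generalization ability of most meta-reinforcement learning (meta-RL) methods is largely limited to test tasks drawn from the same distribution that was used to draw the training tasks. To overcome this limitation, we propose LDM (Latent Dynamics Mixture), which trains on virtual tasks generated by mixing learned latent feature distributions. By training on these mixture tasks together with the original training tasks, LDM can prepare for test tasks that are unseen during training and avoids overfitting to the training tasks. LDM outperforms existing methods by a large margin on Grid-World navigation and MuJoCo test environments in which the training task distribution and the test task distribution are strictly separated.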
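The mixing idea can be pictured with a minimal sketch. It assumes that each training task is summarized by a latent vector and that mixture weights are drawn from a symmetric Dirichlet distribution; the weight distribution, the function names, and the toy vectors are illustrative assumptions. The sketch leaves out how LDM learns the latents and how a mixed latent is turned into a virtual task.

```python
import random

def dirichlet(alpha, k, rng):
    """Sample k mixture weights from a symmetric Dirichlet(alpha)."""
    g = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(g)
    return [v / total for v in g]

def mix_latents(latents, weights):
    """Weighted sum of per-task latent vectors (one list per training task)."""
    dim = len(latents[0])
    return [sum(w * z[d] for w, z in zip(weights, latents)) for d in range(dim)]

rng = random.Random(0)
# Hypothetical latent vectors inferred for three training tasks.
train_latents = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
weights = dirichlet(alpha=1.0, k=len(train_latents), rng=rng)
virtual_latent = mix_latents(train_latents, weights)
print(weights, virtual_latent)
```

With non-negative weights that sum to one, the mixed latent stays inside the convex hull of the training latents; reaching beyond that hull would need a different weighting scheme, which this sketch does not cover.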

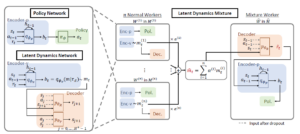

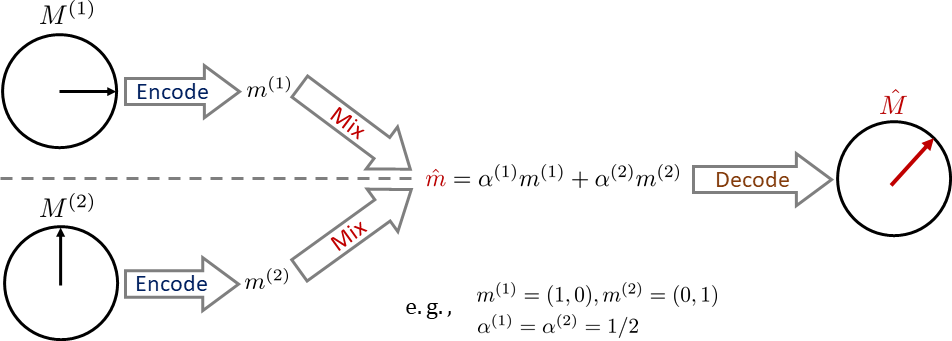

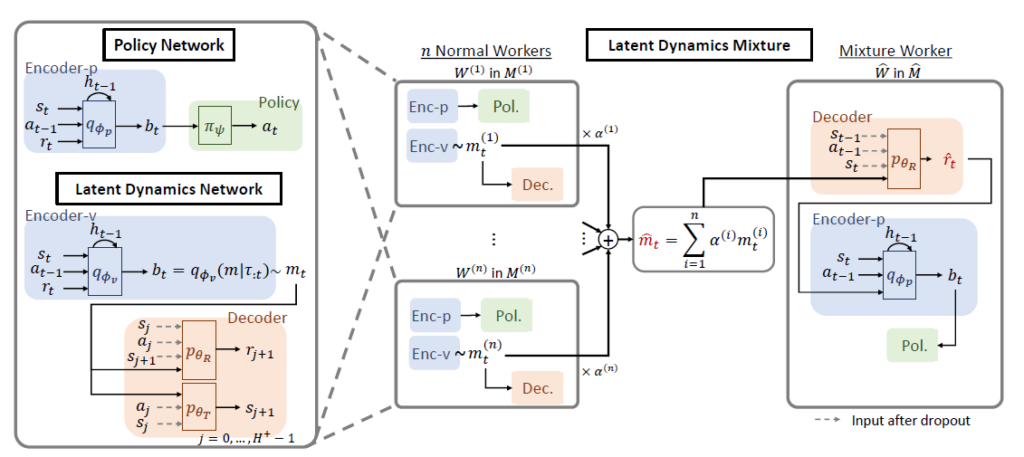

Figure 1. Generating virtual tasks by mixing in the latent space

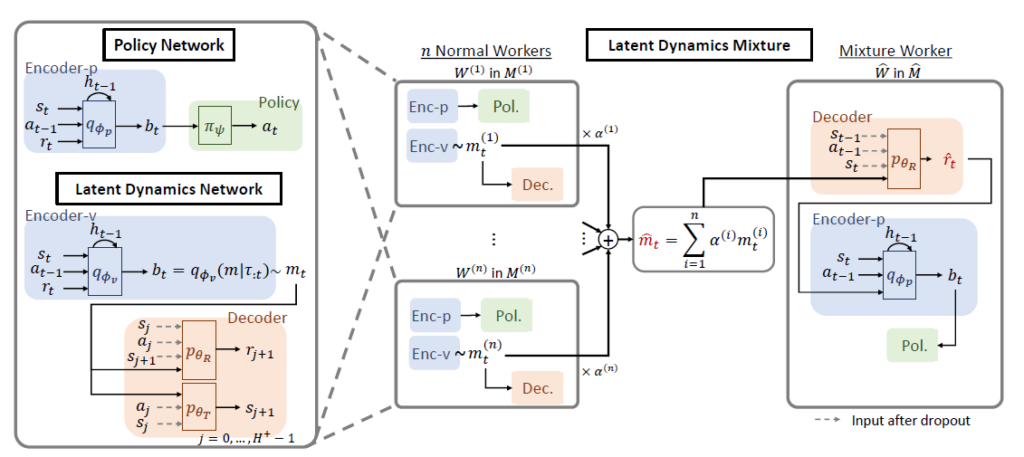

Figure 2. Network architecture of LDM, a reinforcement learning method that generates virtual tasks and trains on them

Figure 3. Average return at test time on three out-of-distribution MuJoCo tasks

Journal : IEEE Transactions on Communications (published: March 2022)

Abstract

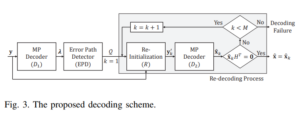

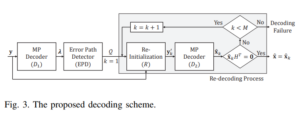

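Message-passing (MP) decoding of low-density parity-check (LDPC) codes degrades in the low-error-rate region because of trapping sets, which limits its use in applications that demand high reliability, such as storage devices. This paper proposes a new deep-learning-based decoding algorithm to resolve trapping sets. When MP decoding fails because of a trapping set, there exist pairs of unsatisfied check nodes that are connected by a path containing only error variable nodes, that is, variable nodes whose hard decisions are wrong. The proposed algorithm uses deep learning to efficiently identify paths between unsatisfied check nodes that contain only error variable nodes. It then re-initializes the channel outputs of the error variable nodes on the identified paths and repeats MP decoding, which resolves the decoding failures caused by trapping sets. In addition, by analyzing how the deep-learning-based algorithm operates, we propose a low-complexity detector that adaptively finds the paths on which errors occurred. Simulation results show that the proposed algorithm resolves trapping sets efficiently and substantially improves error-floor performance in the low-error-rate region.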

Journal : IEEE Transactions on Vehicular Technology (published: February 2022)

Abstract

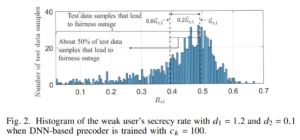

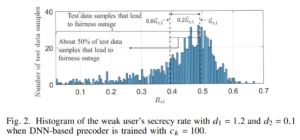

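This paper proposes an artificial-noise (AN)-aided secure multiple-input single-output (MISO) non-orthogonal multiple access (NOMA) scheme. The design takes fairness into account, in the sense that every user achieves a higher secrecy rate than under the competing orthogonal multiple access (OMA) scheme. Despite the importance of NOMA, fairness-aware designs have rarely been studied so far because of the difficulty of the mathematical analysis. This work shows that the problem can be solved efficiently by using deep neural networks as precoders for the information and artificial-noise signals, without relying on assumptions such as large antenna arrays and/or a high signal-to-noise ratio. We also propose an adaptive mode that switches the access protocol from OMA to NOMA only when the fairness condition is met. The performance of the proposed secure NOMA is extensively evaluated and compared with conventional NOMA and OMA. The comparison shows that the proposed scheme substantially improves the sum secrecy rate while guaranteeing a fairness that conventional NOMA cannot achieve.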

Author: Beomsoo Ko, Hwanjin Kim, and Junil Choi

Conference and Year: ICTC 2021

In this paper, we focus on channel prediction for massive MIMO wideband systems. In general, precise channel state information (CSI) is essential to fully exploit the benefits of a large antenna array. However, the CSI is likely to be outdated because of the feedback delay between the base station and the user equipment. Hence, channel prediction is required to enhance system performance.

The wideband channel is transformed into multiple parallel orthogonal frequency-division multiplexing (OFDM) channels. In conventional machine-learning-based channel prediction studies, a neural network (NN) is built for each sub-carrier channel (sub-channel), which results in a large training overhead. Hence, we propose a channel predictor that uses a single NN for all sub-channels to reduce the training overhead.

Since the OFDM sub-channels are highly correlated, a single NN trained on a single OFDM sub-channel is sufficient to represent the channel statistics of every sub-channel. In simulations, we show that the normalized mean square error (NMSE) of the proposed channel predictor is almost the same as that of the conventional channel predictor, while the training overhead is reduced significantly.
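For readers unfamiliar with the metric, the following sketch computes NMSE under one common definition: the squared prediction error divided by the squared norm of the true channel, averaged over realizations. The exact normalization used in the paper is not stated here, so this definition, the function name, and the toy values are assumptions.

```python
def nmse(true_channels, predicted_channels):
    """Average of ||h - h_hat||^2 / ||h||^2 over channel realizations."""
    ratios = []
    for h, h_hat in zip(true_channels, predicted_channels):
        err = sum(abs(a - b) ** 2 for a, b in zip(h, h_hat))
        power = sum(abs(a) ** 2 for a in h)
        ratios.append(err / power)
    return sum(ratios) / len(ratios)

# Toy complex channel vectors (hypothetical values, two realizations).
h_true = [[1 + 1j, 0.5 - 0.2j], [0.3j, -1.0 + 0j]]
h_pred = [[0.9 + 1.1j, 0.5 - 0.1j], [0.1 + 0.3j, -0.9 + 0j]]
print(nmse(h_true, h_pred))
```

A perfect prediction gives 0, and predicting an all-zero channel gives 1, so values well below 1 indicate a useful predictor.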

Author: Daesung Kim and Hye Won Chung

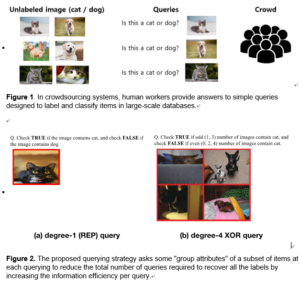

Keywords: Crowdsourcing, XOR Queries, Fundamental Limits, Inference

Abstract:

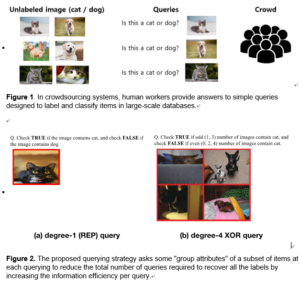

We consider a query-based data acquisition problem for binary classification of unknown labels, which has diverse applications in communications, crowdsourcing, recommender systems, and active learning. To ensure reliable recovery of unknown labels with as few queries as possible, we consider an effective query type that asks for a “group attribute” of a chosen subset of objects. In particular, we consider the problem of classifying m binary labels with XOR queries that ask whether the number of objects having a given attribute in the chosen subset of size d is even or odd. The subset size d, which we call the query degree, can vary across queries. We consider a general noise model where the accuracy of answers to queries depends on both the worker (the data provider) and the query degree d. For this general model, we characterize the information-theoretic limit on the optimal number of queries to reliably recover m labels in terms of a given combination of degree-d queries and noise parameters. Further, we propose an efficient inference algorithm that achieves this limit even when the noise parameters are unknown.

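In this paper, we study the problem of recovering unknown binary labels through queries. Classifying binary labels is an important problem with applications in communication systems, crowdsourcing, recommender systems, and active learning. To recover the labels with as few queries as possible, we propose asking questions about groups of labels. Specifically, each query selects d of the m binary labels and asks for their XOR. Here d is defined as the “query difficulty” (the query degree) and is allowed to change from query to query. We also assume a general model in which the accuracy of an answer depends on both the respondent and the query difficulty. In this setting, we theoretically derive the number of queries needed to recover all labels. In addition, we propose an algorithm that recovers all labels using only this ideal number of queries, even when the accuracies of the respondents are unknown.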
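The query model can be made concrete with a small simulation. This is a minimal sketch in which an answer is the parity of the selected labels, flipped with some probability; the specific flip-probability rule below depends only on the query degree and is a made-up assumption (the model described above also lets accuracy depend on the worker). It does not implement the inference algorithm.

```python
import random

def xor_query(labels, subset, flip_prob, rng):
    """Parity (XOR) of the labels in `subset`, flipped with probability flip_prob."""
    parity = 0
    for i in subset:
        parity ^= labels[i]
    if rng.random() < flip_prob:
        parity ^= 1
    return parity

def degree_flip_prob(d):
    """Hypothetical noise model: answers get noisier as the degree d grows."""
    return min(0.5, 0.05 * d)

rng = random.Random(0)
m = 10
labels = [rng.randint(0, 1) for _ in range(m)]

queries = []
for _ in range(5):
    d = rng.randint(1, 4)                 # query degree varies per query
    subset = rng.sample(range(m), d)      # d distinct objects
    answer = xor_query(labels, subset, degree_flip_prob(d), rng)
    queries.append((subset, answer))
print(queries)
```

Each entry pairs the chosen subset with its possibly noisy parity answer, which is the raw input a label-recovery algorithm would work from.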

Author: Jinhee Lee, Haeri Kim, Youngkyu Hong and Hye Won Chung

Keywords: Generative Adversarial Networks (GAN), diversity, fairness

Abstract:

Despite remarkable performance in producing realistic samples, Generative Adversarial Networks (GANs) often produce low-quality samples near low-density regions of the data manifold, e.g., samples of minor groups. Many techniques have been developed to improve the quality of generated samples, either by post-processing generated samples or by pre-processing the empirical data distribution, but at the cost of reduced diversity. To promote diversity in sample generation without degrading the overall quality, we propose a simple yet effective method to diagnose and emphasize underrepresented samples during training of a GAN. The main idea is to use the statistics of the discrepancy between the data distribution and the model distribution at each data instance. Based on the observation that the underrepresented samples have a high average discrepancy or high variability in discrepancy, we propose a method to emphasize those samples during training of a GAN. Our experimental results demonstrate that the proposed method improves GAN performance on various datasets, and it is especially effective in improving the quality and diversity of sample generation for minor groups.

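Learning the probability distribution that generated a given dataset, and using it to synthesize realistic data, is widely used in computer graphics. However, current techniques learn the major features shared by most of the data samples well but learn the features of minor groups poorly. For example, on CelebA, a dataset of human faces, features that account for a large share of the images, such as white skin or blond hair, are learned with high quality, whereas features such as minority ethnicities and hair colors are not. As a result, both the proportion and the quality of minor samples in the generated data are markedly lower than those of major samples (and lower than in the actual training data). To address this, this work aims to develop a generative machine learning model that diagnoses and improves its own training fairness.

We propose a generative model that diagnoses and improves its own training fairness while training a generative adversarial network (GAN) directly on the dataset, even when the dataset has no labels, that is, when the minor features in the data are not known. The key idea is to mathematically diagnose how each sample is being learned and to score the degree of learning per sample. This identifies the minor samples that are not being learned properly, and those samples are then emphasized to improve the quality with which minor samples are learned. Because it improves fairness in synthetic data generation while also greatly increasing diversity, this work is expected to be widely applicable.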

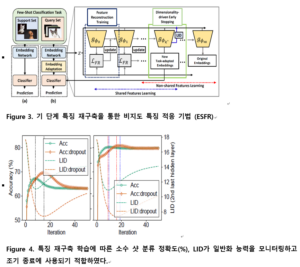

Authors: Dong Hoon Lee, Sae-Young Chung

Summary:

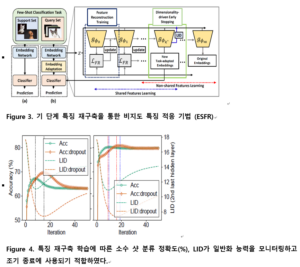

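To enable deep-network-based self-training in low-data settings, we exploit the phenomenon that deep neural networks learn generalizable features earlier in training than features that require memorization. Building on this phenomenon, we developed ESFR (Early-Stage Feature Reconstruction), a new unsupervised feature adaptation method that obtains features made up of task-relevant information from only a small amount of data. ESFR consists of feature reconstruction training, a self-training procedure that exploits this phenomenon, and dimensionality-driven early stopping, which prevents overfitting and ends training at an appropriate point.

In ESFR, self-training proceeds at the feature level through reconstruction training in the form of an autoencoder, and the generalizable, task-relevant features are separated out and reconstructed in the early stage of training. This was confirmed by the rise in few-shot classification accuracy during the early stage of training. As reconstruction training continues, memorization-type features are learned as well; ESFR prevents this unnecessary information from being learned by stopping training early based on LID (Local Intrinsic Dimensionality). ESFR was validated on few-shot classification benchmarks and achieved state-of-the-art performance across datasets and classification settings.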
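For context on the quantity behind the early-stopping rule, the sketch below computes the widely used maximum-likelihood estimate of LID from the distances to the k nearest neighbours: the negative reciprocal of the mean of log(r_i / r_k). How ESFR turns LID into a stopping decision is not described here, so only the estimator is shown; the function name, k, and the toy data are assumptions.

```python
import math

def lid_mle(query, points, k):
    """MLE estimate of local intrinsic dimensionality at `query`.

    Uses the k nearest non-zero distances r_1 <= ... <= r_k and returns
    -1 / mean(log(r_i / r_k)). Undefined if all k distances are equal.
    """
    dists = sorted(math.dist(query, p) for p in points)
    dists = [d for d in dists if d > 0.0][:k]  # drop the query itself and exact duplicates
    r_max = dists[-1]
    mean_log = sum(math.log(d / r_max) for d in dists) / len(dists)
    return -1.0 / mean_log

# Toy data: points evenly spaced along a straight line embedded in 3-D.
line = [(t / 200.0, 2 * t / 200.0, 0.0) for t in range(1, 201)]
lid_line = lid_mle((0.0, 0.0, 0.0), line, k=100)
print(lid_line)
```

The toy points lie on a one-dimensional line, so the estimate comes out close to 1 even though the ambient space is three-dimensional.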

Authors: Suyoung Lee, Sae-Young Chung

Summary:

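Existing reinforcement learning algorithms require large amounts of training data yet generalize poorly, so performance drops sharply when the distribution gap between tasks is large. In this work, we developed LDM (Latent Dynamics Mixture), which improves data efficiency and generalization by learning a dynamics model at the feature level and generating virtual experience.

LDM generates tasks that were not experienced during training on its own, without prior information, and uses them for training, so that it prepares in advance for new tasks. We confirmed that, unlike existing meta-reinforcement learning, it can quickly reach good performance without requiring large amounts of training data for a new task environment. LDM showed higher performance and data efficiency than existing meta-reinforcement learning methods, and higher data efficiency than context-based methods.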