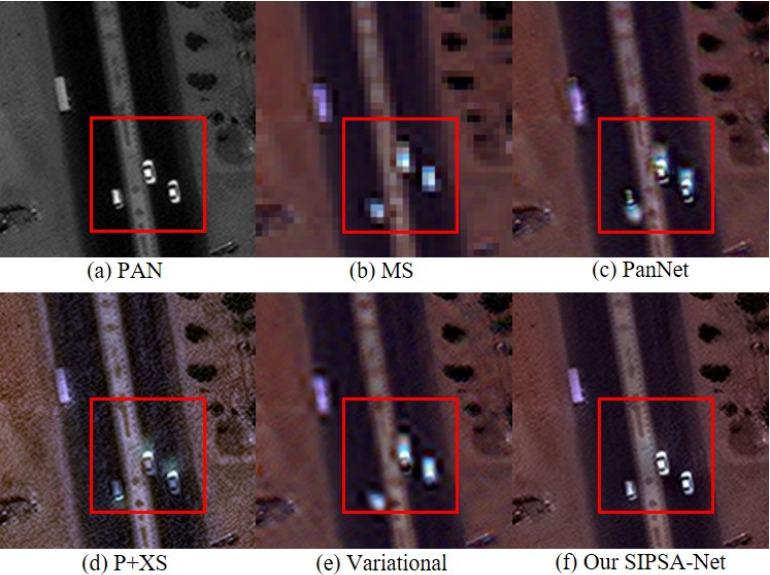

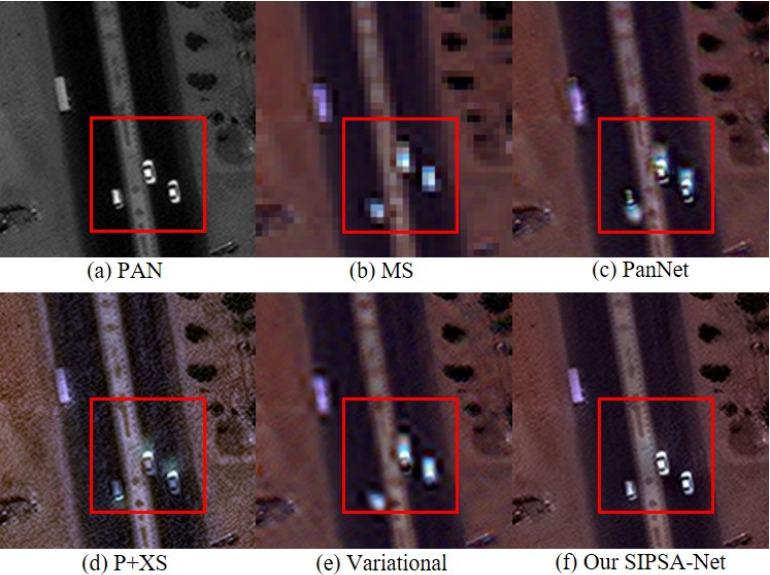

Jaehyup Lee, Soomin Seo and Munchurl Kim, “SIPSA-Net: Shift-Invariant Pan Sharpening with Moving Object Alignment for Satellite Imagery,” Conference on Computer Vision and Pattern Recognition (CVPR), June 19-25, 2021. (Oral Paper)

Abstract

Pan-sharpening is a process of merging a high-resolution (HR) panchromatic (PAN) image and its corresponding low-resolution (LR) multi-spectral (MS) image to create an HR-MS, pan-sharpened image. However, due to the sensors' different locations, characteristics, and acquisition times, PAN and MS image pairs often have various amounts of misalignment. Conventional deep-learning-based methods trained with such misaligned PAN-MS image pairs suffer from diverse artifacts, such as double-edge and blur artifacts, in the resulting pan-sharpened images. In this paper, we propose a novel framework called shift-invariant pan-sharpening with moving object alignment (SIPSA-Net), which is the first method to take into account such large misalignment of moving object regions for pan-sharpening. SIPSA-Net has a feature alignment module (FAM) that can adjust one feature to be aligned to another, even between the two different PAN and MS domains. For better alignment in pan-sharpened images, a shift-invariant spectral loss is newly designed, which ignores the inherent misalignment in the original MS input, thereby having the same effect as optimizing the spectral loss with a well-aligned MS image. Extensive experimental results show that our SIPSA-Net can generate pan-sharpened images with remarkable improvements in terms of visual quality and alignment, compared to state-of-the-art methods.
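The abstract does not define the shift-invariant spectral loss exactly. The sketch below assumes it behaves like an L1 distance minimized over small integer translations of the MS reference; the function names and the `max_shift` parameter are hypothetical, and this is an illustration of why such a loss ignores a fixed misalignment, not the authors' implementation.

```python
from itertools import product


def l1_overlap(pred, target, dy, dx):
    """Mean absolute error between pred[y][x] and target[y + dy][x + dx],
    averaged only over pixels where the shifted target is inside the image."""
    h, w = len(pred), len(pred[0])
    total, count = 0.0, 0
    for y in range(h):
        ty = y + dy
        if not 0 <= ty < h:
            continue
        for x in range(w):
            tx = x + dx
            if 0 <= tx < w:
                total += abs(pred[y][x] - target[ty][tx])
                count += 1
    return total / count


def shift_invariant_l1(pred, target, max_shift=1):
    """Smallest overlap L1 over all integer shifts in [-max_shift, max_shift]^2."""
    shifts = range(-max_shift, max_shift + 1)
    return min(l1_overlap(pred, target, dy, dx) for dy, dx in product(shifts, shifts))


# Toy single-band example: the reference is the prediction moved 1 pixel right.
pred = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
target = [[0, 1, 2, 3], [0, 5, 6, 7], [0, 9, 10, 11]]
print(l1_overlap(pred, target, 0, 0))   # 2.0: plain L1 punishes the offset
print(shift_invariant_l1(pred, target))  # 0.0: the offset is ignored
```

Because the minimum is taken over shifts, a reference that is offset by a pixel no longer penalizes an otherwise correct prediction, whereas a plain L1 loss would push the network toward a blurred or double-edged compromise. `max_shift` must stay smaller than the image size so every shift leaves some overlap.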

[Title]

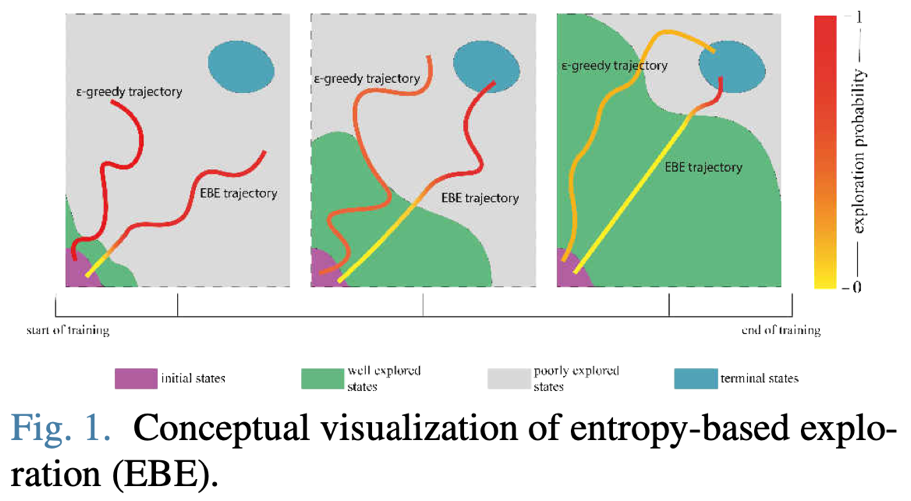

Learning-driven exploration for reinforcement learning

[Authors]

Muhammad Usama, Dong Eui Chang

[Abstract]

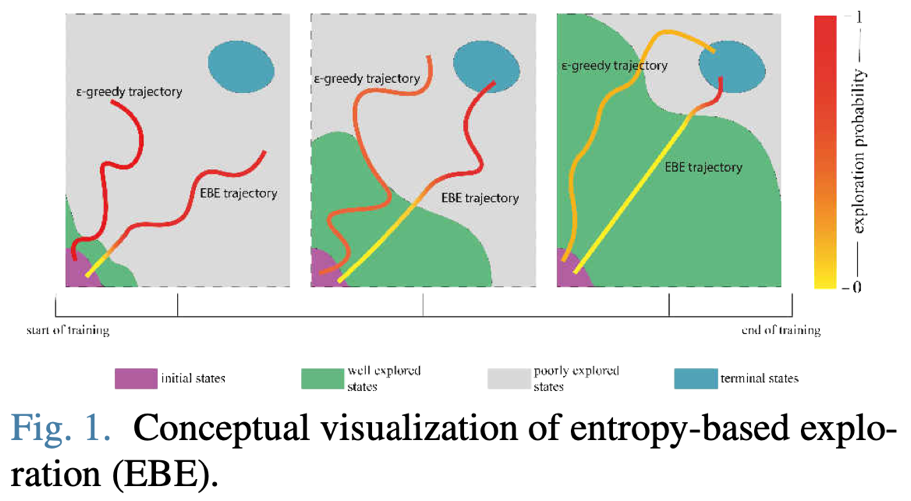

Effective and intelligent exploration remains an unresolved problem for reinforcement learning. Most contemporary reinforcement learning relies on simple heuristic strategies that cannot intelligently distinguish well-explored from unexplored regions of the state space, which can lead to inefficient use of training time. We introduce entropy-based exploration (EBE), which enables an agent to efficiently explore the unexplored regions of the state space. EBE quantifies the agent's learning in a state using state-dependent action values and adaptively explores the state space, i.e., it explores more in the unexplored regions. We perform experiments on a diverse set of environments and demonstrate that EBE enables efficient exploration that ultimately results in faster learning without having to tune any hyperparameters. The code to reproduce the experiments is available at https://github.com/Usama1002/EBE-Exploration and the supplementary video at https://youtu.be/nJggIjjzKic.
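The abstract leaves the exact mapping from action values to exploration unspecified. One plausible instantiation, sketched here as an assumption rather than the authors' code, uses the normalized entropy of a softmax over a state's Q-values as the probability of taking a random action: a state whose action values are still indistinguishable has entropy near 1 and is explored, while a state with a clearly preferred action is mostly exploited. The function names are hypothetical.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(q_values):
    """Entropy of softmax(Q(s, .)) divided by log(|A|), so it lies in [0, 1]."""
    if len(q_values) < 2:
        return 0.0
    probs = softmax(q_values)
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(q_values))


def select_action(q_values, rng=random):
    """Explore with probability equal to the state's normalized entropy,
    otherwise act greedily on the action values."""
    if rng.random() < normalized_entropy(q_values):
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


print(normalized_entropy([0.0, 0.0, 0.0, 0.0]))  # about 1.0: unlearned state, explore
print(normalized_entropy([10.0, 0.0, 0.0]))      # close to 0: learned state, exploit
```

In this reading there is no epsilon schedule to tune: the amount of exploration in each state follows directly from how distinguishable its action values have become.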

[Title]

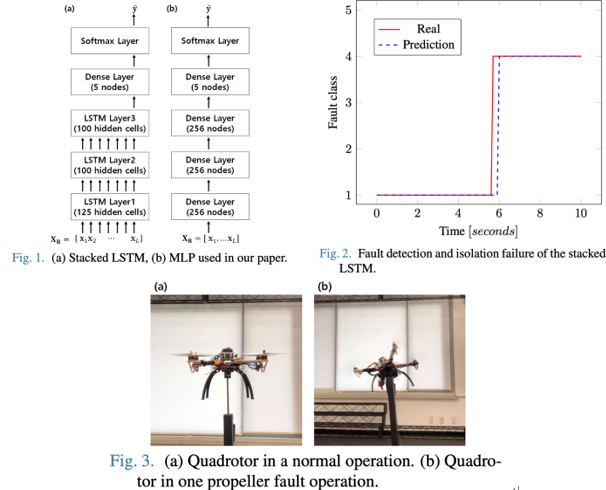

Data-driven fault detection and isolation of system with only state measurements and control inputs using neural networks

[Authors]

Jae-Hyeon Park, Dong Eui Chang

[Abstract]

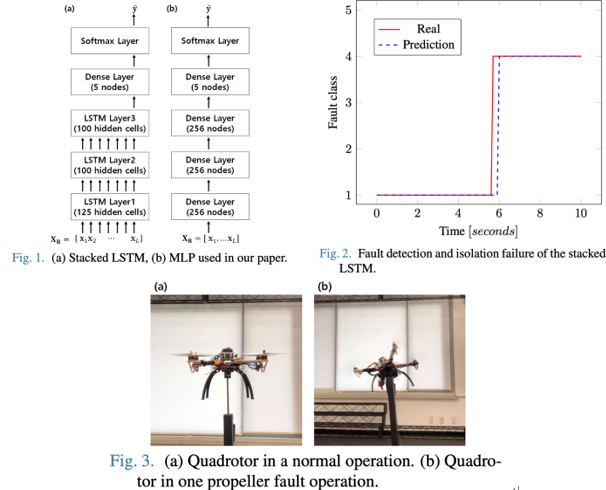

With the advancement of neural network technology, many researchers are trying to find clever ways to apply neural networks to fault detection and isolation for safer and more satisfactory system operation. Some researchers detect system faults by combining a concrete model of the system with a neural network, by generating residuals with a neural network, or by training a neural network on specific sensor signals of the system. In this article, we develop a fault detection and isolation neural network algorithm that uses only the inherent sensor measurements and control inputs of the system. This algorithm does not need a model of the system, residual generation, or additional sensors. We obtain sensor measurements and control inputs in discrete time, cut the signals with a sliding window approach, and label the data with one-hot vectors representing the normal class or one of the fault classes. We then train our neural network model on the labeled training data. We provide two neural network models: a stacked long short-term memory (LSTM) network and a multilayer perceptron. We test our algorithm with a quadrotor fault simulation and a real experiment. Our algorithm performs well at fault detection and isolation for the quadrotor.
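The data-preparation steps described above (sliding windows over discrete-time signals, one-hot labels) can be sketched as follows. The window length, stride, and the rule of labeling each window by its last time step are assumptions made for illustration; the abstract does not fix them, and the function names are hypothetical.

```python
def one_hot(label, num_classes):
    """Class index -> one-hot list, e.g. 2 of 4 -> [0, 0, 1, 0]."""
    vec = [0] * num_classes
    vec[label] = 1
    return vec


def sliding_windows(signal, labels, window, stride, num_classes):
    """Cut a discrete-time multichannel signal into labeled windows.

    signal: per-timestep feature vectors, e.g. state measurements followed
            by control inputs.
    labels: per-timestep class index (0 = normal, 1..K = fault classes).
    Each window is labeled with the class of its last time step (an
    assumption made for this sketch).
    """
    samples = []
    for end in range(window, len(signal) + 1, stride):
        x = signal[end - window:end]
        y = one_hot(labels[end - 1], num_classes)
        samples.append((x, y))
    return samples


# Toy run: 6 time steps of [measurement, control], fault class 1 starts at t = 3.
signal = [[0.1 * t, 1.0] for t in range(6)]
labels = [0, 0, 0, 1, 1, 1]
data = sliding_windows(signal, labels, window=3, stride=1, num_classes=2)
print(len(data))             # 4
print([y for _, y in data])  # [[1, 0], [0, 1], [0, 1], [0, 1]]
```

Each `(x, y)` pair can then be fed either to a sequence model such as an LSTM (keeping the time axis) or, after flattening `x`, to a multilayer perceptron.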

[Title]

Autonomous Driving Based on Modified SAC Algorithm through Imitation Learning Pretraining

[Authors]

Mengyi Gao, Dong Eui Chang

[Abstract]

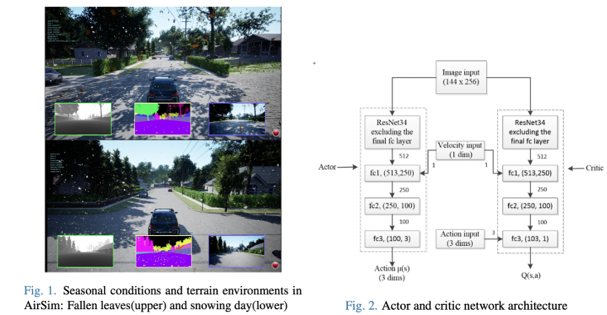

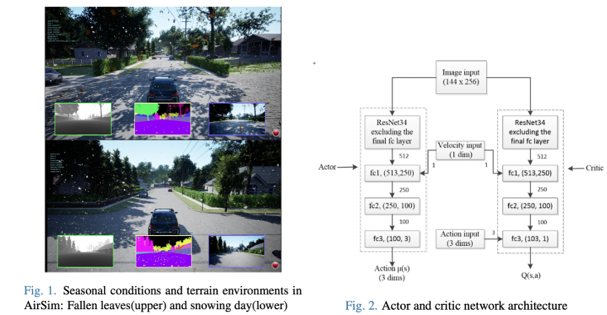

In this paper, we implement a modified SAC [1] algorithm for autonomous driving tasks using the environment API of the AirSim [2] simulator, which provides various weather, collision, and lighting options. Given the current image state and car velocity as inputs, the model outputs throttle, brake, and steering angle values, which are sent to the vehicle as action commands through AirSim's control interface. Because autonomous vehicles are more likely to be accepted if they drive the way humans do, we first train our model by imitation learning to provide a pre-trained, human-like policy and weights to SAC. During reinforcement learning, to increase the robustness of the learned policy, we use ResNet-34 [3] as the actor and critic network architecture in the SAC algorithm.

[Title]

Real time pose detection of animals using HRNet

[Authors]

Soham Shanbhag, Dong Eui Chang

[Abstract]

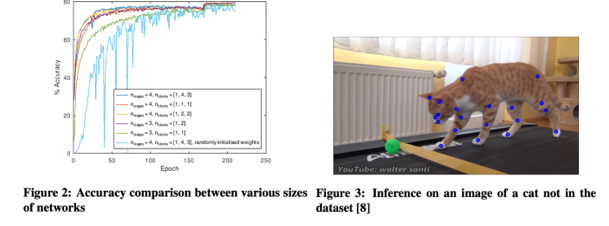

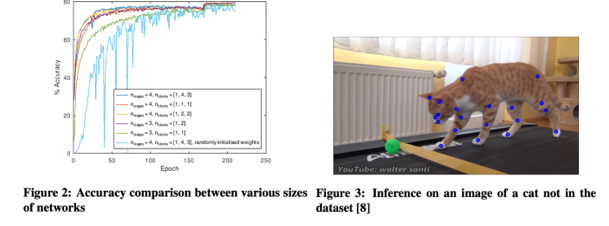

We design a machine learning algorithm for detecting the joints of a cat in an image. We compare the accuracy and speed of the algorithm when its weights are initialized from a network trained on images of humans and when they are initialized randomly. We also compare five networks of different sizes in terms of speed and accuracy.

[Title]

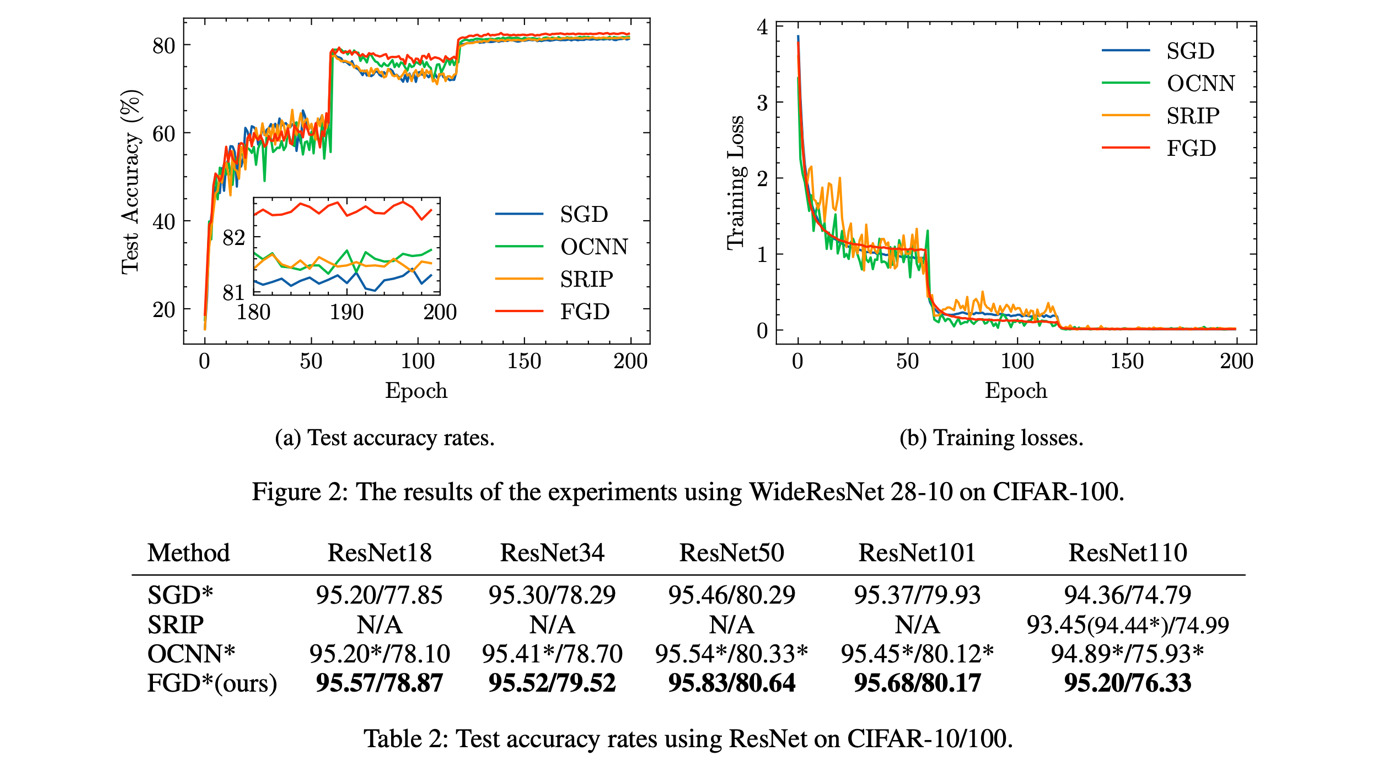

Feedback Gradient Descent: Efficient and Stable Optimization with Orthogonality for DNNs

[Authors]

Fanchen Bu, Dong Eui Chang

[Abstract]

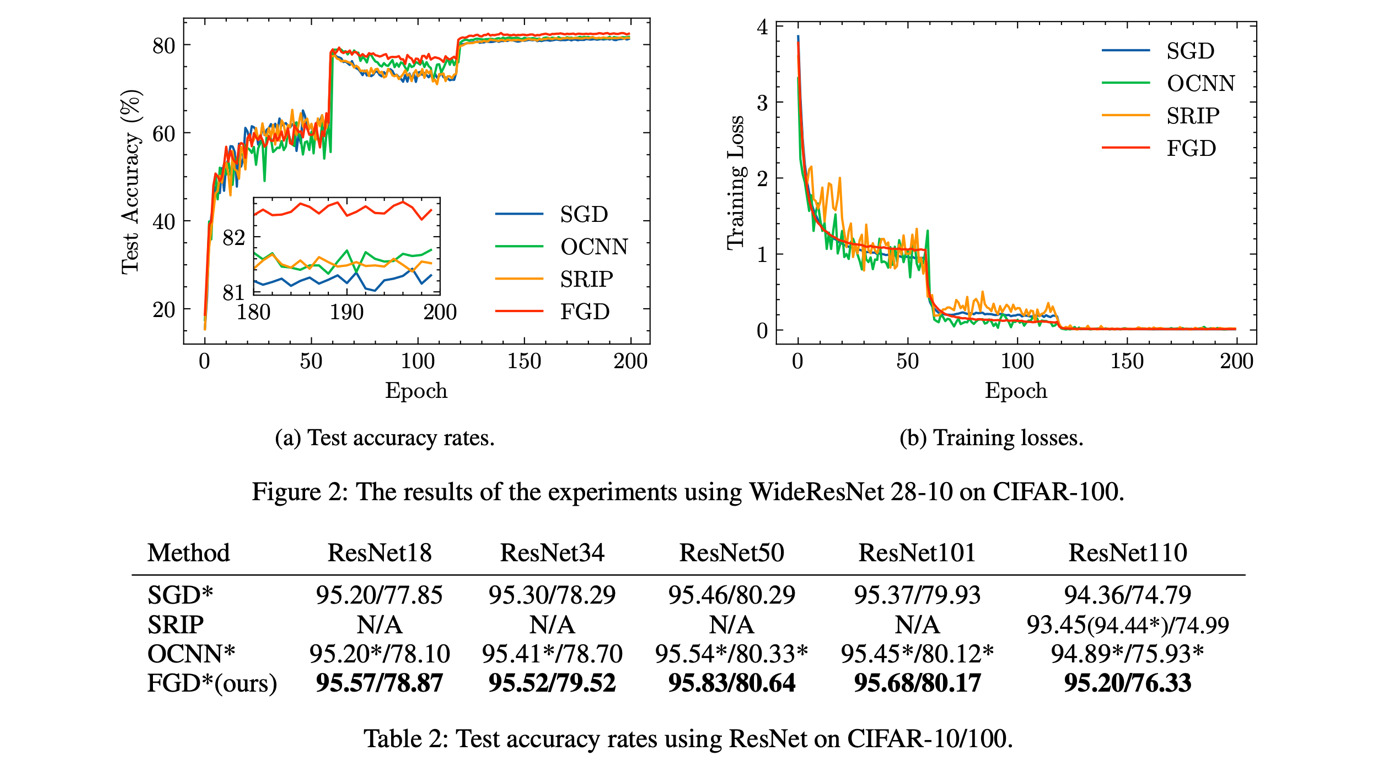

Optimization with orthogonality has been shown to be useful in training deep neural networks (DNNs). To impose orthogonality on DNNs, both computational efficiency and stability are important. However, existing methods utilizing Riemannian optimization or hard constraints can only ensure stability, while those using soft constraints can only improve efficiency. In this paper, we propose a novel method, named Feedback Gradient Descent (FGD), which is, to our knowledge, the first work to show high efficiency and stability simultaneously. FGD induces orthogonality based on the simple yet indispensable Euler discretization of a continuous-time dynamical system on the tangent bundle of the Stiefel manifold. In particular, inspired by a numerical integration method on manifolds called Feedback Integrators, we propose to instantiate it on the tangent bundle of the Stiefel manifold for the first time. In extensive image classification experiments, FGD comprehensively outperforms the existing state-of-the-art methods in terms of accuracy, efficiency, and stability.
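The feedback idea can be illustrated with a simplified, first-order analogue. This sketch is not FGD itself, which discretizes dynamics on the tangent bundle (position and velocity) of the Stiefel manifold; it only adds alpha * W (W^T W - I), the gradient of (alpha / 4) * ||W^T W - I||_F^2, to the loss gradient, so that explicit Euler steps pull W back toward orthonormal columns. The step size `lr`, the gain `alpha`, and all function names are hypothetical.

```python
def matmul(a, b):
    """Dense matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def gram_defect(w):
    """W^T W - I for an n x p matrix W with p <= n."""
    g = matmul(transpose(w), w)
    p = len(g)
    return [[g[i][j] - (1.0 if i == j else 0.0) for j in range(p)] for i in range(p)]


def orthogonality_error(w):
    """Frobenius norm of W^T W - I; zero iff W lies on the Stiefel manifold."""
    return sum(v * v for row in gram_defect(w) for v in row) ** 0.5


def feedback_step(w, grad, lr=0.1, alpha=1.0):
    """One explicit Euler step of dW/dt = -(grad + alpha * W (W^T W - I))."""
    feedback = matmul(w, gram_defect(w))
    return [[w[i][j] - lr * (grad[i][j] + alpha * feedback[i][j])
             for j in range(len(w[0]))] for i in range(len(w))]


# With a zero loss gradient, only the feedback term acts and the columns
# of W are pulled back toward orthonormality.
w = [[1.2, 0.0], [0.0, 0.9], [0.0, 0.0]]
zero_grad = [[0.0, 0.0] for _ in range(3)]
print(orthogonality_error(w))  # about 0.48
for _ in range(50):
    w = feedback_step(w, zero_grad)
print(orthogonality_error(w))  # close to 0
```

The design point this illustrates is that orthonormality is made attractive by the dynamics rather than enforced by a retraction or projection at every step, which is what keeps each update as cheap as a plain gradient step.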

[Title]

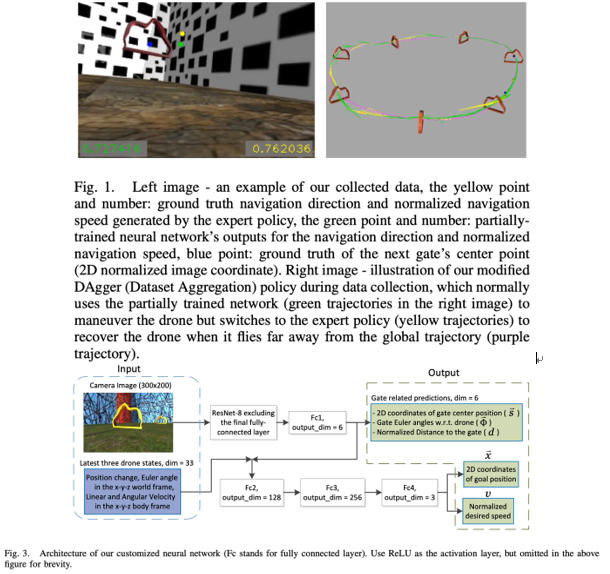

Robust Navigation for Racing Drones based on Imitation Learning and Modularization

[Authors]

Tianqi Wang, Dong Eui Chang

[Abstract]

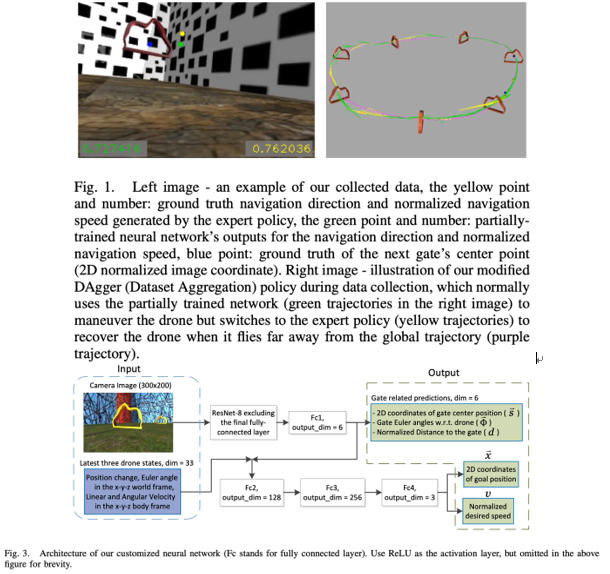

This paper presents a vision-based modularized drone racing navigation system that uses a customized convolutional neural network (CNN) for the perception module to produce high-level navigation commands and then leverages a state-of-the-art planner and controller to generate low-level control commands, thus exploiting the advantages of both data-based and model-based approaches. Unlike the state-of-the-art method, which only takes the current camera image as the CNN input, we further add the latest three estimated drone states as part of the inputs. Our method outperforms the state-of-the-art method in various track layouts and offers two switchable navigation behaviors with a single trained network. The CNN-based perception module is trained to imitate an expert policy that automatically generates ground truth navigation commands based on the pre-computed global trajectories. Owing to the extensive randomization and our modified dataset aggregation (DAgger) policy during data collection, our navigation system, which is purely trained in simulation with synthetic textures, successfully operates in environments with randomly-chosen photo-realistic textures without further fine-tuning.

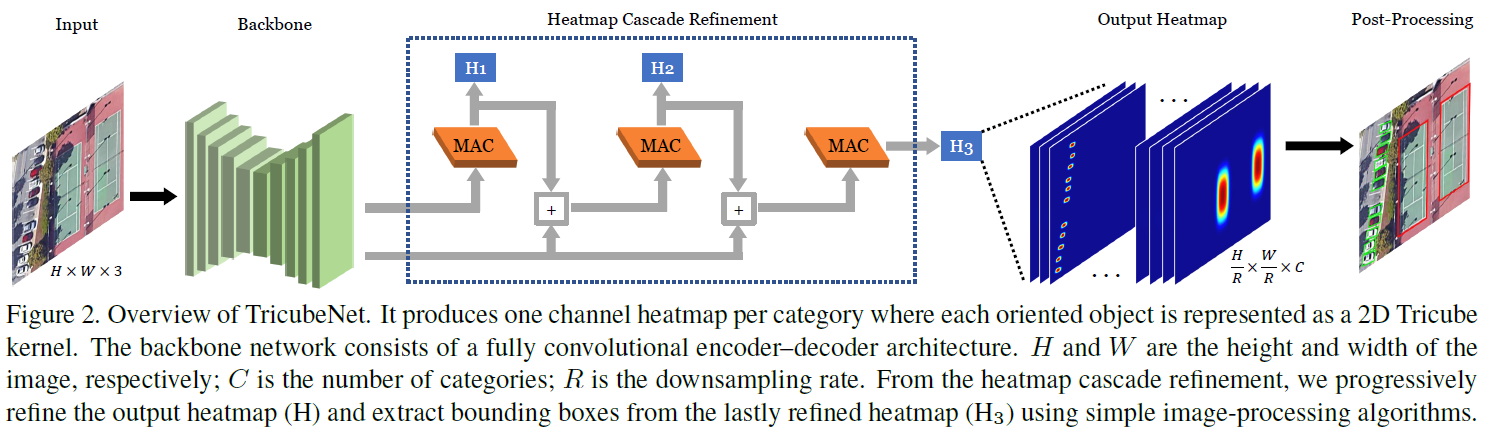

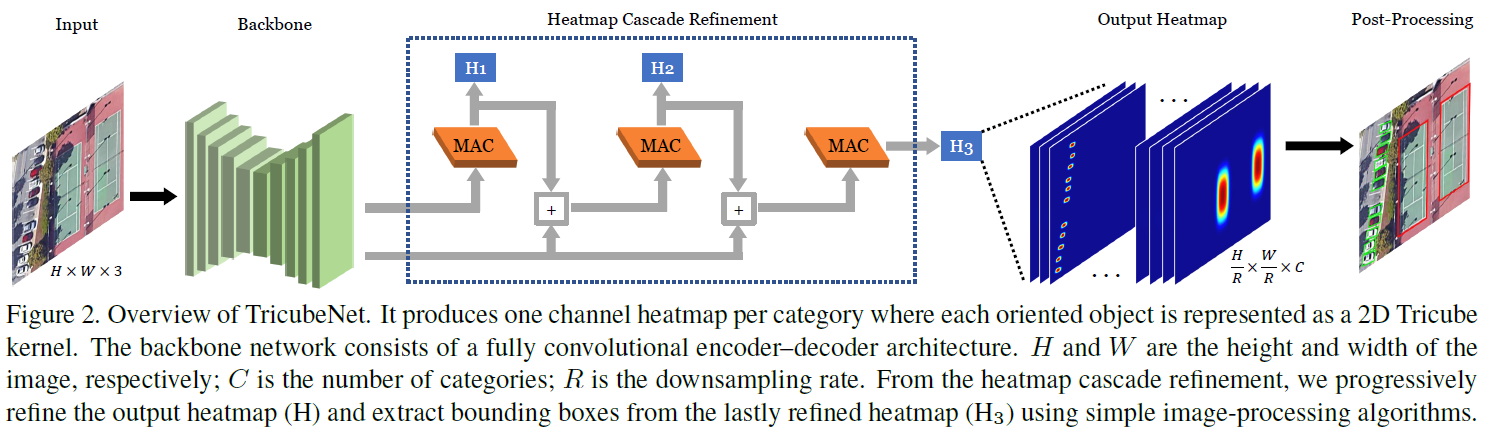

Title: TricubeNet: 2D Kernel-Based Object Representation for Weakly-Occluded Oriented Object Detection (WACV 2022)

Authors: Beomyoung Kim (NAVER CLOVA), Janghyeon Lee (LG AI Research), Sihaeng Lee (LG AI Research), Doyeon Kim (KAIST), Junmo Kim (KAIST)

Abstract: We present a novel approach for oriented object detection, named TricubeNet, which localizes oriented objects using visual cues (i.e., heatmaps) instead of regressing oriented box offsets. We represent each object as a 2D Tricube kernel and extract bounding boxes using simple image-processing algorithms. Our approach is able to (1) obtain well-arranged boxes from visual cues, (2) solve the angle discontinuity problem, and (3) reduce computational complexity thanks to its anchor-free modeling. To further boost performance, we propose several effective techniques: a size-invariant loss, false-detection reduction, rotation-invariant feature extraction, and heatmap refinement. To demonstrate the effectiveness of TricubeNet, we experiment on various weakly-occluded oriented object detection tasks: detection in aerial images, densely packed object images, and text images. The extensive experimental results show that TricubeNet is quite effective for oriented object detection.
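A 2D tricube heatmap for a single oriented box can be sketched as follows. The separable form, a product of 1D tricube kernels (1 - |u|^3)^3 along the box's two axes scaled by the half-width and half-height, is an assumption for illustration and not necessarily TricubeNet's exact parameterization; the box-extraction step is omitted and the function names are hypothetical.

```python
import math


def tricube(u):
    """1D tricube kernel: (1 - |u|^3)^3 for |u| < 1, else 0."""
    a = abs(u)
    return (1.0 - a ** 3) ** 3 if a < 1.0 else 0.0


def render_oriented_kernel(height, width, cx, cy, box_w, box_h, angle_rad):
    """Heatmap of one oriented box as a separable 2D tricube kernel.

    Each pixel offset from the center (cx, cy) is rotated into the box frame,
    normalized by the half-width and half-height, and the two 1D kernels
    are multiplied, giving 1.0 at the center and 0.0 on or outside the box.
    """
    cos_t, sin_t = math.cos(angle_rad), math.sin(angle_rad)
    heat = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = x - cx, y - cy
            u = (dx * cos_t + dy * sin_t) / (box_w / 2)   # along the box width
            v = (-dx * sin_t + dy * cos_t) / (box_h / 2)  # along the box height
            heat[y][x] = tricube(u) * tricube(v)
    return heat


heat = render_oriented_kernel(9, 9, cx=4, cy=4, box_w=6, box_h=2, angle_rad=0.0)
print(heat[4][4])  # 1.0 at the box center
print(heat[5][4])  # 0.0: one pixel below the center is already on the box edge
```

The peak marks the box center and the support of the kernel matches the rotated box, which is why simple image processing on the heatmap can recover oriented boxes without regressing angle offsets.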

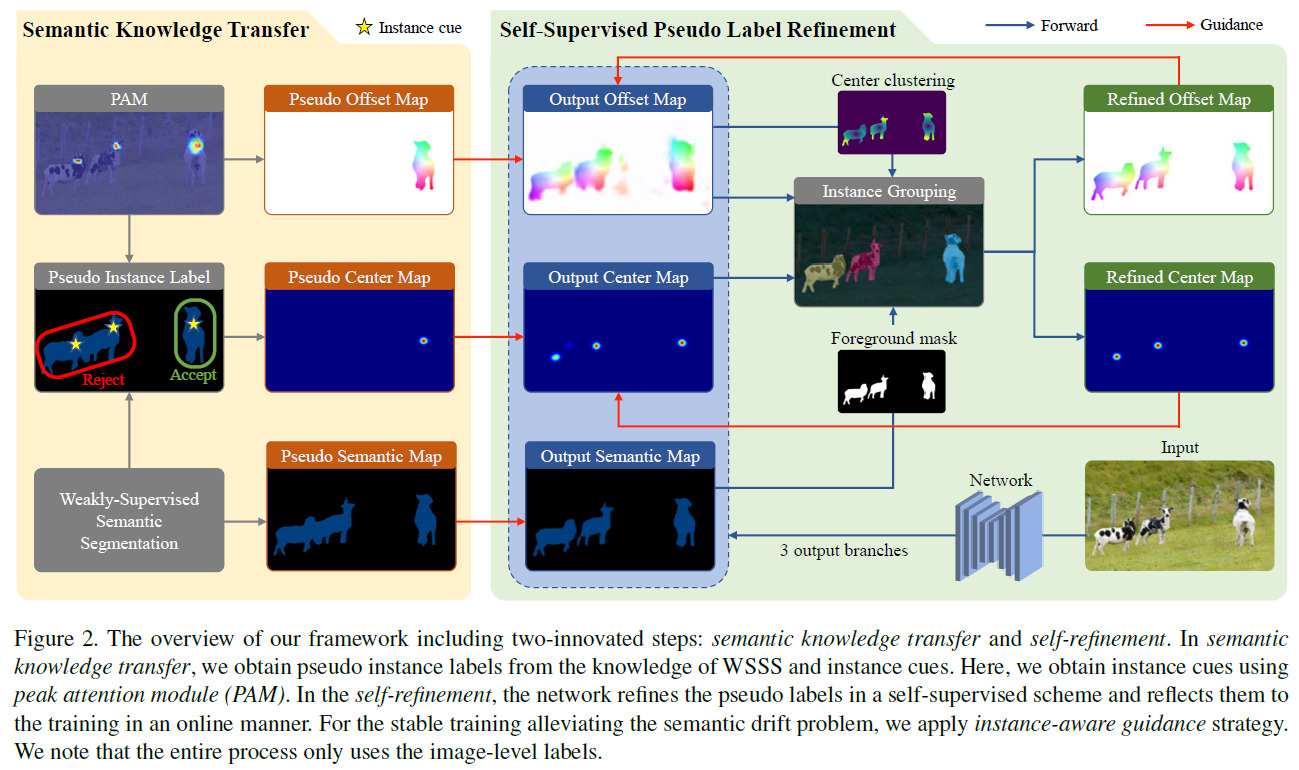

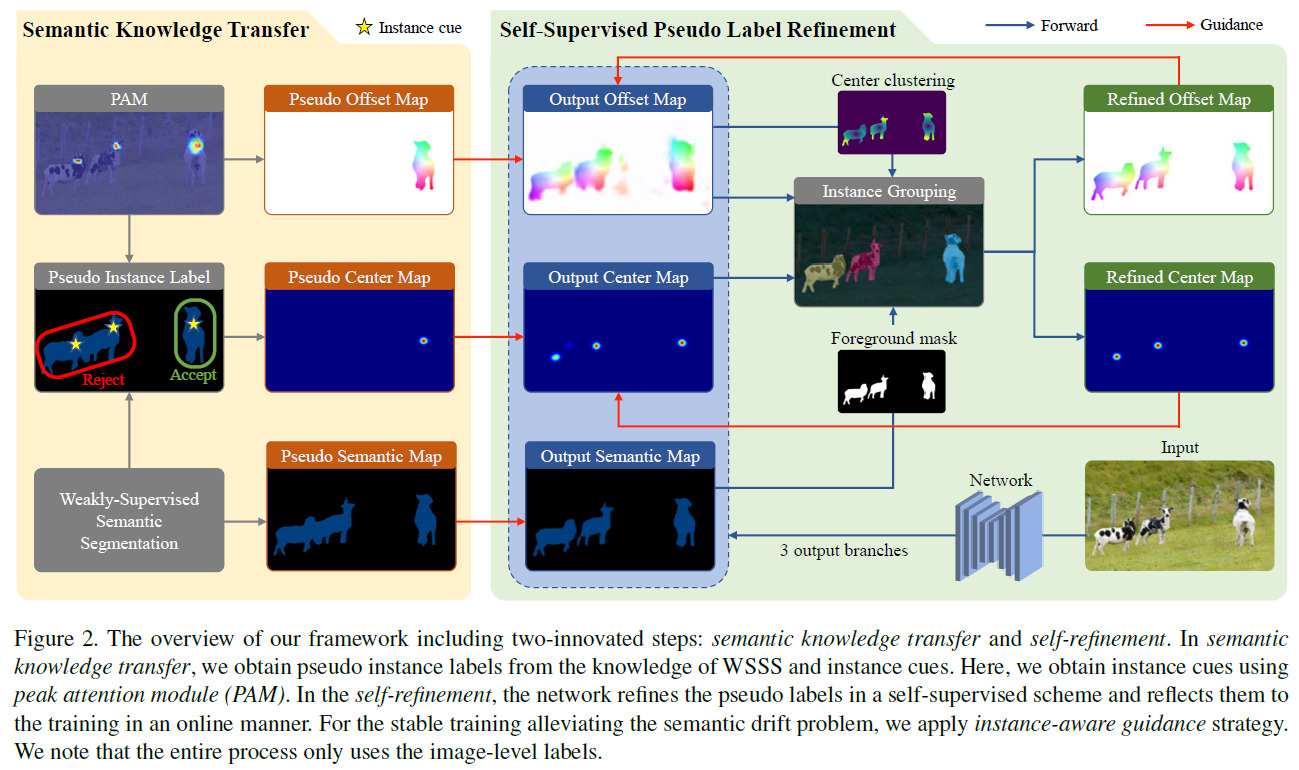

Title: Beyond Semantic to Instance Segmentation: Weakly-Supervised Instance Segmentation via Semantic Knowledge Transfer and Self-Refinement (CVPR 2022)

Authors: Beomyoung Kim (NAVER CLOVA), Youngjoon Yoo (NAVER CLOVA, NAVER AI Lab), Chae Eun Rhee (Inha University), Junmo Kim (KAIST)

Abstract: Weakly-supervised instance segmentation (WSIS) has been considered a more challenging task than weakly-supervised semantic segmentation (WSSS). Compared to WSSS, WSIS requires instance-wise localization, which is difficult to extract from image-level labels. To tackle this problem, most WSIS approaches use off-the-shelf proposal techniques that require pre-training with instance- or object-level labels, deviating from the fundamental definition of the fully image-level supervised setting. In this paper, we propose a novel approach with two innovative components. First, we propose a semantic knowledge transfer that obtains pseudo instance labels by transferring the knowledge of WSSS to WSIS while eliminating the need for off-the-shelf proposals. Second, we propose a self-refinement method that refines the pseudo instance labels in a self-supervised scheme and uses the refined labels for training in an online manner. Here, we discover an erroneous phenomenon, semantic drift, which occurs when instances missing from the pseudo instance labels are categorized as the background class. Semantic drift causes confusion between background and instance during training and consequently degrades segmentation performance. We term this the semantic drift problem and show that our proposed self-refinement method eliminates it. Extensive experiments on PASCAL VOC 2012 and MS COCO demonstrate the effectiveness of our approach, which achieves considerable performance without off-the-shelf proposal techniques.

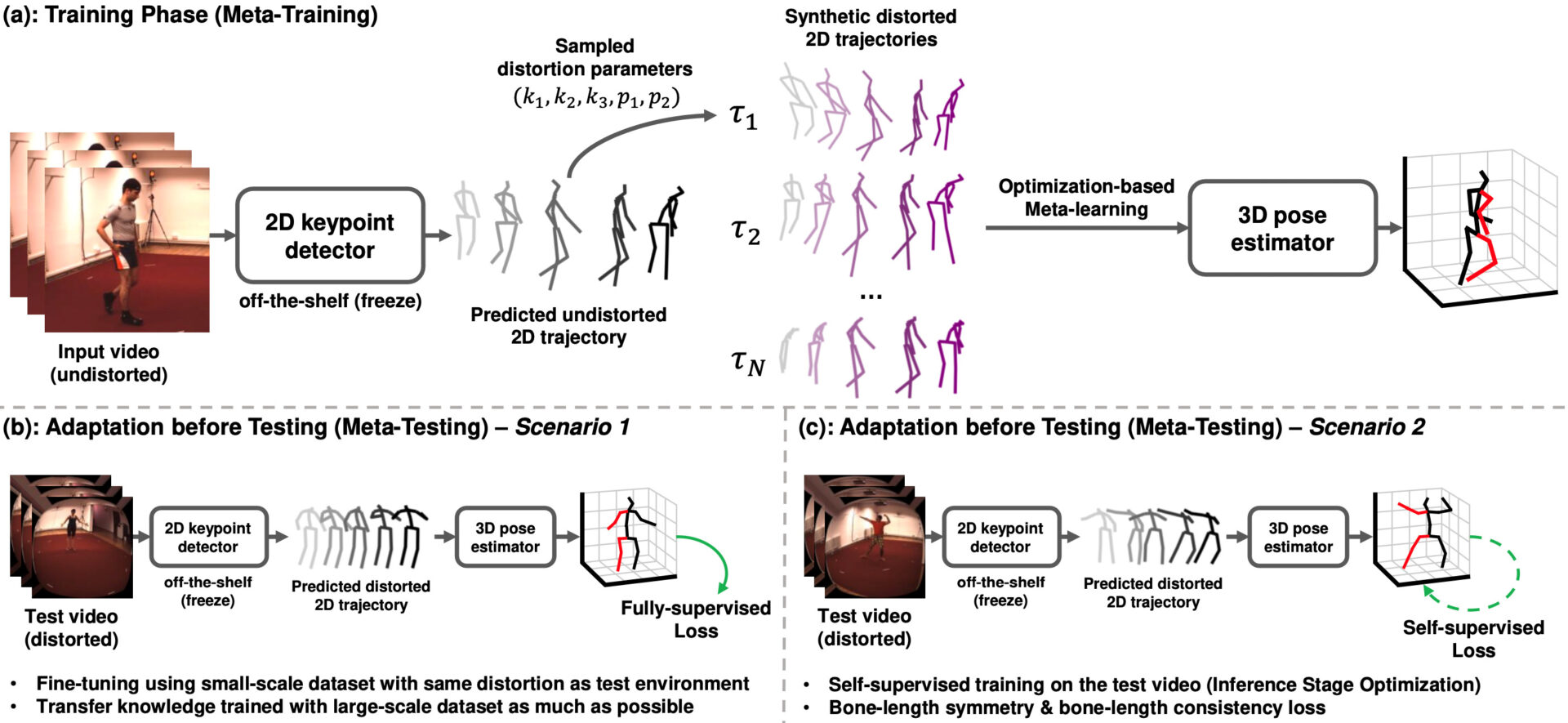

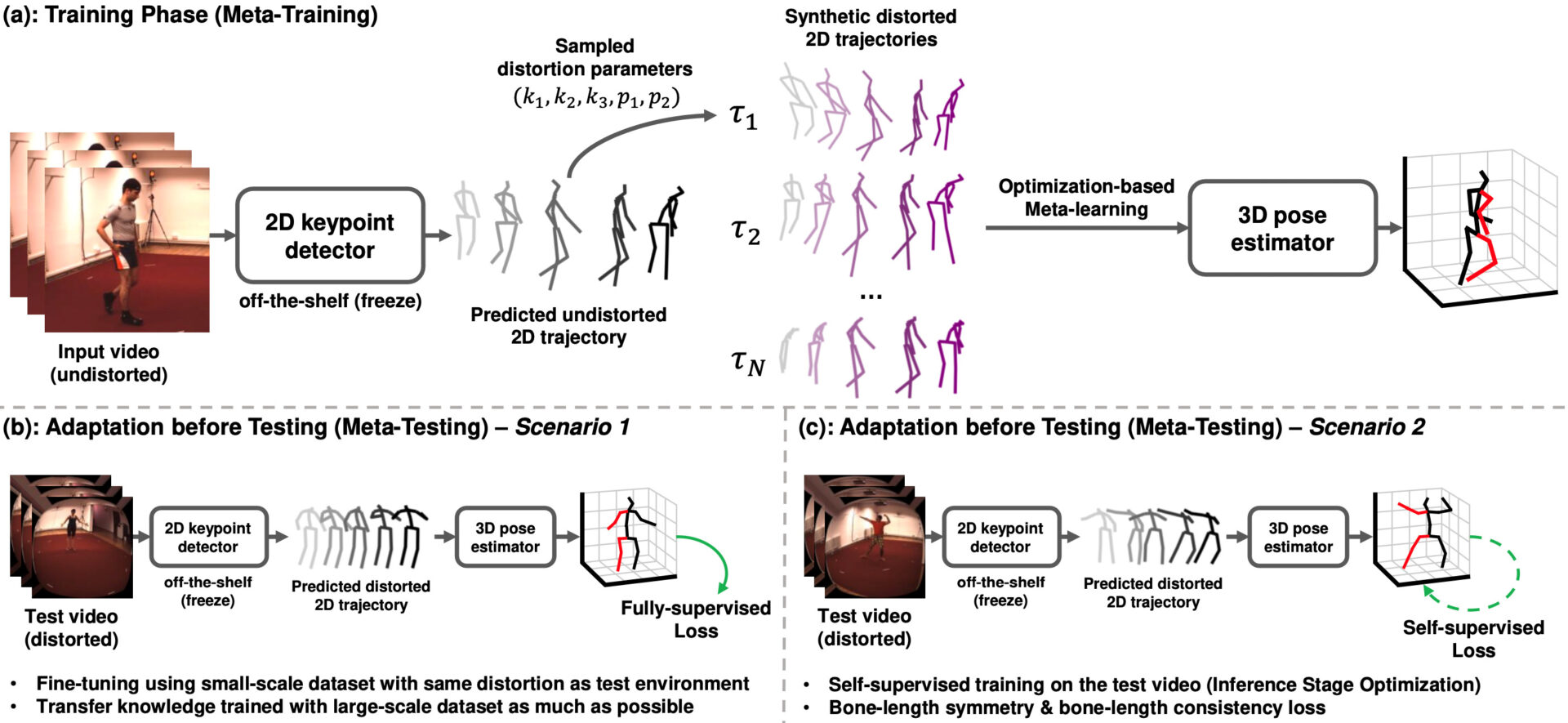

Title: Camera Distortion-aware 3D Human Pose Estimation in Video with Optimization-based Meta-Learning

Authors: Hanbyel Cho, Yooshin Cho, Jaemyung Yu, and Junmo Kim

Abstract: In this paper, we propose a simple yet effective model for 3D human pose estimation in video that can quickly adapt to any distortion environment by utilizing MAML, a representative optimization-based meta-learning algorithm. We consider a sequence of 2D keypoints in a particular distortion as a single task of MAML. Because no large-scale dataset exists for distorted environments, we propose an efficient method to generate synthetic distorted data from undistorted 2D keypoints. For evaluation, we assume two practical testing situations, depending on whether a motion capture sensor is available. In particular, we propose Inference Stage Optimization using bone-length symmetry and consistency. Extensive evaluation shows that our proposed method successfully adapts to various degrees of distortion in the testing phase and outperforms existing state-of-the-art approaches.
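The synthetic-data idea can be sketched with a standard polynomial radial distortion model, x_d = x * (1 + k1 * r^2 + k2 * r^4). Whether the paper uses this particular model is an assumption; the function name and coefficient values below are hypothetical. Under this view, each sampled (k1, k2) setting would define one distortion environment, i.e., one MAML task.

```python
def distort_keypoints(points, k1, k2=0.0):
    """Apply polynomial radial distortion to normalized 2D keypoints.

    points: (x, y) pairs in normalized image coordinates with the principal
    point at the origin. Each point is scaled by 1 + k1 * r^2 + k2 * r^4,
    where r is its distance from the origin (k1 < 0: barrel, k1 > 0: pincushion).
    """
    out = []
    for x, y in points:
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        out.append((x * scale, y * scale))
    return out


# One undistorted 2D keypoint sequence (2 frames, 2 joints each, toy values)
# turned into two synthetic environments with different distortion strength.
sequence = [[(0.0, 0.0), (0.5, 0.5)], [(0.1, -0.2), (0.6, 0.4)]]
tasks = {k1: [distort_keypoints(frame, k1) for frame in sequence] for k1 in (-0.2, 0.2)}
print(tasks[-0.2][0][1])  # barrel distortion pulls the off-center joint inward
print(tasks[0.2][0][1])   # pincushion distortion pushes it outward
```

Since the distortion is applied to 2D keypoints rather than images, many distortion environments can be generated cheaply from an existing undistorted dataset, while the paired 3D ground truth stays unchanged.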