Title: Machine-Learning-Based Read Reference Voltage Estimation for NAND Flash Memory Systems Without Knowledge of Retention Time

Authors: Hyemin Choe, Jeongju Jee, Seung-Chan Lim, Sung Min Joe, Il Han Park, and Hyuncheol Park

Journal: IEEE Access (published: September 2020)

To achieve a low error rate in NAND flash memory, reliable reference voltages should be updated based on accurate knowledge of the program/erase (P/E) cycles and the retention time, because both severely distort the threshold voltage distribution of the memory cells. Because of its sensitivity to temperature, however, the retention time cannot be known exactly by a flash memory controller, which makes it challenging to estimate accurate read reference voltages in practice.

In addition, it is difficult to characterize the relation between the channel impairments and the optimal read reference voltages in general. Therefore, we propose a machine-learning-based read reference voltage estimation framework for NAND flash memory without the knowledge of retention time.

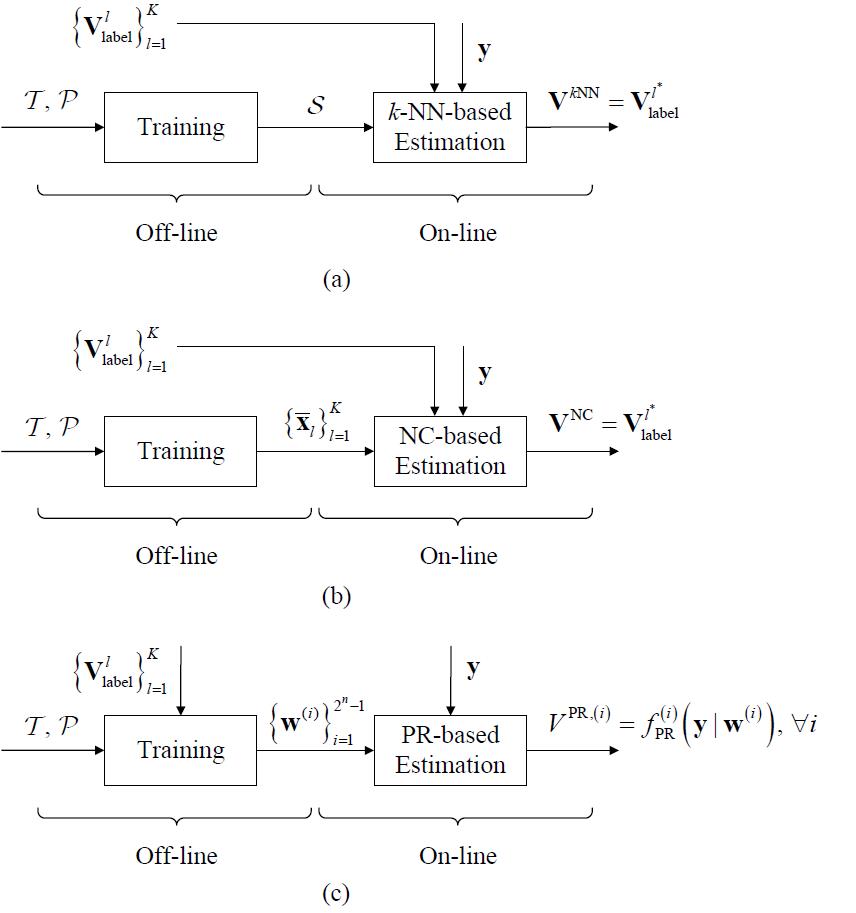

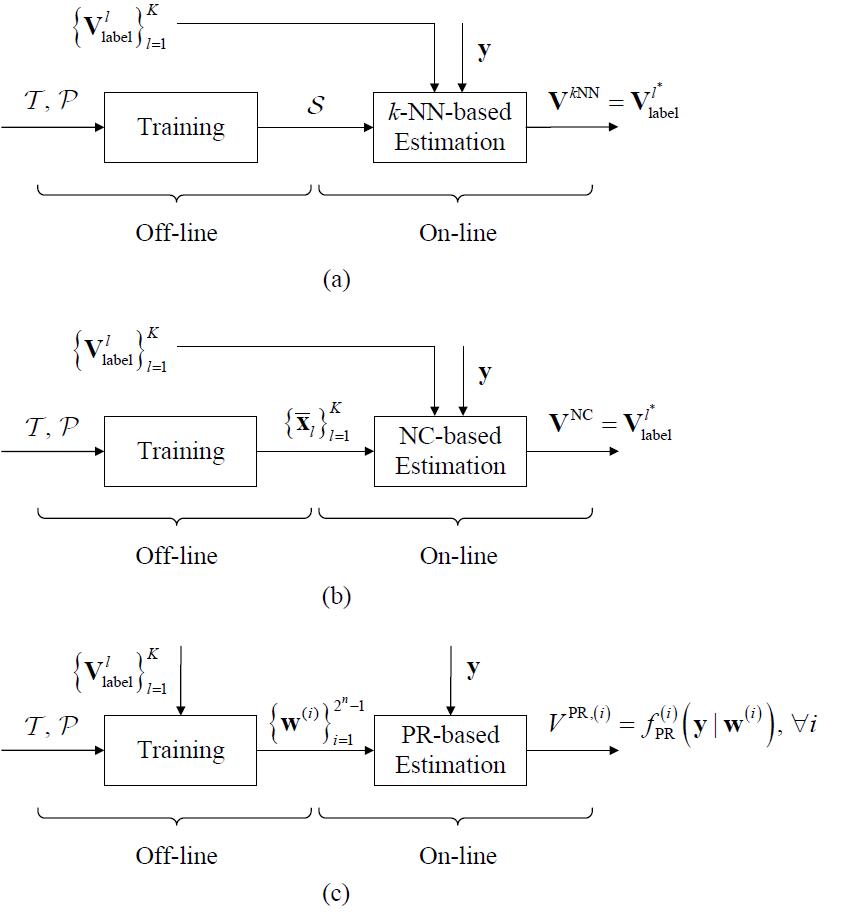

In the off-line training phase, to define the input features of the proposed framework, we derive alternative information that stands in for the unknown retention time and is obtained by sensing and decoding the data in one wordline. For the on-line estimation phase, we propose three estimation schemes: 1) k-nearest neighbors (k-NN)-based, 2) nearest-centroid (NC)-based, and 3) polynomial regression (PR)-based estimation. With these schemes, an unlabeled input feature is mapped in the on-line estimation phase to a pre-assigned class label, namely the label read reference voltages.
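The three on-line schemes can be illustrated with a minimal sketch. It is not the authors' implementation: the two-dimensional features, the three-entry table `label_volts`, the synthetic training set, and the choice to snap the PR output to the nearest label voltage are all assumptions made for illustration, and class labels are assumed to be the integers 0 to C-1.

```python
import numpy as np

def knn_estimate(x, feats, labels, k=3):
    """k-NN: majority vote among the k training features closest to x."""
    dist = np.linalg.norm(feats - x, axis=1)
    nearest = labels[np.argsort(dist)[:k]]
    return int(np.bincount(nearest).argmax())

def fit_centroids(feats, labels):
    """NC training: mean feature vector per class (labels assumed 0..C-1)."""
    return np.stack([feats[labels == c].mean(axis=0) for c in np.unique(labels)])

def nc_estimate(x, centroids):
    """NC: index of the centroid closest to x."""
    return int(np.linalg.norm(centroids - x, axis=1).argmin())

def fit_pr(feats, volts, degree=2):
    """PR training: least-squares polynomial from the first feature to voltage."""
    return np.polyfit(feats[:, 0], volts, degree)

def pr_estimate(x, coeffs, label_volts):
    """PR: evaluate the polynomial, then snap to the nearest label voltage
    (the snapping step is an assumption of this sketch)."""
    v = np.polyval(coeffs, x[0])
    return int(np.abs(label_volts - v).argmin())

# Hypothetical off-line training data: three classes, each tied to one
# label read reference voltage (values are placeholders, not measured data).
rng = np.random.default_rng(0)
label_volts = np.array([2.0, 1.9, 1.8])
centers = np.array([[0.1, 0.2], [0.5, 0.6], [0.9, 1.0]])
labels = np.repeat(np.arange(3), 20)
feats = centers[labels] + 0.02 * rng.standard_normal((labels.size, 2))

centroids = fit_centroids(feats, labels)
coeffs = fit_pr(feats, label_volts[labels])

# On-line estimation for one unlabeled feature.
x = np.array([0.52, 0.61])
knn_class = knn_estimate(x, feats, labels)
nc_class = nc_estimate(x, centroids)
pr_class = pr_estimate(x, coeffs, label_volts)
print(label_volts[knn_class], label_volts[nc_class], label_volts[pr_class])
```

In this sketch, k-NN keeps the whole training set and computes a distance to every stored feature, NC stores only one centroid per class, and PR stores only the polynomial coefficients, so the three schemes trade storage and on-line computation differently.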

Based on simulation and analysis, we verify that the proposed framework achieves highly reliable, low-latency performance in NAND flash memory systems without knowledge of the retention time.

Figure 1. Flow charts of the read reference voltage estimation schemes: (a) k-NN-based, (b) NC-based, and (c) PR-based estimation.