EE Professor Dongsu Han’s Research Team Develops Technology to Accelerate AI Model Training in Distributed Environments Using Consumer-Grade GPUs

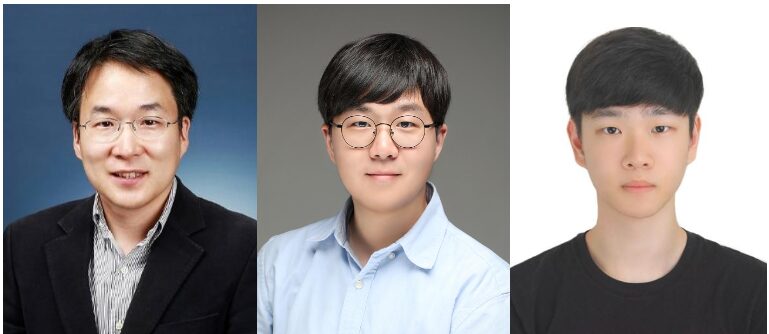

<(from left) Professor Dongsu Han, Dr. Hwijoon Lim, Ph.D. Candidate Juncheol Ye>

Professor Dongsu Han’s research team of the KAIST Department of Electrical Engineering has developed a groundbreaking technology that accelerates AI model training in distributed environments with limited network bandwidth using consumer-grade GPUs.

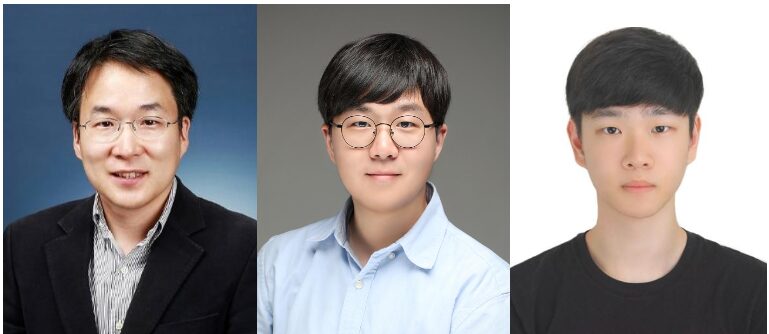

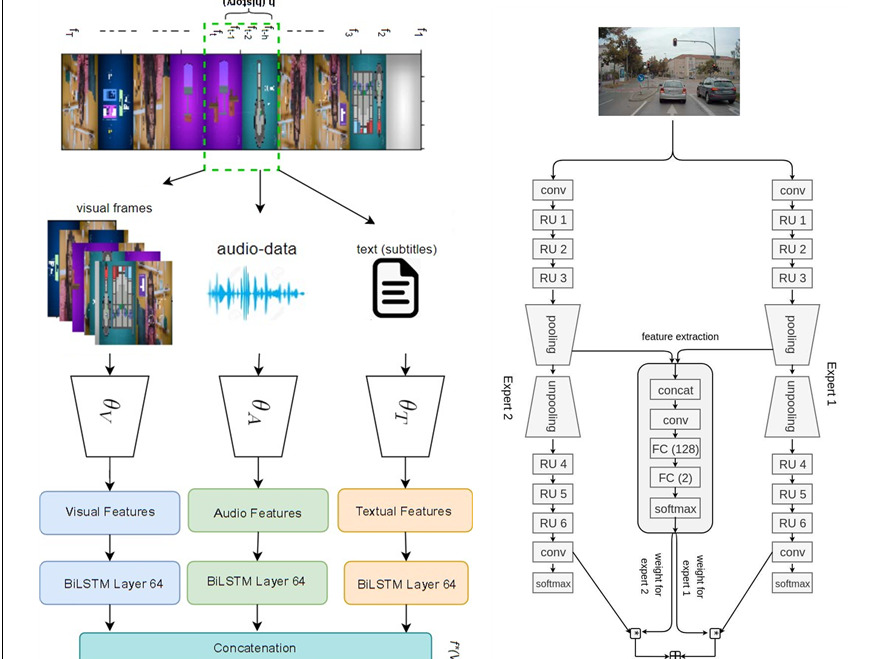

Training the latest AI models typically requires expensive infrastructure, such as high-performance GPUs costing tens of millions of won each and high-speed dedicated networks.

As a result, most researchers in academia and small to medium-sized enterprises have to rely on cheaper, consumer-grade GPUs for model training.

However, limited network bandwidth between machines makes it difficult for them to train models efficiently.

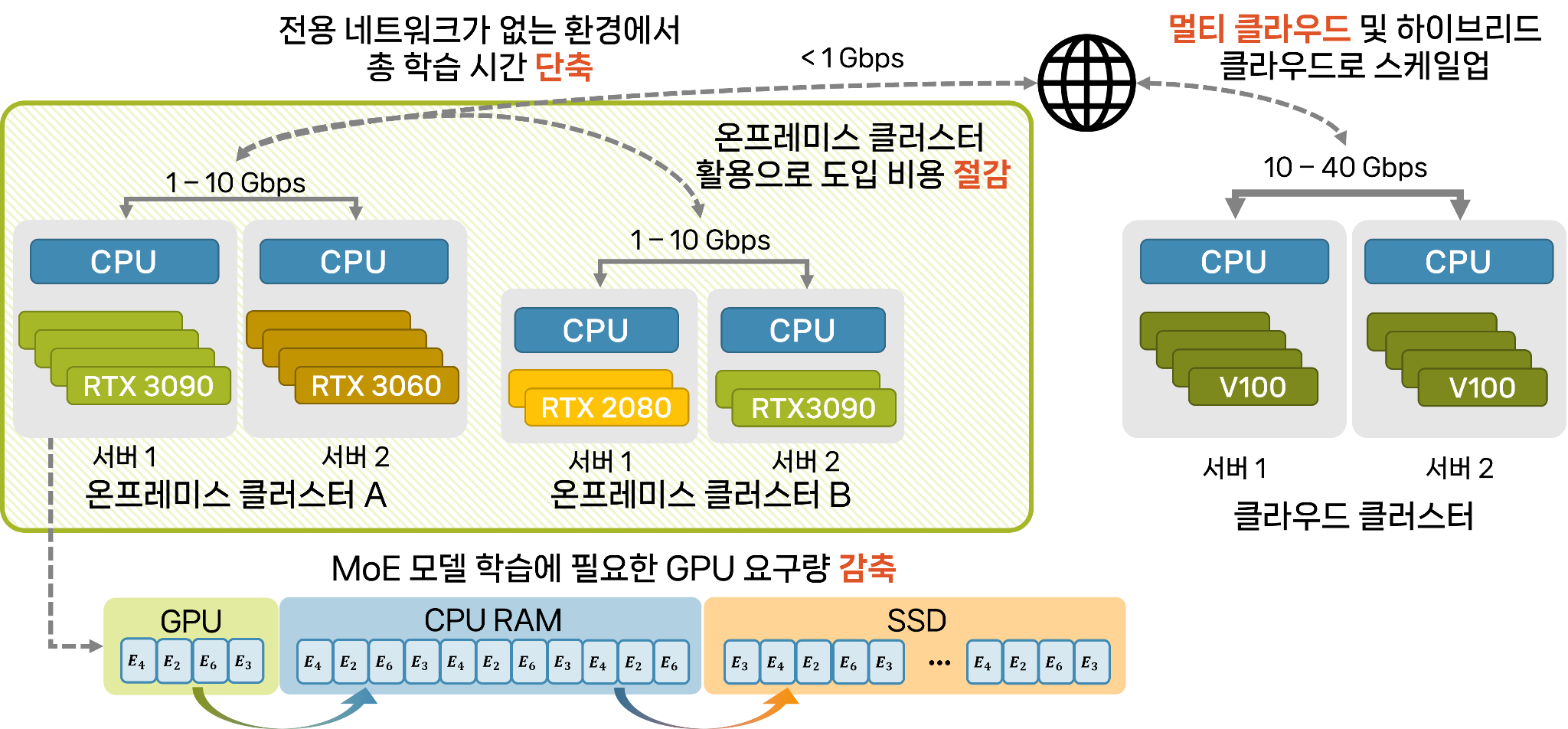

<Figure 1. Problems in Conventional Low-Cost Distributed Deep Learning Environments>

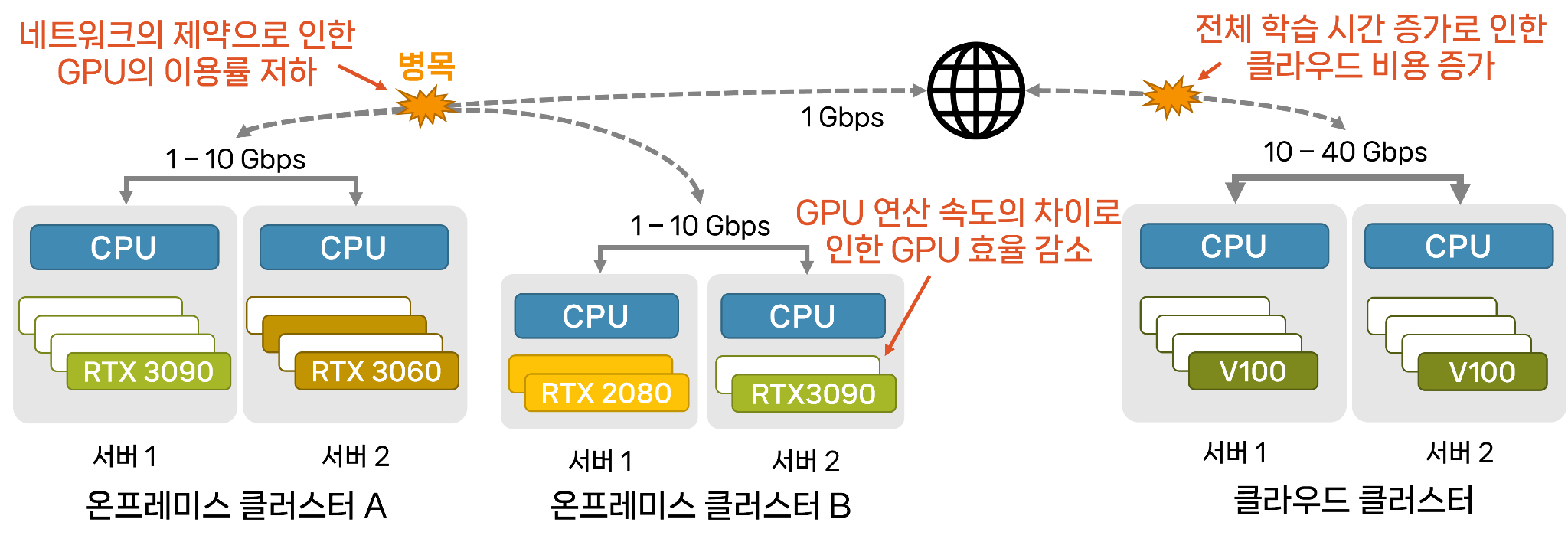

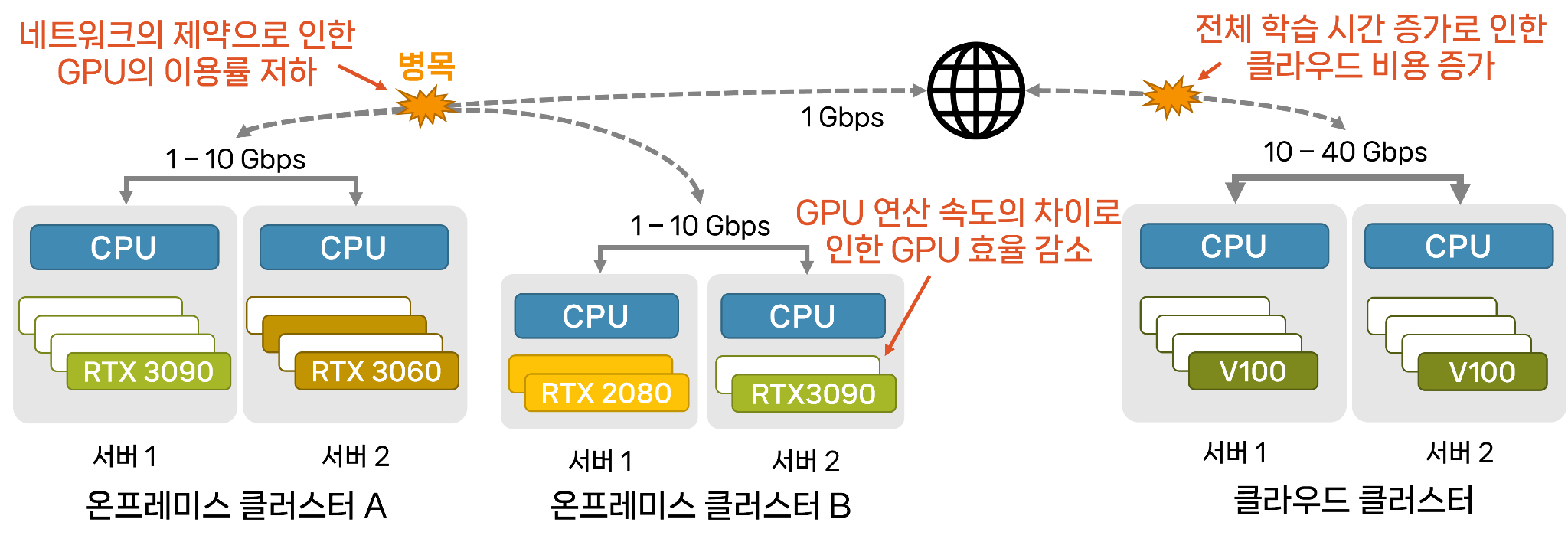

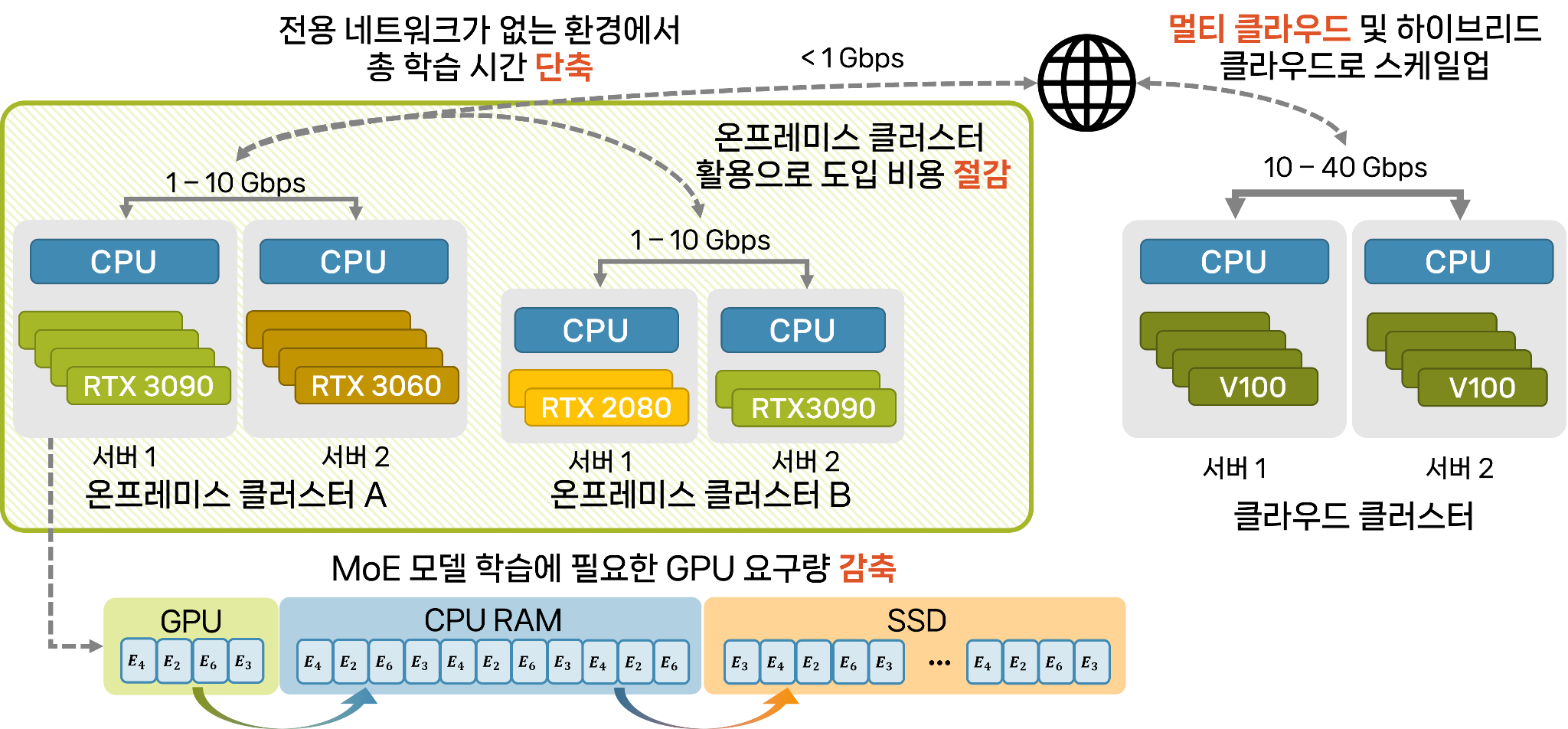

To address these issues, Professor Han’s team developed a distributed learning framework called StellaTrain.

StellaTrain accelerates model training on low-cost GPUs by integrating a pipeline that utilizes both CPUs and GPUs. It dynamically adjusts batch sizes and compression rates according to the network environment, enabling fast model training in multi-cluster and multi-node environments without the need for high-speed dedicated networks.

To maximize GPU utilization, StellaTrain offloads gradient compression and optimization to the CPU, leaving the GPU free for model computation. To make this practical, the team developed and applied a new sparse optimization technique and a cache-aware gradient compression technique that both run efficiently on CPUs.
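The general idea of compressing gradients and then applying a sparse update can be illustrated with a small sketch. This is not StellaTrain's implementation: it assumes plain top-k sparsification (keeping only the largest-magnitude gradient entries) and a basic SGD update, and the function names `topk_sparsify` and `sparse_sgd_step` are hypothetical. It runs on the CPU in pure Python and says nothing about the cache-aware layout the team describes.

```python
import heapq

def topk_sparsify(grad, ratio):
    """Keep the largest-magnitude fraction `ratio` of gradient entries.

    Assumed technique: plain top-k sparsification, used here only as an
    illustration. Returns (indices, values) for the kept entries,
    sorted by index.
    """
    k = max(1, int(len(grad) * ratio))
    top = heapq.nlargest(k, range(len(grad)), key=lambda i: abs(grad[i]))
    top.sort()
    return top, [grad[i] for i in top]

def sparse_sgd_step(params, indices, values, lr):
    """Apply an SGD update only to the parameters named in `indices`."""
    for i, g in zip(indices, values):
        params[i] -= lr * g

# Toy gradient with 8 entries; keep the 2 largest by magnitude.
grad = [0.01, -0.9, 0.05, 0.4, -0.02, 0.0, 0.7, -0.03]
params = [1.0] * len(grad)
idx, vals = topk_sparsify(grad, ratio=0.25)
sparse_sgd_step(params, idx, vals, lr=0.1)
```

Only the index and value lists would need to cross the network, and only the selected parameters are touched by the update, which is why this kind of work can be cheap enough to run on a CPU.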

The result is a seamless learning pipeline in which CPU tasks overlap with GPU computation. In addition, a dynamic optimization technique adjusts batch sizes and compression rates in real time according to network conditions, keeping GPU utilization high even when network bandwidth is limited.
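As a rough illustration of adapting compression to the network, the sketch below picks the least aggressive compression setting whose transfer still fits within one iteration of GPU compute, so that communication can stay hidden behind computation. The cost model (transfer time = gradient bytes × kept fraction ÷ bandwidth), the candidate ratios, and the name `pick_compression_ratio` are all assumptions made for this example. It is not the optimizer StellaTrain uses, and it leaves out batch-size tuning and the overhead of sending sparse indices.

```python
def pick_compression_ratio(grad_bytes, bandwidth_bytes_per_s, compute_s,
                           candidates=(1.0, 0.5, 0.1, 0.01)):
    """Return the largest kept fraction whose transfer fits inside compute_s.

    Hypothetical cost model for illustration only. Falls back to the
    smallest candidate when no setting fits.
    """
    for ratio in sorted(candidates, reverse=True):
        transfer_s = grad_bytes * ratio / bandwidth_bytes_per_s
        if transfer_s <= compute_s:
            return ratio
    return min(candidates)

# 100 MB of gradients and 0.2 s of GPU compute per iteration.
fast = pick_compression_ratio(100e6, 1.25e9, 0.2)   # roughly a 10 Gbit/s link
slow = pick_compression_ratio(100e6, 12.5e6, 0.2)   # roughly a 100 Mbit/s link
```

On the fast link the uncompressed gradients already fit within the compute window, so no compression is chosen. On the slow link only the most aggressive candidate fits. Re-running this choice as measured bandwidth changes is one simple way to approximate real-time adjustment.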

<Figure 2. Overview of the StellaTrain Learning Pipeline>

Through these innovations, StellaTrain significantly improves the speed of distributed model training in low-cost multi-cloud environments, training up to 104 times faster than the existing PyTorch DistributedDataParallel (DDP).

Professor Han’s research team has paved the way for efficient AI model training without the need for expensive data center-grade GPUs and high-speed networks. This breakthrough is expected to greatly aid AI research and development in resource-constrained environments, such as academia and small to medium-sized enterprises.

Professor Han emphasized, “KAIST is demonstrating leadership in the AI systems field in South Korea.” He added, “We will continue active research to implement large language model (LLM) training, previously considered the domain of major IT companies, in more affordable computing environments. We hope this research will serve as a critical stepping stone toward that goal.”

The research team included Dr. Hwijoon Lim and Ph.D. candidate Juncheol Ye from KAIST, as well as Professor Sangeetha Abdu Jyothi from UC Irvine. The findings were presented at ACM SIGCOMM 2024, the premier international conference in the field of computer networking, held from August 4 to 8 in Sydney, Australia (Paper title: Accelerating Model Training in Multi-cluster Environments with Consumer-grade GPUs).

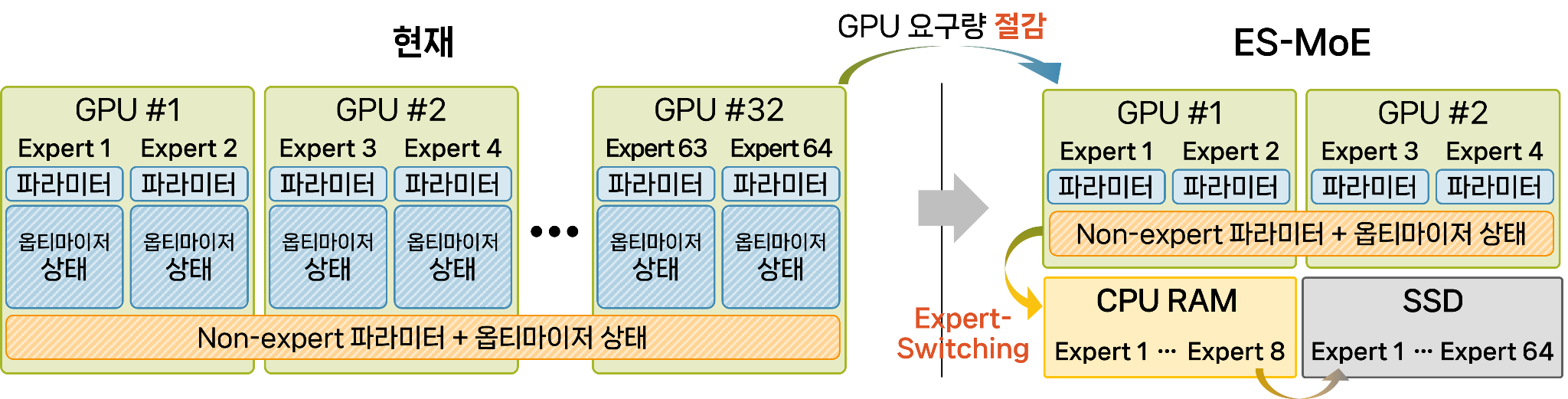

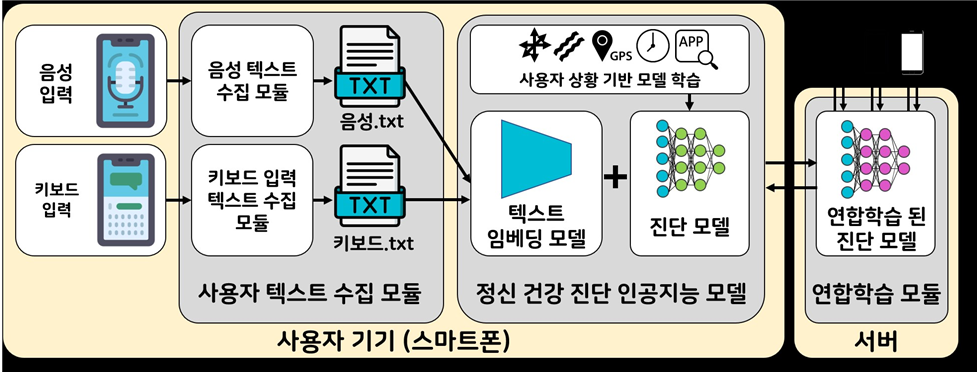

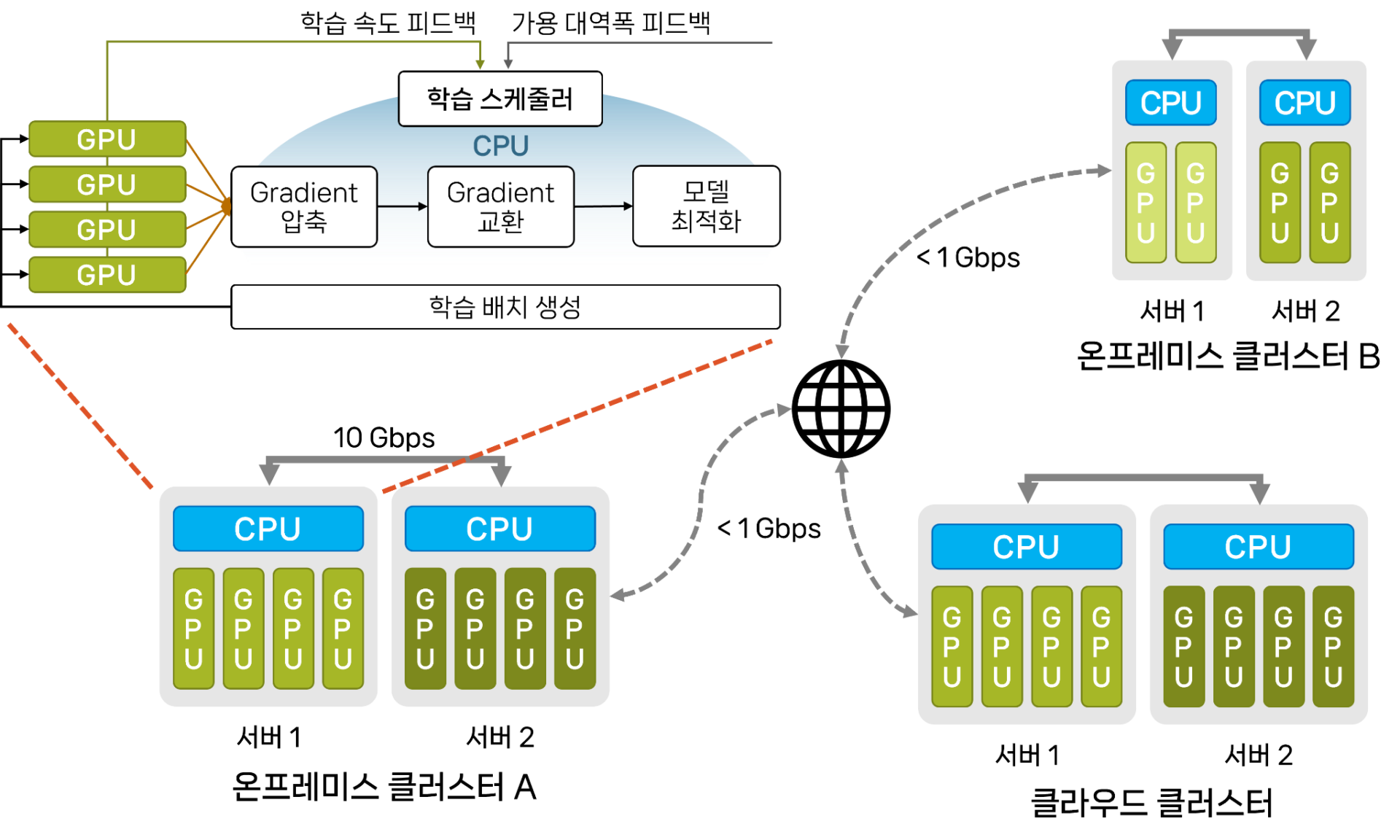

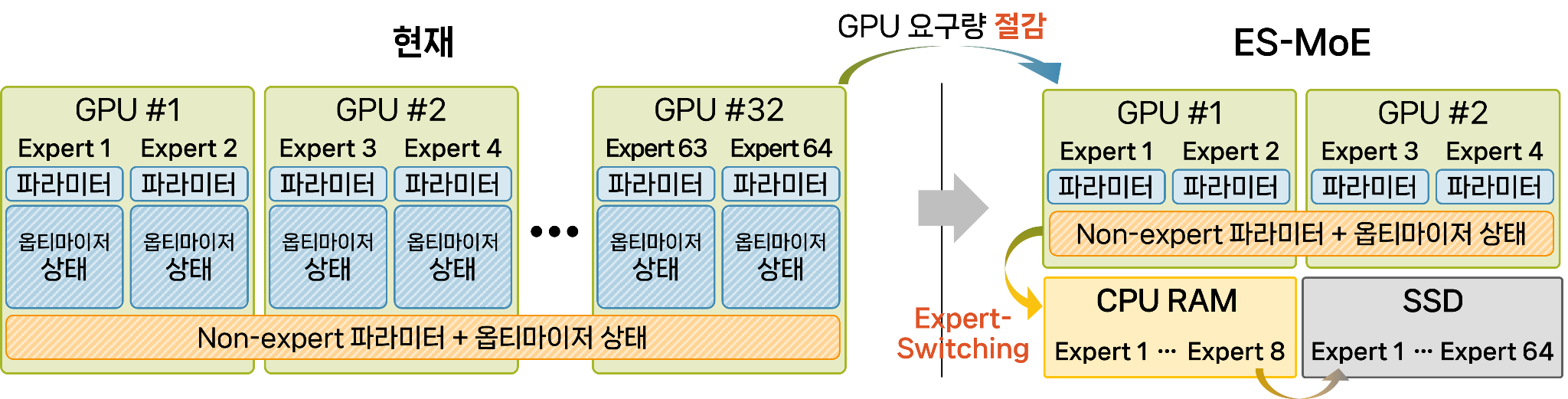

Meanwhile, Professor Han’s team has continued to advance research in the AI systems field, presenting ES-MoE, a framework that accelerates Mixture of Experts (MoE) model training, at ICML 2024 in Vienna, Austria.

By overcoming GPU memory limitations, ES-MoE significantly improves the scalability and efficiency of large-scale MoE model training, enabling fine-tuning of a 15-billion-parameter language model using only four GPUs. This achievement opens up the possibility of effectively training large-scale AI models with limited computing resources.

<Figure 3. Overview of the ES-MoE Framework>

<Figure 4. Professor Dongsu Han’s research team has enabled AI model training in low-cost computing environments, even with limited or no high-performance GPUs, through their research on StellaTrain and ES-MoE.>