Ki Hyun Tae, a Ph.D. student of Prof. Steven Euijong Whang in the EE department, proposed a selective data acquisition framework for accurate and fair machine learning models.

As machine learning becomes widespread in our everyday lives, making AI more responsible is becoming critical. Beyond high accuracy of AI, the key objectives of responsible AI include fairness, robustness, explainability, and more. In particular, companies including Google, Microsoft, and IBM are emphasizing responsible AI.

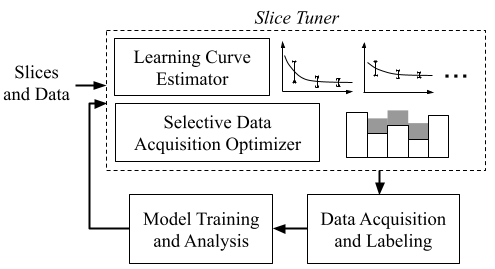

Among the objectives, this work focuses on model fairness. Based on the key insight that the root cause of unfairness is in biased training data, Ki Hyun proposed Slice Tuner, a selective data acquisition framework that optimizes both model accuracy and fairness. Slice Tuner efficiently and reliably manages learning curves, which are used to estimate model accuracy given more data, and utilizes them to provide the best data acquisition strategy for training an accurate and fair model.
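To make the role of learning curves concrete, here is a minimal sketch of using a fitted curve to decide which data slice to acquire more examples for. It assumes a power-law form, loss = b * n^(-a), which is a common choice for learning curves but is an assumption here. The slice names, the loss measurements, and the helper functions are all hypothetical and are not taken from Slice Tuner itself.

```python
import math

def fit_power_law(sizes, losses):
    """Least-squares fit of loss = b * n**(-a) in log-log space."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(v) for v in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return -slope, math.exp(mean_y - slope * mean_x)  # (a, b)

def estimated_gain(a, b, current_size, extra):
    """Estimated loss reduction from acquiring `extra` more examples."""
    return b * current_size ** (-a) - b * (current_size + extra) ** (-a)

# Hypothetical validation losses measured on growing subsets of two slices.
observations = {
    "slice_A": ([100, 200, 400, 800], [0.50, 0.40, 0.32, 0.256]),
    "slice_B": ([1000, 2000, 4000, 8000], [0.30, 0.27, 0.243, 0.2187]),
}

gains = {}
for name, (sizes, losses) in observations.items():
    a, b = fit_power_law(sizes, losses)
    gains[name] = estimated_gain(a, b, sizes[-1], extra=200)

# The slice with the steeper remaining curve benefits more from new data.
best_slice = max(gains, key=gains.get)
```

In this toy setup the smaller slice has the steeper curve, so the same acquisition budget is estimated to reduce its loss more than that of the larger slice.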

The research team believes that Slice Tuner is an important first step towards realizing responsible AI starting from data collection. This work was presented at ACM SIGMOD (International Conference on Management of Data) 2021, a top database conference.

For more details, please refer to the links below.

Figure 1. Slice Tuner architecture

https://arxiv.org/abs/2003.04549

https://docs.google.com/presentation/d/1thnn2rEvTtcCbJc8s3TnHQ2IEDBsZOe66-o-u4Wb3y8/edit?usp=sharing

https://youtu.be/QYEhURcd4u4?list=PL3xUNnH4TdbsfndCMn02BqAAgGB0z7cwq

Professors Steven Euijong Whang and Changho Suh’s research team in the School of Electrical Engineering has developed a new batch selection technique for fair artificial intelligence (AI) systems. The research was led by Ph.D. student Yuji Roh (advisor: Steven Euijong Whang) and was conducted in collaboration with Professor Kangwook Lee from the Department of Electrical and Computer Engineering at the University of Wisconsin-Madison.

AI technologies are now widespread and influence people’s everyday lives. Unfortunately, researchers have recently observed that machine learning models may discriminate against specific demographics or individuals. As a result, there is a growing social consensus that AI systems need to be fair.

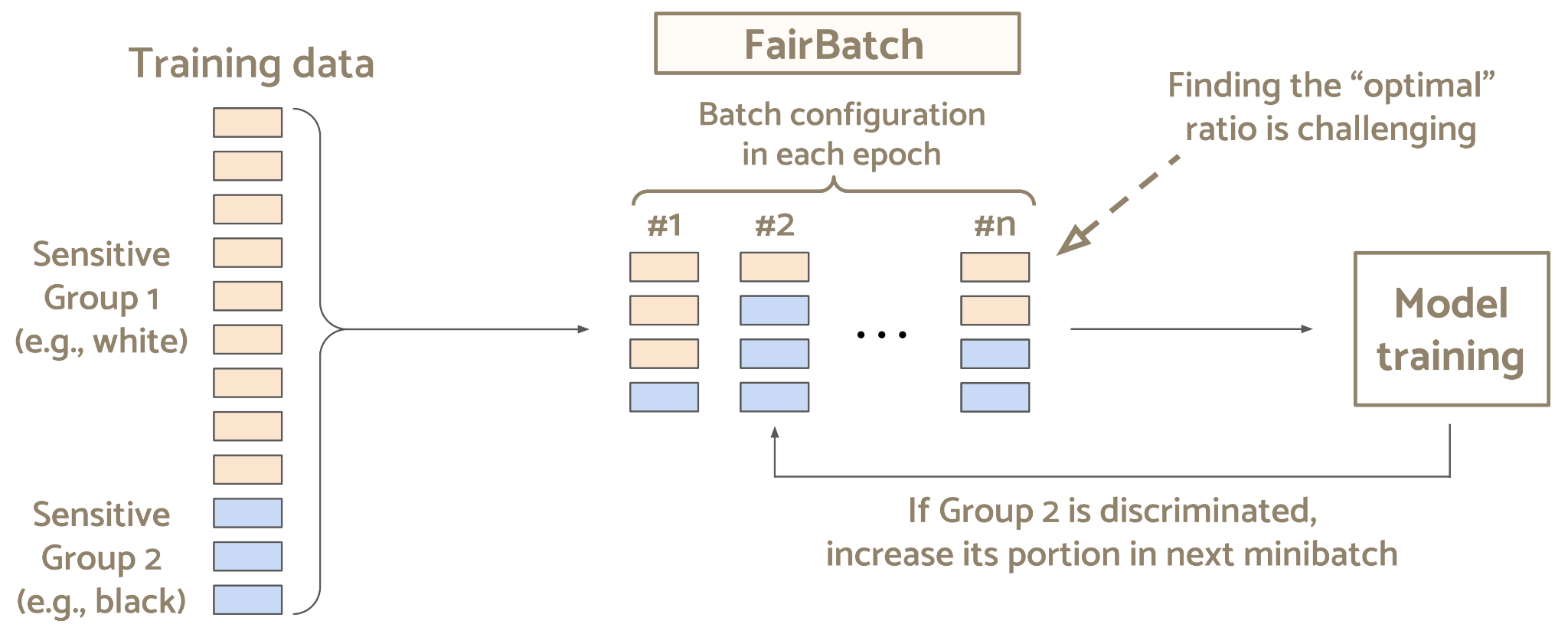

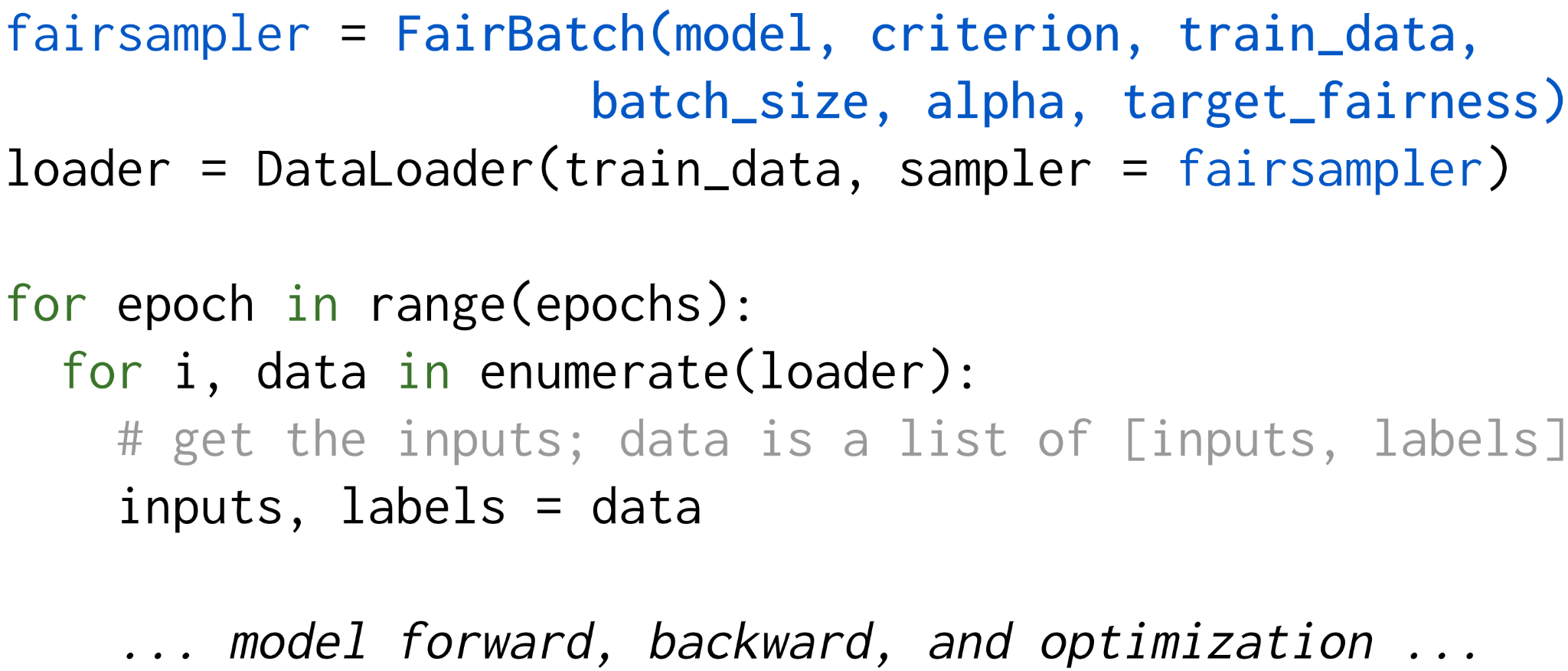

The research team proposes FairBatch, a new batch selection technique for building fair machine learning models. Existing fair training algorithms require significant non-trivial modifications either in the training data or model architecture. In contrast, FairBatch effectively achieves high accuracy and fairness with only a single-line change of code in the batch selection, which enables FairBatch to be easily deployed in various applications. FairBatch’s key approach is solving a bi-level optimization for jointly achieving accuracy and fairness.
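The idea of adjusting batch composition between training steps can be illustrated with a toy sketch. It assumes two groups and a simple sign-based rule that shifts the batch share toward the group with the higher loss. The function names, step size, and loss values are hypothetical; this is neither the paper's exact update rule nor the API of the released code.

```python
import random

def update_ratio(ratio_g1, loss_g0, loss_g1, step=0.05):
    """Outer step: shift batch share toward the group with higher loss."""
    if loss_g1 > loss_g0:
        ratio_g1 += step
    elif loss_g1 < loss_g0:
        ratio_g1 -= step
    return min(1.0, max(0.0, ratio_g1))

def sample_batch(idx_g0, idx_g1, ratio_g1, batch_size, rng):
    """Draw a batch whose group composition follows ratio_g1."""
    n_g1 = round(batch_size * ratio_g1)
    n_g0 = batch_size - n_g1
    return rng.choices(idx_g0, k=n_g0) + rng.choices(idx_g1, k=n_g1)

rng = random.Random(0)
idx_g0 = list(range(0, 80))    # hypothetical majority-group indices
idx_g1 = list(range(80, 100))  # hypothetical minority-group indices

ratio = 0.2  # start from the data's own group proportion
# Hypothetical per-group losses measured after the previous training step.
ratio = update_ratio(ratio, loss_g0=0.30, loss_g1=0.55)
batch = sample_batch(idx_g0, idx_g1, ratio, batch_size=20, rng=rng)
```

Here the minority group has the higher loss, so its share of the next batch grows from 20% to 25%; the model update itself (the inner step) is omitted.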

This research was presented at the International Conference on Learning Representations (ICLR) 2021, a top machine learning conference. More details are in the links below.

Figure 1. A scenario that shows how FairBatch adaptively adjusts batch ratios in model training for fairness.

Figure 2. PyTorch code for model training where FairBatch is used for batch selection. Only a single-line code change is required to replace an existing sampler with FairBatch, marked in blue.

Title: FairBatch: Batch Selection for Model Fairness

Authors: Yuji Roh (KAIST EE), Kangwook Lee (Wisconsin-Madison Electrical & Computer Engineering), Steven Euijong Whang (KAIST EE), and Changho Suh (KAIST EE)

Paper: https://openreview.net/forum?id=YNnpaAKeCfx

Source code: https://github.com/yuji-roh/fairbatch

Slides: https://docs.google.com/presentation/d/1IfaYovisZUYxyofhdrgTYzHGXIwixK9EyoAsoE1YX-w/edit?usp=sharing

The Ministry of Science and ICT (IITP) has awarded CAMEL funding for research on AI-augmented flash-based storage for self-driving cars. Specifically, CAMEL will research machine-learning algorithms that recover from all runtime and device faults observed in automobiles. Because the reliability of storage devices has a significant impact on self-driving automobiles, self-governing algorithms and fault-tolerant hardware architecture are critically important. Prof. Jung, as the sole PI, will receive around $1.6 million (USD) to develop lightweight machine-learning algorithms, hardware automation technology, and computer architecture for reliable storage and self-driving automobiles.

Sangwon Lee, Gyuyoung Park, and Myoungsoo Jung

12th USENIX Workshop on Hot Topics in Storage and File Systems (HotStorage), 2020, Poster

https://www.usenix.org/conference/hotstorage20/presentation/lee

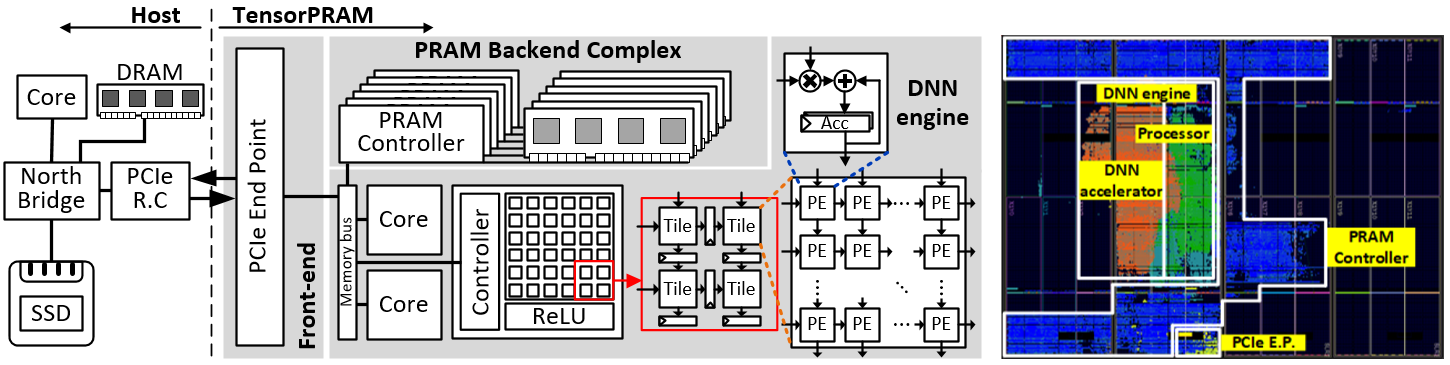

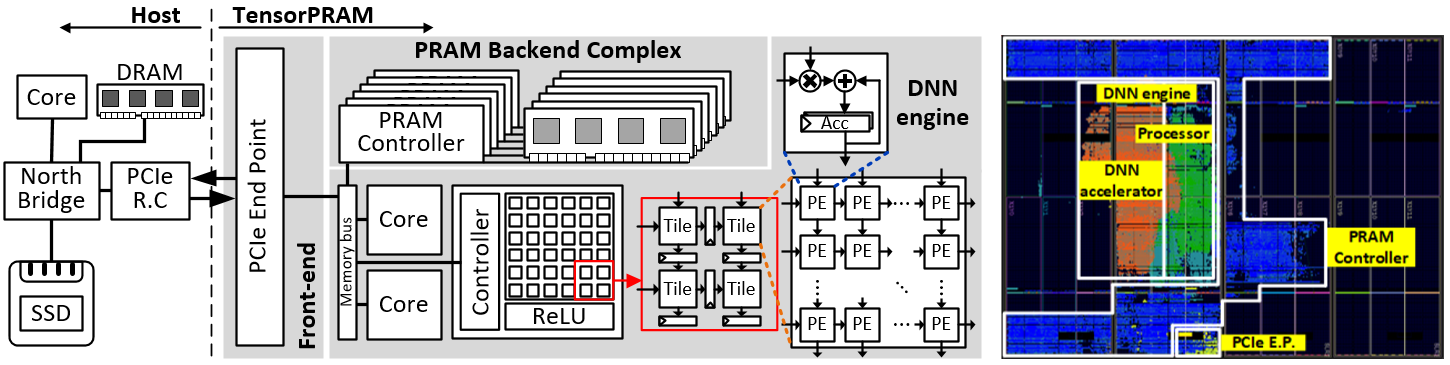

We present TensorPRAM, a scalable heterogeneous deep learning accelerator that realizes an FPGA-based domain-specific architecture and can be used to form a computational array for deep neural networks (DNNs). The current design of TensorPRAM includes systolic-array hardware, which accelerates general matrix multiplication (GEMM) and convolution in DNNs. To reduce data movement overhead between a host and the accelerator, we further replace TensorPRAM’s on-board memory with a dense but byte-addressable storage class memory (PRAM). We prototype TensorPRAM by placing all the logic of a general processor, front-end host interface module, systolic array, and PRAM controllers into a single FPGA chip, such that one or more TensorPRAMs can be attached to the host over a PCIe fabric as a scalable option. Our real-system evaluations show that TensorPRAM reduces the execution time of various DNN workloads by 99% and 48% on average compared to a processor-only accelerator and a systolic-array-only accelerator, respectively.
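For readers unfamiliar with how a systolic array computes GEMM, the following is a small software simulation of a generic output-stationary array. It is only an illustration of the general technique: the dataflow, array dimensions, and cycle count of TensorPRAM's actual hardware are not described here, and the function name is hypothetical.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    Processing element (i, j) keeps one accumulator. Operands are skewed so
    that the pair a[i][k], b[k][j] meets at PE (i, j) on cycle i + j + k.
    """
    n, k_dim, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]
    total_cycles = n + m + k_dim - 2  # last pair arrives on cycle n+m+k-3
    for t in range(total_cycles):
        for i in range(n):
            for j in range(m):
                k = t - i - j  # operand index reaching PE (i, j) this cycle
                if 0 <= k < k_dim:
                    acc[i][j] += a[i][k] * b[k][j]
    return acc, total_cycles

result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

All processing elements work in parallel within a cycle, which is why the multiplication finishes in a number of cycles that grows with the sum of the matrix dimensions rather than their product.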

Junhyeok Jang, Donghyun Gouk, Jinwoo Shin, and Myoungsoo Jung

12th USENIX Workshop on Hot Topics in Storage and File Systems (HotStorage), 2020, Poster

https://www.usenix.org/conference/hotstorage20/presentation/jang

Flash block reclaiming, called garbage collection (GC), is the major performance bottleneck and sits on the critical path in modern SSDs. Thus, both industry and academia have paid significant attention to addressing the overhead imposed by GC. To eliminate GC overhead from the users’ viewpoint, several studies perform GC during user idle times. While these scheduling methods, called background GC, are very practical, their main challenge is predicting the exact arrival time of the next I/O request.

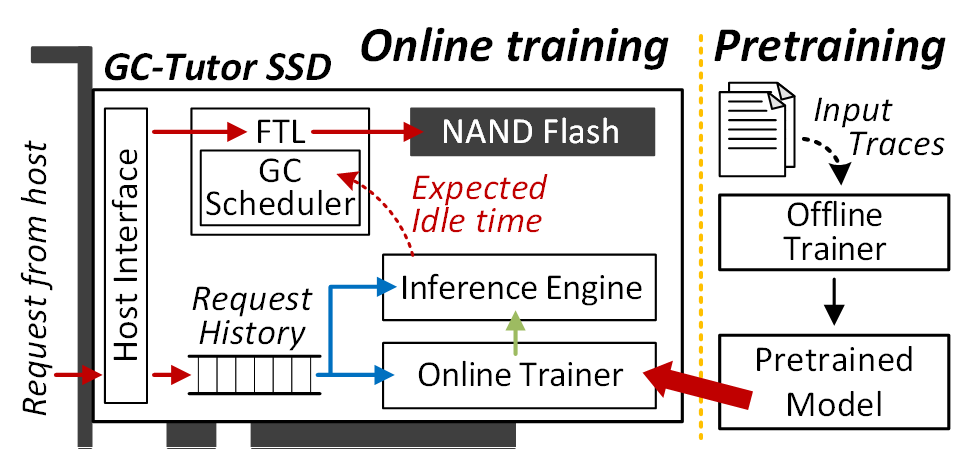

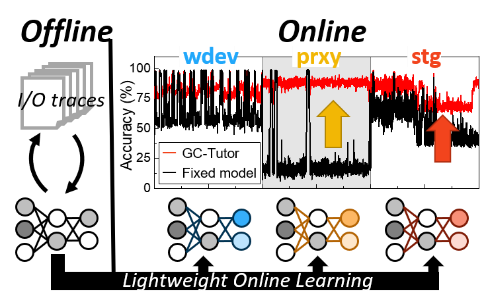

We propose GC-Tutor, a garbage collection (GC) scheduler that makes GC overhead invisible to users by precisely predicting future I/O arrival times with a deep learning algorithm. Applying conventional deep neural networks (DNNs) to predict future arrivals inside an SSD is unfortunately infeasible, as typical model training takes tens of hours or days. Instead, GC-Tutor leverages a lightweight online-learning method that learns the dynamic request arrival behavior from a small amount of runtime information within the target SSD. Our evaluation results show that GC-Tutor reduces request suspension time by 82.4% and 67.9% compared with conventional rule-based and DNN-only GC schedulers, respectively, while increasing prediction accuracy by 16.9%, on average, under diverse real workloads.
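As a rough illustration of lightweight online learning for arrival prediction, the sketch below uses a normalized least-mean-squares (LMS) filter over the last few inter-arrival gaps and runs GC only when the predicted idle gap covers its cost. The LMS filter is a stand-in chosen for simplicity, not GC-Tutor's actual model; the trace, the GC cost, and all names are hypothetical.

```python
class OnlineGapPredictor:
    """Normalized LMS predictor over the last `history` inter-arrival gaps."""

    def __init__(self, history=3, lr=0.5):
        self.history = history
        self.lr = lr
        self.weights = [1.0 / history] * history  # start as a plain average

    def predict(self, recent_gaps):
        window = recent_gaps[-self.history:]
        return sum(w * g for w, g in zip(self.weights, window))

    def update(self, recent_gaps, actual_gap):
        """One online step; returns the prediction error before the update."""
        window = recent_gaps[-self.history:]
        error = actual_gap - self.predict(window)
        norm = sum(g * g for g in window) + 1e-9
        self.weights = [w + self.lr * error * g / norm
                        for w, g in zip(self.weights, window)]
        return error

GC_COST_MS = 5.0  # hypothetical duration of one GC operation

gaps_ms = [2.0, 2.0, 10.0] * 100  # hypothetical periodic arrival trace
predictor = OnlineGapPredictor()
errors = [abs(predictor.update(gaps_ms[t - 3:t], gaps_ms[t]))
          for t in range(3, len(gaps_ms))]

def gc_fits(recent_gaps):
    """Schedule GC only if the predicted idle gap covers its cost."""
    return predictor.predict(recent_gaps) >= GC_COST_MS
```

Each update touches only a handful of weights, which is the kind of cost profile that makes learning at runtime inside a device plausible, in contrast to offline DNN training.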

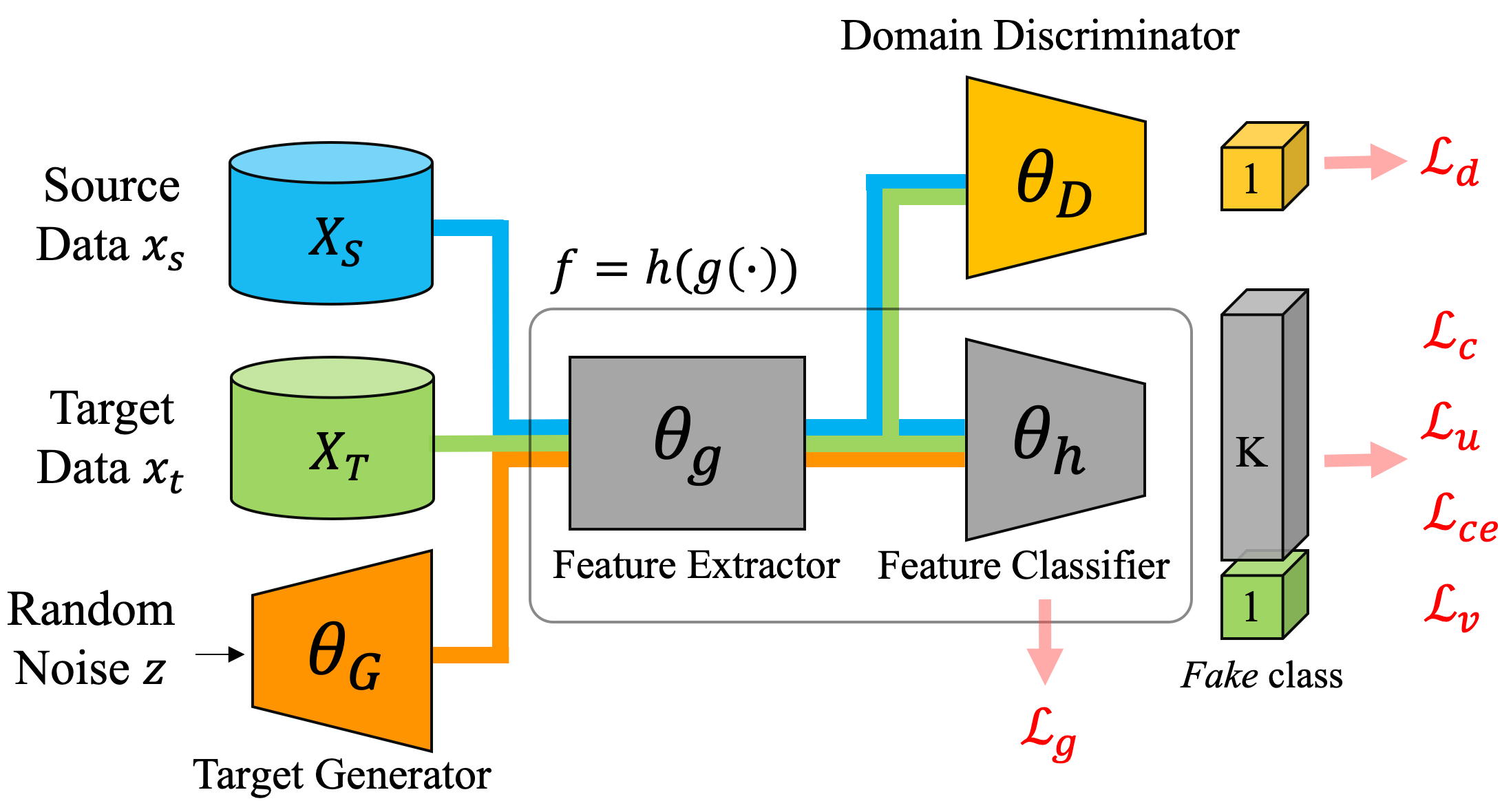

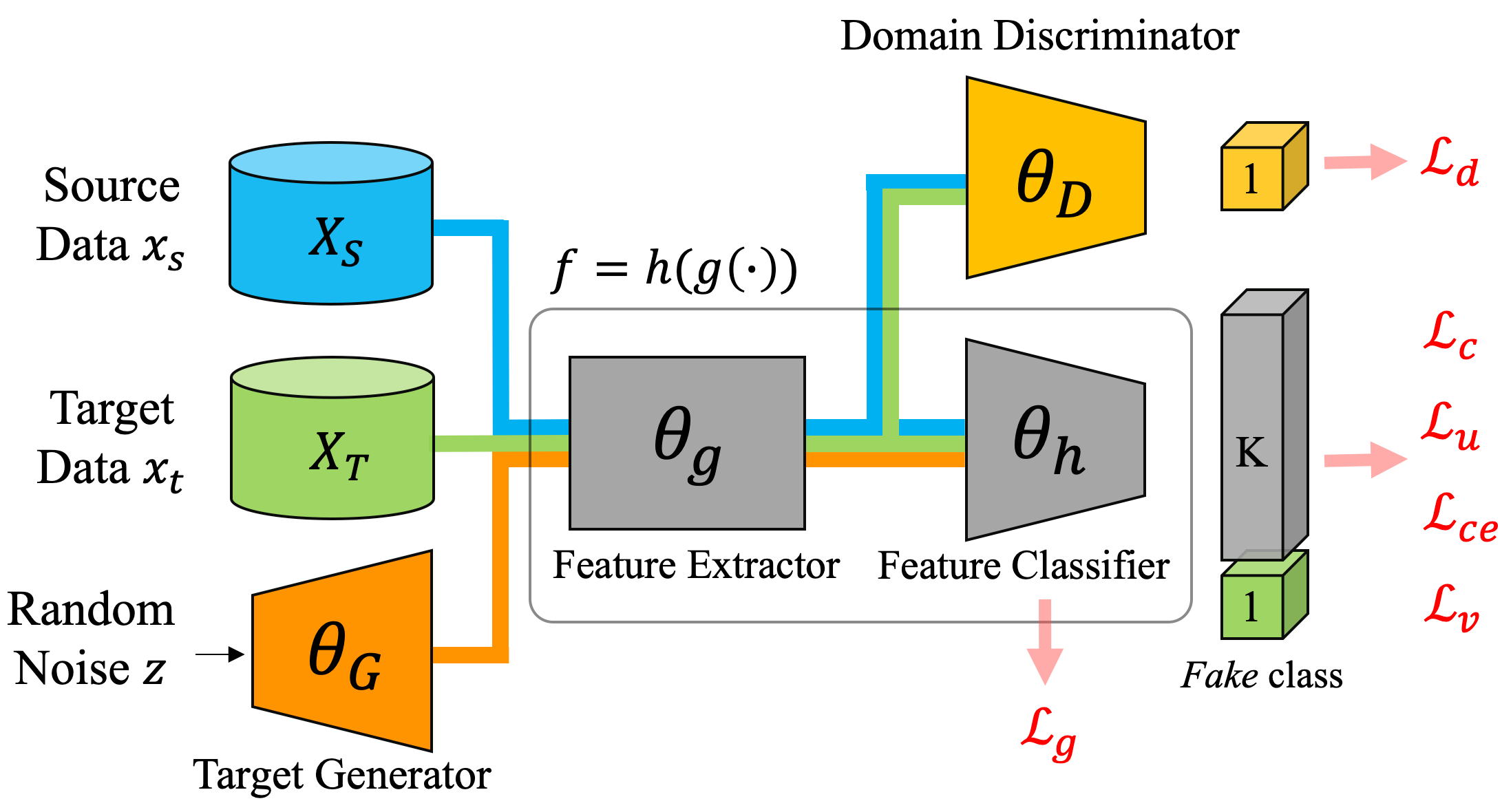

We study the problem of unsupervised domain adaptation that aims at obtaining a prediction model for the target domain using labeled data from the source domain and unlabeled data from the target domain. There exists an array of recent research based on the idea of extracting features that are not only invariant for both domains but also provide high discriminative power for the target domain.

In this paper, we propose an idea for improving discriminativeness: adding an extra artificial class and training the model on the given data together with GAN-generated samples of the new class.

The model trained with the new class samples is capable of extracting more discriminative features by repositioning the data of the existing classes in the target domain and thereby increasing the distances among the target clusters in the feature space. Our idea is highly generic, so it is compatible with many existing methods such as DANN, VADA, and DIRT-T.
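The extra-class idea can be sketched at the level of labels and loss: a K-class problem becomes a (K+1)-class problem in which generated samples carry the new label. The sketch below covers only this supervised part, under the assumption of a standard softmax cross-entropy loss. The GAN, the unlabeled target data, and the domain adaptation objective are omitted, and all names and values are hypothetical.

```python
import math

def build_augmented_set(real_samples, generated_samples, num_classes):
    """Real samples keep labels 0..K-1; generated samples get the new label K."""
    extra_label = num_classes
    data = [(x, y) for x, y in real_samples]
    data += [(x, extra_label) for x in generated_samples]
    return data, num_classes + 1

def cross_entropy(logits, label):
    """Softmax cross-entropy for one sample over K+1 outputs."""
    peak = max(logits)  # subtract the max for numerical stability
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_norm - logits[label]

real = [([0.1, 0.9], 0), ([0.8, 0.2], 1)]  # hypothetical labeled source features
fake = [[0.5, 0.5]]                        # stand-in for GAN-generated samples
train_set, total_classes = build_augmented_set(real, fake, num_classes=2)

# Loss of an untrained classifier (all-zero logits) on the generated sample.
loss = cross_entropy([0.0, 0.0, 0.0], train_set[-1][1])
```

Because the classifier head simply gains one output, this kind of change can in principle be layered on top of an existing training objective, which is consistent with the compatibility claim above.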

We conduct various experiments on standard datasets commonly used to evaluate unsupervised domain adaptation and demonstrate that our algorithm achieves state-of-the-art (SOTA) performance in many scenarios.

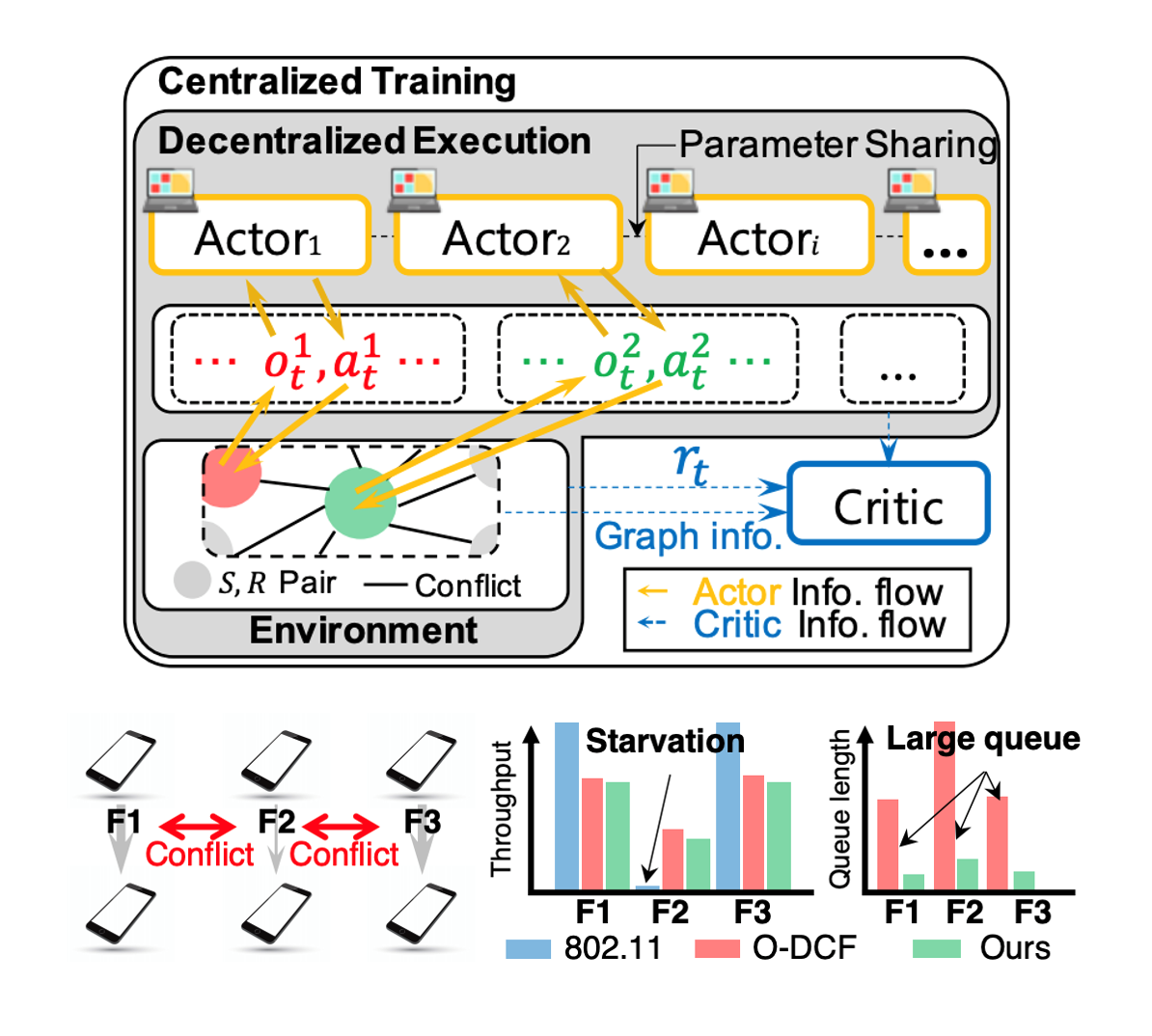

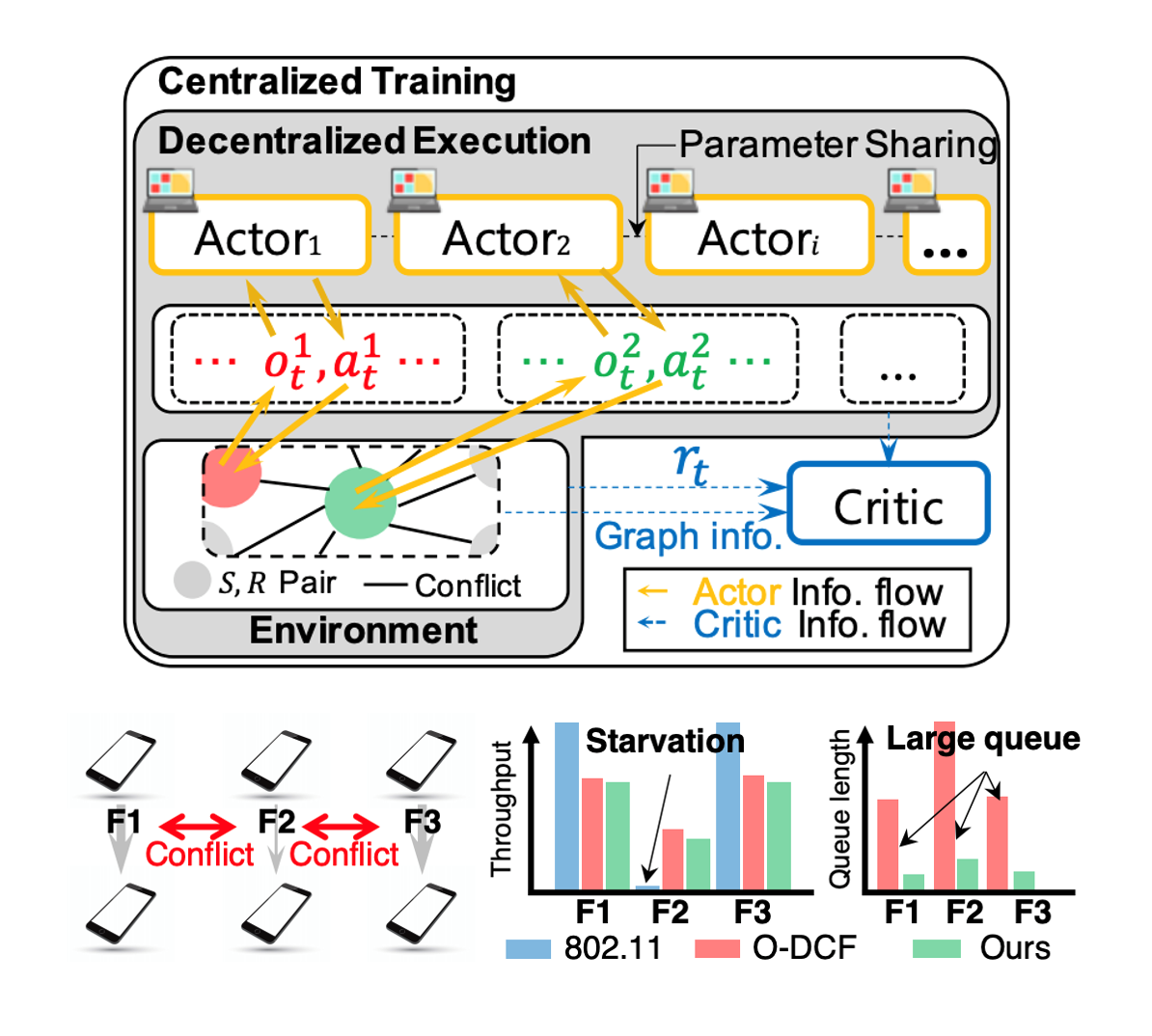

The carrier sense multiple access (CSMA) algorithm has been used for wireless medium access control (MAC) in standard 802.11 implementations due to its simplicity and generality. An extensive body of research on CSMA has accumulated, not only in the context of practical protocols but also as a distributed approach to optimal MAC scheduling. However, current state-of-the-art CSMA (and its extensions) still suffers from poor performance, especially in multi-hop scenarios, and often requires patch-based solutions rather than a universal solution. In this paper, we propose an algorithm that adopts an experience-driven approach and trains a CSMA-based wireless MAC using deep reinforcement learning. We name our protocol Neuro-DCF. Two key challenges are (i) a stable training method for distributed execution and (ii) a unified training method that embraces various interference patterns and configurations. For (i), we adopt a multi-agent reinforcement learning framework, and for (ii), we introduce a novel graph neural network (GNN) based training structure. We provide extensive simulation results demonstrating that Neuro-DCF significantly outperforms 802.11 DCF and O-DCF, a recent theory-based MAC protocol, especially in improving delay performance while preserving optimal utility. We believe our multi-agent reinforcement learning based approach will attract broad interest for other learning-based network controllers at different layers that require distributed operation.

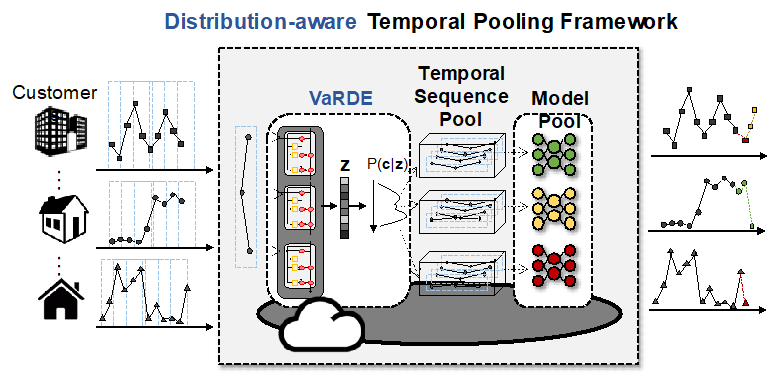

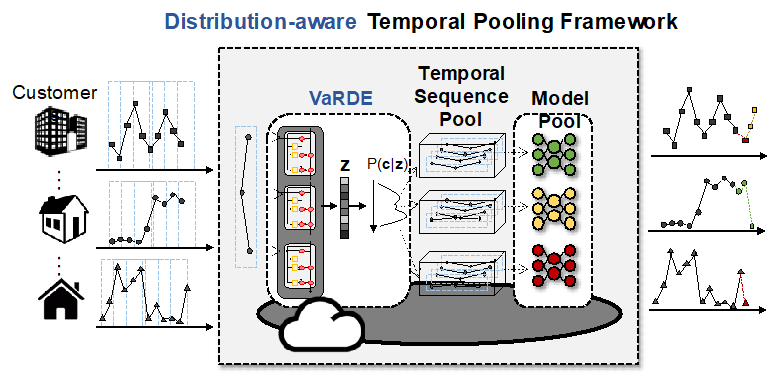

Accurate individual load forecasting is an essential element of smart grid services. When training individual forecasting models for multiple customers, discrepancies in data distribution among customers should be considered. There are two simple ways to build such models: constructing a model for each customer independently, or training one model that encompasses all customers. The independent approach shows higher accuracy than the single-model approach, but it deploys a large number of models, causing resource and management inefficiency; the single-model approach has the opposite trade-off. Clustering-based forecasting could be a compromise between the two. However, previous studies are of limited use for individual forecasting because they focus on aggregated load and do not consider concept drift, which degrades accuracy over time. Therefore, we propose a distribution-aware temporal pooling framework that enhances clustering-based forecasting. For the clustering, we propose Variational Recurrent Deep Embedding (VaRDE), which works in a distribution-aware manner and is therefore suitable for processing individual loads. It assigns customers to clusters at every time step, so cluster membership changes dynamically to follow distribution changes. We conducted experiments on real data, and the results showed better performance than previous studies, even with only a few models and on unseen data, which leads to high scalability.

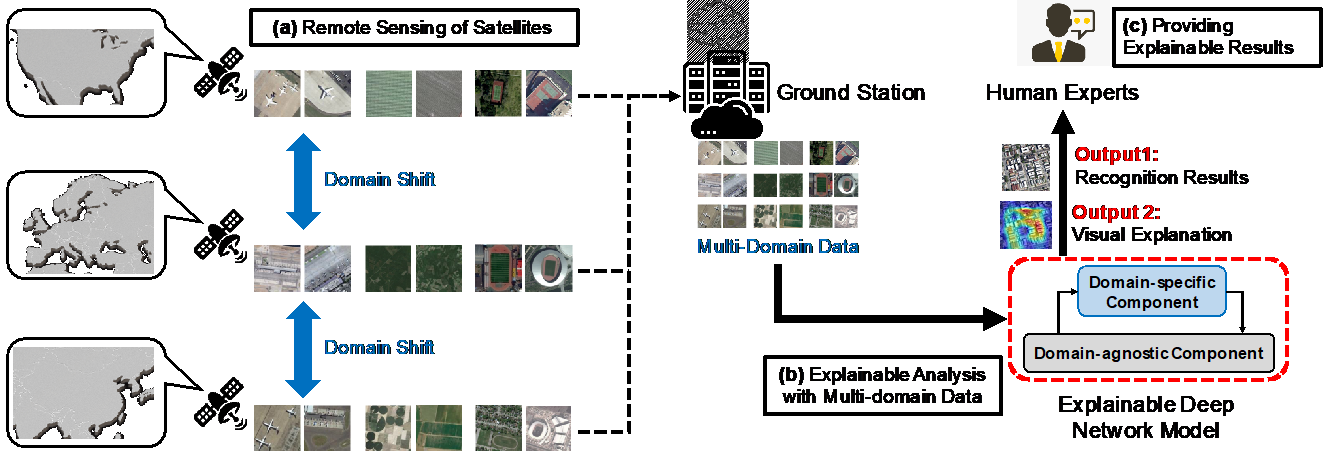

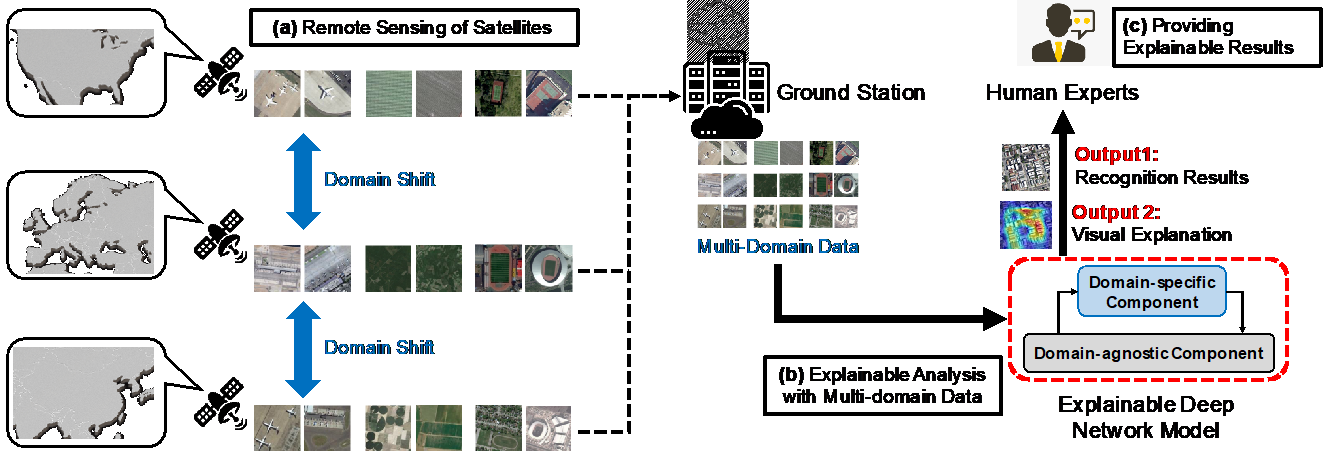

Satellite image analytics based on convolutional neural networks has recently been vigorously investigated. However, for such artificial intelligence systems to be applied in practice, several challenges remain: (a) model explainability, which improves the reliability of the system by providing evidence for its prediction results; and (b) domain shift among images captured by multiple satellites whose image sensor specifications vary. To resolve these two issues in developing a deep model for satellite image analytics, we propose a multi-domain learning method based on attention-based adapters. As plug-ins to the backbone network, the adapter modules are designed to extract domain-specific features as well as improve visual attention for input images. In addition, we discuss a strategy of alternately training the backbone network and the adapters to effectively separate domain-invariant and domain-specific features, respectively. Finally, we use Grad-CAM/LIME to provide visual explanations for the proposed network architecture. The experimental results demonstrate that the proposed method improves test accuracy, and its improvement in visual explainability is also validated.

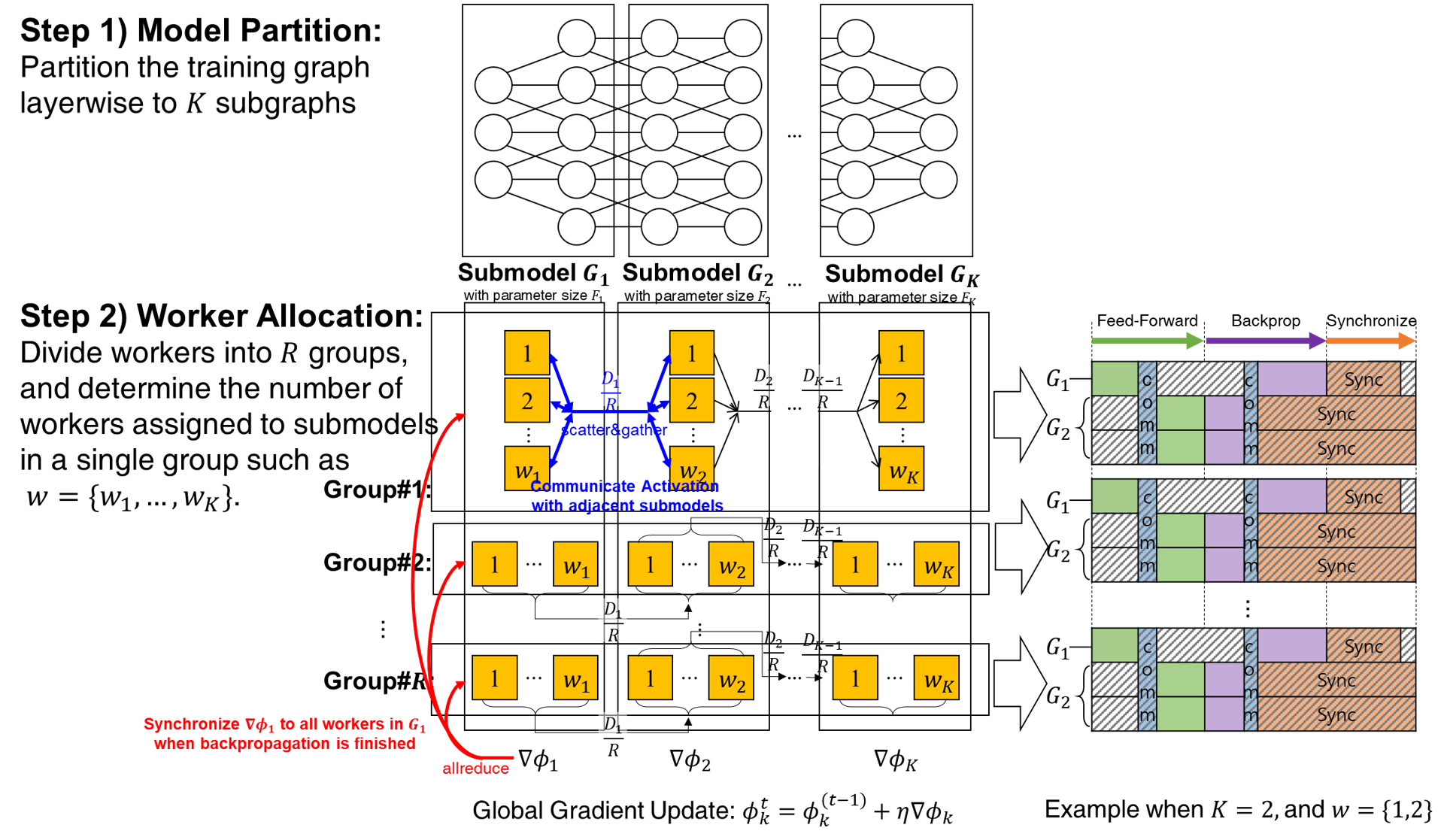

The scale of model parameters and datasets is rapidly growing for high accuracy in various areas. To train a large-scale deep neural network (DNN) model, a huge amount of computation and memory is required; therefore, a parallelization technique for training large-scale DNN models has attracted attention. A number of approaches have been proposed to parallelize large-scale DNN models, but these schemes lack scalability because of their long communication time and limited worker memory. They often sacrifice accuracy to reduce communication time.

In this work, we proposed an efficient parallelism strategy named group hybrid parallelism (GHP) to minimize training time without any accuracy loss. Two key ideas inspired our approach. First, grouping workers and training them by group reduces unnecessary communication overhead among workers, which saves a huge amount of network resources when training large-scale networks. Second, mixing data and model parallelism can reduce communication time and mitigate the worker memory issue. Data and model parallelism are complementary, so training time can be reduced when they are combined. We analyzed a training time model of data and model parallelism and, based on it, presented heuristics that determine the parallelization strategy that minimizes training time.
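To illustrate how a training time model can drive the choice of parallelization strategy, here is a toy cost model that picks a group size. It assumes model parallelism within each group and data parallelism across groups. The cost terms (evenly split compute, a fixed cost per model-parallel boundary, ring all-reduce of each parameter shard) and every number are hypothetical; this is not the training time model or the heuristics from the paper.

```python
def iteration_time(group_size, workers, compute_s, params_gb,
                   boundary_s, bandwidth_gb_per_s):
    """Toy per-iteration time for `workers` split into equal groups."""
    groups = workers // group_size
    compute = compute_s / workers                # compute split evenly
    model_comm = (group_size - 1) * boundary_s   # transfers inside a group
    shard_gb = params_gb / group_size            # parameters held per worker
    # Ring all-reduce of each shard across the data-parallel groups.
    data_comm = 2 * (groups - 1) / groups * shard_gb / bandwidth_gb_per_s
    return compute + model_comm + data_comm

def best_group_size(workers, memory_gb, params_gb, **cost):
    """Pick the feasible group size with the lowest estimated time."""
    candidates = [g for g in range(1, workers + 1)
                  if workers % g == 0 and params_gb / g <= memory_gb]
    return min(((g, iteration_time(g, workers, params_gb=params_gb, **cost))
                for g in candidates), key=lambda pair: pair[1])

# Hypothetical cluster: 8 workers with 6 GB each, and a 16 GB model.
choice = best_group_size(workers=8, memory_gb=6, params_gb=16,
                         compute_s=80, boundary_s=1.0, bandwidth_gb_per_s=4)
```

In this toy setting pure data parallelism (group size 1) does not fit in worker memory and pure model parallelism (group size 8) pays the most for intra-group transfers, so an intermediate group size gives the lowest estimate.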

We evaluated group hybrid parallelism in comparison with existing parallelism schemes, and our experimental results show that group hybrid parallelism outperforms them.