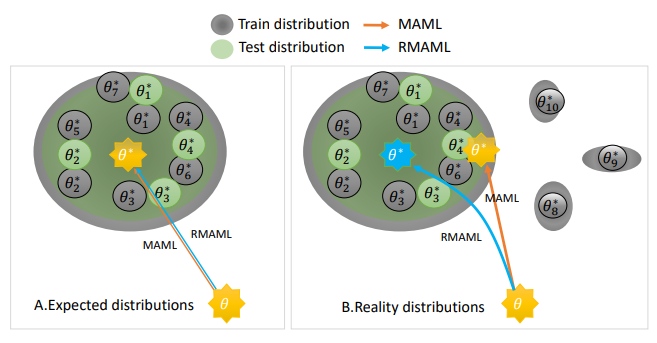

Model-agnostic meta-learning (MAML) is a popular state-of-the-art meta-learning algorithm that provides a good weight initialization for a model given a variety of learning tasks. A model initialized with these weights can be fine-tuned to an unseen task using only a small number of samples and a few adaptation steps. MAML is simple and versatile, but it requires costly learning rate tuning and careful design of the task distribution, which limits its scalability and generalization. This paper proposes a more robust MAML, referred to as Robust MAML (RMAML), based on an adaptive learning scheme and a prioritization task buffer (PTB), to improve the scalability of the training process and alleviate the problem of distribution mismatch. RMAML uses gradient-based hyper-parameter optimization to automatically find the optimal learning rate and uses the PTB to gradually adjust the training task distribution toward the testing task distribution over the course of training. Experimental results on meta reinforcement learning environments demonstrate a substantial performance gain, as well as lower sensitivity to hyper-parameter choice and robustness to distribution mismatch.

Figure 5. The demonstration of distribution mismatch between train/test and the corresponding behavior of MAML.
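
The abstract does not spell out how the learning rate is adapted, so the following is only a minimal sketch of one well-known form of gradient-based learning-rate tuning (hypergradient descent) on a toy quadratic loss. The function names, the meta step size `beta`, and the toy loss are illustrative assumptions and are not taken from the paper.

```python
def hypergradient_descent(grad_fn, theta, alpha=0.01, beta=0.01, steps=50):
    """Gradient descent whose step size alpha is itself updated by a gradient step."""
    prev_grad = [0.0] * len(theta)
    for _ in range(steps):
        grad = grad_fn(theta)
        # d(loss)/d(alpha) = -(grad . prev_grad), so a descent step on alpha
        # adds beta * (grad . prev_grad).
        alpha += beta * sum(g * p for g, p in zip(grad, prev_grad))
        theta = [t - alpha * g for t, g in zip(theta, grad)]
        prev_grad = grad
    return theta, alpha


def quadratic_grad(theta):
    # Gradient of the toy loss 0.5 * ||theta||^2 (an assumption for illustration).
    return list(theta)


theta, alpha = hypergradient_descent(quadratic_grad, [1.0, -2.0])
loss = 0.5 * sum(t * t for t in theta)
```

Starting from a deliberately small `alpha`, the step size grows while consecutive gradients agree, which is the behavior that removes the need for manual tuning in this toy setting.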

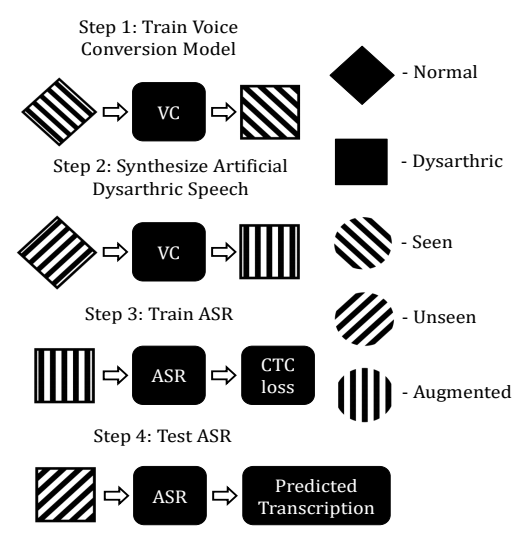

Dysarthria is a condition where people experience a reduction in speech intelligibility due to a neuromotor disorder. Previous works in dysarthric speech recognition have focused on accurate recognition of words encountered in training data. Due to the rarity of dysarthria in the general population, a relatively small amount of publicly-available training data exists for dysarthric speech. The number of unique words in these datasets is small, so ASR systems trained with existing dysarthric speech data are limited to recognition of those words. In this paper, we propose a data augmentation method using voice conversion that allows dysarthric ASR systems to accurately recognize words outside of the training set vocabulary. We demonstrate that a small amount of dysarthric speech data can be used to capture the relevant vocal characteristics of a speaker with dysarthria through a parallel voice conversion system. We show that it’s possible to synthesize utterances of new words that were never recorded by speakers with dysarthria, and that these synthesized utterances can be used to train a dysarthric ASR system.

Figure 4. Proposed Approach: Diamond or square shapes refer to normal or dysarthric speech, respectively. Overlay patterns denote whether data is from the seen or unseen partition, or is augmented (synthetic).

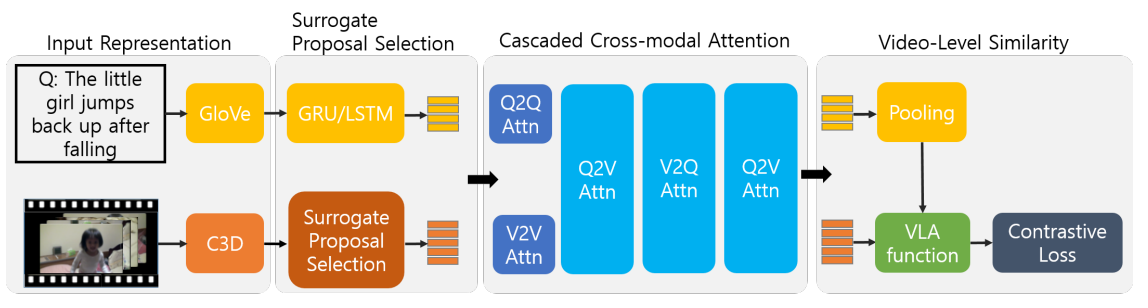

Video Moment Retrieval (VMR) is the task of localizing the temporal moment in an untrimmed video specified by a natural language query. For VMR, several methods that require full supervision for training have been proposed. Unfortunately, acquiring a large number of training videos with labeled temporal boundaries for each query is a labor-intensive process. This paper explores a method for performing VMR in a weakly-supervised manner (wVMR): training is performed without temporal moment labels but only with the text query that describes a segment of the video. Existing methods on wVMR generate multi-scale proposals and apply a query-guided attention mechanism to highlight the most relevant proposal. To leverage the weak supervision, contrastive learning is used, which predicts higher scores for the correct video-query pairs than for the incorrect pairs. It has been observed that a large number of candidate proposals, a coarse query representation, and a one-way attention mechanism lead to a blurry attention map, which limits the localization performance. To address this issue, the Video-Language Alignment Network (VLANet) is proposed, which learns a sharper attention by pruning out spurious candidate proposals and applying a multi-directional attention mechanism with a fine-grained query representation. The Surrogate Proposal Selection module selects a proposal based on its proximity to the query in the joint embedding space, and thus substantially reduces the number of candidate proposals, which leads to a lower computation load and sharper attention. Next, the Cascaded Cross-modal Attention module considers dense feature interactions and multi-directional attention flows to learn the multi-modal alignment. VLANet is trained end-to-end using a contrastive loss, which encourages semantically similar videos and queries to cluster. The experiments show that the method achieves state-of-the-art performance on the Charades-STA and DiDeMo datasets.

Figure 3. Illustration of VLANet architecture. The Surrogate Proposal Selection module prunes out irrelevant proposals based on the similarity metric. Cascaded Cross-modal Attention considers various attention flows to learn multi-modal alignment. The network is trained end-to-end using contrastive loss.
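
As a rough illustration of the two ideas in this abstract, the sketch below ranks proposals by cosine similarity to the query in a shared embedding space and scores a matched pair against a mismatched pair with a margin-based contrastive loss. The function names, the `top_k` and `margin` parameters, and the 2-D embeddings are hypothetical; the actual VLANet modules are learned networks and are not reproduced here.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def select_proposals(proposals, query, top_k):
    """Return the indices of the top_k proposals closest to the query embedding."""
    ranked = sorted(range(len(proposals)),
                    key=lambda i: cosine(proposals[i], query),
                    reverse=True)
    return ranked[:top_k]


def margin_contrastive_loss(pos_score, neg_score, margin=0.2):
    """Zero once the matched pair outscores the mismatched pair by at least the margin."""
    return max(0.0, margin - pos_score + neg_score)


# Toy embeddings (assumed values, for illustration only).
proposals = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
query = [1.0, 0.1]
kept = select_proposals(proposals, query, top_k=2)
```

Pruning to a few nearby proposals before attention is what reduces the candidate set; the loss only needs video-level pairing, not temporal boundaries.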

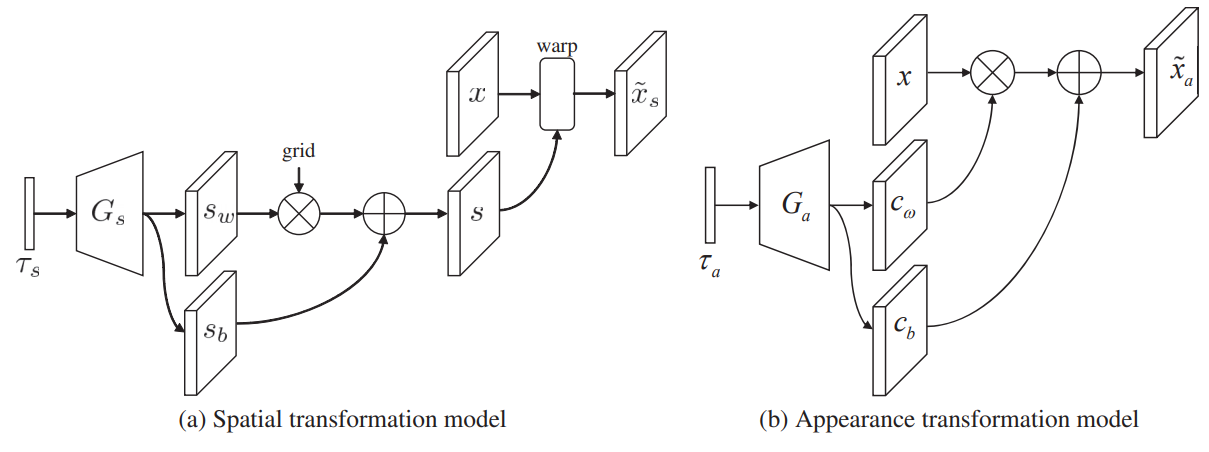

Data augmentation can impact the generalization performance of an image classification model in a significant way. However, it is currently conducted on the basis of trial and error, and its impact on the generalization performance cannot be predicted during training. This paper considers an influence function that predicts how generalization performance, in terms of validation loss, is affected by a particular augmented training sample. The influence function provides an approximation of the change in validation loss without actually comparing the performances that include and exclude the sample in the training process. Based on this function, a differentiable augmentation network is learned to augment an input training sample to reduce validation loss. The augmented sample is fed into the classification network, and its influence is approximated as a function of the parameters of the last fully-connected layer of the classification network. By backpropagating the influence to the augmentation network, the augmentation network parameters are learned. Experimental results on CIFAR-10, CIFAR-100, and ImageNet show that the proposed method provides better generalization performance than conventional data augmentation methods do.
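
For orientation, the standard influence-function approximation can be written as follows. The notation is an assumption, since the abstract gives no formula: $z$ is an (augmented) training sample, $z_{\mathrm{val}}$ a validation sample, $\hat\theta$ the trained parameters, and $L$ the loss.

$$
\mathcal{I}(z, z_{\mathrm{val}}) = -\nabla_\theta L(z_{\mathrm{val}}, \hat\theta)^{\top} H_{\hat\theta}^{-1} \nabla_\theta L(z, \hat\theta),
\qquad
H_{\hat\theta} = \frac{1}{n} \sum_{i=1}^{n} \nabla_\theta^{2} L(z_i, \hat\theta)
$$

Upweighting $z$ by a small $\epsilon$ changes the validation loss by approximately $\epsilon \, \mathcal{I}(z, z_{\mathrm{val}})$, so no retraining with and without the sample is needed. Restricting $\theta$ to the last fully-connected layer, as the abstract describes, keeps the Hessian $H_{\hat\theta}$ small enough to handle.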

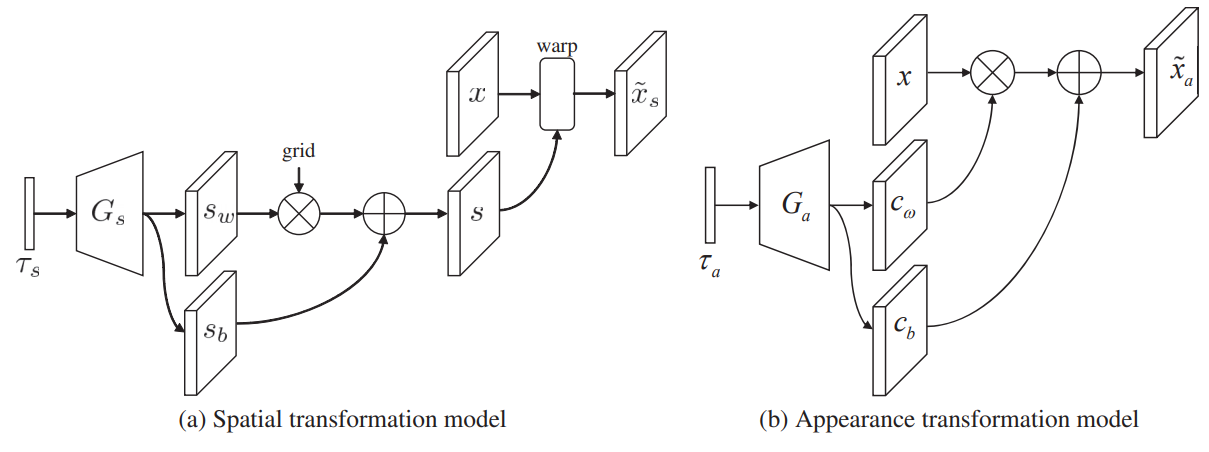

Figure 2. The proposed spatial and appearance transformation models for data augmentation.

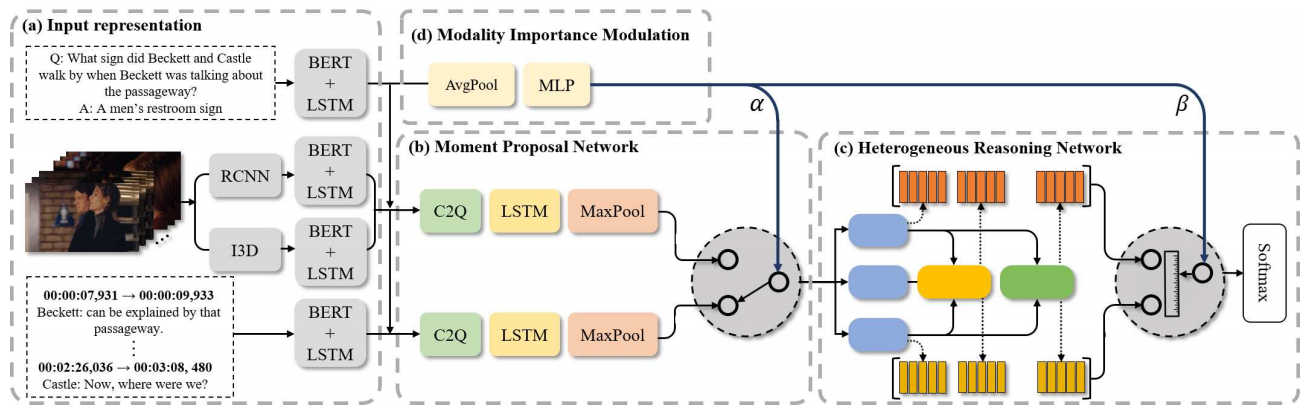

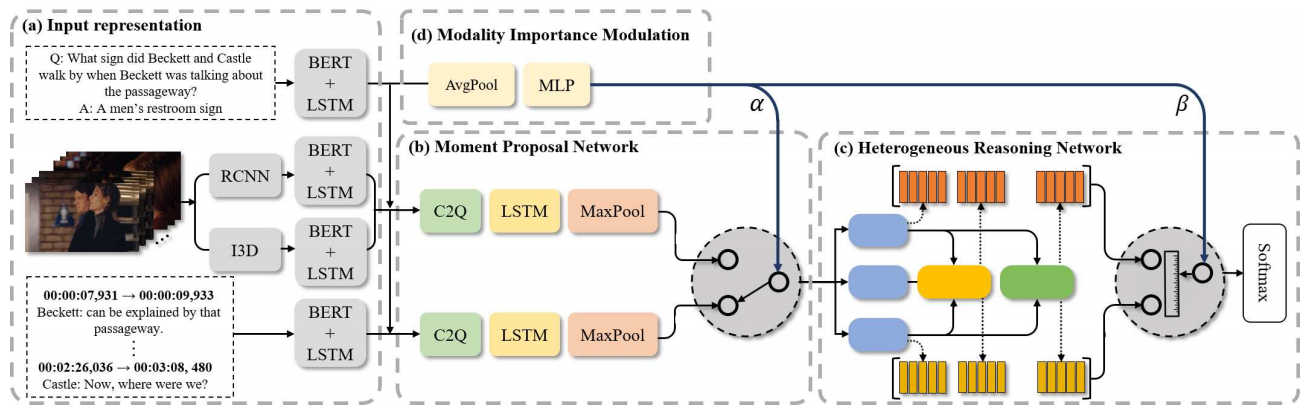

This paper considers a network referred to as the Modality Shifting Attention Network (MSAN) for the Multimodal Video Question Answering (MVQA) task. MSAN decomposes the task into two sub-tasks: (1) localization of the temporal moment relevant to the question, and (2) accurate prediction of the answer based on the localized moment. The modality required for temporal localization may be different from that required for answer prediction, and this ability to shift modality is essential for performing the task. To this end, MSAN is based on (1) the moment proposal network (MPN), which attempts to locate the most appropriate temporal moment from each of the modalities, and (2) the heterogeneous reasoning network (HRN), which predicts the answer using an attention mechanism on both modalities. MSAN is able to place importance weights on the two modalities for each sub-task using a component referred to as Modality Importance Modulation (MIM). Experimental results show that MSAN outperforms the previous state of the art by achieving 71.13% test accuracy on the TVQA benchmark dataset. Extensive ablation studies and qualitative analysis are conducted to validate various components of the network.

Figure 1. Illustration of modality shifting attention network (MSAN) which is composed of the following components: (a) Video and text representation utilizing BERT for embedding, (b) Moment proposal network to localize the required temporal moment of interest for answering the question, (c) Heterogeneous reasoning network to infer the correct answer based on the localized moment, and (d) Modality importance modulation to weight the output of (b) and of (c) differently according to their importance.
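
One plausible way to picture modality importance modulation is a scalar gate that blends the video and text streams. The sketch below assumes a sigmoid gate driven by a single logit; the names `modulate` and `gate_logit` are hypothetical, and in a real model the logit would be predicted from the question, with a separate gate for each sub-task.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def modulate(video_feat, subtitle_feat, gate_logit):
    """Blend two modality features with a single importance weight in (0, 1)."""
    w = sigmoid(gate_logit)
    return [w * v + (1.0 - w) * s for v, s in zip(video_feat, subtitle_feat)]


# A zero logit weights both modalities equally; a large logit favors video.
balanced = modulate([1.0, 1.0], [0.0, 0.0], gate_logit=0.0)
video_heavy = modulate([2.0], [4.0], gate_logit=50.0)
```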

Woo-han Yun, Byungok Han, Jaeyeon Lee, Jaehong Kim and Junmo Kim

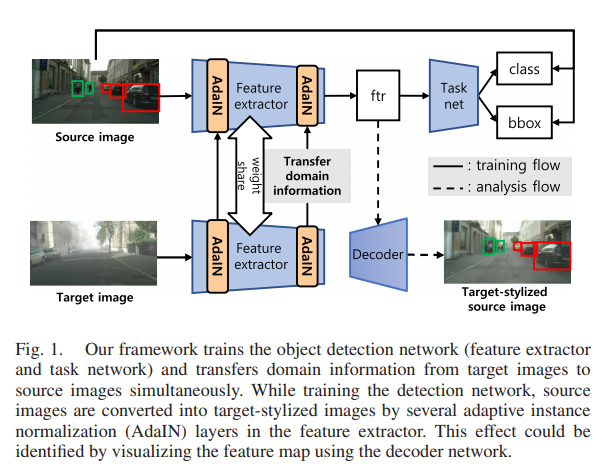

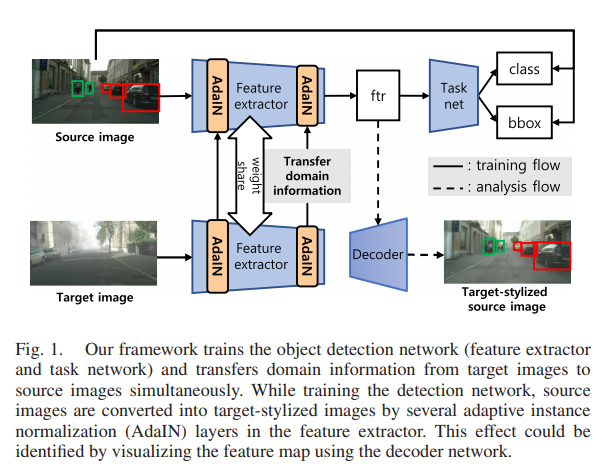

Vision modules running on mobility platforms, such as robots and cars, often face challenging situations such as a domain shift where the distributions of training (source) data and test (target) data are different. The domain shift is caused by several variation factors, such as style, camera viewpoint, object appearance, object size, backgrounds, and scene layout. In this work, we propose an object detection training framework for unsupervised domain-style adaptation. The proposed training framework transfers target-style information to source samples and simultaneously trains the detection network with these target-stylized source samples in an end-to-end manner. The detection network can learn the target domain from the target-stylized source samples. The style is extracted from object areas obtained by using pseudo-labels to reflect the style of the object areas more than that of the irrelevant backgrounds. We empirically verified that the proposed methods improve detection accuracy in diverse domain shift scenarios using the Cityscapes, FoggyCityscapes, Sim10k, BDD100k, PASCAL, and Watercolor datasets.
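
The abstract does not say which mechanism transfers the target style. A common choice for this kind of stylization is adaptive instance normalization (AdaIN), which re-normalizes source features to the target's per-channel mean and standard deviation. The sketch below applies that swapped-in technique to a single flattened channel; it is an assumption for illustration, not the paper's confirmed method.

```python
import math


def channel_stats(values, eps=1e-5):
    """Mean and (eps-stabilized) standard deviation of one feature channel."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var + eps)


def stylize(source, target):
    """Re-normalize one source feature channel to the target channel's mean and std."""
    mu_s, sigma_s = channel_stats(source)
    mu_t, sigma_t = channel_stats(target)
    return [sigma_t * (x - mu_s) / sigma_s + mu_t for x in source]


# Toy channels (assumed values): content comes from source, statistics from target.
source_channel = [0.0, 1.0, 2.0, 3.0]
target_channel = [10.0, 10.5, 11.0, 11.5]
stylized = stylize(source_channel, target_channel)
```

In the setting the abstract describes, the target statistics would be computed over pseudo-labeled object areas rather than the whole image, so that background style contributes less.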

Youngdong Kim, Juseung Yun, Hyounguk Shon and Junmo Kim

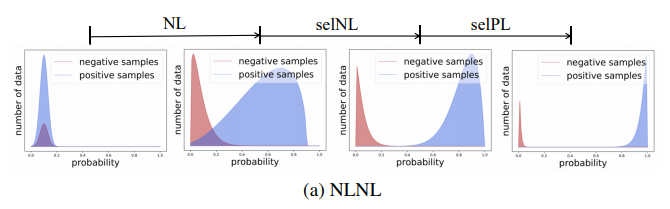

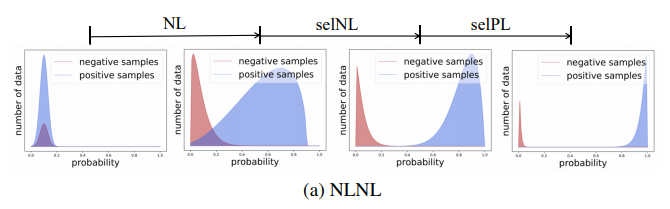

Training Convolutional Neural Networks (CNNs) on data with noisy labels is known to be a challenge. Because directly providing the label to the data (Positive Learning; PL) risks allowing CNNs to memorize the contaminated labels in noisy data, the indirect learning approach that uses complementary labels (Negative Learning for Noisy Labels; NLNL) has proven to be highly effective in preventing overfitting to noisy data, as it reduces the risk of providing a faulty target. NLNL further employs a three-stage pipeline to improve convergence. As a result, filtering noisy data through the NLNL pipeline is cumbersome and increases the training cost. In this study, we propose a novel improvement of NLNL, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage. JNPL trains a CNN via two losses, NL+ and PL+, which improve upon the NL and PL loss functions, respectively. We analyze the fundamental issue of the NL loss function and develop a new NL+ loss function whose gradient enhances convergence on noisy data. Furthermore, the PL+ loss function is designed to enable faster convergence on expected-to-be-clean data. We show that NL+ and PL+ train a CNN simultaneously, significantly simplifying the pipeline and allowing greater ease of practical use compared to NLNL. With a simple semi-supervised training technique, our method achieves state-of-the-art accuracy for noisy data classification based on its superior filtering ability.
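
To make the PL/NL distinction concrete, the sketch below contrasts the usual cross-entropy toward a given label with a negative-learning loss on a complementary label ("this sample is not class k"). It covers only the baseline PL and NL losses; the NL+ and PL+ variants are not defined in the abstract and are deliberately left out. Function names and logits are illustrative assumptions.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def positive_loss(probs, label):
    """PL: cross-entropy toward the given (possibly noisy) label."""
    return -math.log(probs[label])


def negative_loss(probs, complementary_label):
    """NL: push probability away from a class the sample is said not to be."""
    return -math.log(1.0 - probs[complementary_label])


probs = softmax([2.0, 0.5, 0.1])  # toy logits, assumed for illustration
```

A randomly drawn complementary label is wrong far less often than a noisy positive label, which is why the NL target carries less risk of teaching the network a faulty class.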

Byungju Kim, Hyeong Gwon Hong and Junmo Kim

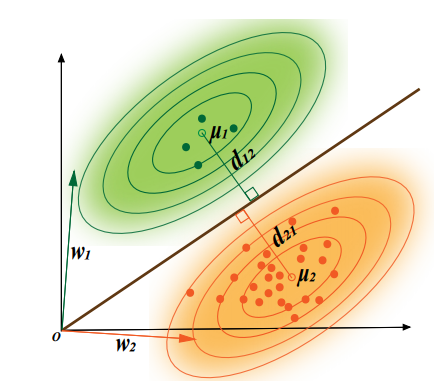

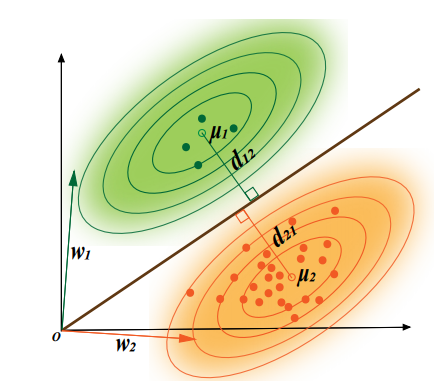

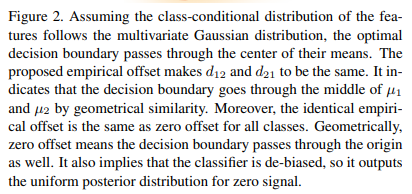

The imbalanced distribution of the training data makes the networks biased to the frequent classes. Existing methods to resolve the problem involve re-sampling, re-weighting, or cost-sensitive learning. Most of them anticipate that emphasizing the minority classes during the training would help the network to learn better representations. In this paper, we propose a method for reparameterizing softmax classifiers’ offsets so that training is less sensitive to class imbalance. We first observe that the trained offset of the baseline linear classifier is biased toward the majority classes due to the imbalance. Instead of the trained offset, we define the estimated offset, and constrain it to be uniform over the classes. In experiments with long-tailed benchmarks, our method exhibits the best performance. These experiments verify that our proposed method effectively encourages the networks to learn better representations for minority classes while preserving the performance for the majority classes.
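
The abstract does not define the estimated offset, so the sketch below illustrates only the underlying property it relies on: a per-class offset can tip the prediction toward the majority class, whereas an offset that is uniform over classes leaves the softmax output unchanged. All names and numbers are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, offsets):
    """Softmax over per-class scores (w_k . x) plus per-class offsets b_k."""
    return softmax([s + b for s, b in zip(scores, offsets)])


scores = [1.0, 1.2]                      # assumed w_k . x for [majority, minority]
biased = classify(scores, [0.5, -0.5])   # offset skewed toward the majority class
uniform = classify(scores, [0.3, 0.3])   # same offset for every class
no_offset = classify(scores, [0.0, 0.0])
```

With the skewed offset the majority class wins even though the features slightly favor the minority class; with a uniform offset the decision rests on the learned representation alone.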

Donggyu Joo, Eojindl Yi, Sunghyun Baek, and Junmo Kim

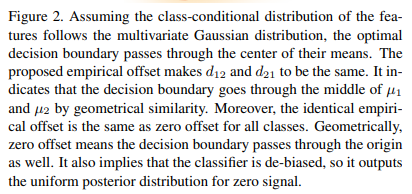

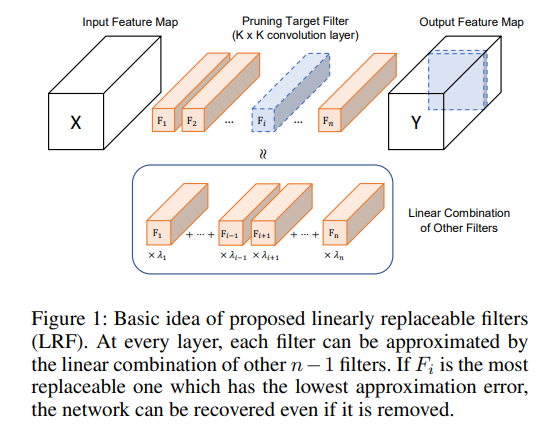

Convolutional neural networks (CNNs) have achieved remarkable results; however, despite the development of deep learning, practical user applications are fairly limited because heavy networks can be used only with the latest hardware and software support. Therefore, network pruning is gaining attention for general applications in various fields. This paper proposes a novel channel pruning method, Linearly Replaceable Filter (LRF), which suggests that a filter that can be approximated by a linear combination of other filters is replaceable. Moreover, an additional method called Weights Compensation is proposed to support the LRF method. This technique effectively reduces the output difference caused by removing filters via direct weight modification. Through various experiments, we have confirmed that our method achieves state-of-the-art performance on several benchmarks. In particular, on ImageNet, LRF-60 reduces the FLOPs of ResNet-50 by approximately 56% without a drop in top-5 accuracy. Further, through extensive analyses, we demonstrate the effectiveness of our approaches.
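
The replaceability criterion can be sketched as a least-squares residual: flatten each filter to a vector, project it onto the span of the remaining filters, and measure what is left. The version below uses Gram-Schmidt orthogonalization in plain Python. The function names and toy filters are assumptions, and the paper's actual selection procedure and Weights Compensation step are not reproduced.

```python
import math


def residual_norm(target, others, tol=1e-10):
    """Norm of what remains of `target` after projecting onto span(others)."""
    basis = []
    for vec in others:
        v = list(vec)
        for b in basis:
            coeff = sum(x * y for x, y in zip(v, b))
            v = [x - coeff * y for x, y in zip(v, b)]
        norm = math.sqrt(sum(x * x for x in v))
        if norm > tol:  # skip vectors that are already in the span
            basis.append([x / norm for x in v])
    r = list(target)
    for b in basis:
        coeff = sum(x * y for x, y in zip(r, b))
        r = [x - coeff * y for x, y in zip(r, b)]
    return math.sqrt(sum(x * x for x in r))


def replaceability(filters):
    """Residual of each flattened filter against all others (lower = more replaceable)."""
    return [residual_norm(f, filters[:i] + filters[i + 1:])
            for i, f in enumerate(filters)]


filters = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],  # exactly filters[0] + filters[1]
    [0.0, 0.0, 1.0, 1.0],  # not expressible by the others
]
scores = replaceability(filters)
```

A filter with a near-zero residual can be removed with little change to the layer's output, since the remaining filters can reproduce its response.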

Beomyoung Kim, Sangeun Han and Junmo Kim

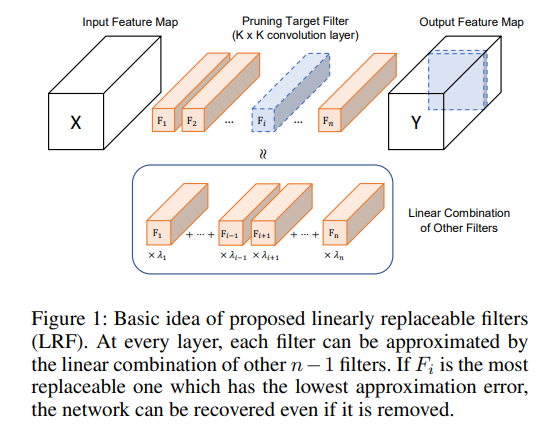

Weakly-supervised semantic segmentation (WSSS) using image-level labels has recently attracted much attention for reducing annotation costs. Existing WSSS methods utilize localization maps from the classification network to generate pseudo segmentation labels. However, since localization maps obtained from the classifier focus only on sparse discriminative object regions, it is difficult to generate high-quality segmentation labels. To address this issue, we introduce a discriminative region suppression (DRS) module, a simple yet effective method to expand object activation regions. DRS suppresses the attention on discriminative regions and spreads it to adjacent non-discriminative regions, generating dense localization maps. DRS requires few or no additional parameters and can be plugged into any network. Furthermore, we introduce an additional learning strategy for the self-enhancement of localization maps, named localization map refinement learning. With this refinement learning, localization maps are refined and enhanced by recovering missing parts and removing noise. Despite its simplicity, our approach achieves 71.4% mIoU on the PASCAL VOC 2012 segmentation benchmark using only image-level labels. Extensive experiments demonstrate the effectiveness of our approach.
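
One simple reading of "suppressing the attention on discriminative regions" is to cap each activation map at a fraction of its maximum, so that the strongest peak no longer dominates. The sketch below does only that capping step on a toy map. The name `suppress` and the `delta` parameter are hypothetical, and how `delta` is set (fixed or learned) is an assumption not stated in the abstract.

```python
def suppress(activation_map, delta=0.55):
    """Cap every activation at delta * max so the peak no longer dominates the map."""
    upper = delta * max(max(row) for row in activation_map)
    return [[min(v, upper) for v in row] for row in activation_map]


# Toy class activation map with one dominant peak (assumed values).
cam = [[0.1, 0.2, 0.1],
       [0.3, 1.0, 0.4],
       [0.1, 0.5, 0.2]]
flat = suppress(cam, delta=0.5)
```

The capping alone only flattens the peak; the spread to neighboring regions comes from training the classifier with the cap in place, which forces it to rely on less discriminative parts of the object.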