Conference/Journal, Year: NeurIPS 2020

Deep reinforcement learning (DRL) algorithms and evolution strategies (ES) have been applied to various tasks, showing excellent performance. The two have opposite properties: DRL has good sample efficiency but poor stability, while ES is the reverse. Recently, there have been attempts to combine these algorithms, but these methods rely fully on a synchronous update scheme, which is not ideal for maximizing the benefits of parallelism in ES. To solve this challenge, an asynchronous update scheme was introduced, which is capable of good time efficiency and diverse policy exploration. In this paper, we introduce Asynchronous Evolution Strategy-Reinforcement Learning (AES-RL), which maximizes the parallel efficiency of ES and integrates it with policy gradient methods. Specifically, we propose 1) a novel framework to merge ES and DRL asynchronously and 2) various asynchronous update methods that can take all the advantages of asynchronism, ES, and DRL, which are exploration and time efficiency, stability, and sample efficiency, respectively. The proposed framework and update methods are evaluated on continuous control benchmarks, showing superior performance as well as time efficiency compared to previous methods.

Conference/Journal, Year: BMVC 2020

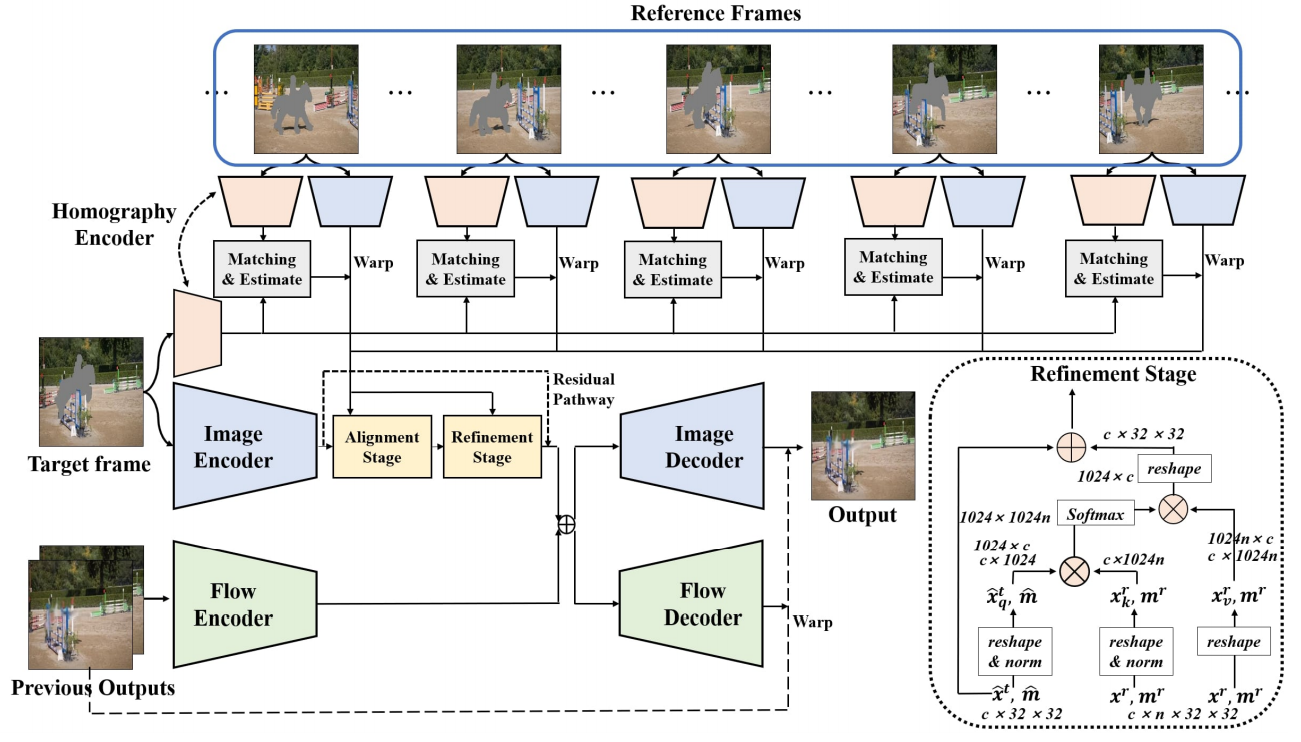

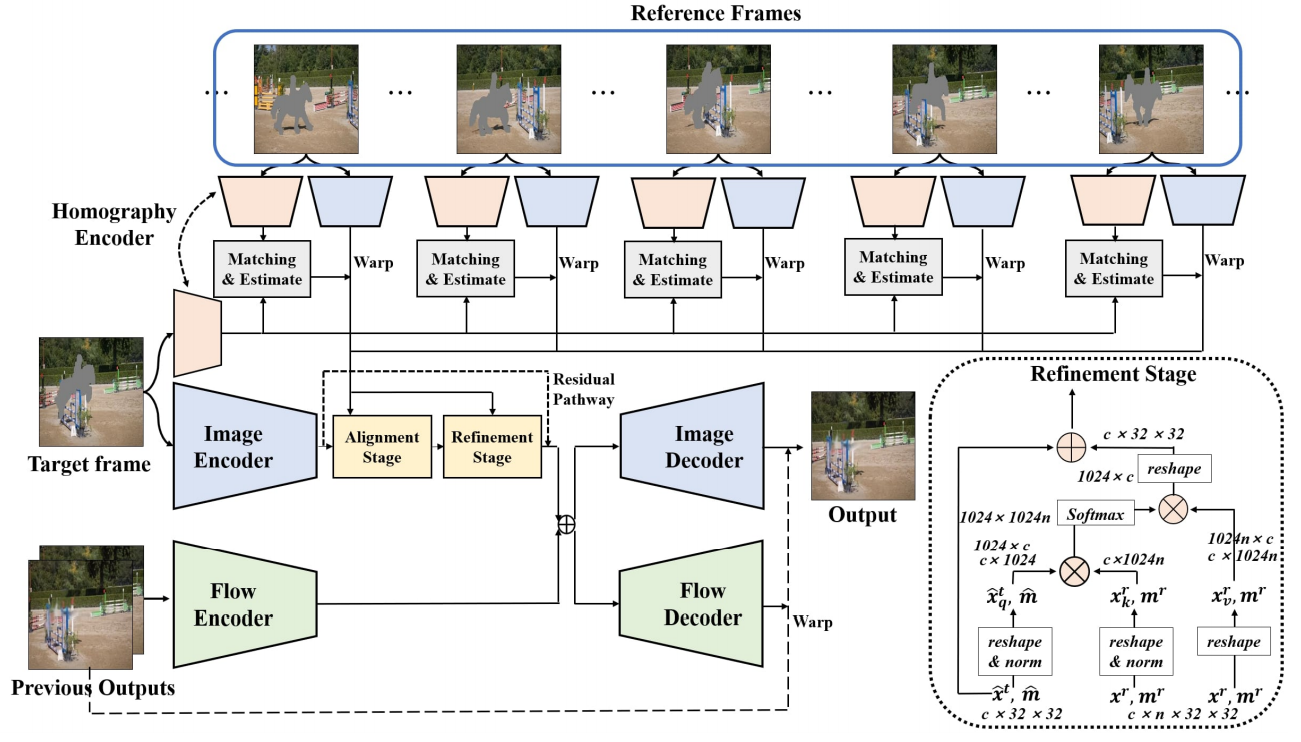

We propose a novel feed-forward network for video inpainting. We use a set of sampled video frames as references, taking their visible content to fill the hole in a target frame. Our video inpainting network consists of two stages. The first stage is an alignment module that uses computed homographies between the reference frames and the target frame. The visible patches are then aggregated based on frame similarity to roughly fill in the target holes. The second stage is a non-local attention module that matches the generated patches with known reference patches (in space and time) to refine the result of the global alignment stage. Both stages use a large spatial-temporal window over the references and thus enable modeling long-range correlations between distant information and the hole regions. Therefore, even challenging scenes with large or slowly moving holes can be handled, which existing flow-based approaches have hardly been able to model. Our network is also designed with a recurrent propagation stream to encourage temporal consistency in video results. Experiments on video object removal demonstrate that our method inpaints the holes with globally and locally coherent content.

Conference/Journal, Year: IROS 2020

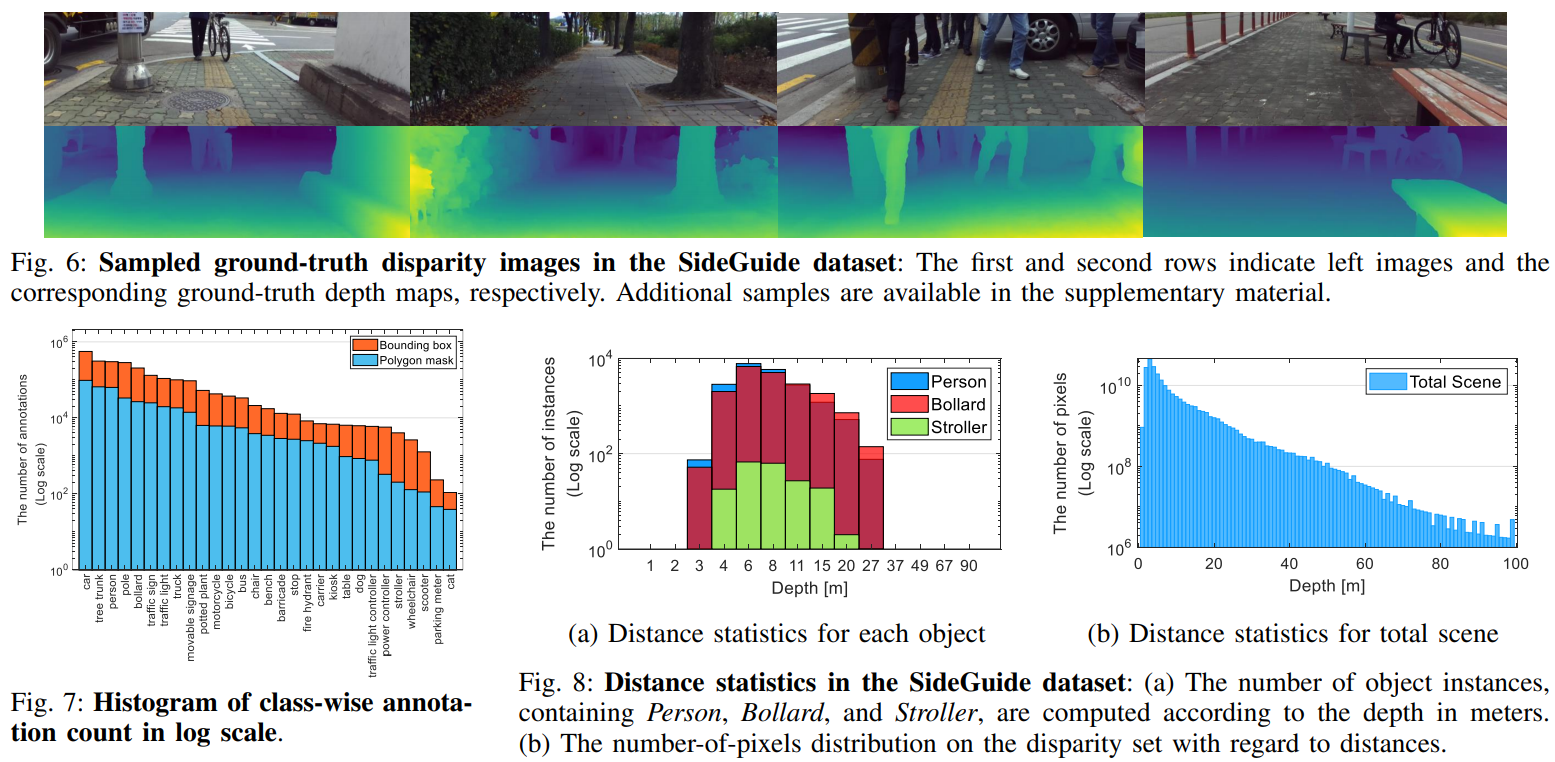

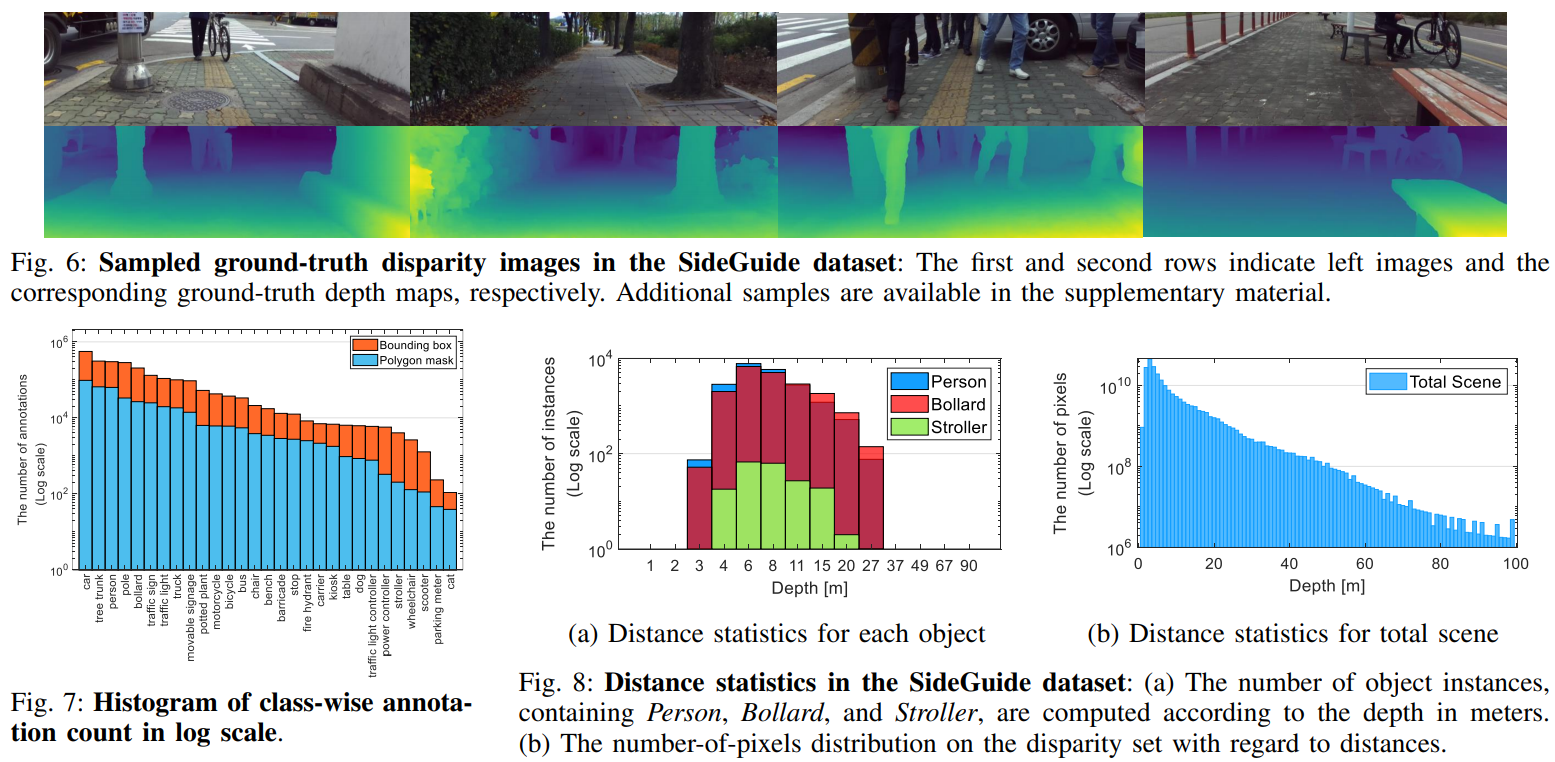

In this paper, we introduce a new large-scale sidewalk dataset called SideGuide that could potentially help impaired people. Unlike most previous datasets, which focus on road environments, we paid attention to sidewalks, where understanding the environment could make walking easier, especially for impaired people. Concretely, we interviewed impaired people and carefully selected target objects from the interviewees’ feedback (objects they encounter on sidewalks). We then acquired two different types of data: crowd-sourced data and stereo data. We labeled target objects at the instance level (i.e., bounding box and polygon mask) and generated a ground-truth disparity map for the stereo data. SideGuide consists of 350K images with bounding box annotations, 100K images with polygon masks, and 180K stereo pairs with ground-truth disparity. We analyzed our dataset by performing baseline analysis for object detection, instance segmentation, and stereo matching tasks. In addition, we developed a prototype that recognizes the target objects and measures distances, which could potentially assist people with disabilities. The prototype suggests the possibility of practical application of our dataset in real life.

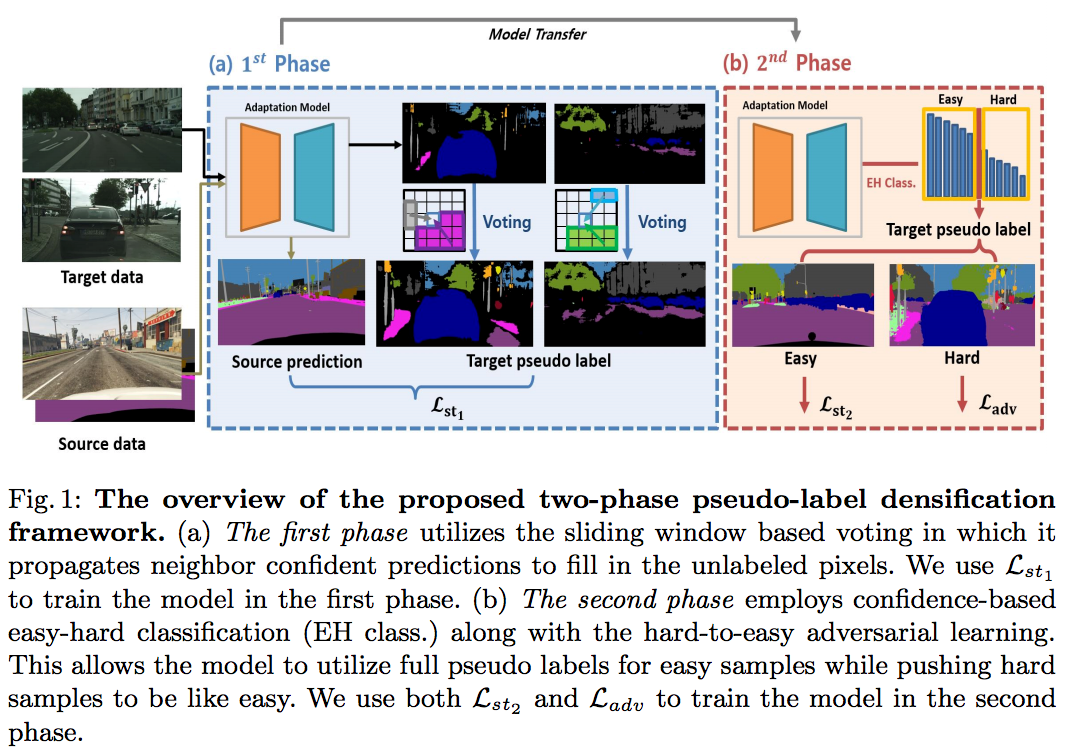

Conference/Journal, Year: ECCV, 2020

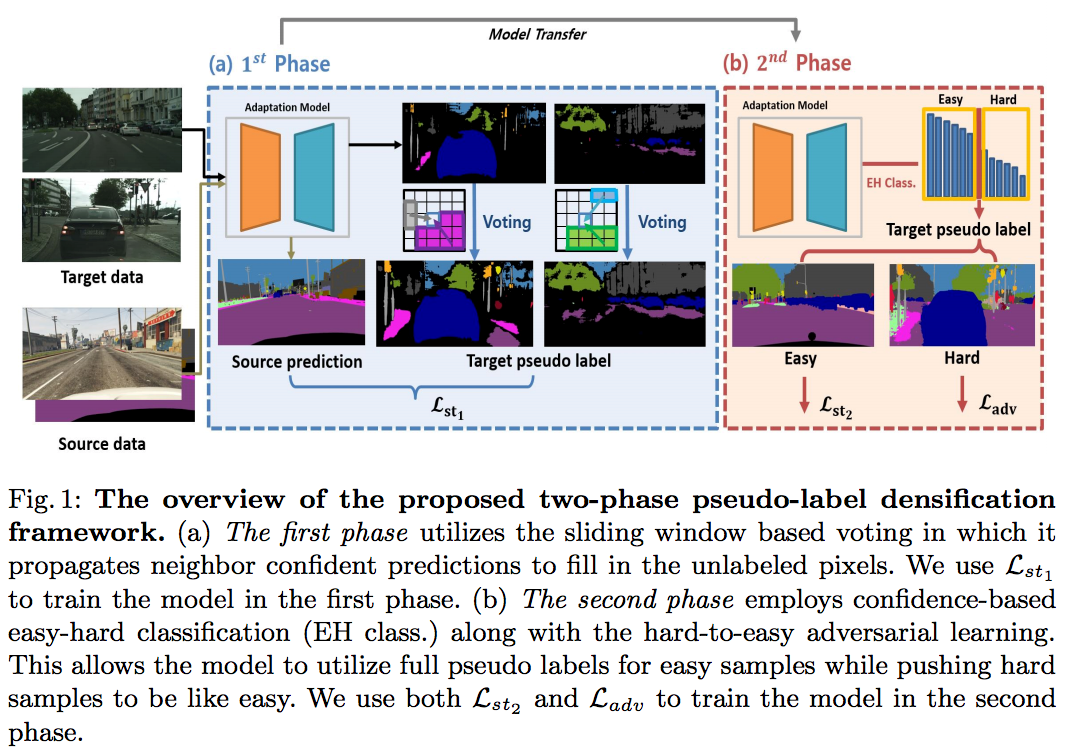

Recently, deep self-training approaches have emerged as a powerful solution to unsupervised domain adaptation. The self-training scheme involves iterative processing of target data; it generates target pseudo labels and retrains the network. However, since only the confident predictions are taken as pseudo labels, existing self-training approaches inevitably produce sparse pseudo labels in practice. We see this as critical because the resulting insufficient training signals lead to a suboptimal, error-prone model. In order to tackle this problem, we propose a novel Two-phase Pseudo Label Densification framework, referred to as TPLD. In the first phase, we use sliding window voting to propagate the confident predictions, utilizing intrinsic spatial correlations in the images. In the second phase, we perform a confidence-based easy-hard classification. For the easy samples, we now employ their full pseudo labels. For the hard ones, we instead adopt adversarial learning to enforce hard-to-easy feature alignment. To ease the training process and avoid noisy predictions, we introduce the bootstrapping mechanism to the original self-training loss. We show that the proposed TPLD can be easily integrated into existing self-training-based approaches and improves performance significantly. Combined with the recently proposed CRST self-training framework, we achieve new state-of-the-art results on two standard UDA benchmarks.

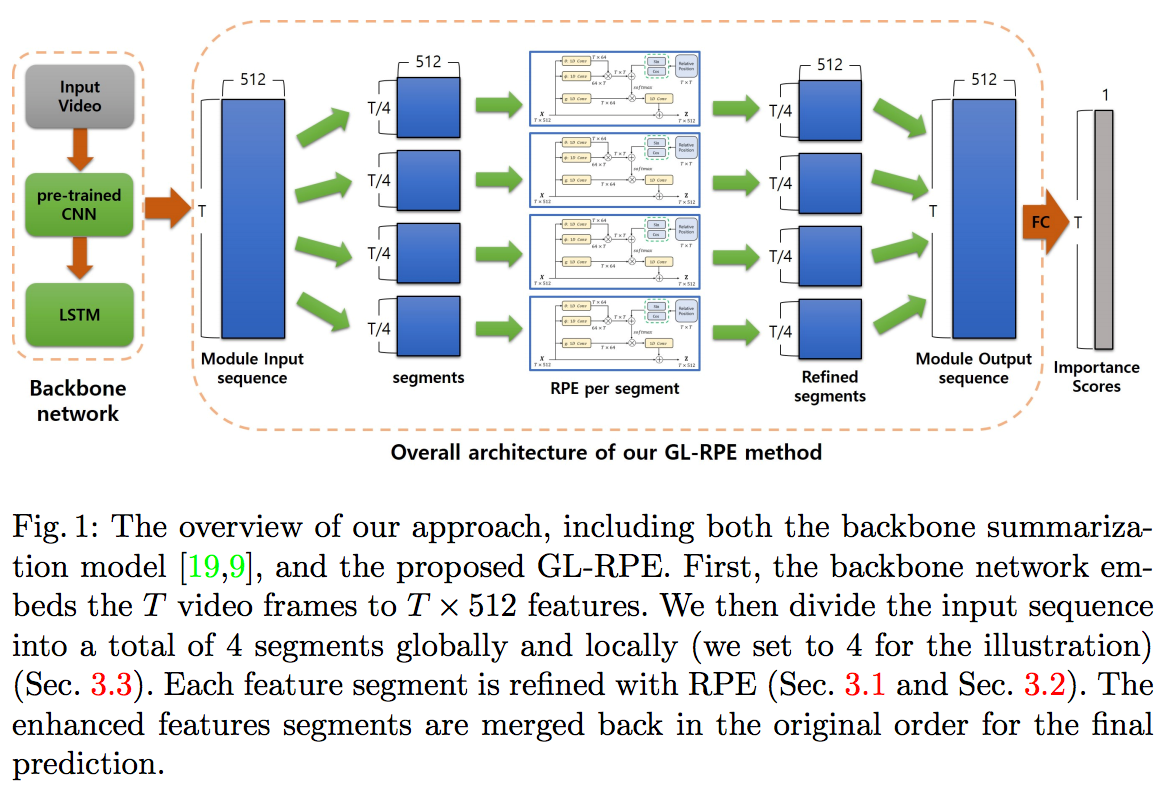

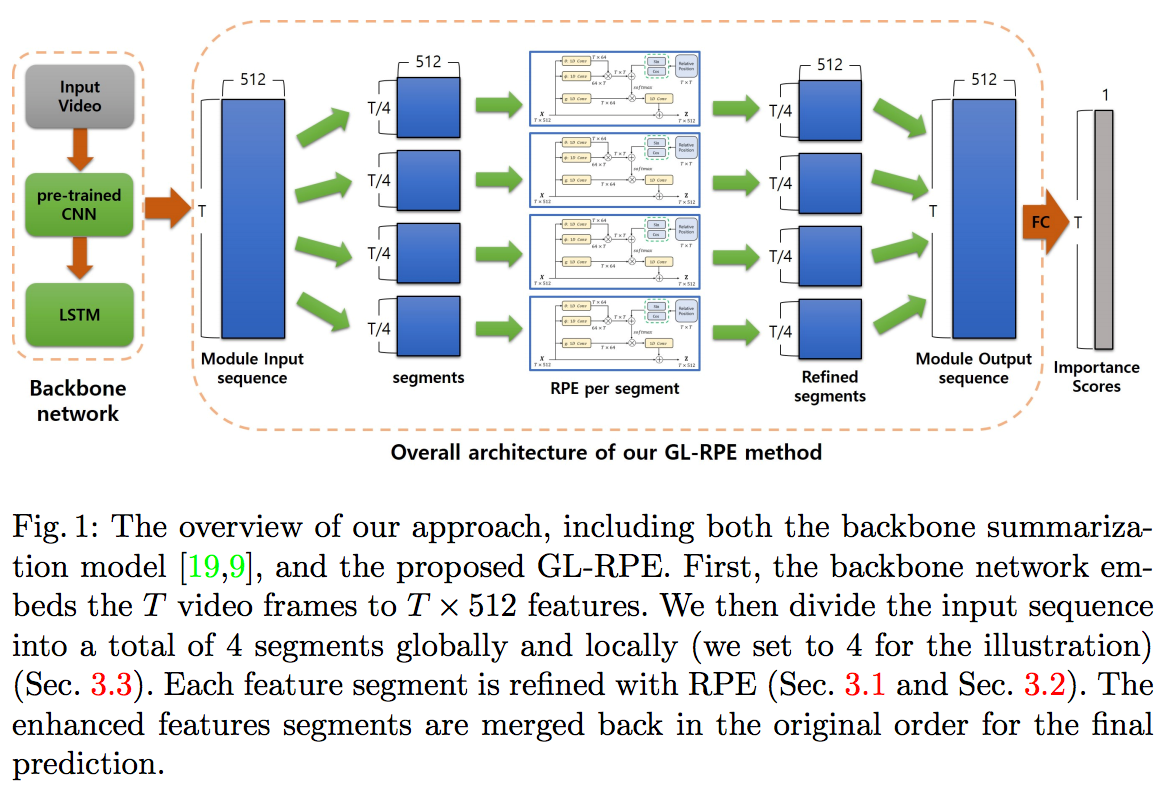

Conference/Journal, Year: ECCV, 2020

In order to summarize a video properly, it is important to grasp the sequential structure of the video as well as the long-term dependency between frames. This need is especially clear for unsupervised learning. One possible solution is to utilize a well-known technique in the field of natural language processing for long-term dependency and sequential property: self-attention with relative position embedding (RPE). However, compared to natural language processing, video summarization requires capturing a much longer global context. In this paper, we therefore present a novel input decomposition strategy, which samples the input both globally and locally. This provides an effective temporal window for RPE to operate on and improves overall computational efficiency significantly. By combining Global-and-Local input decomposition with RPE, we arrive at GL-RPE. Our approach allows the network to capture both local and global interdependencies between video frames effectively. Since GL-RPE can be easily integrated into existing methods, we apply it to two different unsupervised backbones. We provide extensive ablation studies and visual analysis to verify the effectiveness of the proposals. We demonstrate that our approach achieves new state-of-the-art performance using the recently proposed rank order-based metrics: Kendall’s τ and Spearman’s ρ. Furthermore, although our method is unsupervised, we show that it performs on par with the fully supervised method.
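
The two rank order-based metrics named above compare how a method orders frames against how a human annotator orders them. The following is a minimal sketch, assuming there are no tied scores; the function names and the example scores are made up for illustration and are not taken from the paper or its evaluation code.

```python
def ranks(values):
    """Return 1-based ranks of the values (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def kendall_tau(x, y):
    """Kendall's tau-a: (concordant - discordant) / number of pairs."""
    n = len(x)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            sign = (x[i] - x[j]) * (y[i] - y[j])
            if sign > 0:
                concordant += 1
            elif sign < 0:
                discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


def spearman_rho(x, y):
    """Spearman's rho via the rank-difference formula (valid without ties)."""
    n = len(x)
    d_squared = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical per-frame importance scores (illustrative values only).
predicted = [0.9, 0.1, 0.5, 0.7]
human = [0.8, 0.2, 0.4, 0.9]

tau = kendall_tau(predicted, human)
rho = spearman_rho(predicted, human)
print(f"tau={tau:.3f} rho={rho:.3f}")
```

Both values lie in [-1, 1], and a value near 1 means the predicted ordering agrees closely with the human one. Real evaluations typically handle ties, which this sketch does not.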

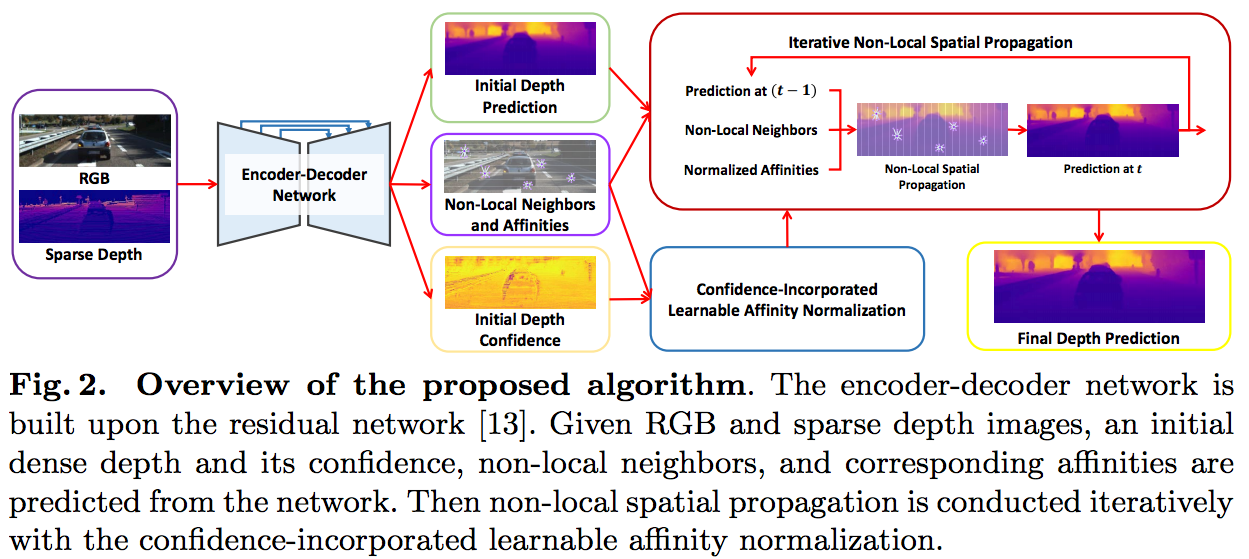

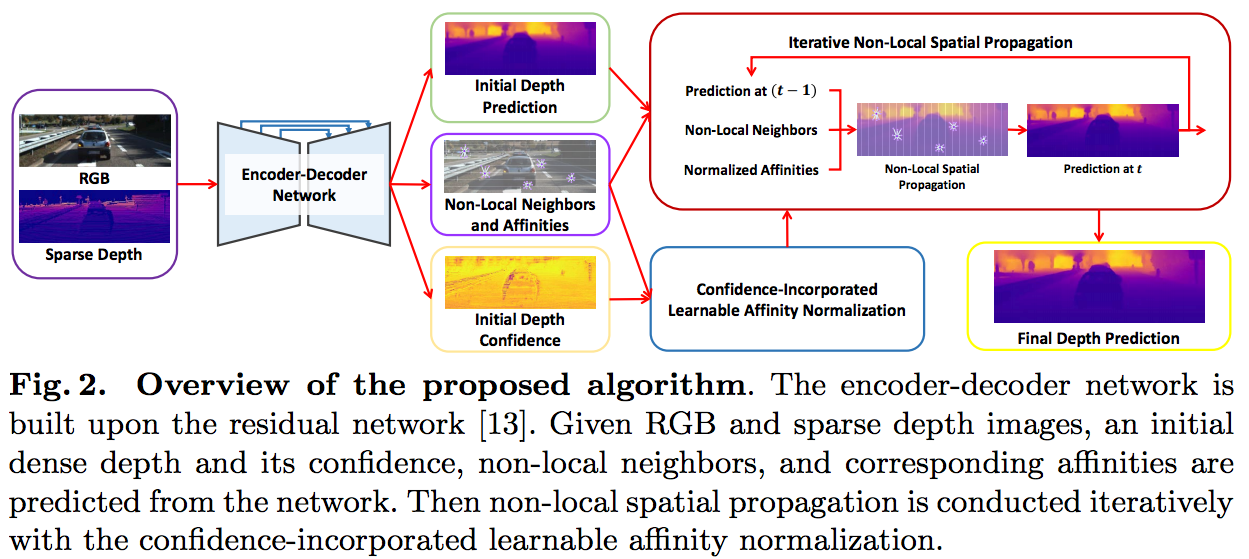

Conference/Journal, Year: ECCV, 2020

In this paper, we propose a robust and efficient end-to-end non-local spatial propagation network for depth completion. The proposed network takes RGB and sparse depth images as inputs and estimates non-local neighbors and their affinities for each pixel, as well as an initial depth map with pixel-wise confidences. The initial depth prediction is then iteratively refined by its confidence and a non-local spatial propagation procedure based on the predicted non-local neighbors and corresponding affinities. Unlike previous algorithms that utilize fixed local neighbors, the proposed algorithm effectively avoids irrelevant local neighbors and concentrates on relevant non-local neighbors during propagation. In addition, we introduce a learnable affinity normalization to better learn the affinity combinations compared to conventional methods. The proposed algorithm is inherently robust to the mixed-depth problem on depth boundaries, which is one of the major issues for existing depth estimation/completion algorithms. Experimental results on indoor and outdoor datasets demonstrate that the proposed algorithm is superior to conventional algorithms in terms of depth completion accuracy and robustness to the mixed-depth problem. Our implementation is publicly available on the project page.

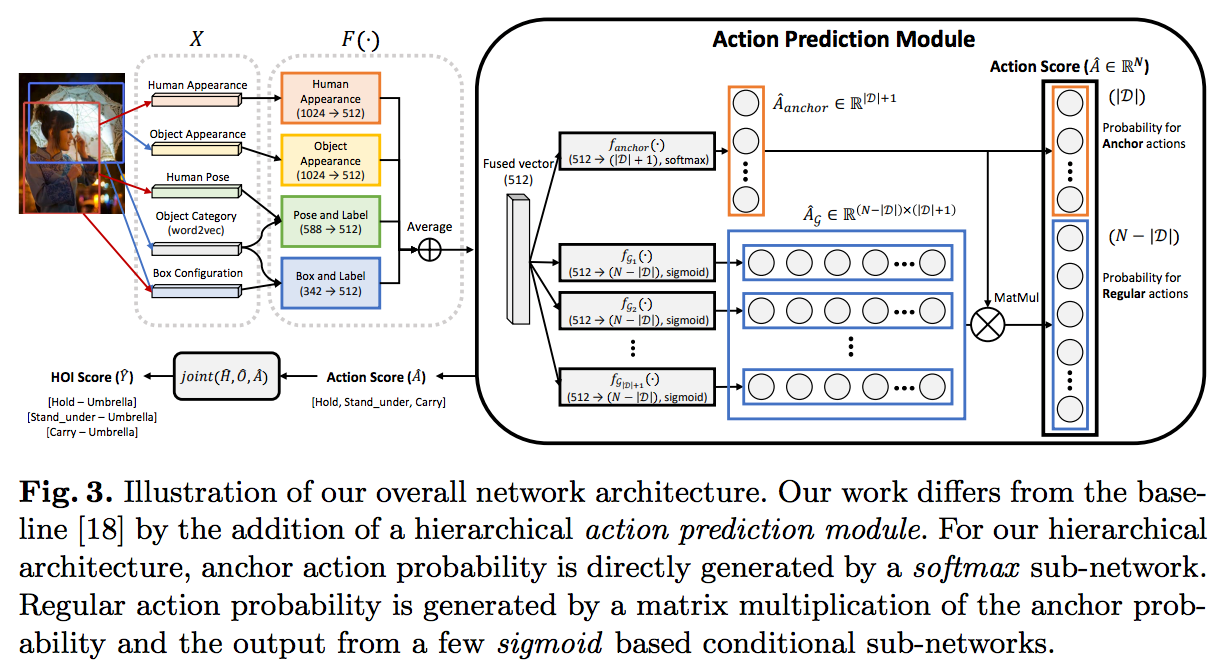

Conference/Journal, Year: ECCV, 2020

A common problem in the human-object interaction (HOI) detection task is that numerous HOI classes have only a small number of labeled examples, resulting in training sets with a long-tailed distribution. The lack of positive labels can lead to low classification accuracy for these classes. To address this issue, we observe that there exist natural correlations and anti-correlations among human-object interactions. In this paper, we model the correlations as action co-occurrence matrices and present techniques to learn these priors and leverage them for more effective training, especially on rare classes. The utility of our approach is demonstrated experimentally: its performance exceeds that of state-of-the-art methods on both of the leading HOI detection benchmark datasets, HICO-Det and V-COCO.
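
To make the idea of an action co-occurrence matrix concrete, here is a minimal sketch that estimates the conditional frequency of action j given action i from multi-label annotations. This is one plausible construction assumed for illustration; the abstract does not specify how the paper builds its matrices, and the action names and annotations below are invented.

```python
def cooccurrence_matrix(label_sets, num_actions):
    """Estimate P(action j | action i) from sets of co-annotated actions."""
    counts = [[0] * num_actions for _ in range(num_actions)]
    for labels in label_sets:
        for i in labels:
            for j in labels:
                counts[i][j] += 1  # the diagonal holds how often action i occurs

    matrix = []
    for i in range(num_actions):
        occurrences = counts[i][i]
        row = [counts[i][j] / occurrences if occurrences else 0.0
               for j in range(num_actions)]
        matrix.append(row)
    return matrix


# Hypothetical action vocabulary and annotations (illustrative only).
ACTIONS = ["sit_on", "ride", "wash"]
annotations = [{0, 1}, {0, 1}, {1}, {2}]

matrix = cooccurrence_matrix(annotations, len(ACTIONS))
for name, row in zip(ACTIONS, matrix):
    print(name, [round(value, 2) for value in row])
```

The matrix is not symmetric: in this toy data "sit_on" always comes with "ride", but "ride" appears without "sit_on" one time in three. A zero entry, such as "sit_on" with "wash", plays the role of an anti-correlation.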

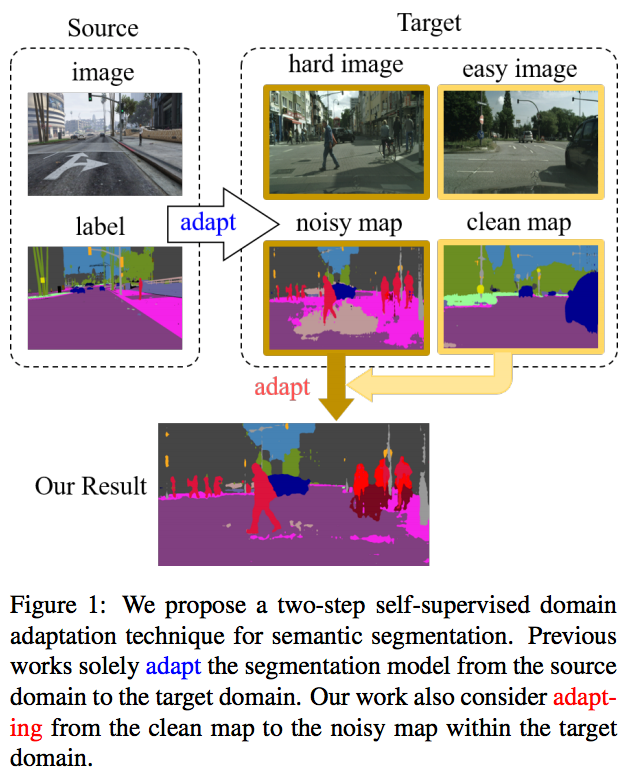

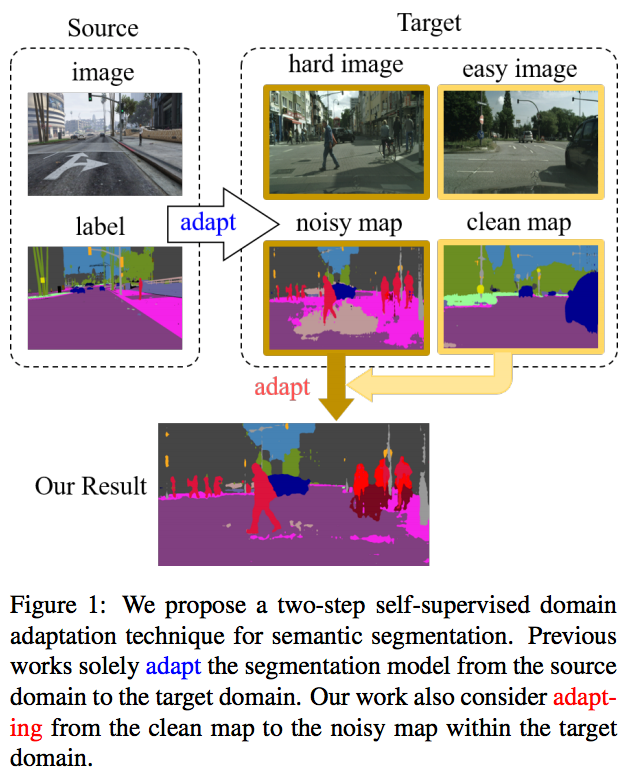

Conference/Journal, Year: CVPR, 2020

Convolutional neural network-based approaches have achieved remarkable progress in semantic segmentation. However, these approaches rely heavily on annotated data, which is labor-intensive to produce. To cope with this limitation, automatically annotated data generated from graphics engines are used to train segmentation models. However, models trained on synthetic data are difficult to transfer to real images. To tackle this issue, previous works have considered directly adapting models from the source data to the unlabeled target data (to reduce the inter-domain gap). Nonetheless, these techniques do not consider the large distribution gap among the target data itself (intra-domain gap). In this work, we propose a two-step self-supervised domain adaptation approach to minimize the inter-domain and intra-domain gaps together. First, we conduct the inter-domain adaptation of the model; from this adaptation, we separate the target domain into an easy and a hard split using an entropy-based ranking function. Finally, to decrease the intra-domain gap, we propose to employ a self-supervised adaptation technique from the easy to the hard split. Experimental results on numerous benchmark datasets highlight the effectiveness of our method against existing state-of-the-art approaches. The source code is available at https://github.com/feipan664/IntraDA.git.
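
The entropy-based ranking step can be illustrated with a short sketch: score each target image by the mean entropy of its per-pixel class probabilities, sort, and cut the ranking into an easy and a hard split. The function names, the `easy_ratio` parameter, and the toy predictions are assumptions made for this example; they are not taken from the linked repository.

```python
import math


def mean_entropy(prob_map):
    """Average Shannon entropy over a list of per-pixel class distributions."""
    total = 0.0
    for pixel_probs in prob_map:
        total += -sum(p * math.log(p) for p in pixel_probs if p > 0.0)
    return total / len(prob_map)


def split_easy_hard(prob_maps, easy_ratio=0.5):
    """Rank images by mean entropy (low means confident) and split the ranking."""
    ranked = sorted(prob_maps, key=lambda name: mean_entropy(prob_maps[name]))
    n_easy = int(len(ranked) * easy_ratio)
    return ranked[:n_easy], ranked[n_easy:]


# Hypothetical two-class predictions for two pixels per image (illustrative only).
predictions = {
    "img_a": [[0.98, 0.02], [0.95, 0.05]],
    "img_b": [[0.55, 0.45], [0.50, 0.50]],
    "img_c": [[0.90, 0.10], [0.80, 0.20]],
    "img_d": [[0.60, 0.40], [0.70, 0.30]],
}

easy, hard = split_easy_hard(predictions, easy_ratio=0.5)
print("easy:", easy)
print("hard:", hard)
```

Images with confident, low-entropy predictions land in the easy split, and the remaining images form the hard split that the second adaptation step targets.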

Conference/Journal, Year: CVPR, 2020

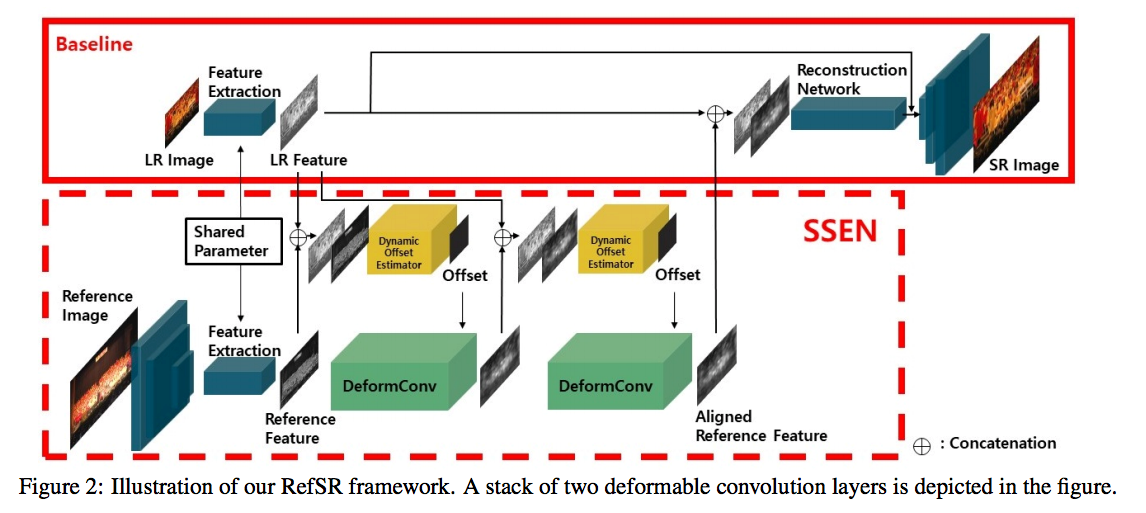

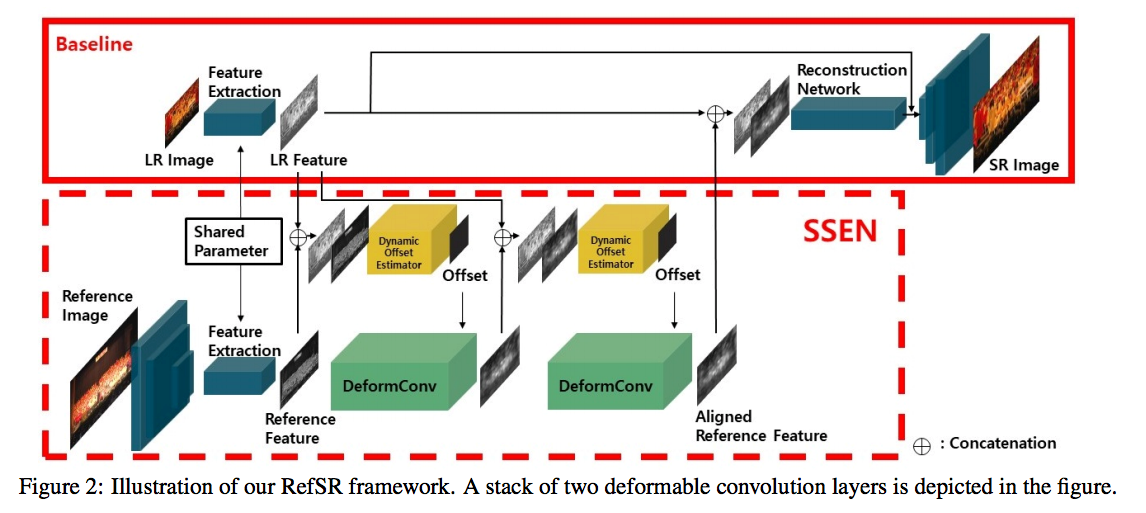

In this paper, we propose a novel and efficient reference feature extraction module referred to as the Similarity Search and Extraction Network (SSEN) for reference-based super-resolution (RefSR) tasks. The proposed module extracts aligned relevant features from a reference image to increase the performance over single image super-resolution (SISR) methods. In contrast to conventional algorithms which utilize brute-force searches or optical flow estimations, the proposed algorithm is end-to-end trainable without any additional supervision or heavy computation, predicting the best match with a single network forward operation. Moreover, the proposed module is aware of not only the best matching position but also the relevancy of the best match. This makes our algorithm substantially robust when irrelevant reference images are given, overcoming the major cause of the performance degradation when using existing RefSR methods. Furthermore, our module can be utilized for self-similarity SR if no reference image is available. Experimental results demonstrate the superior performance of the proposed algorithm compared to previous works both quantitatively and qualitatively.

Conference/Journal, Year: CVPR, 2020

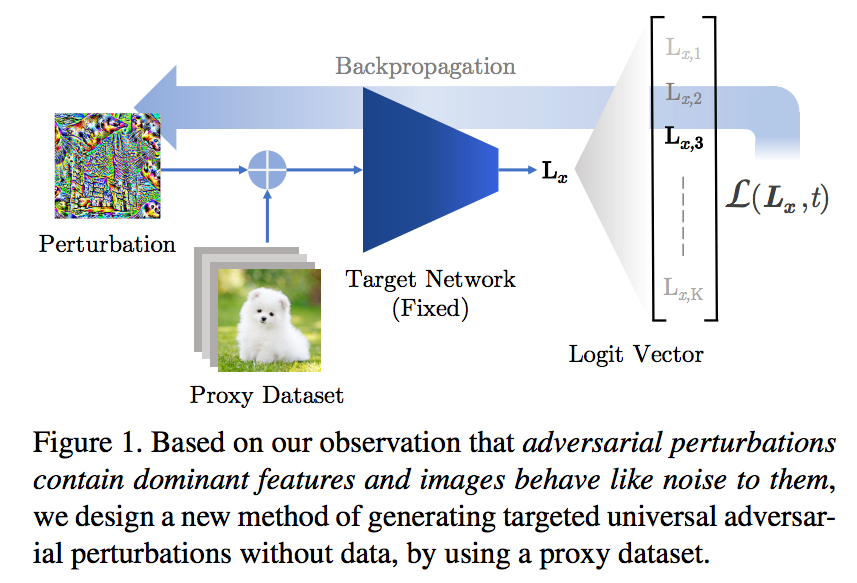

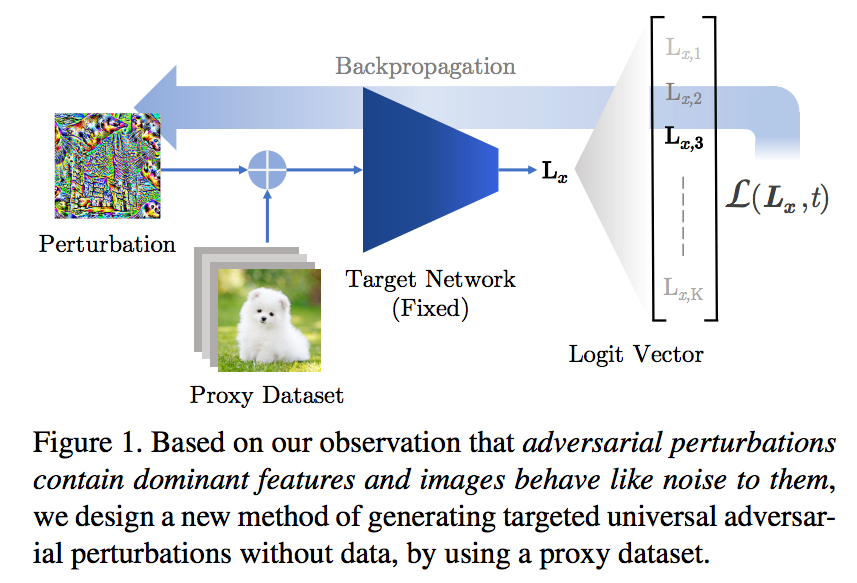

A wide variety of works have explored the reason for the existence of adversarial examples, but there is no consensus on the explanation. We propose to treat the DNN logits as a vector for feature representation, and exploit them to analyze the mutual influence of two independent inputs based on the Pearson correlation coefficient (PCC). We utilize this vector representation to understand adversarial examples by disentangling the clean images and adversarial perturbations, and analyze their influence on each other. Our results suggest a new perspective towards the relationship between images and universal perturbations: Universal perturbations contain dominant features, and images behave like noise to them. This feature perspective leads to a new method for generating targeted universal adversarial perturbations using random source images. We are the first to achieve the challenging task of a targeted universal attack without utilizing original training data. Our approach using a proxy dataset achieves comparable performance to the state-of-the-art baselines which utilize the original training dataset.
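
The logit-vector analysis can be sketched as follows: treat each logit vector as a feature representation and compare the logits of the combined input against those of the image alone and of the perturbation alone using the PCC. The logit values below are made up to illustrate the comparison and do not come from any trained network; the variable names are likewise hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


# Hypothetical logits over four classes (illustrative values only).
logits_image = [2.0, 0.5, -1.0, 0.1]          # clean image alone
logits_perturbation = [-0.5, 6.0, 0.2, -1.0]  # universal perturbation alone
logits_combined = [0.3, 6.2, -0.5, -0.8]      # image plus perturbation

pcc_image = pearson(logits_combined, logits_image)
pcc_perturbation = pearson(logits_combined, logits_perturbation)
print(f"PCC with image: {pcc_image:.3f}")
print(f"PCC with perturbation: {pcc_perturbation:.3f}")
```

In this toy setting the combined logits correlate far more strongly with the perturbation's logits than with the image's, which is the pattern the abstract describes as the perturbation carrying the dominant features.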