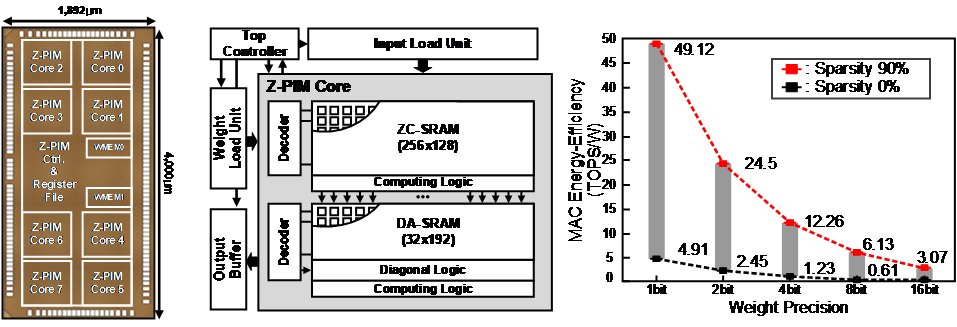

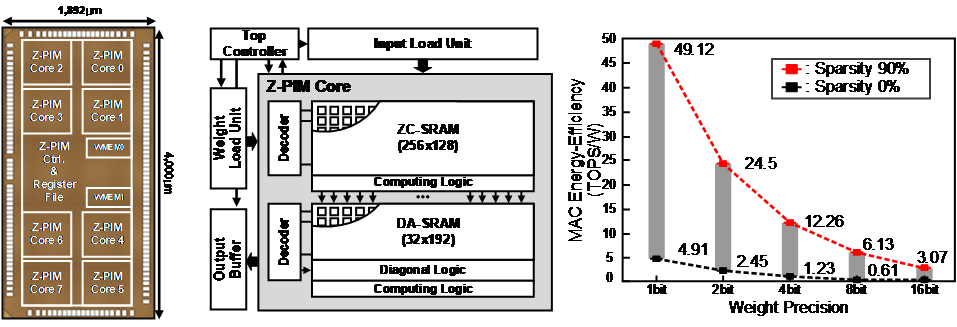

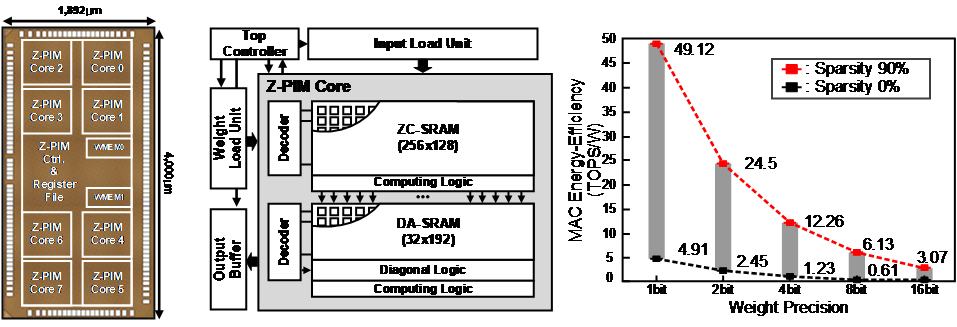

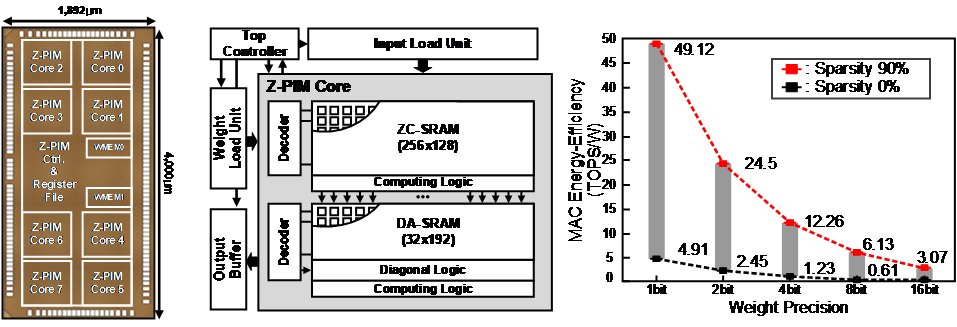

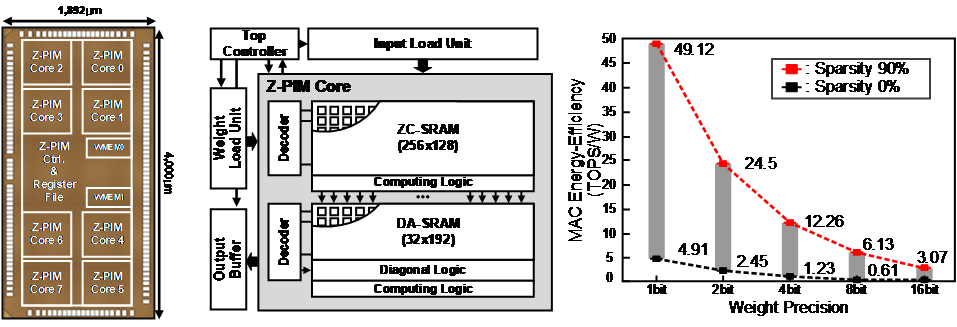

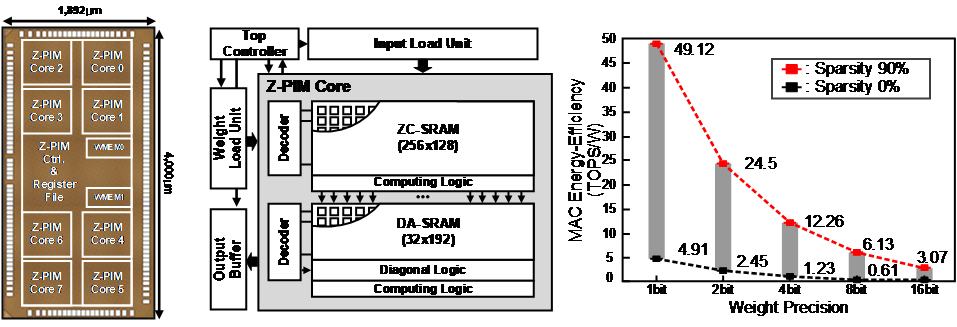

We present an energy-efficient processing-in-memory (PIM) architecture named Z-PIM that supports both sparsity handling and fully variable bit-precision of weight data for energy-efficient deep neural networks. Z-PIM adopts bit-serial arithmetic, which performs a multiplication bit by bit over multiple cycles, to reduce the complexity of the operation in each cycle and to provide flexibility in bit-precision. To this end, it employs a zero-skipping convolution SRAM, which performs in-memory AND operations based on custom 8T-SRAM cells together with channel-wise accumulations, and a diagonal accumulation SRAM, which performs bit-wise and spatial-wise accumulation on the channel-wise accumulation results using diagonal logic and adders to produce the final convolution outputs. We propose a hierarchical bit-line structure that reduces the parasitic capacitance of the bit-lines, enabling energy-efficient weight-bit pre-charging and computational read-out. Its charge-reuse scheme reduces the switching rate by 95.42% for the convolution layers of the VGG-16 model. In addition, Z-PIM’s channel-wise data mapping enables sparsity handling by skipping the reads of input channels whose weights are zero. Read-operation pipelining, enabled by read-sequence scheduling, improves throughput by 66.1%. The Z-PIM chip is fabricated in a 65-nm CMOS process on a 7.568 mm² die and consumes 5.294 mW on average at 1.0 V and 200 MHz. It achieves 0.31 to 49.12 TOPS/W energy efficiency for convolution operations as the weight sparsity and bit-precision vary from 0.1 to 0.9 and from 1 bit to 16 bits, respectively. On a figure of merit that considers input bit-width, weight bit-width, and energy efficiency, Z-PIM shows a more than 2.1× improvement over state-of-the-art PIM implementations.

Journal: IEEE Journal of Solid-State Circuits 2021
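
To make the bit-serial, zero-skipping idea in the Z-PIM abstract concrete, here is a minimal functional sketch in Python. It assumes unsigned integer inputs and weights and models only the arithmetic (AND of one weight bit with one input bit, channel-wise accumulation, then shift-accumulation by bit significance); it is not the chip's dataflow, and it ignores the SRAM layout, pipelining, and diagonal-accumulation hardware. The function name `bit_serial_dot` is hypothetical.

```python
def bit_serial_dot(inputs, weights, in_bits, w_bits):
    """Unsigned dot product computed bit-serially, skipping zero-weight channels.

    Functional model only: timing, SRAM organization, and the diagonal
    accumulation hardware are not modeled. Returns (result, skipped_channels).
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    if any(x < 0 or x >= 1 << in_bits for x in inputs):
        raise ValueError("inputs must be unsigned and fit in in_bits")
    if any(w < 0 or w >= 1 << w_bits for w in weights):
        raise ValueError("weights must be unsigned and fit in w_bits")
    # Zero skipping: a channel whose weight is zero contributes nothing,
    # so it is never read.
    active = [(x, w) for x, w in zip(inputs, weights) if w != 0]
    total = 0
    for i in range(w_bits):        # weight precision is just a loop bound
        for j in range(in_bits):
            # AND bit i of each weight with bit j of its input, then
            # accumulate across channels (a popcount).
            partial = sum(((w >> i) & 1) & ((x >> j) & 1) for x, w in active)
            # Shift-accumulate the partial sum by its significance 2^(i+j).
            total += partial << (i + j)
    return total, len(weights) - len(active)
```

For example, `bit_serial_dot([3, 5, 7], [2, 0, 4], 3, 3)` returns `(34, 1)`: the middle channel is skipped, and the result equals 3·2 + 7·4. Because the weight bit-width only sets the number of passes, changing precision from 1 to 16 bits needs no change to the arithmetic itself, which is the flexibility the abstract refers to.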

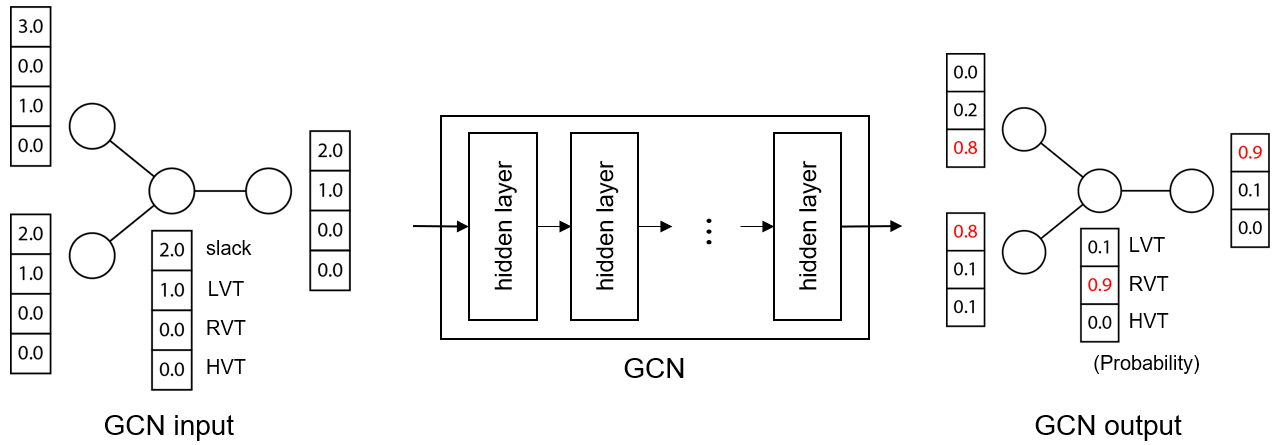

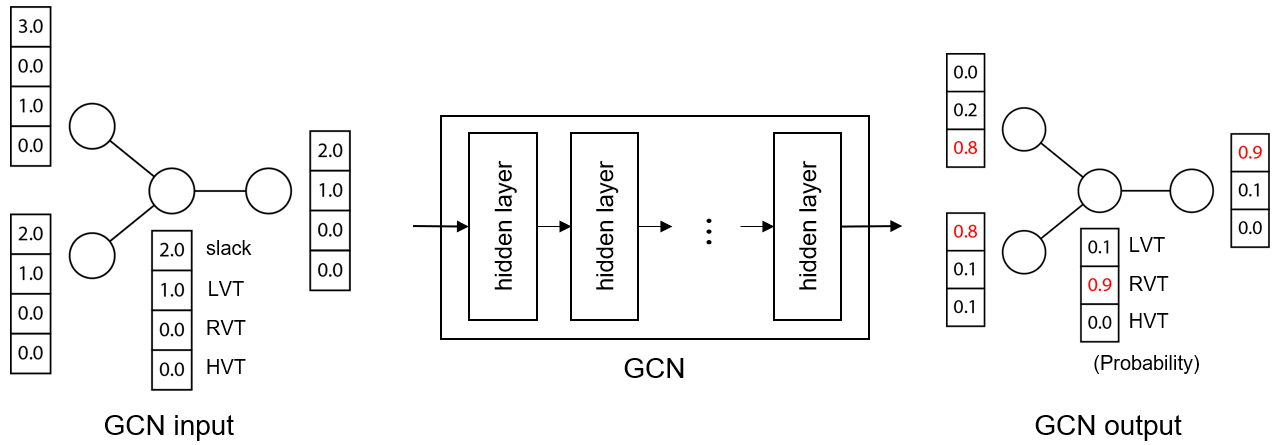

At a very late design stage, engineering change order (ECO) leakage optimization is often performed to swap some cells for lower-leakage ones, e.g., cells with a higher threshold voltage (Vth) or a longer gate length. It is very effective but time-consuming because of the iterative nature of swapping cells, checking timing, and correcting violations. We introduce a graph convolutional network (GCN) for quick ECO leakage optimization. The GCN receives a number of input parameters that model the current timing information of a netlist, as well as the connectivity of the cells in the form of a weighted connectivity matrix. Once trained, the GCN predicts the exact Vth (using the Vth assigned by commercial ECO leakage optimization as the reference) for 83% of cells on average across the test circuits. The remaining 17% of cells are responsible for some negative timing slack. To correct this timing, as well as to remove any minimum implant width (MIW) violations, we propose a heuristic Vth reassignment. The combined GCN and heuristic achieve a 52% leakage reduction, compared with a 61% reduction from commercial ECO, in less than half the runtime.
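
As an illustration of how a GCN can consume per-cell timing features together with a weighted connectivity matrix, the sketch below uses the widely known symmetric-normalization propagation rule (Kipf and Welling style) in NumPy. This is an assumed, generic formulation, not necessarily the one used in the work above; the netlist, edge weights, features, and Vth class labels are hypothetical, and the weights are untrained.

```python
import numpy as np

def normalize_adjacency(A):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 of a non-negative weighted adjacency matrix."""
    A_hat = A + np.eye(A.shape[0])          # self-loops keep each cell's own features
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A_norm, H, W):
    """Aggregate neighbour features, project them, and apply ReLU."""
    return np.maximum(A_norm @ H @ W, 0.0)

def predict_vth_class(A, X, W1, W2):
    """Two-layer GCN returning one Vth class index per cell (hypothetically 0=LVT, 1=SVT, 2=HVT)."""
    A_norm = normalize_adjacency(A)
    H = gcn_layer(A_norm, X, W1)
    logits = A_norm @ H @ W2
    return logits.argmax(axis=1)

# Hypothetical 4-cell netlist; edge weights could, for example, encode wire load.
A = np.array([[0, 1, 0, 0],
              [1, 0, 2, 0],
              [0, 2, 0, 1],
              [0, 0, 1, 0]], dtype=float)
# Hypothetical per-cell features: [slack (ns), arrival time (ns), current Vth index].
X = np.array([[0.30, 1.2, 0.0],
              [0.05, 1.5, 0.0],
              [0.50, 0.9, 1.0],
              [0.10, 1.7, 0.0]])
rng = np.random.default_rng(0)
W1, W2 = rng.normal(size=(3, 8)), rng.normal(size=(8, 3))   # untrained weights
print(predict_vth_class(A, X, W1, W2))
```

Each layer mixes a cell's features with those of its connected cells, weighted by the connectivity matrix, so a cell's predicted Vth can reflect the timing slack of its neighbours. In practice `W1` and `W2` would be trained against the Vth assignments from a commercial ECO run, and any remaining timing or MIW violations would still need a correction pass.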

Machine learning models have been applied to a wide range of computational lithography applications since around 2010. Their higher modeling capability allows more accurate modeling. Many computationally expensive applications can take advantage of machine learning models, since a well-trained model provides a quick estimate of the outcome. This tutorial reviews a number of such computational lithography applications that use machine learning models. They include mask optimization with OPC (optical proximity correction) and EPC (etch proximity correction), assist-feature insertion and printability checking, lithography modeling with optical and resist models, test patterns, and hotspot detection and correction.

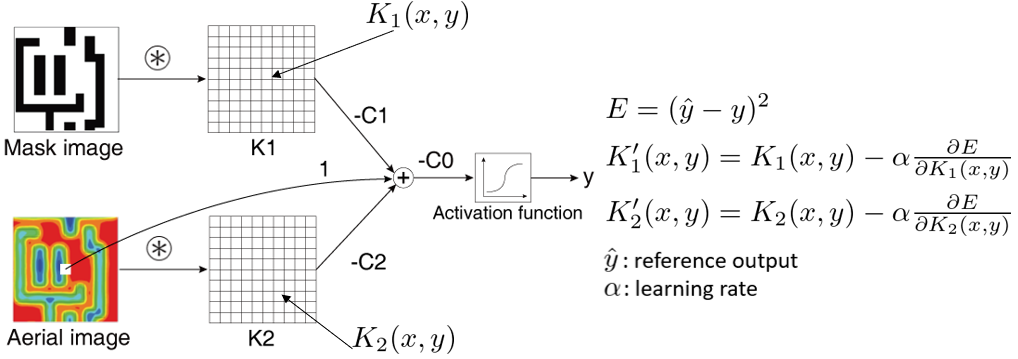

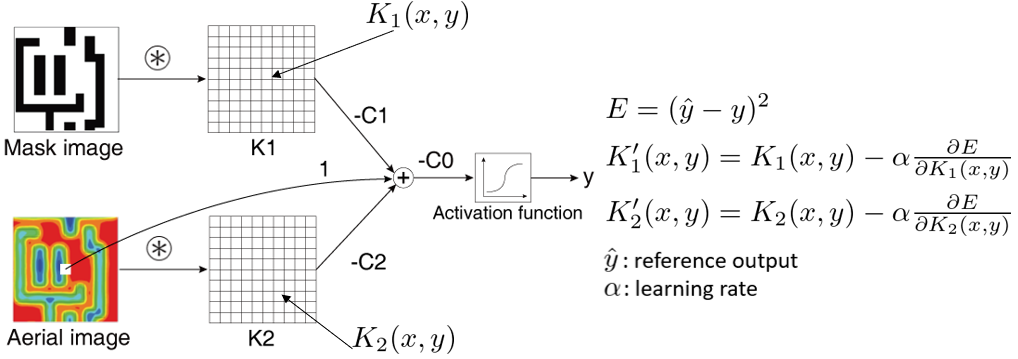

An accurate and fast lithography model is necessary for computational lithography applications such as optical proximity correction (OPC) and lithography rule check. In a lithography model, an optical model calculates the image intensity, and a resist model then outputs a resist contour. The resist model is empirical: images are convolved with resist kernels, and their weighted sum is used to derive a resist model signal that is compared with a threshold. Conventional resist models use simple resist kernels such as Gaussian kernels, so they require many kernels to achieve high accuracy. We propose to use free-form resist kernels. Because a resist model has the same structure as a convolutional neural network (CNN), we represent the resist model with free-form kernels as a CNN and train the network. To avoid overfitting, we initialize the model with conventional Gaussian kernels. Training data are carefully selected so that the resist contour is accurately predicted. A conventional resist model with 9 Gaussian kernels is converted into a model with 2 free-form kernels, which achieves 35% faster lithography simulation. In addition, simulation accuracy in CD is improved by 15%.
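
The resist-model structure described above (convolve the image with each kernel, take a weighted sum, compare with a threshold) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: the zero-padded "same"-size convolution, the kernel sizes, and the threshold are choices made for the example, and no training loop is shown. A free-form kernel is simply an arbitrary array; initializing it with `gaussian_kernel` mirrors the initialization idea in the abstract, while training would then update its entries like the weights of a single-output-channel CNN layer.

```python
import numpy as np

def conv2d_same(image, kernel):
    """2-D convolution with zero padding; output has the image's shape (odd-sized kernels)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)))
    flipped = kernel[::-1, ::-1]            # true convolution flips the kernel
    out = np.zeros(image.shape, dtype=float)
    for dy in range(kh):
        for dx in range(kw):
            out += flipped[dy, dx] * padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out

def gaussian_kernel(size, sigma):
    """Normalized Gaussian: a conventional resist kernel, or a starting point for a free-form one."""
    r = np.arange(size) - (size - 1) / 2
    g = np.exp(-(r[:, None] ** 2 + r[None, :] ** 2) / (2 * sigma ** 2))
    return g / g.sum()

def resist_signal(image, kernels, weights):
    """Weighted sum of the image convolved with each resist kernel."""
    return sum(w * conv2d_same(image, k) for k, w in zip(kernels, weights))

def resist_contour(image, kernels, weights, threshold):
    """Boolean map of where the resist signal exceeds the threshold."""
    return resist_signal(image, kernels, weights) > threshold
```

Replacing many Gaussian kernels with fewer free-form ones reduces the number of `conv2d_same` calls per evaluation, which is where a speed-up of the kind reported above would come from.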

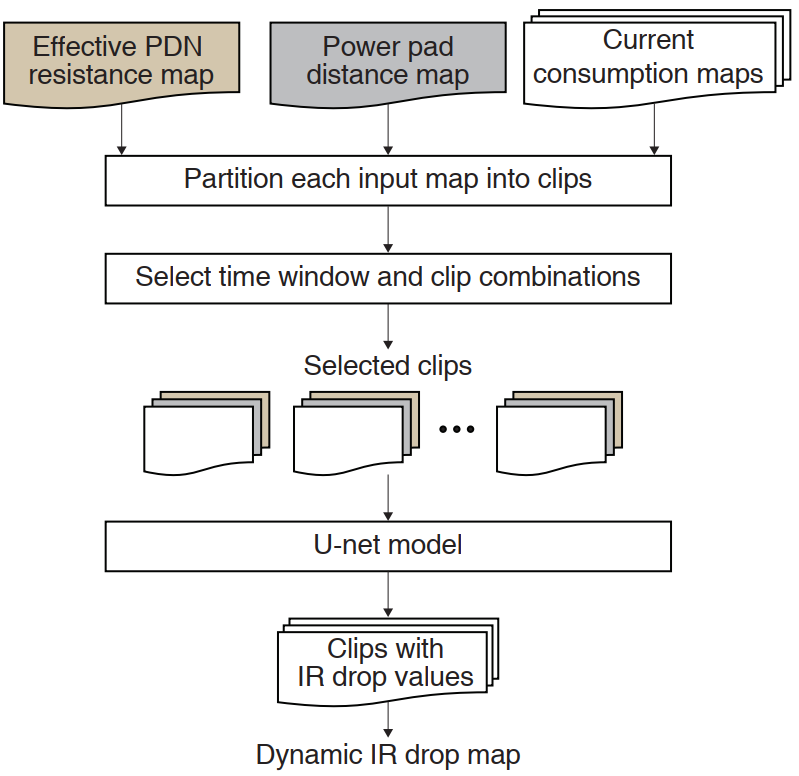

Dynamic IR drop analysis is very time-consuming, so it is applied only at the signoff stage before tapeout. A U-Net model, an image-to-image translation neural network, is employed for quick analysis of dynamic IR drop. Several feature maps are used as U-Net inputs: a map of the effective PDN resistance seen from each gate, a map of the current consumption of each gate (at a particular time instance), and a map of the relative distance to the nearest power supply pad. The layout is partitioned into a grid of regions, and IR drop is predicted region by region. For fast prediction, (1) analysis is performed only in time windows that are estimated to cause high IR drop, and (2) the effective PDN resistance is approximated through a proposed simplification method. Experiments with a few test circuits demonstrate that dynamic IR drop is predicted 20 times faster than with a commercial analysis package, with 15% error.
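
The input side of such a model amounts to rasterizing per-gate quantities onto the region grid. The sketch below builds three maps in NumPy: effective resistance, current, and distance to the nearest pad. The aggregation rules (maximum resistance and summed current per region) and the use of Euclidean distance from each region centre are assumptions made for illustration; the U-Net itself, the time-window selection, and the resistance simplification method are not shown.

```python
import numpy as np

def build_feature_maps(gates, pads, die_w, die_h, nx, ny):
    """Rasterize gate data onto an ny-by-nx grid of regions.

    gates: iterable of (x, y, r_eff, current); pads: iterable of (x, y).
    Returns a (3, ny, nx) stack: [max effective resistance, total current,
    distance from region centre to nearest pad].
    """
    r_map = np.zeros((ny, nx))
    i_map = np.zeros((ny, nx))
    cw, ch = die_w / nx, die_h / ny
    for x, y, r_eff, cur in gates:
        col = min(int(x / cw), nx - 1)      # clamp gates on the right/top edge
        row = min(int(y / ch), ny - 1)
        r_map[row, col] = max(r_map[row, col], r_eff)   # assumed: worst case per region
        i_map[row, col] += cur                          # assumed: total current per region
    # Distance from each region centre to its nearest power pad.
    cx = (np.arange(nx) + 0.5) * cw
    cy = (np.arange(ny) + 0.5) * ch
    gx, gy = np.meshgrid(cx, cy)            # both shaped (ny, nx)
    pad_xy = np.asarray(pads, dtype=float)
    d = np.sqrt((gx[..., None] - pad_xy[:, 0]) ** 2 + (gy[..., None] - pad_xy[:, 1]) ** 2)
    dist_map = d.min(axis=-1)
    return np.stack([r_map, i_map, dist_map])
```

Stacking the maps as channels gives a fixed-size image per time instance, which is the form an image-to-image network such as U-Net expects; the output would then be an IR drop map on the same grid.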

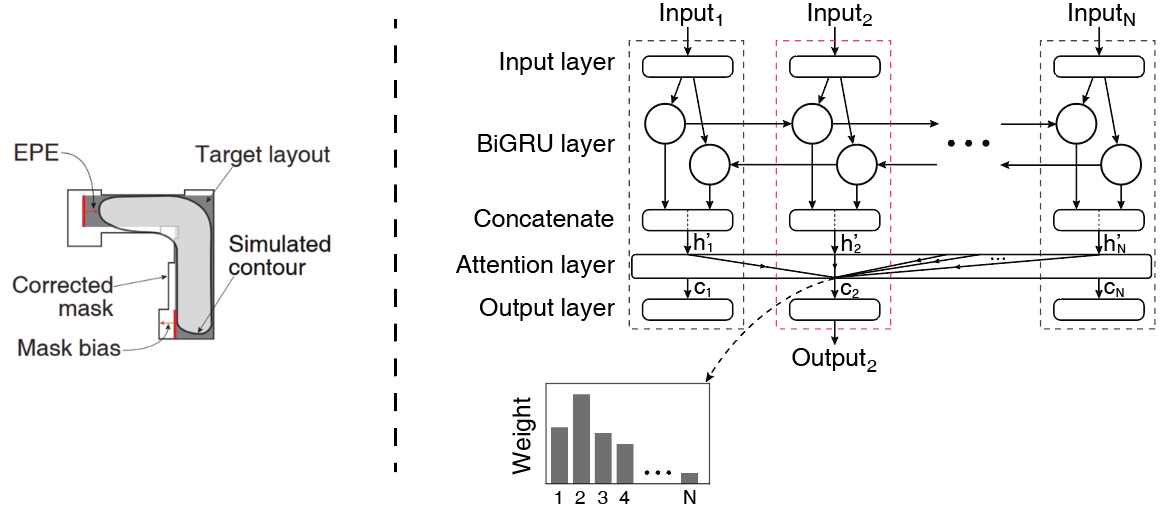

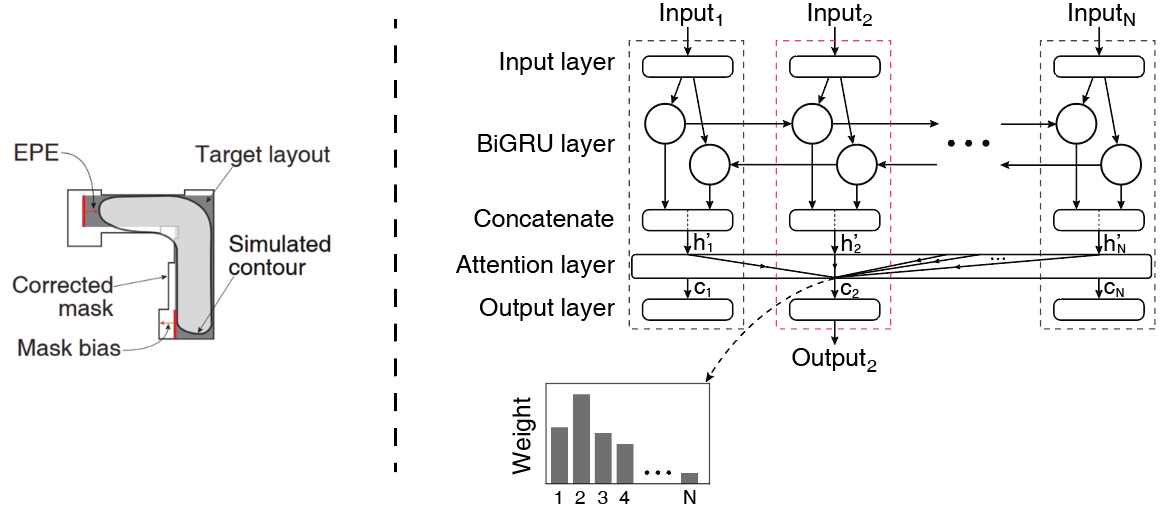

A recurrent neural network (RNN) is employed as a machine learning model for fast optical proximity correction (OPC). An RNN consists of a number of serially connected neural network instances, each in charge of one segment. The RNN thus allows localized segments to be corrected together in one execution, which offers higher accuracy. A basic RNN is extended by introducing gated recurrent unit (GRU) cells in the recurrent hidden layers, which are connected in both the forward and backward directions; an attention layer is adopted to help the output layer predict mask bias more accurately. The proposed RNN structure is used for the OPC implementation. The choice of input features, the sampling of training data, the algorithm for mapping segments to neural network instances, and the final mask bias calculation are addressed toward an efficient implementation. Experiments demonstrate that the proposed OPC method corrects a mask layout with 36% lower EPE than a state-of-the-art OPC method based on an artificial neural network structure.
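
A minimal NumPy sketch of the described structure follows: one GRU step per segment, run forward and backward, followed by an attention layer and a linear output that yields one mask bias per segment. It is an illustration under loud assumptions, not the architecture from the work above: gate biases are omitted, the attention is a simple bilinear score with softmax, the weights are random and untrained, and the per-segment input features are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_gru(n_in, n_h):
    """Random (untrained) weights for the update, reset, and candidate gates; biases omitted."""
    return {g: rng.normal(0.0, 0.1, (n_in + n_h, n_h)) for g in ("z", "r", "n")}

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def gru_step(p, x, h):
    xh = np.concatenate([x, h])
    z = sigmoid(xh @ p["z"])                              # update gate
    r = sigmoid(xh @ p["r"])                              # reset gate
    n = np.tanh(np.concatenate([x, r * h]) @ p["n"])      # candidate state
    return (1.0 - z) * n + z * h

def run_gru(p, xs, n_h):
    """Run one GRU instance per segment, in order; returns (segments, n_h)."""
    h = np.zeros(n_h)
    out = []
    for x in xs:
        h = gru_step(p, x, h)
        out.append(h)
    return np.array(out)

def predict_bias(xs, fwd, bwd, w_att, w_out, n_h):
    hf = run_gru(fwd, xs, n_h)
    hb = run_gru(bwd, xs[::-1], n_h)[::-1]     # backward pass, re-aligned to segment order
    H = np.concatenate([hf, hb], axis=1)       # (segments, 2*n_h)
    scores = H @ w_att @ H.T                   # assumed bilinear attention scores
    scores -= scores.max(axis=1, keepdims=True)
    att = np.exp(scores)
    att /= att.sum(axis=1, keepdims=True)      # softmax over segments
    context = att @ H
    return np.concatenate([H, context], axis=1) @ w_out   # one bias per segment

n_feat, n_h = 4, 8
xs = rng.normal(size=(5, n_feat))              # hypothetical features for 5 segments
fwd, bwd = init_gru(n_feat, n_h), init_gru(n_feat, n_h)
w_att = rng.normal(0.0, 0.1, (2 * n_h, 2 * n_h))
w_out = rng.normal(0.0, 0.1, 4 * n_h)
print(predict_bias(xs, fwd, bwd, w_att, w_out, n_h))
```

Because the backward pass and the attention layer both see every segment, each predicted bias can depend on neighbouring segments on either side, which is the motivation for correcting localized segments together rather than one at a time.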

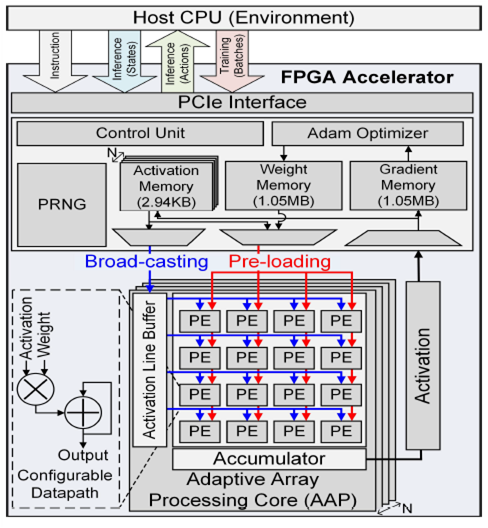

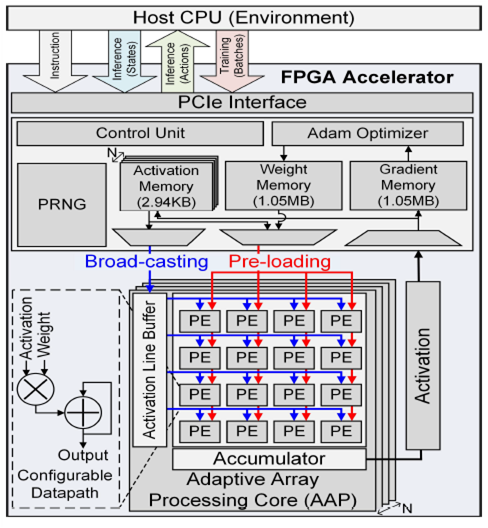

Deep reinforcement learning (DRL) is a powerful technology for decision-making problems in various application domains, such as robotics and gaming, that allows an agent to learn an action policy in an environment to maximize a cumulative reward. Unlike supervised models, which actively use data quantization, DRL still uses single-precision floating-point for training accuracy, and it suffers from computationally intensive deep neural network (DNN) computations.

In this paper, we present a deep reinforcement learning acceleration platform named FIXAR, which, for the first time, employs fixed-point data types and arithmetic units using a SW/HW co-design approach. We propose a quantization-aware training algorithm in fixed-point, which halves the data precision after a certain amount of training time without losing accuracy. We also design an FPGA accelerator that employs adaptive dataflow and parallelism to handle both inference and training operations. Its processing element has a configurable datapath to efficiently support the proposed quantization-aware training. We validate the FIXAR platform, in which the host CPU emulates the DRL environment and the FPGA accelerates the agent’s DNN operations, by running multiple benchmarks in continuous action spaces based on a recent DRL algorithm, DDPG. The FIXAR platform achieves a training throughput of 25293.3 inferences per second (IPS), which is 2.7 times higher than that of a CPU-GPU platform. In addition, its FPGA accelerator achieves 53826.8 IPS and an energy efficiency of 2638.0 IPS/W, which are 5.5 times higher and 15.4 times better, respectively, than those of a GPU. FIXAR also shows the best IPS throughput and energy efficiency among state-of-the-art FPGA-based acceleration platforms, even though it targets one of the most complex DNN models.
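
The core of fixed-point quantization-aware training is a forward pass in which values are rounded to a signed fixed-point grid, plus a schedule that switches to a narrower format partway through training. The NumPy sketch below shows both pieces. The specific formats (32 total bits with 16 fractional bits, halved to 16 and 8) and the switch step are hypothetical; the abstract states only that precision is halved after a certain amount of training, and FIXAR's actual formats and schedule may differ.

```python
import numpy as np

def to_fixed(x, total_bits, frac_bits):
    """Round to a signed fixed-point grid with saturation; returns the dequantized value."""
    scale = 2.0 ** frac_bits
    qmin = -(2 ** (total_bits - 1))
    qmax = 2 ** (total_bits - 1) - 1
    q = np.clip(np.round(np.asarray(x, dtype=float) * scale), qmin, qmax)
    return q / scale

def precision_schedule(step, switch_step, full=(32, 16), half=(16, 8)):
    """Hypothetical schedule: (total_bits, frac_bits) before and after switch_step."""
    return full if step < switch_step else half

def quantized_linear(x, W, bits):
    """Forward pass with weights, activations, and output all rounded to fixed point."""
    total, frac = bits
    xq, Wq = to_fixed(x, total, frac), to_fixed(W, total, frac)
    return to_fixed(Wq @ xq, total, frac)
```

For instance, `to_fixed(0.3, 16, 8)` gives 77/256 = 0.30078125, and values beyond the representable range saturate at the largest or smallest code instead of wrapping. Training in the quantized forward pass lets the network adapt to the rounding error before the narrower format takes over, which is what allows the precision drop without an accuracy loss.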