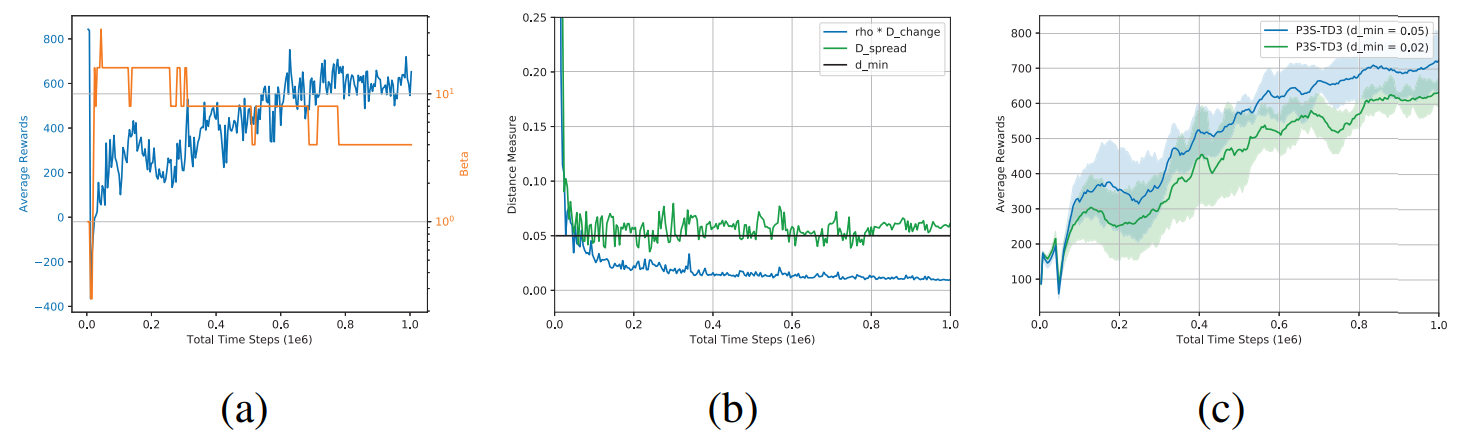

In sparse-reward reinforcement learning settings, extrinsic rewards from the environment are sparsely given to the agent. In this work, we consider intrinsic reward generation based on model prediction error. In typical model-prediction-error-based intrinsic reward generation, the agent has a learning model for the target value or distribution. Then, the intrinsic reward is designed as the error between the model prediction output and the target value or distribution, based on the fact that for less- or un-visited state-action pairs, the learned model yields larger prediction errors. This paper generalizes this model-prediction-error-based intrinsic reward generation method to the case of multiple prediction models. We propose a new adaptive fusion method relevant to the multiple-model case, which learns optimal prediction-error fusion across the learning phase to enhance the overall learning performance. Numerical results show that for representative locomotion tasks, the proposed intrinsic reward generation method outperforms most of the previous methods, and the gain is significant in some tasks.
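The idea of fusing $K$ prediction errors into one intrinsic reward can be illustrated with a minimal sketch. The abstract does not state the fusion rule, so the softmax-weighted average below is only an assumption, and the names `fusion_logits`, `fused_intrinsic_reward`, `total_reward`, and `scale` are hypothetical. In the proposed method the fusion is learned during training; here the logits are simply passed in.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fused_intrinsic_reward(prediction_errors, fusion_logits):
    """Combine K model prediction errors into one intrinsic reward.

    Assumption: the fusion is a convex combination whose weights come
    from a softmax over adjustable logits (one logit per model).
    """
    weights = softmax(fusion_logits)
    return sum(w * e for w, e in zip(weights, prediction_errors))


def total_reward(extrinsic, prediction_errors, fusion_logits, scale=0.1):
    """Extrinsic reward plus a scaled intrinsic bonus (scale is hypothetical)."""
    return extrinsic + scale * fused_intrinsic_reward(prediction_errors, fusion_logits)
```

With equal logits the fused reward is the plain average of the $K$ errors; shifting a logit upward moves the reward toward that model's error.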

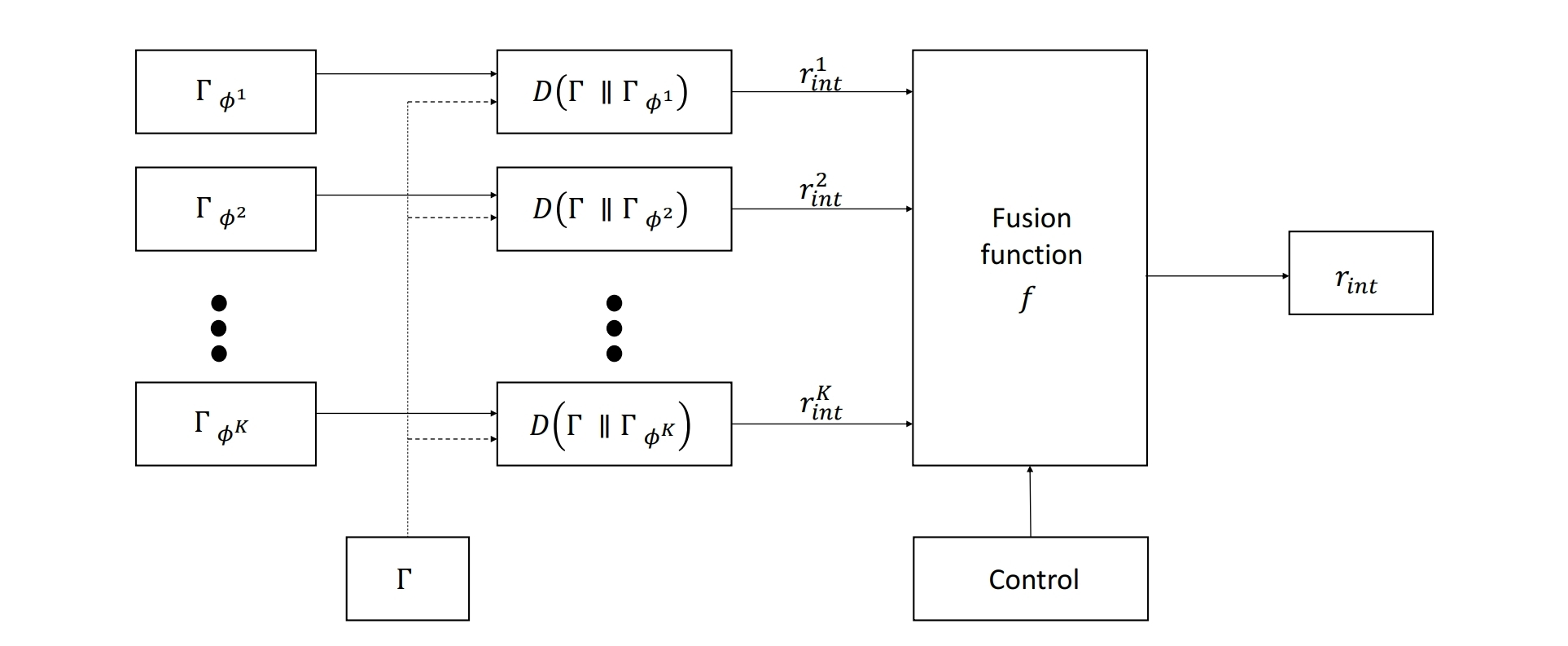

Fig. 1. Adaptive fusion of $K$ prediction errors from the multiple models.

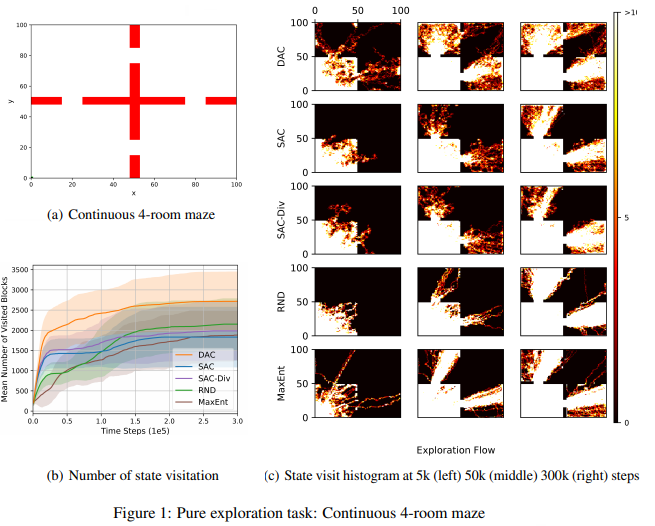

Fig. 2. Mean number of visited states in the continuous 4-room maze environment over ten random seeds (the blue curves represent the performance of the proposed method).

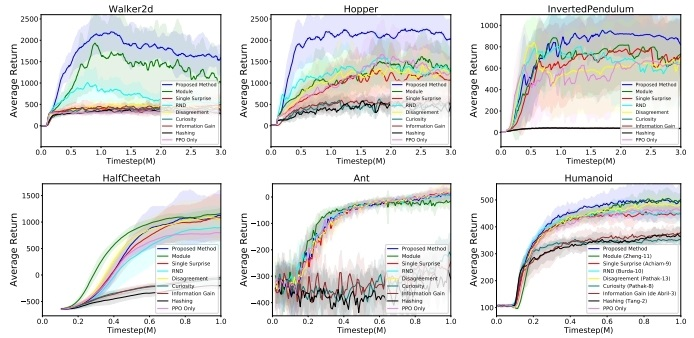

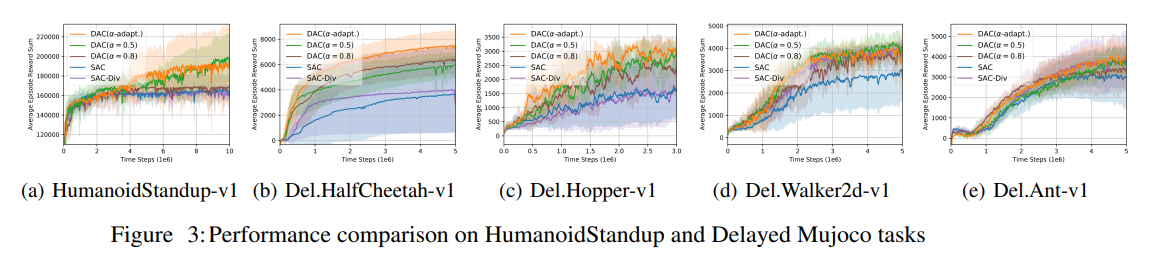

Fig. 3. Performance comparison in delayed MuJoCo environments over ten random seeds (the blue curves represent the performance of the proposed method).

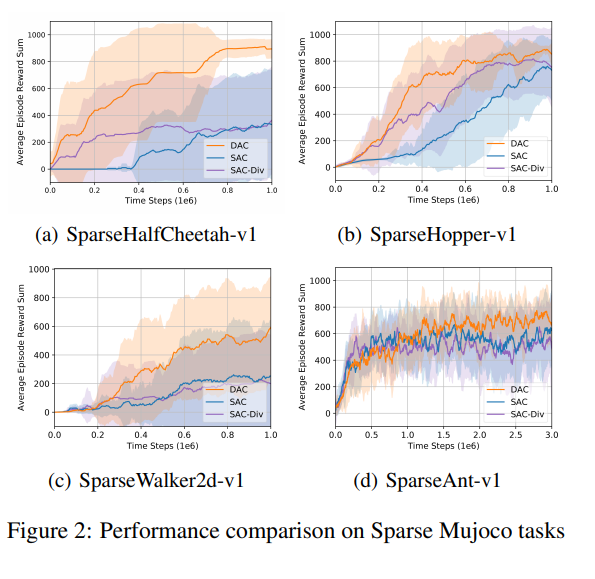

In this paper, sample-aware policy entropy regularization is proposed to enhance the conventional policy entropy regularization for better exploration. Exploiting the sample distribution obtainable from the replay buffer, the proposed sample-aware entropy regularization maximizes the entropy of the weighted sum of the policy action distribution and the sample action distribution from the replay buffer for sample-efficient exploration. A practical algorithm named diversity actor-critic (DAC) is developed by applying policy iteration to the objective function with the proposed sample-aware entropy regularization. Numerical results show that DAC significantly outperforms existing recent algorithms for reinforcement learning.
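A small sketch may help show how the sample-aware entropy differs from the plain policy entropy. It assumes a discrete action space and a fixed mixing weight `alpha`; both are simplifications for illustration, and the function names are hypothetical rather than taken from DAC.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(x * math.log(x) for x in p if x > 0.0)


def mixture(policy, sample, alpha):
    """Weighted sum of the policy and replay-buffer action distributions."""
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(policy, sample)]


def sample_aware_entropy(policy, sample, alpha):
    """Entropy of the mixture, i.e. the quantity to be maximized."""
    return entropy(mixture(policy, sample, alpha))


# Hypothetical example: the buffer has mostly seen action 0.
buffer_dist = [0.8, 0.1, 0.1]
uniform_policy = [1.0 / 3.0] * 3
avoiding_policy = [0.0, 0.5, 0.5]  # favours the rarely sampled actions

# Plain entropy prefers the uniform policy ...
plain_prefers_uniform = entropy(uniform_policy) > entropy(avoiding_policy)
# ... while the sample-aware entropy prefers the policy that fills the gaps.
aware_prefers_avoiding = (
    sample_aware_entropy(avoiding_policy, buffer_dist, 0.5)
    > sample_aware_entropy(uniform_policy, buffer_dist, 0.5)
)
```

In this toy case the regularizer rewards actions that are under-represented in the buffer, which is the intuition behind the sample-efficient exploration described above.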

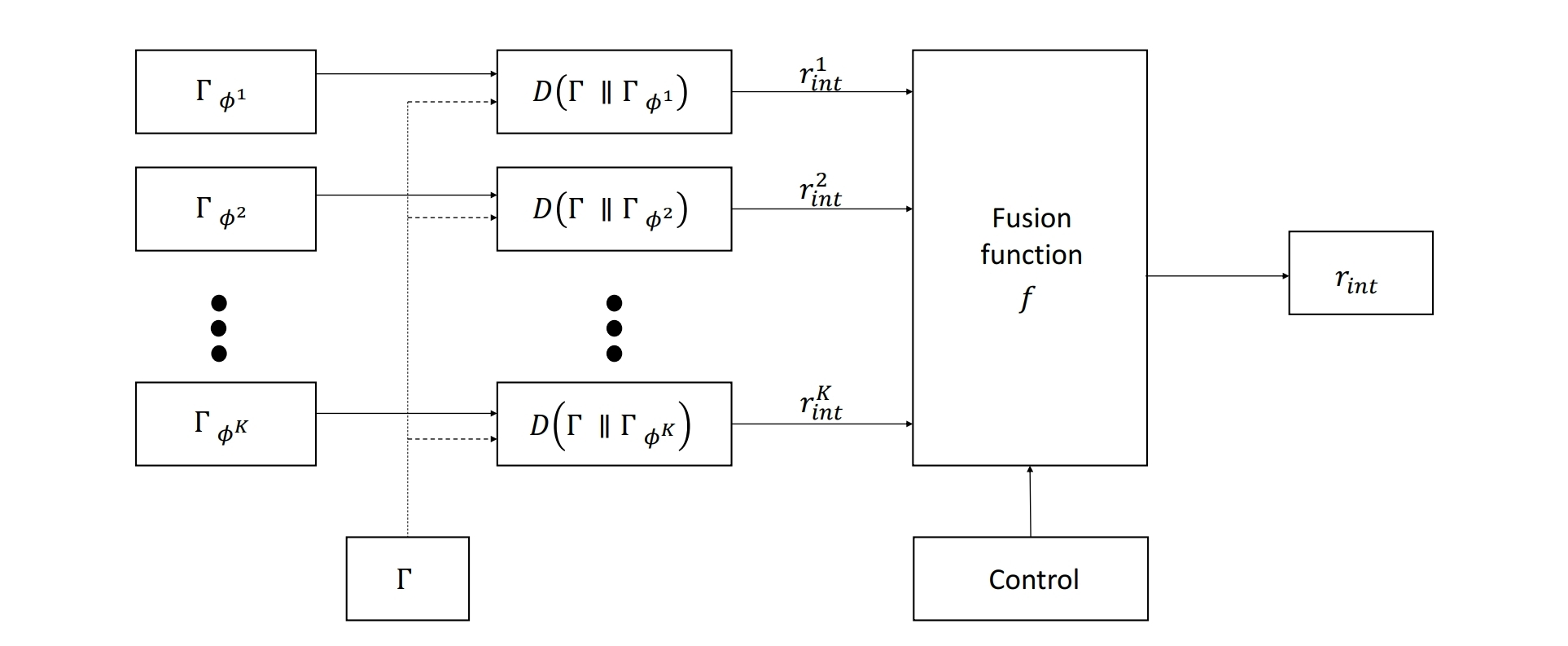

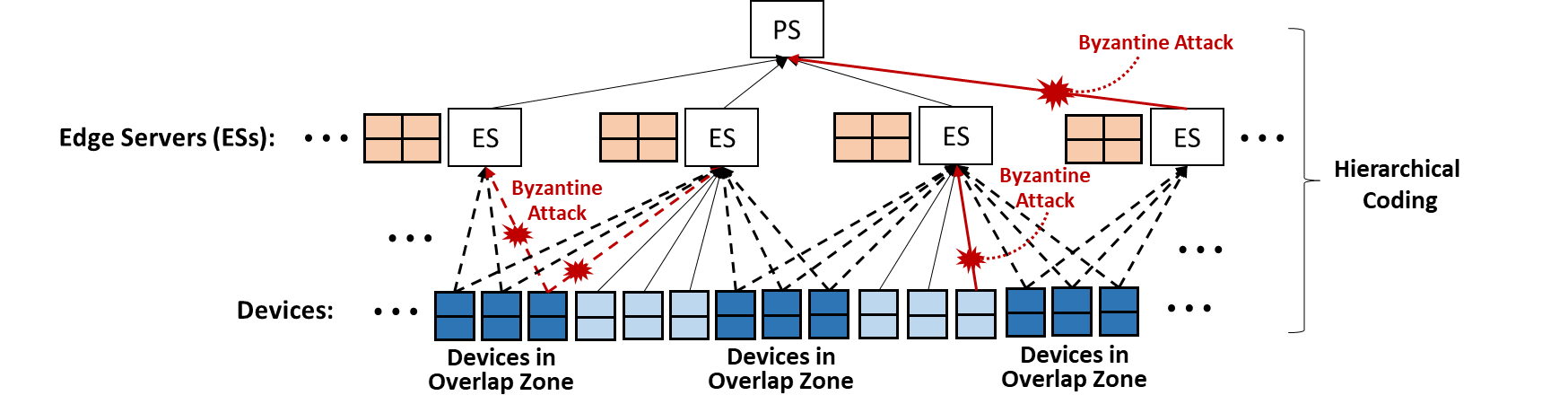

The recent proliferation of mobile devices and edge servers (ESs, e.g., small base stations) strongly motivates distributed learning at the wireless edge. In this paper, we propose a fast and secure distributed learning framework that utilizes computing resources at edge servers as well as distributed computing devices in tiered wireless edge networks. Our key ideas are to utilize 1) two-tier computing (leveraging the computing power of both ESs and devices), 2) hierarchical coding (allowing redundant computation at the nodes to combat Byzantine attacks at both tiers), and 3) the broadcast nature of wireless devices (located in the overlapping cell region). We first derive a fundamental lower bound on the computational load required to perfectly tolerate Byzantine attacks at both tiers. We then propose TiBroco, a hierarchical coding framework that achieves this theoretical minimum computational load. Experimental results show that TiBroco enables fast and secure distributed learning in practical tiered wireless edge networks plagued by Byzantine adversaries.

Authors: Tomer Raviv, Sangwoo Park, Nir Shlezinger, Osvaldo Simeone, Yonina C Eldar, Joonhyuk Kang

Conference: 2021 IEEE International Conference on Communications Workshops (ICC Workshops) – Emerging6G-Com

Abstract: Meta-learning reduces communication overhead by learning a good inductive bias across related tasks, while model-based learning reduces it by building on established communication-theory-based algorithms such as the Viterbi algorithm. We combine these two approaches in Meta-ViterbiNet to reduce communication overhead further.

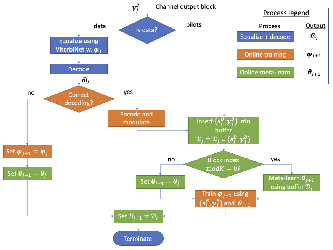

Fig. 5: Illustration of the operation of Meta-ViterbiNet

Authors: Osvaldo Simeone, Sangwoo Park, Joonhyuk Kang

Conference: 2020 2nd 6G Wireless Summit (6G SUMMIT)

Abstract: We emphasise the usefulness of meta-learning for saving communication resources in communication systems. At this summit, we lay out the reasons why we believe meta-learning will be a key ingredient of future (6G) communication systems.

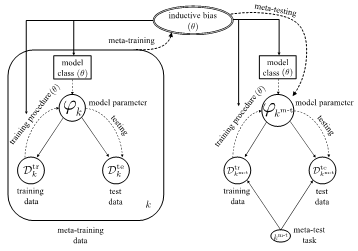

Fig. 4: Overall description of meta-learning. Based on data from multiple meta-training tasks (left part), inductive bias, or model class, is determined and applied to train a new meta-test task (right part).

Authors: Sangwoo Park, Osvaldo Simeone, Joonhyuk Kang

Conference: IEEE International Workshop on Signal Processing Advances in Wireless Communications (SPAWC) 2020

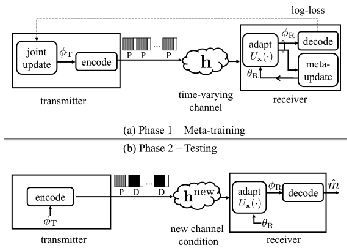

Abstract: We propose a coherent AI-based end-to-end communication system trained via online meta-learning without a channel model. Specifically, we consider a single, jointly trained transmitter with a meta-learned receiver that quickly adapts to any new channel using few pilots, a scheme we call online hybrid joint and meta-training.

Fig. 3: (a) The system first carries out online (meta-)training by transmitting multiple pilot packets (“P”) over time-varying channel conditions and by leveraging a feedback link; (b) Then, the system is tested on new channel conditions in the testing phase, which consists of both pilot and data (“D”) packets. The feedback link is not available for testing.

Authors: Sangwoo Park, Osvaldo Simeone, Joonhyuk Kang

Conference: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2020

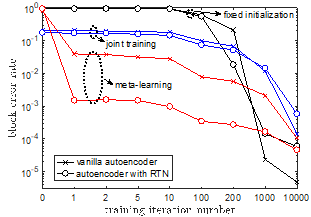

Abstract: We propose a coherent AI-based end-to-end communication system trained via meta-learning when a channel model is available. With only a few training iterations on a new channel, the proposed meta-learning-based autoencoder finds a coherent solution with reduced training complexity.

Fig. 2: Block error rate over iteration number for training on the new channel (4 bits, 4 complex channel uses, Rayleigh block fading channel model with 3 taps, 16 messages per iteration).

Authors: Sangwoo Park, Hyeryung Jang, Osvaldo Simeone, Joonhyuk Kang

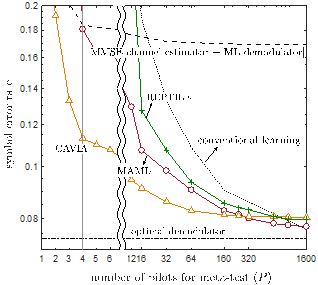

Journal: IEEE Transactions on Signal Processing (publication date: Dec. 2020)

Abstract: We propose a meta-learning-aided AI demodulator that outperforms conventional communication-theory-based demodulators, especially for IoT transmitters with hardware imperfections. Unlike conventional AI demodulators, which require a large amount of pilot data, our approach significantly reduces pilot overhead (e.g., 4 pilots for 16-QAM) via meta-learning. Since meta-learning requires an additional offline phase for pilot data collection and training, we also introduce online meta-learning, which alleviates the need for this additional phase.

Fig. 1: Symbol error rate with respect to the number of pilots (used during meta-testing) for offline meta-learning with 16-QAM, Rayleigh fading, and I/Q imbalance for 1,000 meta-training devices. The symbol error is averaged over 10,000 data symbols and 100 meta-test devices.

Title: Communication in Multi-Agent Reinforcement Learning: Intention Sharing

Authors: Woojun Kim, Jongeui Park and Youngchul Sung

To be presented at the International Conference on Learning Representations (ICLR) 2021

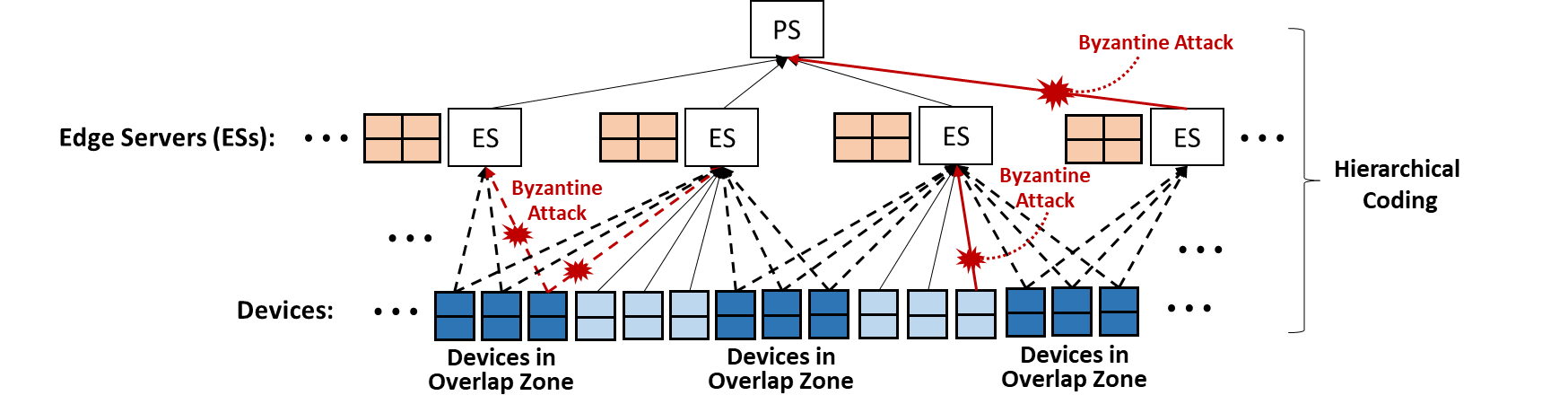

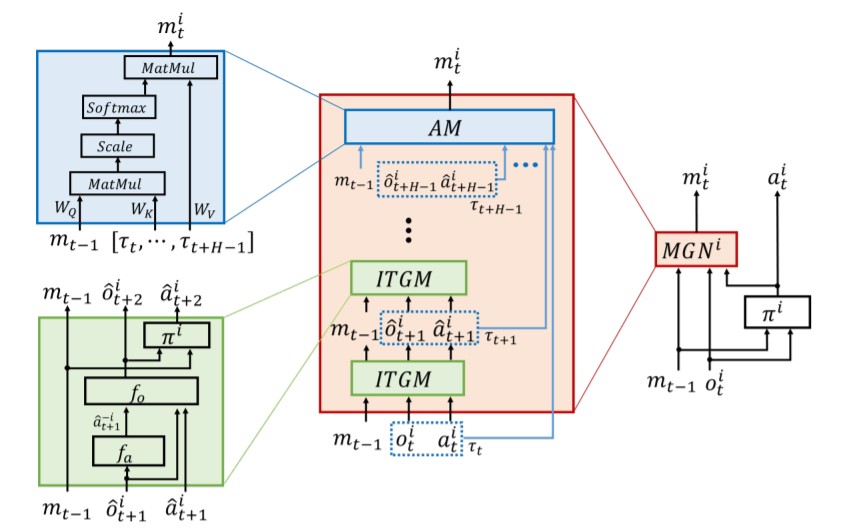

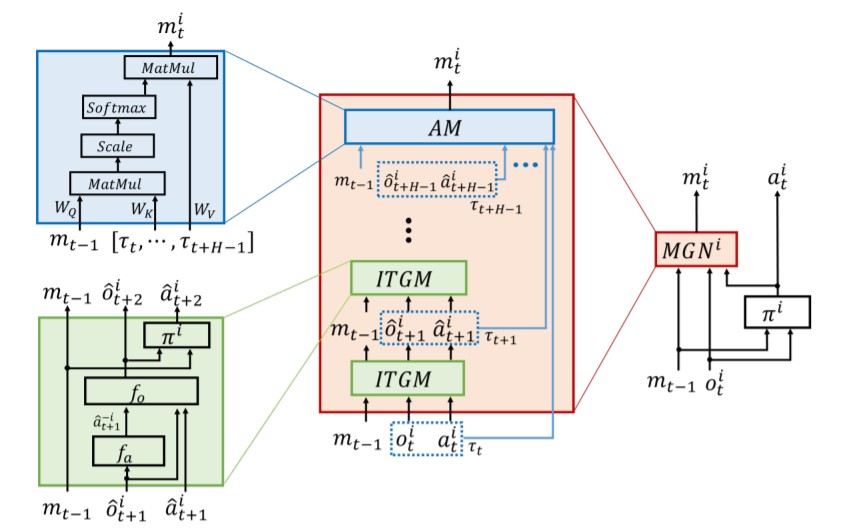

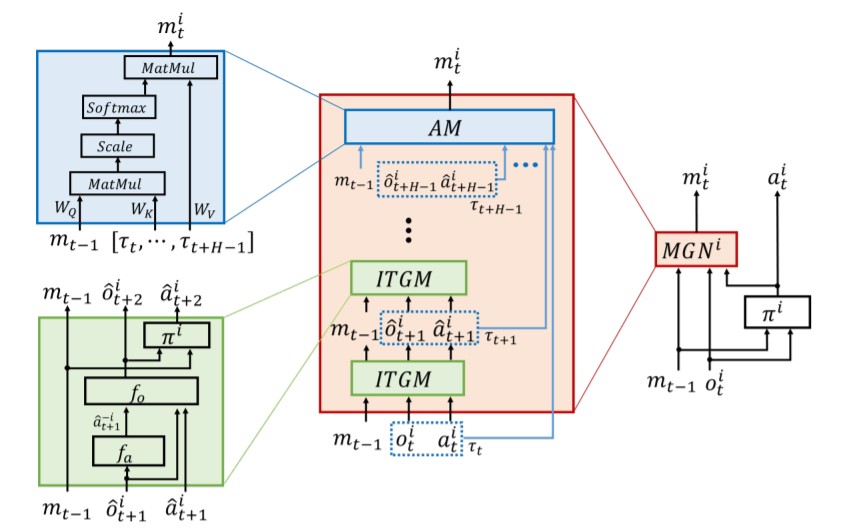

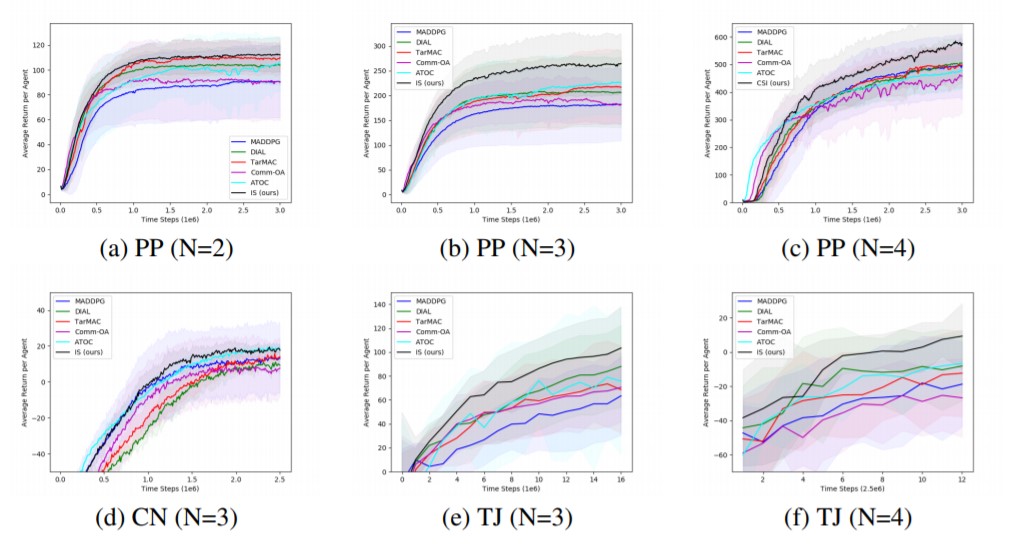

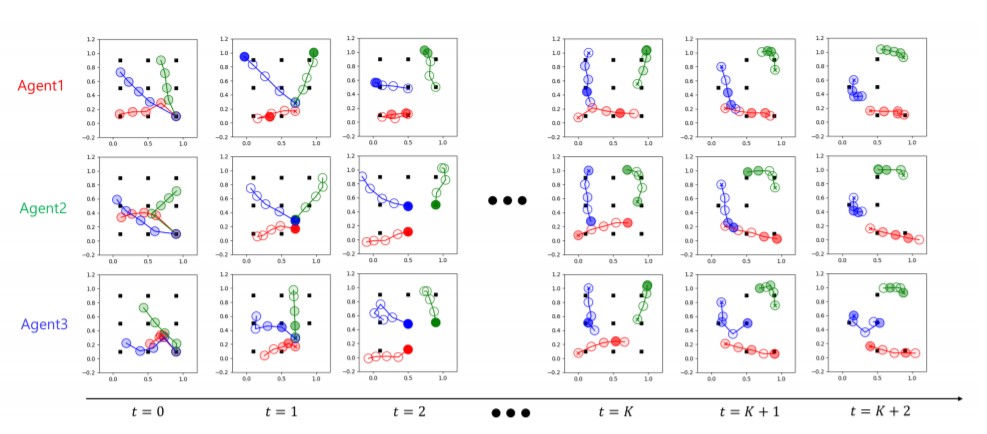

Communication is one of the core components for learning coordinated behavior in multi-agent systems. In this work, W. Kim et al. proposed a new communication scheme named Intention Sharing (IS) for multi-agent reinforcement learning in order to enhance the coordination among agents. In the proposed scheme, each agent generates an imagined trajectory by modeling the environment dynamics and other agents' actions. The imagined trajectory is a simulated future trajectory of each agent based on the learned model of the environment dynamics and other agents, and it represents each agent's future action plan. Each agent compresses this imagined trajectory into an intention message for communication. The compression applies an attention mechanism that learns the relative importance of the components of the imagined trajectory based on the messages received from other agents. Numerical results show that the proposed IS scheme significantly outperforms other communication schemes in multi-agent reinforcement learning.
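The compression step can be sketched as attention-weighted pooling over the steps of an imagined trajectory. This is a minimal illustration under stated assumptions, not the architecture of the IS paper: scaled dot-product attention is assumed, the query is taken to be a vector already derived from received messages, and `intention_message` is a hypothetical name.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def intention_message(trajectory, query):
    """Compress an imagined trajectory into a single message vector.

    trajectory: list of H feature vectors, one per imagined future step.
    query: vector assumed to summarize messages received from other agents.
    Returns the message and the attention weight given to each step.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, step) / scale for step in trajectory])
    dim = len(trajectory[0])
    message = [
        sum(w * step[d] for w, step in zip(weights, trajectory))
        for d in range(dim)
    ]
    return message, weights
```

The returned weights play the role of the per-step attention weights: a step whose features align with the query contributes more to the message.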

Fig. 1. The overall structure of the proposed IS scheme from the perspective of Agent i

Fig. 2. Performance: MADDPG (blue), DIAL (green), TarMAC (red), Comm-OA (purple), ATOC (cyan) and the proposed IS method (black). (PP: Predator-and-Prey, CN: Cooperative Navigation, TJ: Traffic Junction)

Fig. 3. Imagined trajectories and attention weights of each agent on PP (N=3): 1st row – agent1 (red), 2nd row – agent2 (green), and 3rd row – agent3 (blue). Black squares, circle inside the times icon, and other circles denote the prey, current position, and estimated future positions, respectively. The brightness of the circle is proportional to the attention weight.

Title: Population-Guided Parallel Policy Search for Reinforcement Learning

Authors: Whiyoung Jung, Giseung Park and Youngchul Sung

Presented at the International Conference on Learning Representations (ICLR) 2020

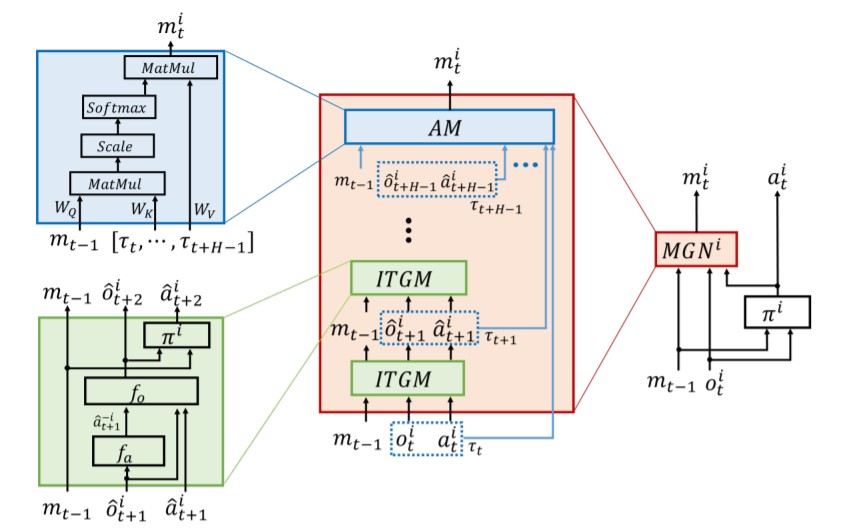

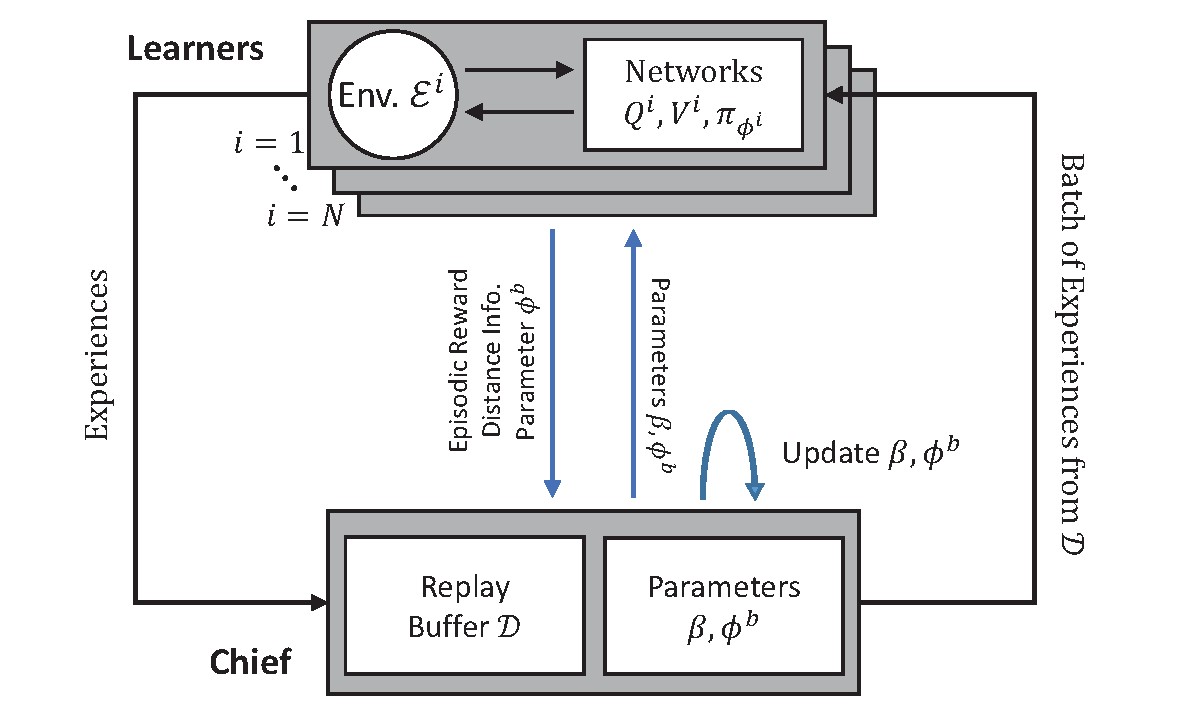

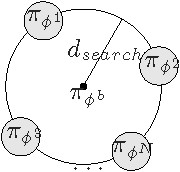

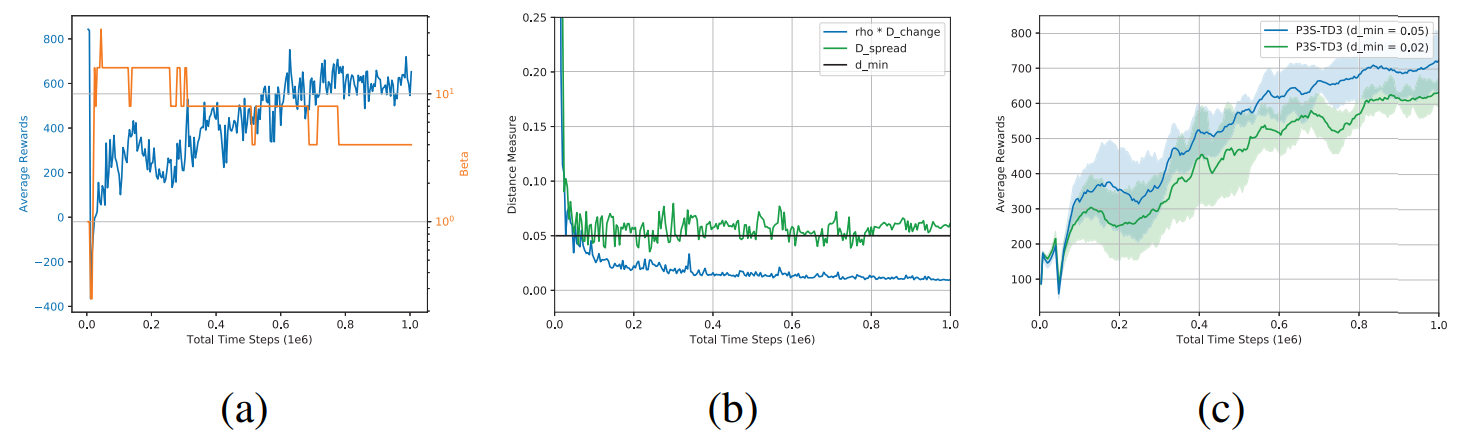

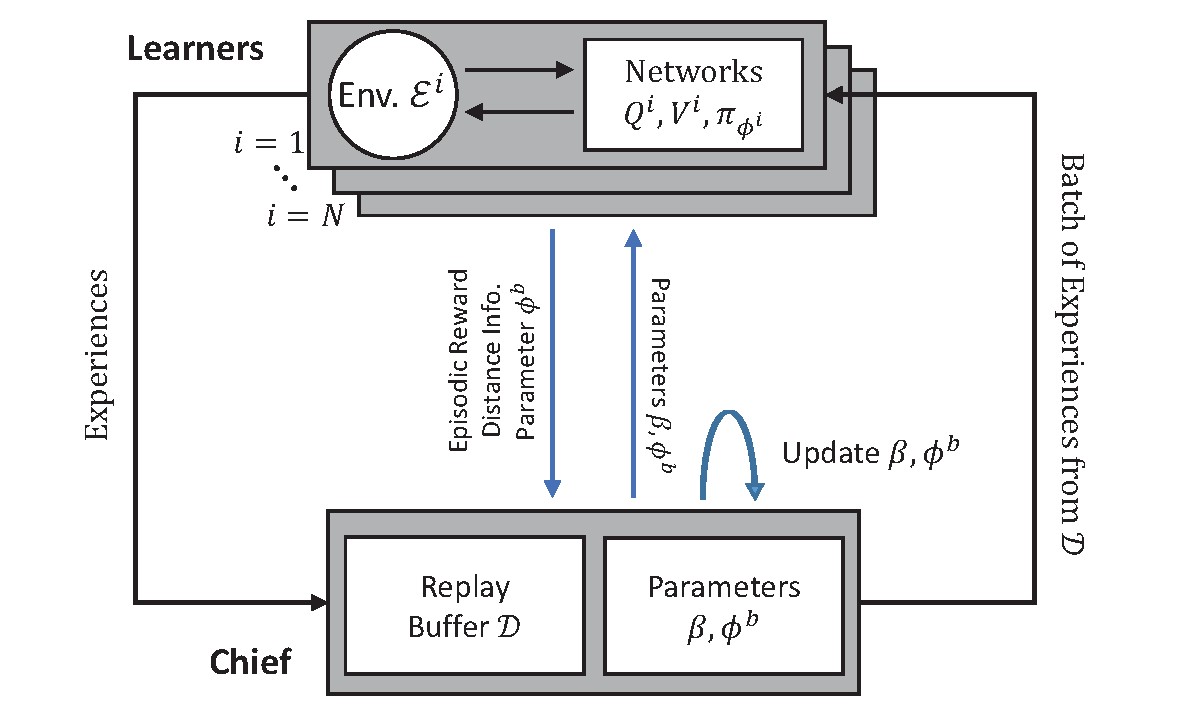

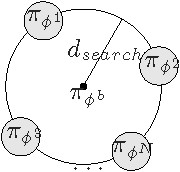

In this work, a new population-guided parallel learning scheme is proposed to enhance the performance of off-policy reinforcement learning (RL). In the proposed scheme, multiple identical learners with their own value functions and policies share a common experience replay buffer and search for a good policy in collaboration, guided by the best policy information. The key point is that the information of the best policy is fused in a soft manner by constructing an augmented loss function for the policy update, which enlarges the overall search region covered by the multiple learners. The guidance by the previous best policy and the enlarged search range enable faster and better policy search. Monotone improvement of the expected cumulative return by the proposed scheme is proved theoretically. Working algorithms are constructed by applying the proposed scheme to the twin delayed deep deterministic (TD3) policy gradient algorithm. Numerical results show that the constructed algorithm outperforms most of the current state-of-the-art RL algorithms, and the gain is significant in sparse-reward environments.
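The soft fusion of the best policy's information can be sketched as a penalty added to each learner's policy loss. The specific form below is an assumption for illustration: a mean squared action distance weighted by `beta`, with the best learner itself left unpenalized. The function names are hypothetical, and the adaptation of `beta` is not shown.

```python
def select_best(recent_returns):
    """Index of the learner with the highest recent return."""
    return max(range(len(recent_returns)), key=recent_returns.__getitem__)


def mean_squared_distance(actions_a, actions_b):
    """Average squared Euclidean distance between two batches of actions."""
    total = 0.0
    for a, b in zip(actions_a, actions_b):
        total += sum((x - y) ** 2 for x, y in zip(a, b))
    return total / len(actions_a)


def augmented_policy_loss(base_loss, learner_actions, best_actions, beta, is_best):
    """Base policy loss plus a soft pull toward the best learner's actions.

    Assumption: the best learner keeps its original loss, and every other
    learner pays beta times its action distance from the best policy.
    """
    if is_best:
        return base_loss
    return base_loss + beta * mean_squared_distance(learner_actions, best_actions)
```

A small `beta` lets learners spread out and cover a wider search region, while a large `beta` pulls them toward the current best policy.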

Fig. 1. The overall proposed structure (P3S).

Fig. 2. The conceptual search coverage in the policy space by parallel learners.

Fig. 3. Performance of different parallel learning methods on MuJoCo environments (top) and on delayed MuJoCo environments (bottom).

Fig. 4. Benefits of P3S (a) Performance and beta (1 seed) with d_min = 0.05, (b) Distance measures with d_min = 0.05, and (c) Comparison with different d_min = 0.02, 0.05.
