Sihaeng Lee, Janghyeon Lee, Byungju Kim, Eojindl Yi and Junmo Kim

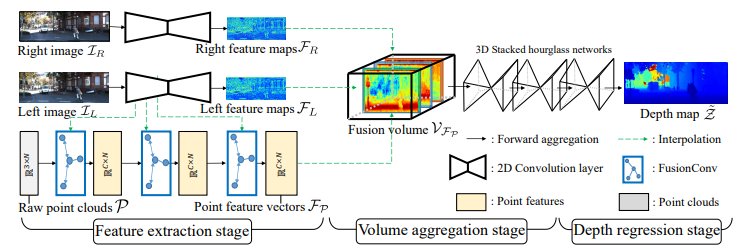

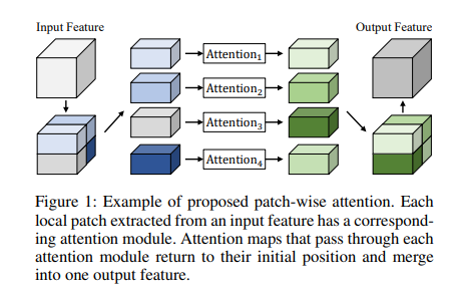

In computer vision, monocular depth estimation is the problem of obtaining a high-quality depth map from a two-dimensional image. This map provides information on three-dimensional scene geometry, which is necessary for various applications in academia and industry, such as robotics and autonomous driving. Recent studies based on convolutional neural networks achieved impressive results for this task. However, most previous studies did not consider the relationships between the neighboring pixels in a local area of the scene. To overcome the drawbacks of existing methods, we propose a patch-wise attention method for focusing on each local area. After extracting patches from an input feature map, our module generates attention maps for each local patch, using two attention modules for each patch along the channel and spatial dimensions. Subsequently, the attention maps return to their initial positions and merge into one attention feature. Our method is straightforward but effective. The experimental results on two challenging datasets, KITTI and NYU Depth V2, demonstrate that the proposed method achieves significant performance. Furthermore, our method outperforms other state-of-the-art methods on the KITTI depth estimation benchmark.
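The patch-wise flow described above (extract patches, compute an attention map per patch, put each map back in place) can be sketched roughly as follows. This is only an illustration under strong assumptions: the feature map has a single channel, so channel attention is omitted, and a per-patch softmax stands in for the learned attention modules. The function name `patchwise_spatial_attention` is hypothetical.

```python
import math

def patchwise_spatial_attention(feature, patch):
    """Return an attention map with the same shape as `feature` (a 2D list).

    Each non-overlapping patch x patch block is normalized on its own with a
    softmax, a stand-in for a learned attention module, and the result is
    written back to the block's original position.
    """
    h, w = len(feature), len(feature[0])
    out = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            cells = [(i, j)
                     for i in range(top, min(top + patch, h))
                     for j in range(left, min(left + patch, w))]
            peak = max(feature[i][j] for i, j in cells)  # for numerical stability
            exps = {(i, j): math.exp(feature[i][j] - peak) for i, j in cells}
            total = sum(exps.values())
            for i, j in cells:
                out[i][j] = exps[(i, j)] / total
    return out
```

Because every patch is normalized independently, a strong response in one region cannot suppress the weights in another, which is the intuition behind attending to each local area separately.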

Juseung Yun, Byungjoo Kim and Junmo Kim

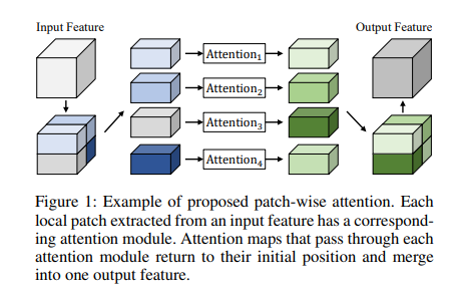

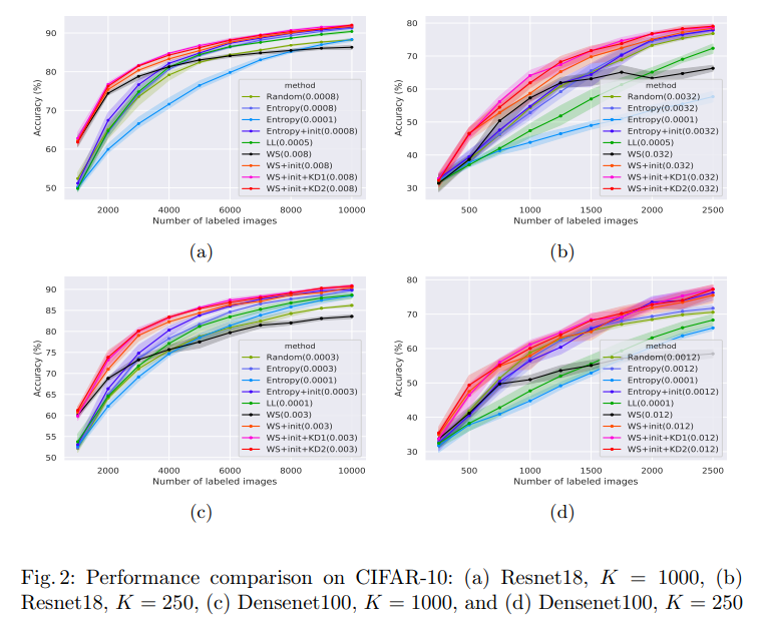

Although convolutional neural networks perform extremely well for numerous computer vision tasks, a considerably large amount of labeled data is required to ensure a good outcome. Data labeling is labor-intensive, and in some cases, the labeling budget may be limited. Active learning is a technique that can reduce the labeling required. With this technique, the neural network selects on its own the unlabeled data most helpful for learning, and then asks the human annotator for the labels. Most existing active learning methods have focused on acquisition functions for an effective selection of the informative samples. However, in this paper, we focus on the data-incremental nature of active learning, and propose a method for properly tuning the weight decay as the amount of data increases. We also demonstrate that the performance can be improved by knowledge distillation using a low-performance teacher model trained from the previous acquisition step. In addition, we present a novel perspective of the weight decay, which provides a regularization effect by limiting the number of effective parameters and channels in the convolutional filter. We validate our methods on the MNIST, CIFAR-10, and CIFAR-100 datasets using convolutional neural networks of various sizes.
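As a rough illustration of the distillation step, the sketch below uses the standard temperature-scaled distillation loss, with the teacher standing for the model trained at the previous acquisition step. The abstract does not state the exact loss, so the formulation, the function names, and the `temperature` and `weight` parameters are all assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, weight=0.5):
    """Cross-entropy on the label plus KL divergence to the teacher's soft targets."""
    hard = -math.log(softmax(student_logits)[label])
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(t * math.log(t / s)
               for t, s in zip(p_teacher, p_student) if t > 0)
    # The temperature**2 factor keeps the soft-target gradients on a similar scale.
    return (1 - weight) * hard + weight * temperature ** 2 * soft
```

The soft term vanishes when the student already matches the teacher, so the teacher only contributes where the two models disagree.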

JuYoung Yang, Chanho Lee, Pyunghwan Ahn, Haeil Lee, Eojindl Yi and Junmo Kim

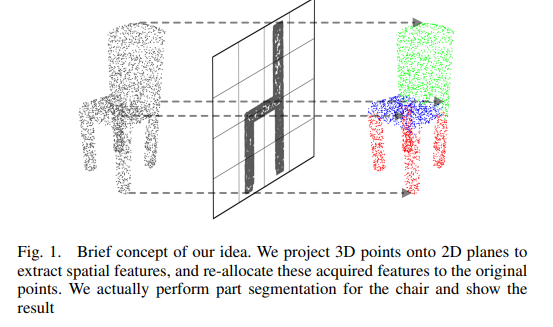

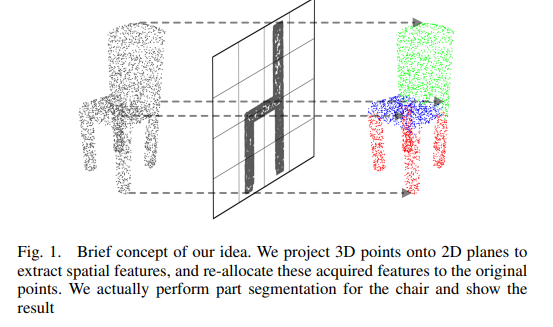

Following considerable development in 3D scanning technologies, many studies have recently proposed various approaches for 3D vision tasks, including some methods that utilize 2D convolutional neural networks (CNNs). However, even though 2D CNNs have achieved high performance in many 2D vision tasks, existing works have not effectively applied them to 3D vision tasks. In particular, segmentation has not been well studied because of the difficulty of dense prediction for each point, which requires rich feature representation. In this paper, we propose a simple and efficient architecture named point projection and backprojection network (PBP-Net), which leverages 2D CNNs for 3D point cloud segmentation. Three modules are introduced, which respectively project the 3D point cloud onto 2D planes, extract features using a 2D CNN backbone, and back-project the features onto the original 3D point cloud. To demonstrate effective 3D feature extraction using 2D CNNs, we perform various experiments, including comparisons with recent methods. We analyze the proposed modules through ablation studies and perform experiments on object part segmentation (ShapeNetPart dataset) and indoor scene semantic segmentation (S3DIS dataset). The experimental results show that proposed PBP-Net achieves comp
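The project, extract, back-project pipeline can be pictured with the minimal sketch below. It is not the PBP-Net implementation: it uses a single projection plane (xy), assumes point coordinates are already normalized to [0, 1), and replaces the 2D CNN backbone with a trivial per-pixel point count. All function names are hypothetical.

```python
def project(points, grid_size):
    """Map (x, y, z) points in [0, 1) to (u, v) pixel indices on the xy-plane."""
    return [(min(int(x * grid_size), grid_size - 1),
             min(int(y * grid_size), grid_size - 1))
            for x, y, _z in points]

def occupancy_image(pixels, grid_size):
    """Stand-in for the 2D CNN: a per-pixel count of projected points."""
    image = [[0] * grid_size for _ in range(grid_size)]
    for u, v in pixels:
        image[v][u] += 1
    return image

def back_project(feature_image, pixels):
    """Give each 3D point the 2D feature stored at its projected pixel."""
    return [feature_image[v][u] for u, v in pixels]
```

Keeping the pixel index of every point is what makes the back-projection a simple lookup, so each point ends up with its own feature for dense per-point prediction.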

Jinhyung Kim, Seunghwan Cha, Dongyoon Wee, Soonmin Bae and Junmo Kim

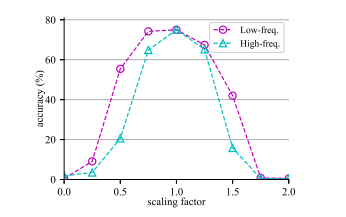

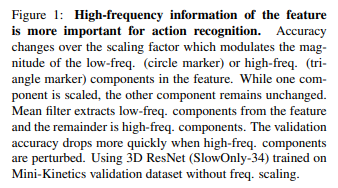

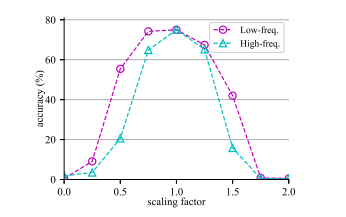

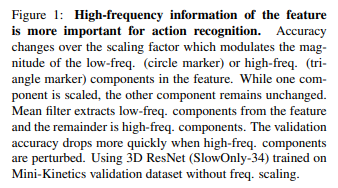

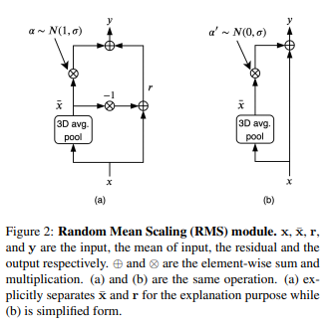

Deep neural networks for video action recognition frequently require 3D convolutional filters and often encounter overfitting due to a larger number of parameters. In this paper, we propose Random Mean Scaling (RMS), a simple and effective regularization method, to relieve the overfitting problem in 3D residual networks. The key idea of RMS is to randomly vary the magnitude of low-frequency components of the feature to regularize the model. The low-frequency component can be derived by a spatio-temporal mean on the local patch of a feature. We show that selective regularization on this locally smoothed feature makes a model handle the low-frequency and high-frequency components distinctly, resulting in performance improvement. RMS can enhance a model with little additional computation only during training, similar to other regularization methods. RMS can also be incorporated into a typical training process without any bells and whistles. Experimental results show the improvement in generalization performance on popular action recognition datasets, demonstrating the effectiveness of RMS as a regularization technique compared to other state-of-the-art regularization methods.
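A minimal sketch of the idea, reduced to a 1D feature for readability: the low-frequency part is a local mean, and only that part is rescaled by a random factor during training. The uniform sampling range, the `strength` parameter, and the function names are assumptions; the abstract does not specify how the scale is drawn.

```python
import random

def local_mean(x, k):
    """Moving average with window k; edges average over the available neighbours."""
    half = k // 2
    out = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

def random_mean_scaling(x, k=3, strength=0.5, training=True, rng=random):
    """Randomly rescale the locally smoothed part of x and keep the rest unchanged."""
    if not training:
        return list(x)  # identity at inference time
    low = local_mean(x, k)
    alpha = rng.uniform(1.0 - strength, 1.0 + strength)
    # x = low + high, so the output is alpha * low + high.
    return [alpha * l + (v - l) for v, l in zip(x, low)]
```

Since the function is the identity when `training` is false, the regularizer adds no cost at inference, in line with the abstract.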

Janghyeon Lee, Hyeong Gwon Hong, Donggyu Joo and Junmo Kim

We propose a quadratic penalty method for continual learning of neural networks that contain batch normalization (BN) layers. The Hessian of a loss function represents the curvature of the quadratic penalty function, and a Kronecker-factored approximate curvature (K-FAC) is used widely to practically compute the Hessian of a neural network. However, the approximation is not valid if there is dependence between examples, typically caused by BN layers in deep network architectures. We extend the K-FAC method so that the inter-example relations are taken into account and the Hessian of deep neural networks can be properly approximated under practical assumptions. We also propose a method of weight merging and reparameterization to properly handle statistical parameters of BN, which plays a critical role for continual learning with BN, and a method that selects hyperparameters without source task data. Our method shows better performance than baselines in the permuted MNIST task with BN layers and in sequential learning from the ImageNet classification task to fine-grained classification tasks with ResNet-50, without any explicit or implicit use of source task data for hyperparameter selection.
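For orientation, a quadratic penalty of this kind is usually written in the following generic form. Here $\theta^{*}$ denotes the parameters learned on the source task and $\lambda$ a penalty strength; both symbols are notation assumed for this note, not taken from the paper. The Kronecker factorization shown is the standard K-FAC form for a fully connected layer with input activations $a$ and pre-activation gradients $g$. It is the baseline that the abstract says breaks down under BN, not the proposed extension.

$$
\mathcal{L}(\theta) = \mathcal{L}_{\text{target}}(\theta) + \frac{\lambda}{2}\,(\theta - \theta^{*})^{\top} H\,(\theta - \theta^{*}),
\qquad
H \approx A \otimes G,\quad A = \mathbb{E}\!\left[a a^{\top}\right],\; G = \mathbb{E}\!\left[g g^{\top}\right].
$$

For one layer with weight change $\Delta W = W - W^{*}$ (column-stacked into $\theta - \theta^{*}$), the factorization lets the penalty be evaluated without forming $H$ explicitly:

$$
(\theta - \theta^{*})^{\top} (A \otimes G)\,(\theta - \theta^{*}) = \operatorname{tr}\!\left(\Delta W^{\top} G\, \Delta W\, A\right).
$$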

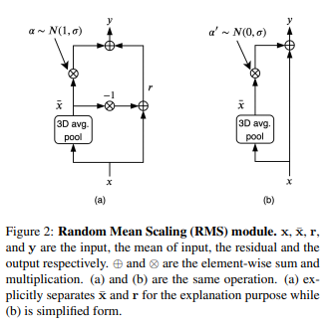

Janghyeon Lee, Donggyu Joo, Hyeong Gwon Hong and Junmo Kim

We propose a novel continual learning method called Residual Continual Learning (ResCL). Our method can prevent the catastrophic forgetting phenomenon in sequential learning of multiple tasks, without any source task information except the original network. ResCL reparameterizes network parameters by linearly combining each layer of the original network and a fine-tuned network; therefore, the size of the network does not increase at all. To apply the proposed method to general convolutional neural networks, the effects of batch normalization layers are also considered. By utilizing residual-learning-like reparameterization and a special weight decay loss, the trade-off between source and target performance is effectively controlled. The proposed method exhibits state-of-the-art performance in various continual learning scenarios.
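The reason a linear combination of layers does not enlarge the network can be seen in a small sketch: for a linear layer, mixing the outputs of the original and fine-tuned layers is the same as using one layer with mixed weights. The per-output-unit coefficients `a_src` and `a_tgt` and the function names are illustrative assumptions, not the paper's exact parameterization.

```python
def matvec(w, x):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def combine_layers(w_src, w_tgt, a_src, a_tgt):
    """Merge two layers of the same shape into one, row by row.

    Row i of the result is a_src[i] * w_src[i] + a_tgt[i] * w_tgt[i].
    """
    return [[a_s * ws + a_t * wt for ws, wt in zip(row_s, row_t)]
            for row_s, row_t, a_s, a_t in zip(w_src, w_tgt, a_src, a_tgt)]
```

After merging, only the combined weights need to be stored, which is consistent with the statement that the network size does not increase.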

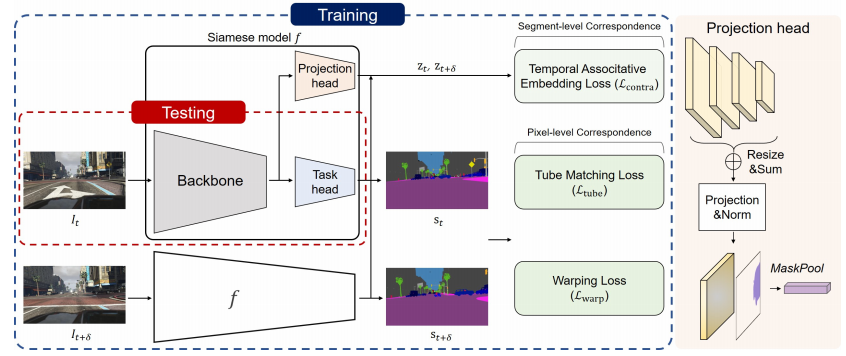

Conference/Journal, Year: CVPR 2021

Temporal correspondence – linking pixels or objects across frames – is a fundamental supervisory signal for video models. For the panoptic understanding of dynamic scenes, we further extend this concept to every segment. Specifically, we aim to learn coarse segment-level matching and fine pixel-level matching together. We implement this idea by designing two novel learning objectives. To validate our proposals, we adopt a deep siamese model and train the model to learn the temporal correspondence on two different levels (i.e., segment and pixel) along with the target task. At inference time, the model processes each frame independently without any extra computation and post-processing. We show that our per-frame inference model can achieve new state-of-the-art results on Cityscapes-VPS and VIPER datasets. Moreover, due to its high efficiency, the model runs in a fraction of time (3×) compared to the previous state-of-the-art approach.

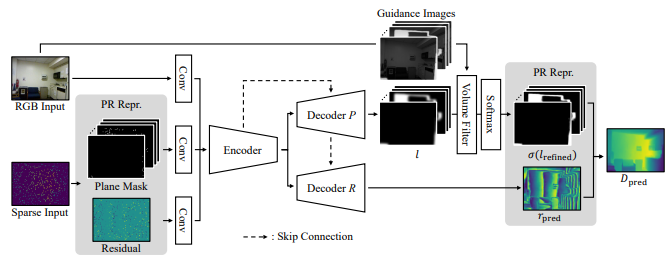

Conference/Journal, Year: CVPR 2021

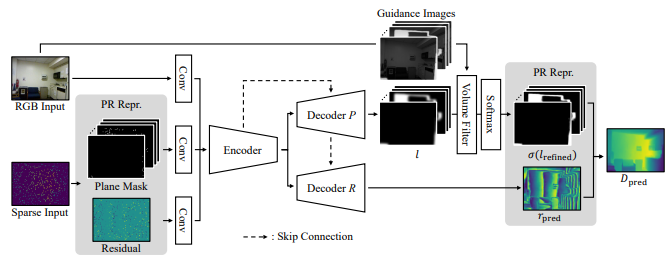

The basic framework of depth completion is to predict a pixel-wise dense depth map using very sparse input data. In this paper, we try to solve this problem in a more effective way, by reformulating the regression-based depth estimation problem into a combination of depth plane classification and residual regression. Our proposed approach is to initially densify sparse depth information by figuring out on which plane a pixel should lie among a number of discretized depth planes, and then calculate the final depth value by predicting the distance from the specified plane. This relieves the network of the burden of directly regressing the absolute depth information from scratch, and lets it obtain more accurate depth predictions with less computation power and inference time. To do so, we first introduce a novel way of interpreting depth information with the closest depth plane label p and a residual value r, which we call the Plane-Residual (PR) representation. We also propose a depth completion network utilizing the PR representation, consisting of a shared encoder and two decoders, where one classifies the pixel’s depth plane label, while the other regresses the normalized distance from the classified depth plane. By interpreting depth information in the PR representation and using our corresponding depth completion network, we were able to acquire improved depth completion performance with faster computation, compared to previous approaches.
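The PR representation can be illustrated with a short sketch that converts a depth value into a plane label p and a residual r, and back. It assumes uniformly spaced planes and a residual normalized by the plane spacing. The abstract does not specify the plane layout or the normalization, so these choices and the function names are hypothetical.

```python
def make_planes(d_min, d_max, num_planes):
    """Uniformly spaced depth planes between d_min and d_max (an assumption)."""
    step = (d_max - d_min) / (num_planes - 1)
    return [d_min + i * step for i in range(num_planes)], step

def encode_pr(depth, planes, step):
    """Return (p, r): index of the closest plane and the normalized residual."""
    p = min(range(len(planes)), key=lambda i: abs(depth - planes[i]))
    r = (depth - planes[p]) / step
    return p, r

def decode_pr(p, r, planes, step):
    """Recover the depth from the plane label and the normalized residual."""
    return planes[p] + r * step
```

With nine planes from 0 m to 80 m, a depth of 23 m maps to the plane at 20 m with a residual of about 0.3. The classifier picks the plane and the regressor only has to predict a small bounded offset.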

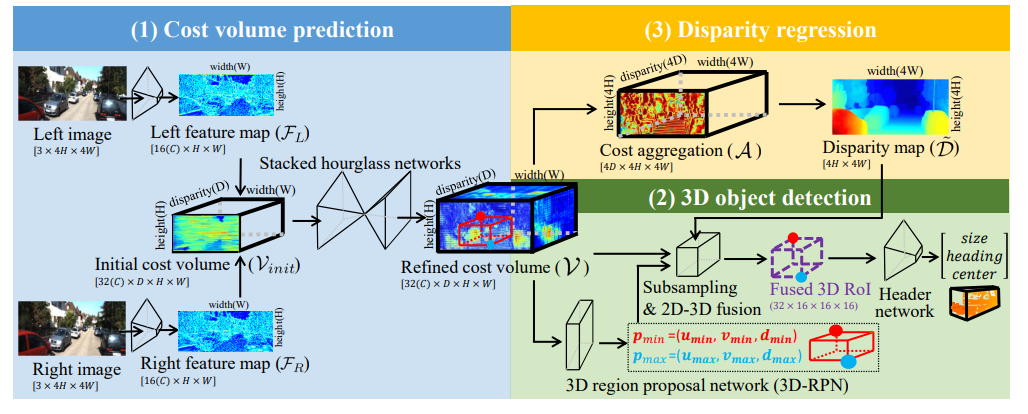

Conference/Journal, Year: ICRA 2021

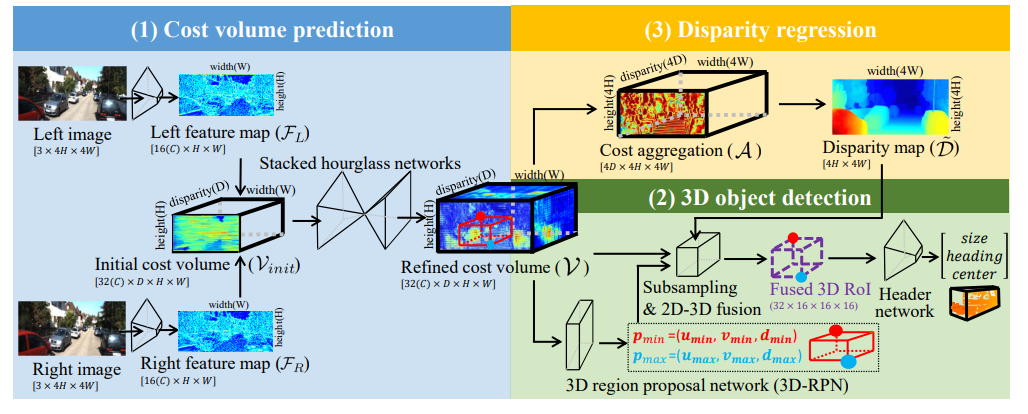

This paper presents a stereo object matching method that exploits both 2D contextual information from images and 3D object-level information. Unlike existing stereo matching methods that exclusively focus on the pixel-level correspondence between stereo images within a volumetric space (i.e., cost volume), we exploit this volumetric structure in a different manner. The cost volume explicitly encompasses 3D information along its disparity axis; it is therefore a privileged structure that can encapsulate the 3D contextual information from objects. However, exploiting it is not straightforward, since the disparity values map the 3D metric space in a non-linear fashion. Thus, we present two novel strategies to handle 3D objectness in the cost volume space: selective sampling (RoISelect) and 2D-3D fusion (fusion-by-occupancy), which allow us to seamlessly incorporate 3D object-level information and achieve accurate depth performance near the object boundary regions. Our depth estimation achieves competitive performance on the KITTI dataset and the Virtual-KITTI 2.0 dataset.
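The non-linear relation between disparity and metric depth mentioned above follows from the standard rectified-stereo formula Z = f · B / d. The sketch below applies it; the focal length and baseline values in the usage note are made-up numbers for illustration, not calibration data from the paper.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px
```

With a hypothetical focal length of 720 px and a 0.5 m baseline, disparities of 10, 20, and 30 px give depths of 36 m, 18 m, and 12 m. Equal steps along the disparity axis therefore cover unequal ranges of metric depth, which is why uniform sampling in the cost volume does not match object extents in 3D.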

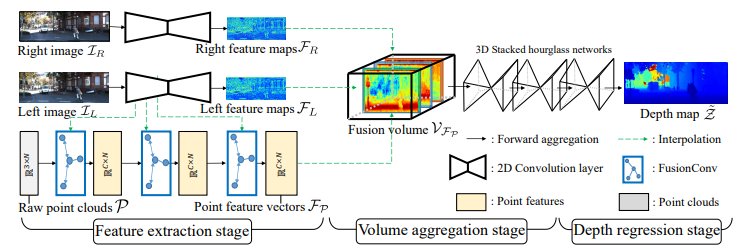

Conference/Journal, Year: RA-L 2021

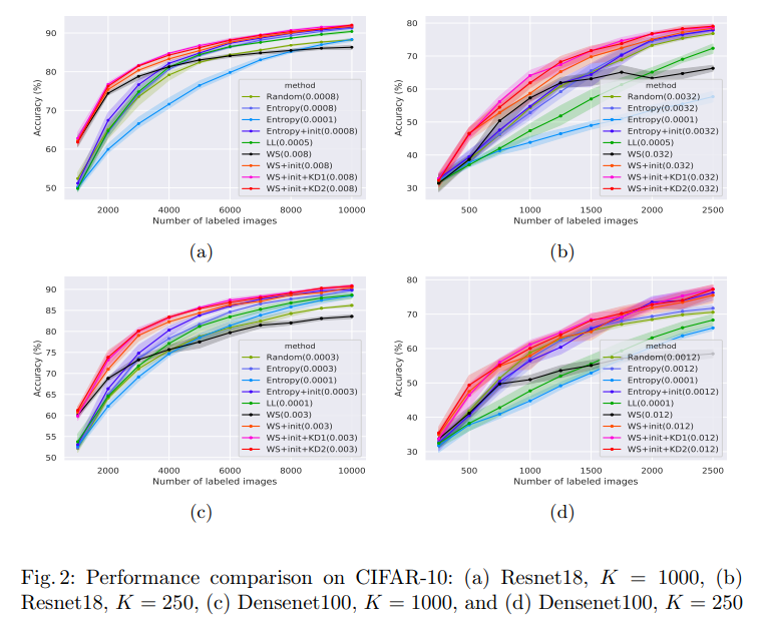

Stereo-LiDAR fusion is a promising task in that we can utilize two different types of 3D perceptions for practical usage – dense 3D information (stereo cameras) and highly accurate sparse point clouds (LiDAR). However, due to their different modalities and structures, the method of aligning sensor data is the key for successful sensor fusion. To this end, we propose a geometry-aware stereo-LiDAR fusion network for long-range depth estimation, called volumetric propagation network. The key idea of our network is to exploit sparse and accurate point clouds as a cue for guiding correspondences of stereo images in a unified 3D volume space. Unlike existing fusion strategies, we directly embed point clouds into the volume, which enables us to propagate valid information into nearby voxels in the volume, and to reduce the uncertainty of correspondences. Thus, it allows us to fuse two different input modalities seamlessly and regress a long-range depth map. Our fusion is further enhanced by a newly proposed feature extraction layer for point clouds guided by images: FusionConv. FusionConv extracts point cloud features that consider both semantic (2D image domain) and geometric (3D domain) relations and aid fusion at the volume. Our network achieves state-of-the-art performance on the KITTI and the Virtual-KITTI datasets among recent stereo-LiDAR fusion methods.